Top 7 Benefits Enabled By an On-Premises Data Warehouse

Actian Corporation

July 8, 2024

In the ever-evolving landscape of database management, with new tools and technologies constantly hitting the market, the on-premises data warehouse remains the solution of choice for many organizations. Despite the popularity of cloud-based offerings, the on-premises data warehouse offers unique advantages that meet a variety of use cases.

A modern data warehouse is a requirement for data-driven businesses. While cloud-based options seemed to be the go-to trend over the last few years, on-premises data warehouses offer essential capabilities to meet your needs. Here are seven common benefits:

- Ensure Data Security and Compliance

In industries such as finance and healthcare, data security and regulatory compliance are critical. These sectors manage sensitive information that must be protected, which is why they have strict protocols in place to make sure their data is secure.

With the ever-present risk of cyber threats, including increasingly sophisticated attacks, and stringent regulations such as the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and the Payment Card Industry Data Security Standard (PCI DSS), you face significant exposure and potential penalties for non-compliance. Relying on external cloud providers for data security can leave you vulnerable to breaches and complicate your compliance efforts.

An on-prem data warehouse gives you complete control over your data. By housing data within your own infrastructure, you can implement robust security measures tailored to your specific needs. This control makes it easier to demonstrate compliance with regulatory requirements and helps reduce the risk of data breaches.

- Deliver High Performance With Low Latency

High-performance applications and databases, including those used for real-time analytics and transactional processing, require low-latency access to data. In scenarios where speed and responsiveness are critical, cloud-based solutions may introduce network latency that can hinder performance, an overhead that on-premises deployments located close to your applications can avoid.

Latency issues can lead to slower decision-making, reduced operational efficiency, and poor user experiences. For businesses that rely on real-time insights, any delay can result in missed opportunities and diminished competitiveness. On-premises data warehouses offer the advantage of proximity.

By storing data locally, on your own premises, you can achieve near-instantaneous access to critical information. This setup is particularly advantageous for real-time analytics in which every millisecond counts. The ability to process data quickly and efficiently enhances overall performance and supports rapid decision-making.

- Customize Your Infrastructure

Standardized cloud solutions may not always align with the unique requirements of your organization. Customization and control over the infrastructure can be limited in a cloud environment, making it difficult to tailor solutions to specific business or IT needs.

Without the ability to customize, you may face data warehouse constraints that limit your operational effectiveness. Plus, a lack of flexibility can result in suboptimal performance and increased operational costs if you need to work around the limitations of cloud services. For example, an inability to fine-tune your data warehouse infrastructure to handle high-velocity data streams can lead to performance bottlenecks that slow down critical operations.

An on-premises data warehouse gives you control over your hardware and software stack. You can customize your infrastructure to meet specific performance and security requirements. This customization extends to the selection of hardware components, storage solutions, and network configurations, enabling you to fully optimize your data warehouse for unique workloads and applications.

- Manage Costs With Visibility and Predictability

Cloud services often operate on a pay-as-you-go model with unlimited scalability, which can lead to unpredictable costs. While a cloud data warehouse can certainly be cost-effective, expenses can escalate quickly with increased data volume and usage. Costs can fluctuate significantly based on how much the system is used, the amount of data transferred into and out of the cloud, the number and complexity of workloads, and other factors.

Unpredictable costs can strain budgets and make financial planning difficult, which makes the CFO’s job more challenging. On-premises data warehouses solve that problem by offering greater cost predictability.

By investing in your on-prem data warehouse upfront, you avoid the variable costs associated with cloud services. This approach leads to better budget planning and cost control, making it easier to allocate resources effectively. Over the long term, on-premises solutions can be cost-efficient with a favorable total cost of ownership and strong return on investment, especially if you have stable and predictable data usage patterns.
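To make the trade-off concrete, here is a minimal sketch of a break-even comparison between usage-based cloud charges and an upfront on-prem investment. The function names and every figure in it are hypothetical, chosen only for illustration; they are not taken from any vendor's pricing, and a real total cost of ownership model would include more factors (staffing, hardware refresh, egress fees, and so on).

```python
def cumulative_cloud_cost(monthly_usage_costs):
    """Running total of pay-as-you-go charges, month by month."""
    total = 0.0
    totals = []
    for cost in monthly_usage_costs:
        total += cost
        totals.append(total)
    return totals


def cumulative_on_prem_cost(upfront, monthly_operating, months):
    """Running total of an upfront purchase plus a flat monthly operating cost."""
    return [upfront + monthly_operating * (m + 1) for m in range(months)]


def break_even_month(cloud_totals, on_prem_totals):
    """Return the first month (1-based) in which cumulative on-prem cost
    is at or below cumulative cloud cost, or None if that never happens."""
    pairs = zip(cloud_totals, on_prem_totals)
    for month, (cloud, on_prem) in enumerate(pairs, start=1):
        if on_prem <= cloud:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical inputs: cloud spend starts at 10,000 per month and
    # grows 5% monthly; on-prem costs 200,000 upfront plus 4,000 per month.
    months = 36
    cloud_monthly = [10_000 * (1.05 ** m) for m in range(months)]
    cloud = cumulative_cloud_cost(cloud_monthly)
    on_prem = cumulative_on_prem_cost(200_000, 4_000, months)
    print("Break-even month:", break_even_month(cloud, on_prem))
```

Swapping in your own usage forecast for `cloud_monthly` shows how sensitive the break-even point is to growth in data volume, which is the variable that tends to make cloud bills hard to predict.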

- Meet Data Sovereignty Regulations

In some regions, data sovereignty laws mandate how data is collected, stored, used, and shared within specific borders. Similarly, data localization laws may require data about a region’s residents or data that was produced in a specific area to be stored inside a country’s borders. Where such laws apply, data collected in one country cannot be transferred to and stored in a data warehouse in another country.

Navigating complex data sovereignty requirements can be challenging, especially when you’re dealing with international operations. An on-premises data warehouse helps ensure compliance with local data sovereignty laws by keeping data in a physical location you control, inside the required borders.

This approach simplifies adherence to regional regulations and mitigates the risks associated with cross-border data transfers in the cloud. You can confidently operate within legal frameworks using an on-prem data warehouse, safeguarding your reputation and avoiding legal problems.

- Integrate Disparate Systems

Many organizations operate by using a mix of legacy and modern systems. Integrating these disparate technologies into a cohesive ecosystem to manage, store, and analyze data can be complex, especially when using cloud-based solutions.

Legacy systems often contain critical data and processes that are essential to daily business operations. Migrating these systems to the cloud can be risky and disruptive, potentially leading to data loss or downtime, or requiring significant recoding or refactoring.

On-premises data warehouses enable integration with legacy systems. You also have the assurance of maintaining continuity by leveraging your existing infrastructure while gradually adding, integrating, or modernizing your data management capabilities in the warehouse.

- Enable High Data Ingestion Rates

Industries such as telecommunications, manufacturing, and retail typically generate massive amounts of data at high velocities. Efficiently ingesting and processing this data in real time is crucial for maintaining operational effectiveness and gaining timely insights.

On-premises data warehouses are well suited to handle high data ingestion rates. By keeping data ingestion processes local, you can be assured that data is captured, processed, and analyzed with minimal delay. This capability is essential for industries where real-time data is critical for optimizing operations and identifying emerging trends.

Choosing the Optimal Environment for Your Data Warehouse

While cloud-based data warehouses offer many benefits, the on-premises data warehouse continues to play a vital role in addressing specific database management challenges. From ensuring data security and compliance to providing low-latency access and the ability to customize, the on-premises data warehouse remains a powerful tool to meet your organization’s data needs.

By understanding the benefits enabled by an on-premises data warehouse, you can make informed decisions about your database management strategy. Whether it’s for meeting your regulatory requirements, optimizing performance, or controlling costs, the on-premises data warehouse stands as a robust and reliable option in the diverse landscape of database management solutions.

As we noted in a previous blog, the on-prem data warehouse is not dead. At the same time, we realize the unique benefits of a cloud approach. If you want on-prem and the cloud, you can have both with a hybrid approach.

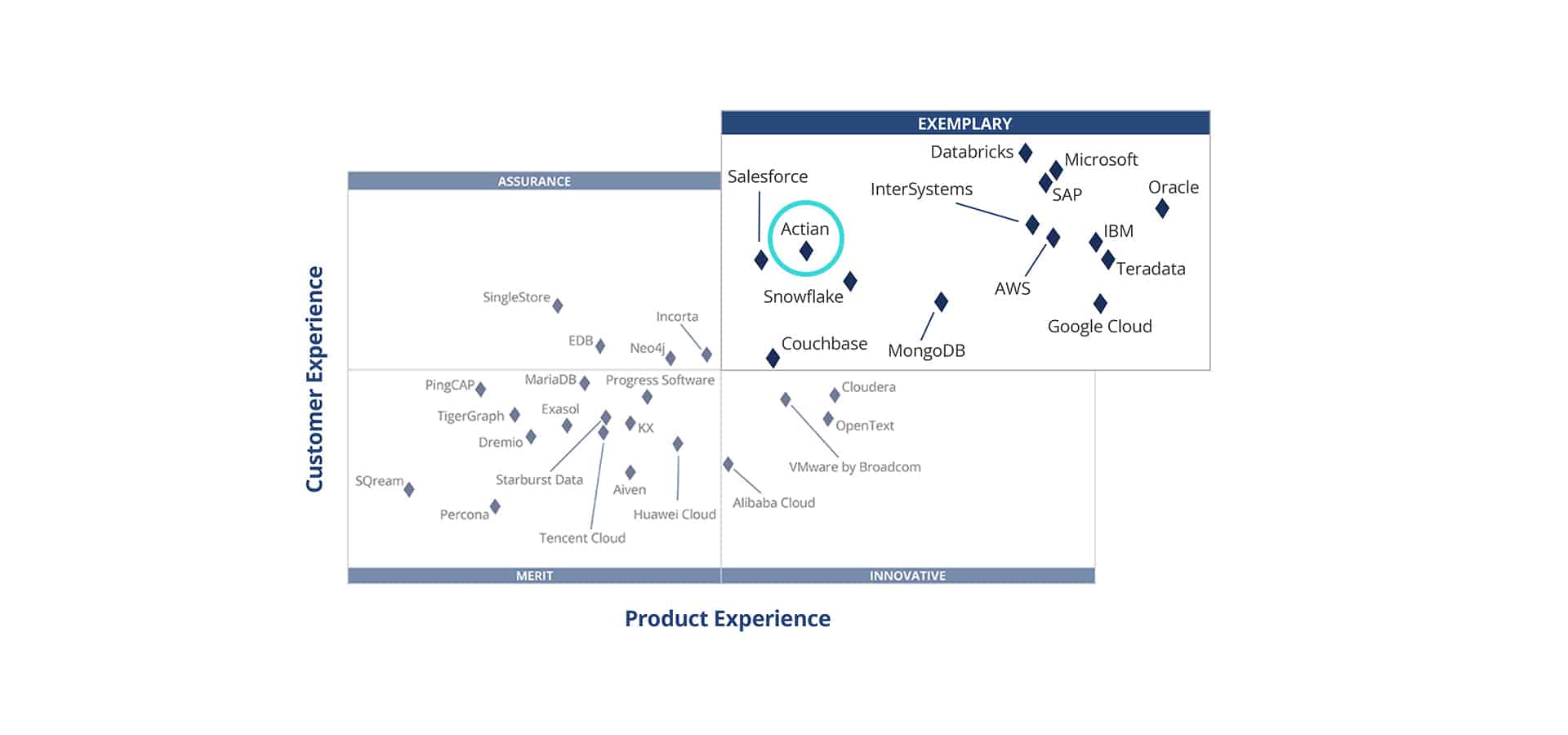

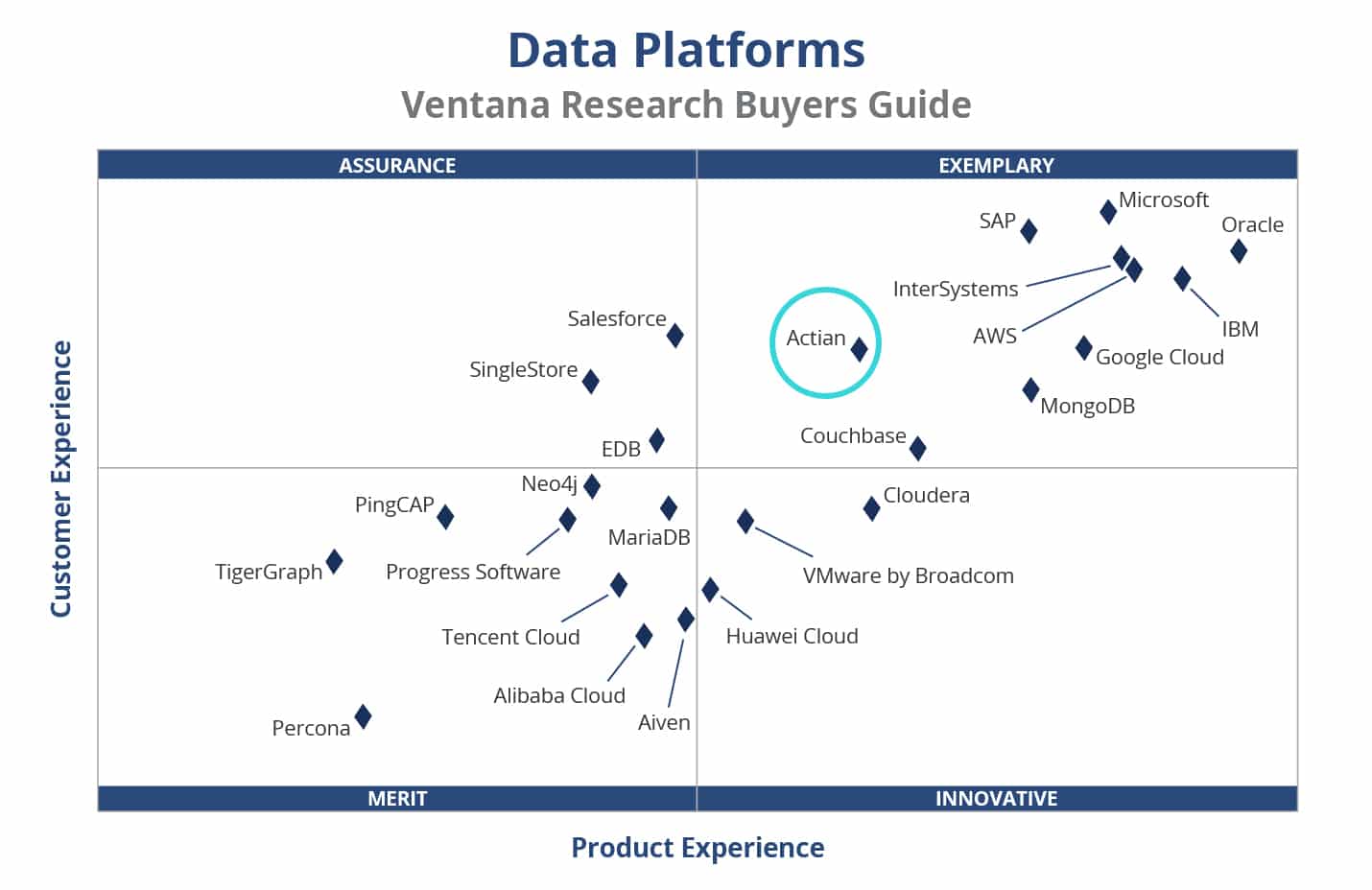

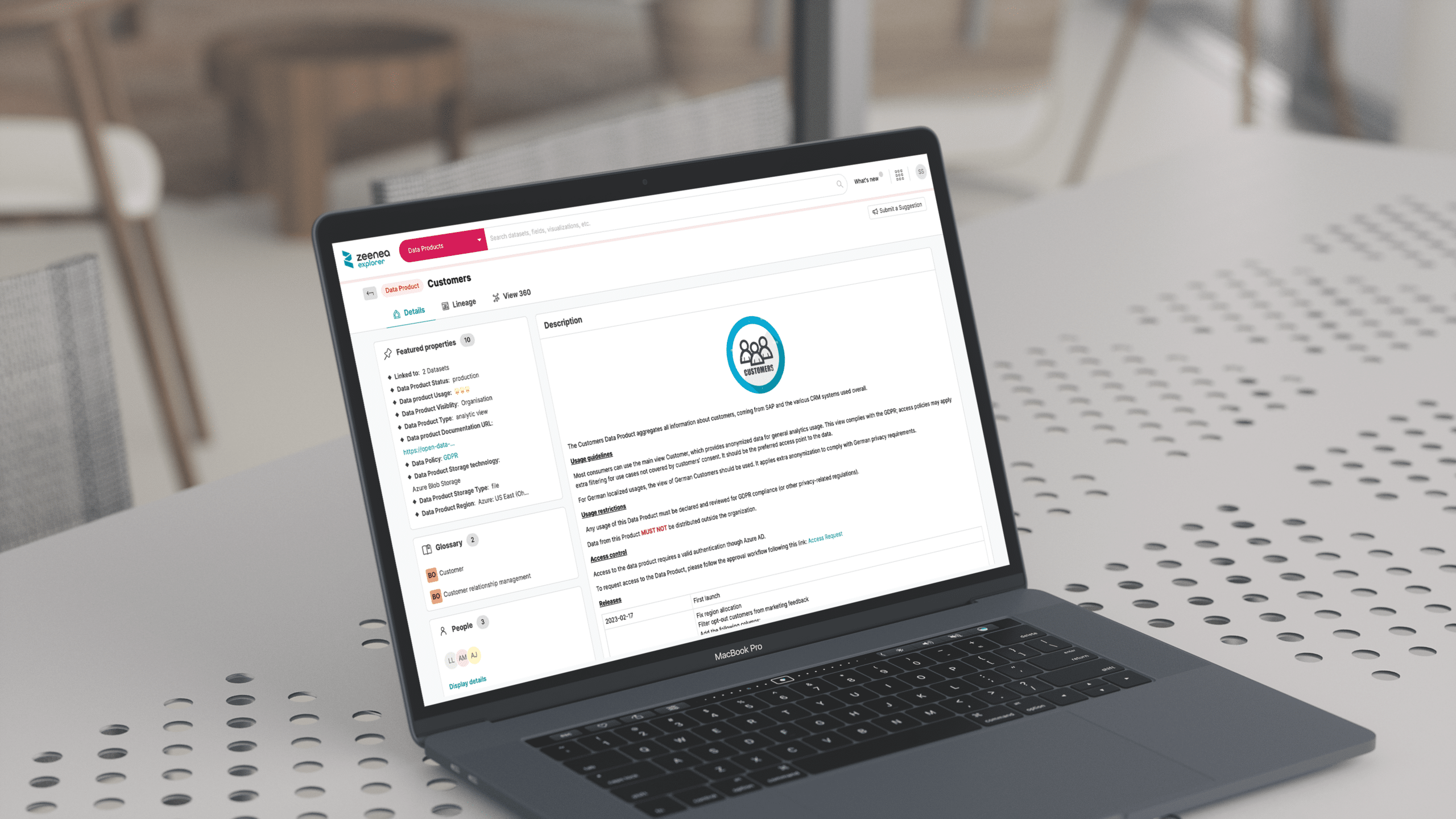

For example, the Actian Data Platform offers data warehousing, integration, and trusted insights on-prem, in the public cloud, or in hybrid environments. A hybrid approach can minimize disruption, preserve critical data, and ensure that legacy systems continue to function effectively alongside new technologies, allowing you to make decisions and drive outcomes with confidence.
