Part Three: SQLite, the “Flat File” of Databases

Over the past few articles, our SQLite blog series has been looking at the SQLite serverless architecture and why it is unsuitable for IoT environments. Those of you who have been following can jump ahead to the next section, but if you’re new to this discussion, you may want to review the earlier parts.

- In part one, “Mobile May Be IoT, but IoT Is Not Mobile When It Comes to Data,” we examined the fact that although SQLite is the most popular database on the planet (largely due to its ubiquitous deployment on smartphones and tablets, where it supports embedded applications for a single user), it cannot support the multi-connection, multi-user, multi-application requirements of the IoT use cases that are proliferating with viral ferocity in every industry. In a world that calls for the performance of cheetahs and peregrine falcons, SQLite is a banana slug.

- In part two, Rethinking What Client-Server Means for Edge Data Management, we considered key features and characteristics of the SQLite Serverless Architecture (portability, little-to-no configuration, small footprint, SQL API, and some initially free version to seed adoption) in light of the needs of modern edge data management and discussed the shortcomings of the SQLite architecture in terms of its ability to integrate with critical features found in traditional client-server databases (chiefly those multi-point qualifiers above).

In our final analysis of this serverless architecture, I’d very much like to explore (read: clarify) what will happen if a developer ignores these cautionary points and doubles down on SQLite as a way to handle IoT use cases.

Don’t Mistake Multi-Connection and Multi-Threaded for Client-Server

In the late 90s, applications became more sophisticated, generated and ingested more data, and performed more complex operations on that data internally. Consequently, app developers had to develop a lot of workarounds to deal with the limitations of routine, operating system-based file management services. Instead of spending time on all these DIY efforts, application developers were clamoring for a dedicated database they could embed into an application to support their specific data management needs.

At the turn of the 21st century, SQLite appeared and seemed tailor-made to meet these needs. SQLite enabled indexing, querying, and other data management functionality through a series of standard SQL calls that could be inserted into the application code, with the entire database bundled as a set of libraries that became part of the final deployed executable. Keep in mind that the majority of these applications tended to be monolithic, single-purpose, single-user applications designed for the simpler CPU architectures in use at the time. They were not designed to run multiple processes, let alone multiple threads. End-user and data security were not yet the high priorities they are today. And as for performance in a networked environment? Wireless networks were reactive and spotty at best. Multiple, external, high-bandwidth data connections were uncommon.
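As a rough illustration of what "standard SQL calls inserted into the application code" looks like in practice, here is a minimal sketch using Python's built-in `sqlite3` module. The `readings` table, its columns, and the sample values are hypothetical and exist only for this example.

```python
import sqlite3

# The whole database engine runs inside this process; ":memory:" keeps the
# example self-contained (a file path would persist the data instead).
conn = sqlite3.connect(":memory:")

# Hypothetical schema, for illustration only.
conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
conn.execute("CREATE INDEX idx_readings_sensor ON readings (sensor)")

conn.executemany(
    "INSERT INTO readings (sensor, value) VALUES (?, ?)",
    [("temp", 21.5), ("temp", 22.0), ("humidity", 40.0)],
)
conn.commit()

# A standard SQL query issued directly from application code.
temp_count = conn.execute(
    "SELECT COUNT(*) FROM readings WHERE sensor = ?", ("temp",)
).fetchone()[0]
print(temp_count)

conn.close()
```

There is no server to install or configure here, which is exactly the convenience that made the embedded model attractive for single-user applications.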

So it’s really no surprise that SQLite wasn’t able to service simultaneous read and write requests for a single connection (let alone for multiple connections) when it was designed. Designers were thrilled to have an embeddable database that would allow multiple processes to have sequential read and write access to a data table within an application. They were not looking for enterprise-grade client-server capabilities. They were not designing stand-alone database systems that would support multiple applications simultaneously. They simply needed more than flat-file access mediated by an operating system.

And there lies the heart of the issue with SQLite. It was never intended to handle multiple external applications or their connections asynchronously, as would a traditional client-server database. Modern networked applications commonly have multiple processes and/or multiple threads. When you throw SQLite into a situation with multiple connections and the potential for multiple simultaneous read and write requests, you quickly encounter the possibility of race conditions and data corruption.

To be fair, SQLite has tried to accommodate these evolving demands. The current version of SQLite offers three threading modes: single-thread, multi-thread, and serialized. In single-thread mode, SQLite’s internal mutexes are disabled and the library is safe to use from only one thread at a time. Multi-thread mode lets several threads use SQLite as long as no single database connection is used by more than one thread at once. Serialized (the default mode for the most current SQLite versions) lets threads share connections without that restriction and can support multiple concurrent connections, but it cannot service all of them simultaneously. SQLite can handle simultaneous read connections in multi-thread and serialized modes, but it locks the entire database file to prevent attempts at simultaneous writes. Nor can SQLite handle the orchestration of writes from several connections.
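The single-writer behavior can be observed with two connections to the same database file. The following is a minimal sketch using Python's built-in `sqlite3` module; the file name, table, and values are hypothetical, and `timeout=0` is chosen so that a blocked connection fails immediately rather than waiting.

```python
import os
import sqlite3
import tempfile

db_path = os.path.join(tempfile.mkdtemp(), "demo.db")

# isolation_level=None lets us issue BEGIN/COMMIT ourselves;
# timeout=0 makes a blocked connection fail at once instead of waiting.
first = sqlite3.connect(db_path, isolation_level=None, timeout=0)
second = sqlite3.connect(db_path, isolation_level=None, timeout=0)

first.execute("CREATE TABLE readings (sensor TEXT, value REAL)")

# The first connection takes the write lock and holds it.
first.execute("BEGIN IMMEDIATE")
first.execute("INSERT INTO readings VALUES ('temp', 21.5)")

second_writer_blocked = False
try:
    # A second writer cannot start while the first holds the lock.
    second.execute("BEGIN IMMEDIATE")
except sqlite3.OperationalError as exc:
    second_writer_blocked = True
    print("second writer refused:", exc)

first.execute("COMMIT")

# Once the first writer commits, the second can proceed.
second.execute("BEGIN IMMEDIATE")
second.execute("INSERT INTO readings VALUES ('temp', 22.0)")
second.execute("COMMIT")

row_count = first.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
first.close()
second.close()
```

In a real application the second connection would typically wait and retry (a non-zero `timeout`), which is where the added latency under write-heavy loads comes from.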

Compare that to the architecture of a true client-server database that is built to manage simultaneous writes. The client-server database evaluates each write service request and, if attempts are made to write to the same data within a table, it blocks the request until the current operation on that data is completed. If the writes target different parts of the table, the server allows them to go forward. That’s true orchestration. Locking the entire database and holding off writes (or faking it with WAL so that sequential writes can occur alongside multiple reads) is not the same thing.
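For readers unfamiliar with WAL (write-ahead logging), the sketch below shows the behavior referred to above, assuming Python's built-in `sqlite3` module and a filesystem that supports WAL. A reader is not blocked by an open write transaction, but it sees only the last committed state, and writes are still applied one at a time. The file name and table are hypothetical.

```python
import os
import sqlite3
import tempfile

db_path = os.path.join(tempfile.mkdtemp(), "wal_demo.db")

writer = sqlite3.connect(db_path, isolation_level=None, timeout=0)
reader = sqlite3.connect(db_path, isolation_level=None, timeout=0)

# Switch the database file to write-ahead logging.
journal_mode = writer.execute("PRAGMA journal_mode=WAL").fetchone()[0]
print("journal mode:", journal_mode)

writer.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
writer.execute("INSERT INTO readings VALUES ('temp', 21.5)")

# Open a write transaction and leave it uncommitted.
writer.execute("BEGIN IMMEDIATE")
writer.execute("INSERT INTO readings VALUES ('temp', 22.0)")

# The reader is not blocked, but it sees only the last committed state.
seen_during_write = reader.execute(
    "SELECT COUNT(*) FROM readings"
).fetchone()[0]

writer.execute("COMMIT")

# After the commit, the new row becomes visible to the reader.
seen_after_commit = reader.execute(
    "SELECT COUNT(*) FROM readings"
).fetchone()[0]

writer.close()
reader.close()
```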

Why is this a showstopper for SQLite in an IoT environment? One of the most basic operations with IoT devices and gateways involves writing data from a variety of devices into your data repository, and the write locks imposed during multi-threaded/multi-connection operations render SQLite non-viable in a production environment. Furthermore, a second basic operation taking place within an IoT environment involves performing data processing and analytics on previously collected datasets. While these may be read-intensive operations that are executed independently (either as separate processes or as separate threads) of the write-intensive operations just described, they still cannot occur concurrently in an SQLite environment and maintain ACID compliance.

As you scale up your deployments, or as system complexity increases—say you want to instrument more and more within an environment, be that an autonomous car or a smart building—you will invariably add more data connection points downstream or within your local environment. Each of these entities will have one or more additional database connections, if not their own database that needs a connection. You could try to establish these connections, but they will need to be handled through add-on application logic that will likely result in response times that are outside the design constraints for your IoT system.

Workarounds Designed to Deny (or Defy) Reality

SQLite partisans will wave their hands with dismissive nonchalance and tell you that SQLite is fast enough (it’s not; we’ve already discussed how slow SQLite is) and that you can build your own functionality to handle simultaneous reads and writes across multiple connections, in effect manually synchronizing them for the specific use case being handled. One method by which they manage this scenario involves using the serialized mode mentioned above and building functionality to handle synchronization and orchestration within the application threads. This approach tries to avoid the transmission of read and write requests on multiple channels (thereby avoiding race conditions and the potential for data corruption). However, this approach also requires a high degree of skill, the assumption of long-term responsibility for the code, and extensive testing and validation to ensure that operations are proceeding properly.
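To make the DIY burden concrete, here is one common shape this kind of application-level synchronization can take: a single writer thread that owns the connection, fed by a queue. This is a minimal sketch in Python, not a recommended or complete design; the `sensor` producers, the `readings` table, and the `STOP` sentinel are all hypothetical names introduced for the example.

```python
import os
import queue
import sqlite3
import tempfile
import threading

db_path = os.path.join(tempfile.mkdtemp(), "queue_demo.db")
write_queue = queue.Queue()
STOP = object()  # sentinel telling the writer thread to finish


def writer_loop():
    # Only this thread ever touches the write connection.
    conn = sqlite3.connect(db_path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings (sensor TEXT, value REAL)"
    )
    while True:
        item = write_queue.get()
        if item is STOP:
            break
        conn.execute("INSERT INTO readings VALUES (?, ?)", item)
        conn.commit()
    conn.close()


def sensor(name, count):
    # Hypothetical producer: queues readings instead of writing directly.
    for i in range(count):
        write_queue.put((name, float(i)))


writer = threading.Thread(target=writer_loop)
writer.start()

producers = [
    threading.Thread(target=sensor, args=(f"sensor-{n}", 50))
    for n in range(4)
]
for p in producers:
    p.start()
for p in producers:
    p.join()

# All producers are done; tell the writer to drain the queue and exit.
write_queue.put(STOP)
writer.join()

check = sqlite3.connect(db_path)
total_rows = check.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
check.close()
print(total_rows)
```

Even this small sketch leaves out error handling, retries, batching, backpressure, and coordination across processes, all of which the application team would have to write, test, and maintain.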

An alternative approach would be to build the equivalent of a client-server orchestration front-end and use the single-thread option within SQLite, which would preclude race conditions or data corruption. But dropping back to a single-thread option would be like watching this banana slug move in even slower motion. That’s not a viable approach, given the high-speed, parallel write operations needed to accommodate multiple high-resolution data feeds or large-scale sensor grids. Moreover, all you’ve done is to accommodate the weaknesses of the database architecture by forcing the application to do something that the database should be doing. And you’d have to do that over and over, for every app in your IoT portfolio.

There are several sets of code and a couple of small shops that have tried to productize this latter approach, but with limited success. They work only with certain development platforms on a few of the SQLite-supported platforms. Even if those platforms are a match for your use case, the performance issues may still increase the risk and difficulty of coding this workaround into your application.

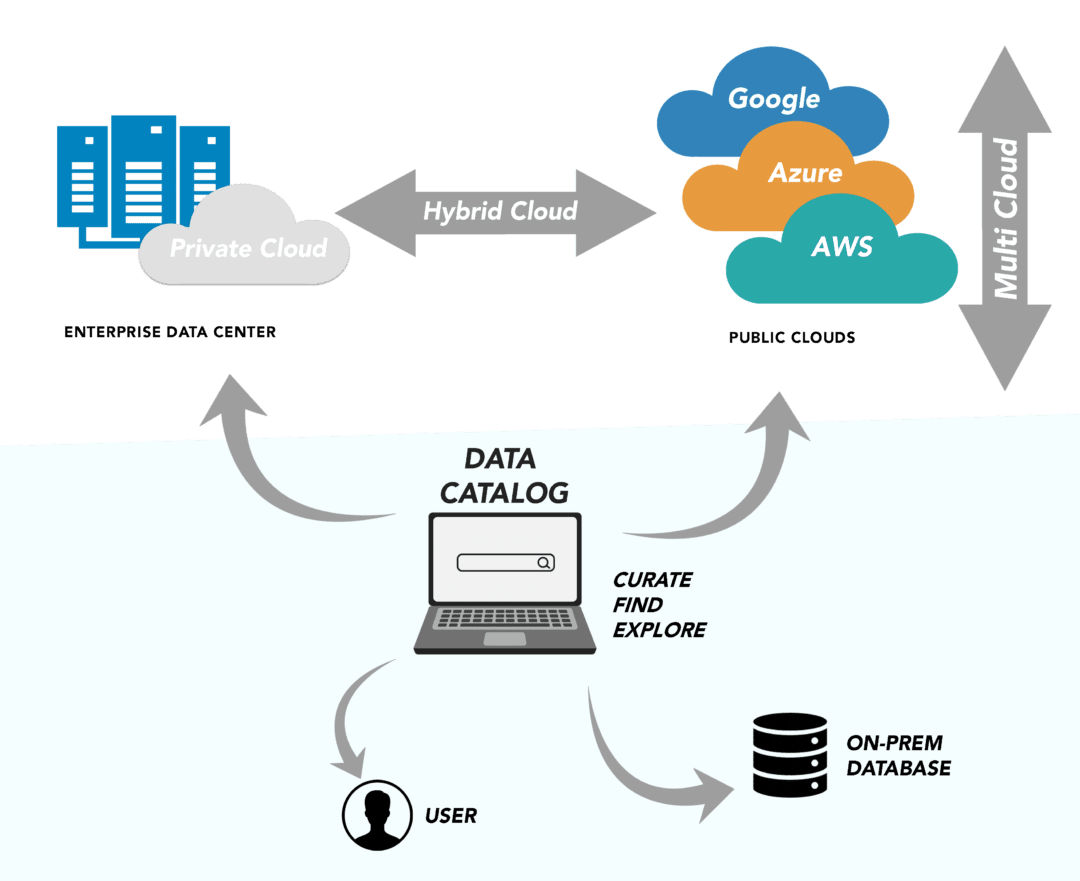

We’ve Seen This Iceberg Before

This cautionary tale isn’t just about the amount of DIY that will be incurred with the unquestioned reliance on SQLite for a given application. Like the IoT itself, it’s much bigger than that. For example, if you commit to handling this in your own code, how will you handle the movement of data from a device to the edge on-premises? How will you handle moving data to or from the cloud? The requirements for interacting with servers on either tier may be different, requiring you to write more code to perform data transformations (remember the blog on SQLite and ETL?). You might try to avoid the ETL bottleneck by using SQLite on both ends, but that would just kick the virtual can down the virtual road. You would still have to write code to handle SQLite masquerading as a server-based database on the gateway and in the cloud.

Ultimately, you can’t escape the need to write more code to make SQLite work in any of these scenarios. And that’s just the tip of this iceberg. You would need to make trade-off comparisons between DIY and partial-DIY plus code modules/libraries for other functionality—from data encryption and public key management to SQL query editing, and more. The list of features that a true client-server infrastructure brings to the table—all lacking in SQLite—goes on and on.

Back in the day, SQLite enabled developers to avoid much of the DIY that flat-file management had required. For the use cases that were emerging back then, it was an ideal solution. For today’s use cases, though, even more DIY would be required to make SQLite work—and even then it would not work all that well. The vast majority of IoT use cases require a level of client-server functionality that SQLite cannot provide without incurring significant costs—in performance, in development time, and in risk. In a nutshell, it’s déjà vu, but now SQLite is the flat file whose deficiencies we must leave in the past.

Oh, and if you think that all this is just an issue for developers, think again. In the next and final blog in this series, we’ll widen the lens a bit and look at what this means for the business and the bottom line.

If you’re ready to reconsider SQLite and learn more about Actian Zen, you can just kick the tires for free with Zen Core, which is royalty-free for development and distribution.
