The Journey to Data Mesh – Part 3 – Creating Your First Data Products

Actian Corporation

April 22, 2024

While the literature on data mesh is extensive, it often describes a final state, rarely how to achieve it in practice. The question then arises:

What approach should be adopted to transform data management and implement a data mesh?

In this series of articles, get an excerpt from our Practical Guide to Data Mesh where we propose an approach to kick off a data mesh journey in your organization, structured around the four principles of data mesh (domain-oriented decentralized data ownership and architecture, data as a product, self-serve data infrastructure as a platform, and federated computational governance) and leveraging existing human and technological resources.

- Part 1: Scoping Your Pilot Project

- Part 2: Assembling a Development Team & Data Platform for the Pilot Project

- Part 3: Creating Your First Data Products

- Part 4: Implementing Federated Computational Governance

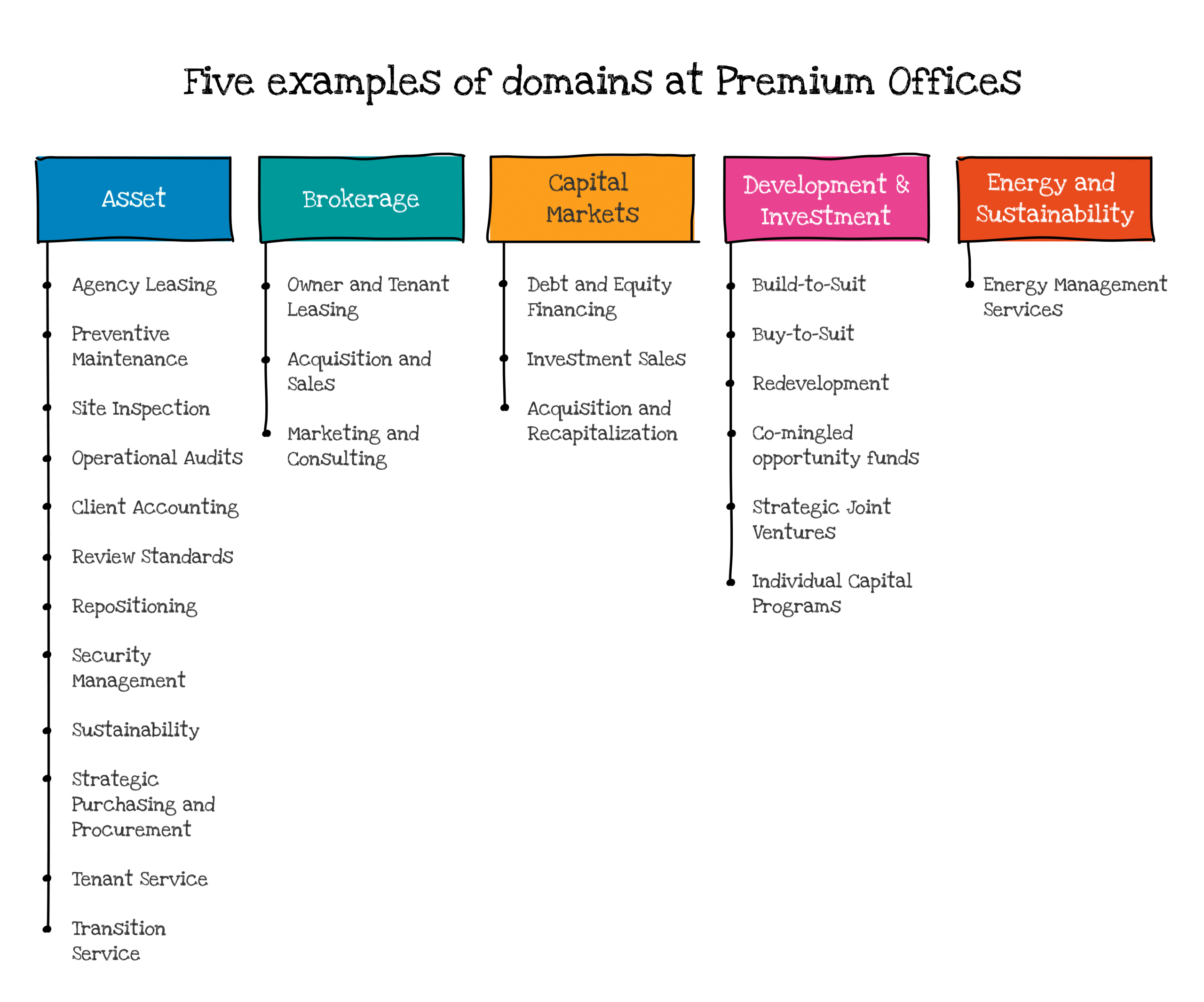

Throughout this series of articles, and in order to illustrate this approach for building the foundations of a successful data mesh, we will rely on an example: that of the fictional company Premium Offices – a commercial real estate company whose business involves acquiring properties to lease to businesses.

In the initial articles of the series, we’ve identified the domains, defined an initial use case, and assembled the team responsible for its development. Now, it’s time to move on to the second data mesh principle, “data as a product,” by developing the first data products.

The Product-Thinking Approach of the Mesh

Over the past decade, domains have often developed a product culture around their operational capabilities. They offer their products to the rest of the organization as APIs that can be consumed and composed to develop new services and applications. In some organizations, teams strive to provide the best possible experience to developers using their domain APIs: search in a global catalog, comprehensive documentation, code examples, sandbox environments, guaranteed and monitored service levels, etc.

These APIs are then managed as products: they are born, evolve over time (without compatibility breaks), are enriched, and are eventually deprecated, usually replaced by a newer, more modern, more performant version.

The data mesh proposes to apply this same product-thinking approach to the data shared by the domains.

Data Products Characteristics

In some organizations, this product-oriented culture is already well established. In others, it will need to be developed or introduced. But let’s not be mistaken:

Like an API product, a data product is not a new digital artifact requiring new technical capabilities. It is simply the result of a particular data management approach exposed by a domain to the rest of the organization.

Managing APIs as a product did not require a technological breakthrough: existing middleware did the job just fine. Similarly, data products can be deployed on existing data infrastructures, whatever they may be. Technically, a data product can be a simple file in a data lake with an SQL interface; a small star schema, complemented by a few views facilitating querying, instantiated in a relational database; or even an API, a Kafka stream, an Excel file, etc.

A data product is not defined by how it is materialized but by how it is designed, managed, and governed; and by a set of characteristics allowing its large-scale exploitation within the organization.

These characteristics are often condensed into the acronym DATSIS (Discoverable, Addressable, Trustworthy, Self-describing, Interoperable, Secure).

In addition, obtaining a DATSIS data product does not require significant investments. It involves defining a set of global conventions that domains must follow (naming, supported protocols, access and permission management, quality controls, metadata, etc.). The operational implementation of these conventions usually does not require new technological capabilities – existing solutions are generally sufficient to get started.

An exception, however, is the catalog. It plays a central role in the deployment of the data mesh by allowing domains to publish information about their data products, and consumers to explore, search, understand, and exploit these data products.

Best Practices for Data Product Design

Designing a data product is certainly not an exact science – for a given use case, there could be a single product, or three or four. To guide this choice, it is once again useful to leverage some best practices from distributed architectures – a data product must:

- Have a single and well-defined responsibility.

- Have stable interfaces and ensure backward compatibility.

- Be usable in several different contexts and therefore support polyglotism.
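As an illustration of the second practice, here is a minimal sketch of a backward-compatibility check between two versions of a data product interface. It assumes an interface can be described as a mapping of column names to type names; the function name, the column names, and the type names are hypothetical and not part of the guide.

```python
from typing import Dict, List

# Hypothetical representation: an interface as {column_name: type_name}.
Schema = Dict[str, str]


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List the changes that would break consumers of the old interface."""
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column: {column}")
        elif new[column] != old_type:
            problems.append(
                f"type changed: {column} ({old_type} -> {new[column]})"
            )
    # Columns that exist only in `new` are additions and break nothing.
    return problems


# Illustrative interface versions (assumed, not taken from the guide).
v1 = {"tenant_id": "STRING", "lease_start": "DATE", "rent_amount": "NUMERIC"}
v2 = {**v1, "lease_type": "STRING"}                   # adds a column
v3 = {"tenant_id": "INT64", "lease_start": "DATE"}    # retypes and removes

print(breaking_changes(v1, v2))
print(breaking_changes(v1, v3))
```

A check of this kind could run in a CI pipeline before a new version of the interface is published, so that only additive changes reach consumers without a major version change.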

Data Products Developer Experience

Developer experience is also a fundamental aspect of the data mesh, with the ambition to converge the development of data products and the development of services or software components. It’s not just about being friendly to engineers but also about responding to a certain economic rationality:

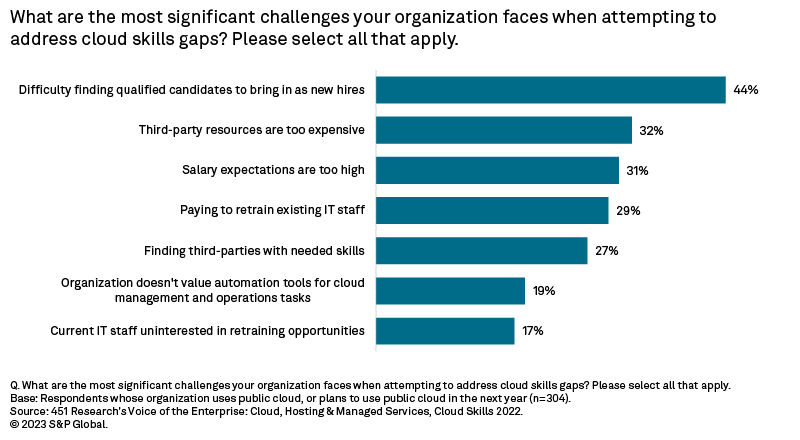

The decentralization of data management implies that domains have their own resources to develop data products. In many organizations, the centralized data team is not large enough to support distributed teams. To ensure the success of the data mesh, it is essential to be able to draw from the pool of software engineers, which is often larger.

The state of the art in software development relies on a high level of automation: declarative allocation of infrastructure resources, automated unit and integration testing, orchestrated build and deployment via CI/CD tools, Git workflows for source and version management, automatic documentation publishing, etc.

The development of data products should converge toward this state of the art – and depending on the organization’s maturity, its teams, and its technological stack, this convergence will take more or less time. The right approach is to automate as much as possible using existing and mastered tools, then identify operations that are not automated to gradually integrate additional tooling.

In practice, here is what constitutes a data product:

- Code first – For pipelines that feed the data product with data from different sources or other data products; for any consumption APIs of the data product; for testing pipelines and controlling data quality; etc.

- Data, of course – But most often, the data exists in systems and is simply extracted and transformed by pipelines. Therefore, it is not present in the source code (excluding exceptions).

- Metadata – Some of which document the data product: schema, semantics, syntax, quality, lineage, etc. Others are intended to ensure product governance at the mesh scale – contracts, responsibilities, access policies, usage restrictions, etc.

- Infrastructure – Or more precisely, the declaration of the physical resources necessary to instantiate the data product: deployment and execution of code, deployment of metadata, resource allocation for storage, etc.
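The four constituents listed above can be sketched as a simple descriptor. This is only one possible representation under stated assumptions: the class name, field names, repository URL, and sample values are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataProduct:
    """Hypothetical descriptor grouping the four constituents of a data product."""
    name: str
    domain: str
    code_repository: str                     # code: pipelines, tests, APIs
    data_sources: List[str] = field(default_factory=list)          # data
    metadata: Dict[str, str] = field(default_factory=dict)         # metadata
    infrastructure: Dict[str, str] = field(default_factory=dict)   # infrastructure

    def missing_parts(self) -> List[str]:
        """Return the constituents that have not been declared yet."""
        parts = {
            "code": self.code_repository,
            "data": self.data_sources,
            "metadata": self.metadata,
            "infrastructure": self.infrastructure,
        }
        return [part for part, value in parts.items() if not value]


# Illustrative values only; the infrastructure is deliberately left undeclared.
product = DataProduct(
    name="tenancy-analytics",
    domain="Brokerage",
    code_repository="https://git.example.com/brokerage/tenancy-analytics",
    data_sources=["lease_contracts"],
    metadata={"owner": "brokerage-data-product-owner"},
)

print(product.missing_parts())
```

Keeping such a descriptor in the same repository as the pipeline code lets the usual software tooling (reviews, CI checks, versioning) apply to the whole data product rather than to its code alone.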

On the infrastructure side, the data mesh does not require new capabilities – the vast majority of organizations already have a data platform. Nor does implementing the data mesh require a centralized platform. Some companies have already invested in a common platform, and it seems logical to leverage its capabilities to develop the mesh. Others have several platforms, with some entities or domains running their own infrastructure. It is entirely possible to deploy the data mesh on these hybrid infrastructures: as long as the data products respect common standards for addressability, interoperability, and access control, the technical details of how they are run matter little.

Premium Offices Example:

To establish an initial framework for the governance of its data mesh, Premium Offices has set the following rules:

- A data product materializes as a dedicated project in BigQuery – this allows setting access rules at the project level, or more finely if necessary. These projects will be placed in a “data products” directory and a sub-directory bearing the name of the domain to which they belong (in our example, “Brokerage”).

- Data products must offer views to access data – these views provide a stable consumption interface and potentially allow evolving the internal model of the product without impacting its consumers.

- All data products must identify common data (Clients, Products, Suppliers, Employees, etc.) using common references (LEI, product code, UPC, EAN, email address, etc.) – this simplifies cross-referencing data from different data products.

- Access to data products requires strong authentication based on GCP’s IAM capabilities – using a service account is possible, but each user of a data product must then have a dedicated service account. When access policies depend on users, the end user’s identity must be used via OAuth2 authentication.

- The norm is to grant access only to views – and not to the internal model.

- Access requests are processed by the Data Product Owner through workflows established in ServiceNow.

- DBT is the preferred ETL tool for implementing pipelines – each data product has a dedicated repository for its pipeline.

- A data product can be consumed either via the JDBC protocol or via BigQuery APIs (read-only).

- A data product must define its contract – data update frequency, quality levels, information classification, access policies, and usage restrictions.

- The data product must publish its metadata and documentation in a marketplace – in the absence of an existing system, Premium Offices decides to document its first data products in a dedicated space on its company’s wiki.
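Two of these rules lend themselves to simple automated checks: the contract that every data product must define, and the norm of exposing only views. The sketch below is an assumption about how such checks might look; the key names, object names, and sample values are hypothetical and do not come from Premium Offices' actual setup.

```python
from typing import Dict, List

# Contract items named in the rules above; the key names are hypothetical.
REQUIRED_CONTRACT_FIELDS = (
    "update_frequency",
    "quality_levels",
    "information_classification",
    "access_policies",
    "usage_restrictions",
)


def contract_gaps(contract: Dict[str, object]) -> List[str]:
    """Return the required contract fields that are missing or empty."""
    return [name for name in REQUIRED_CONTRACT_FIELDS if not contract.get(name)]


def exposed_objects(objects: Dict[str, str]) -> List[str]:
    """Keep only views: the internal model is not granted to consumers."""
    return sorted(name for name, kind in objects.items() if kind == "view")


# Illustrative contract with one item still undefined.
tenancy_contract = {
    "update_frequency": "daily",
    "quality_levels": {"completeness": "to be agreed"},
    "information_classification": "internal",
    "access_policies": ["approved by the Data Product Owner"],
}

# Illustrative objects of a data product: a small star schema plus views.
tenancy_objects = {
    "fact_lease": "table",
    "dim_tenant": "table",
    "dim_time": "table",
    "v_lease_summary": "view",
    "v_current_tenants": "view",
}

print(contract_gaps(tenancy_contract))
print(exposed_objects(tenancy_objects))
```

Checks like these stay independent of the platform: they only read declarations, so they could run against BigQuery today and against another infrastructure later.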

This initial set of rules will of course evolve, but it sets a pragmatic framework to ensure the DATSIS characteristics of data products by exclusively leveraging existing technologies and skills. For its pilot, Premium Offices has chosen to decompose the architecture into two data products:

- Tenancy Analytics – This first data product offers analytical capabilities on lease contracts – entity, parent company, property location, lease start date, lease end date, lease type, rent amount, etc. It is modeled in the form of a small star schema allowing analysis along two dimensions: time and tenant – these are the analysis dimensions needed to build the first version of the dashboard. It also includes one or two views that leverage the star schema to provide pre-aggregated data – these views constitute the public interface of the data product. Finally, it includes a view to obtain the most recent list of tenants.

- Entity Ratings – This second data product provides historical ratings of entities in the form of a simple dataset and a mirror view to serve as an interface, in accordance with the common rules. The ratings are obtained from a specialized provider, which distributes them through an API. To invoke this API, a list of entities must be provided, obtained by consuming the appropriate interface of the Tenancy Analytics product.
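The dependency between the two data products can be sketched as follows: Entity Ratings reads the tenant list from the Tenancy Analytics interface, asks the provider for ratings, and appends dated rows to its history. This is a simplified illustration in which both the tenant view and the provider API are replaced by stub functions; all function names, entity identifiers, and rating values are hypothetical.

```python
from datetime import date
from typing import Callable, Dict, Iterable, List


def refresh_entity_ratings(
    list_tenants: Callable[[], Iterable[str]],
    fetch_ratings: Callable[[List[str]], Dict[str, str]],
    as_of: date,
) -> List[Dict[str, str]]:
    """Build dated rating rows for the entities exposed by Tenancy Analytics."""
    entities = sorted(set(list_tenants()))   # de-duplicate the tenant list
    ratings = fetch_ratings(entities)        # one call to the provider
    return [
        {"entity_id": e, "rating": ratings[e], "rated_on": as_of.isoformat()}
        for e in entities
        if e in ratings                      # skip entities the provider omits
    ]


# Stub standing in for the "most recent list of tenants" view.
def stub_list_tenants() -> List[str]:
    return ["entity-b", "entity-a", "entity-b", "entity-c"]


# Stub standing in for the rating provider's API (made-up values).
def stub_fetch_ratings(entities: List[str]) -> Dict[str, str]:
    known = {"entity-a": "A", "entity-b": "BBB"}
    return {e: known[e] for e in entities if e in known}


rows = refresh_entity_ratings(stub_list_tenants, stub_fetch_ratings, date(2024, 4, 22))
for row in rows:
    print(row)
```

Passing the two dependencies in as functions keeps the consuming product coupled only to the public interface of Tenancy Analytics, not to its internal star schema.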

In conclusion, adopting the mindset of treating data as a product is essential for organizations undergoing data management decentralization. This approach cultivates a culture of accountability, standardization, and efficiency in handling data across different domains. By viewing data as a valuable asset and implementing structured management frameworks, organizations can ensure consistency, reliability, and seamless integration of data throughout their operations.

In our final article, we will go over the fourth and last principle of data mesh: federated computational governance.

The Practical Guide to Data Mesh: Setting up and Supervising an Enterprise-Wide Data Mesh

Written by Guillaume Bodet, our guide was designed to arm you with practical strategies for implementing data mesh in your organization, helping you:

- Start your data mesh journey with a focused pilot project.

- Discover efficient methods for scaling up your data mesh.

- Acknowledge the pivotal role an internal marketplace plays in facilitating the effective consumption of data products.

- Learn how the Actian Data Intelligence Platform emerges as a robust supervision system, orchestrating an enterprise-wide data mesh.
