Getting Started With Actian Zen and BtrievePython

Johnson Varughese

July 1, 2024

Welcome to the world of Actian Zen, a versatile and powerful edge data management solution designed to help you build low-latency embedded apps. This is Part 1 of the quickstart blog series that focuses on helping embedded app developers get started with Actian Zen. In this post, we’ll explore how to use BtrievePython to run Btrieve2 Python applications with the Zen 16.0 Enterprise/Server Database Engine.

But before we dive in, let’s do a quick introduction.

What is Btrieve?

The Actian Zen Btrieve interface is a high-performance, low-level, record-oriented database management system (DBMS) developed by Pervasive Software, now part of Actian Corporation. It provides efficient and reliable data storage and retrieval by focusing on record-level operations rather than complex queries. Btrieve is known for its speed, flexibility, and robustness, making it a popular choice for applications that require high-speed data access and transaction processing.
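
To make "record-oriented" a little more concrete, here is a minimal sketch of how an application might lay out a fixed-length record as raw bytes before handing it to a record-level store. It uses only Python's standard struct module. The record layout (a 4-byte integer key followed by an 8-byte double) and the helper names are assumptions chosen for illustration; this is not the Btrieve file format or the BtrievePython API.

```python
import struct

# Hypothetical fixed-length record layout (an assumption for illustration):
# a 4-byte little-endian integer key followed by an 8-byte double.
RECORD_FORMAT = "<id"
RECORD_LENGTH = struct.calcsize(RECORD_FORMAT)  # 4 + 8 bytes, no padding


def pack_record(key, value):
    """Serialize one record into a fixed-length byte string."""
    return struct.pack(RECORD_FORMAT, key, value)


def unpack_record(record):
    """Deserialize a byte string back into a (key, value) tuple."""
    return struct.unpack(RECORD_FORMAT, record)


if __name__ == "__main__":
    raw = pack_record(9, 3.0)
    print(len(raw), unpack_record(raw))
```

A record-level interface works with byte buffers like this one, leaving it to the application to interpret the fields.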

What is BtrievePython?

BtrievePython is a modern Python interface for interacting with Actian Zen databases. It allows developers to leverage the powerful features of Btrieve within Python applications, providing an easy-to-use and efficient way to manage Btrieve records. By integrating Btrieve with Python, BtrievePython enables developers to build high-performance, data-driven applications using Python’s extensive ecosystem and Btrieve’s reliable data-handling capabilities.

This guide walks you through the setup on both Microsoft Windows Server 2019 and Ubuntu 20, so that you have the tools you need to run your first Btrieve2 Python application.

Getting Started With Actian Zen

Actian Zen offers a range of data access solutions compatible with various operating systems, including Android, iOS, Linux, Raspbian, and Windows (including IoT and Nano Server). For this demonstration, we’ll focus on Windows Server 2019, though the process is similar across different platforms.

Before we dive into the setup, ensure you’ve downloaded and installed the Zen 16.0 Enterprise/Server Database Engine for Windows or for Linux (Ubuntu). Detailed installation instructions can be found on Actian’s Academy channel.

Setting Up Your Environment

Installing Python and BtrievePython on Windows:


- Download and Install Python: Visit Python’s official website and download the latest version (we’re using Python v3.12).

- Open Command Prompt as Administrator: Ensure you have admin rights to proceed with the installation.

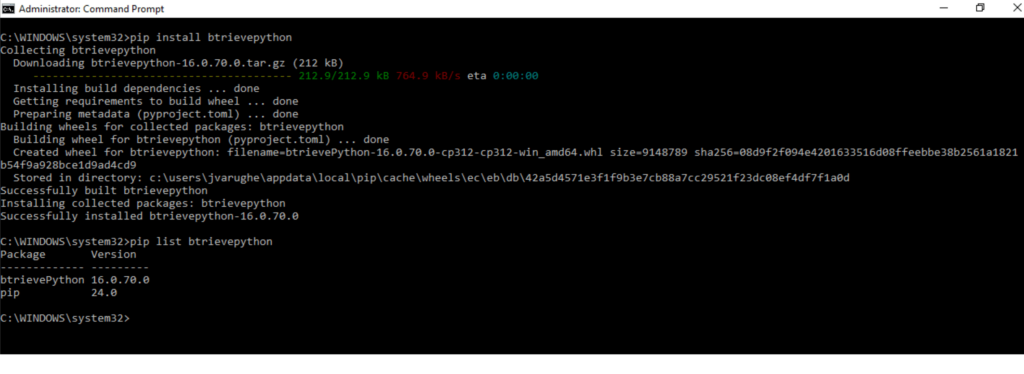

- Install BtrievePython: Execute pip install btrievePython. Note that this step requires an installed Zen 16.0 client or engine. If the BtrievePython installation fails, make sure Microsoft Visual C++ 14.0 or greater is installed; it is available through the Visual C++ Build Tools.

- Verify Installation: Run pip list to check if BtrievePython is listed.

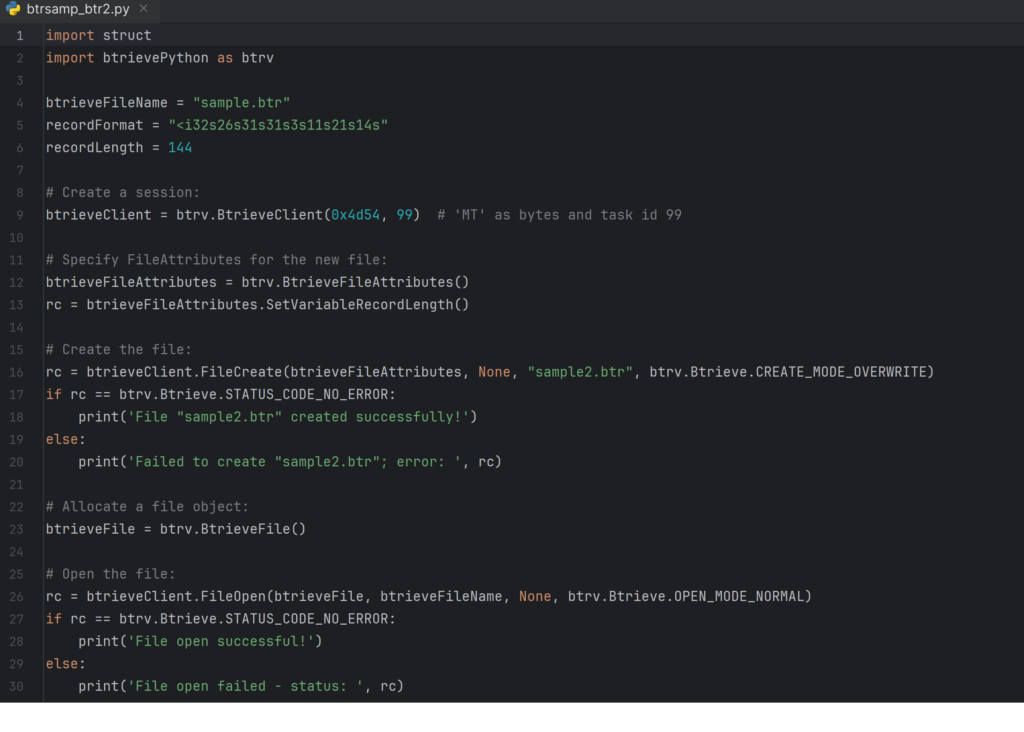

- Run a Btrieve2 Python Sample: Download the sample program from the Actian documentation and run it using python btr2sample.py 9 from an admin command prompt.
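
As a complement to pip list, the verification step can also be sketched from inside Python. The snippet below is a minimal sketch that reports the running Python version and whether a module can be located. It assumes the package is importable under the name btrievePython (the same name used with pip); check_environment is a hypothetical helper, not part of BtrievePython.

```python
import importlib.util
import sys


def check_environment(module_name="btrievePython"):
    """Report the Python version and whether module_name can be located.

    The default module name is an assumption: it mirrors the pip package name.
    """
    spec = importlib.util.find_spec(module_name)
    return {
        "python_version": "{}.{}".format(*sys.version_info[:2]),
        "module": module_name,
        "installed": spec is not None,
    }


if __name__ == "__main__":
    print(check_environment())
```

Note that find_spec only locates the module without importing it, so this check does not confirm that the Zen client libraries load correctly. Running the sample program remains the real test.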


Installing Python and BtrievePython on Linux (Ubuntu):


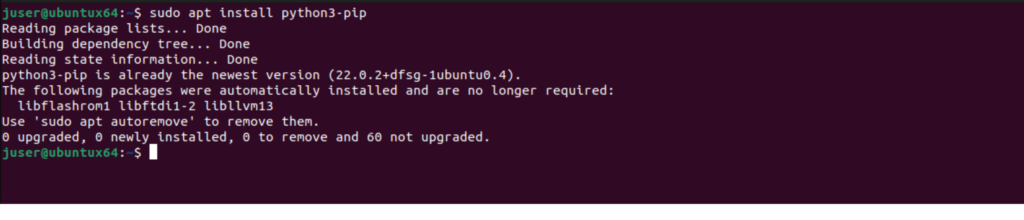

- Install PIP: Use sudo apt install python3-pip to get PIP, the Python package installer.

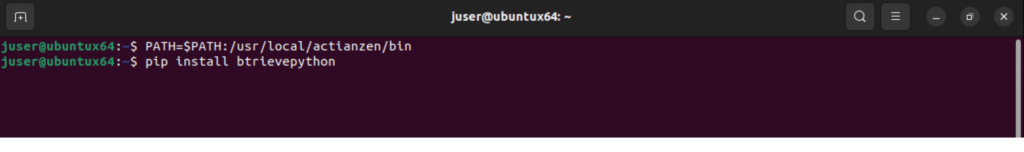

- Set the PATH: Open a terminal window as a non-root user and run export PATH=$PATH:/usr/local/actianzen/bin so that the Zen binaries are on your PATH.

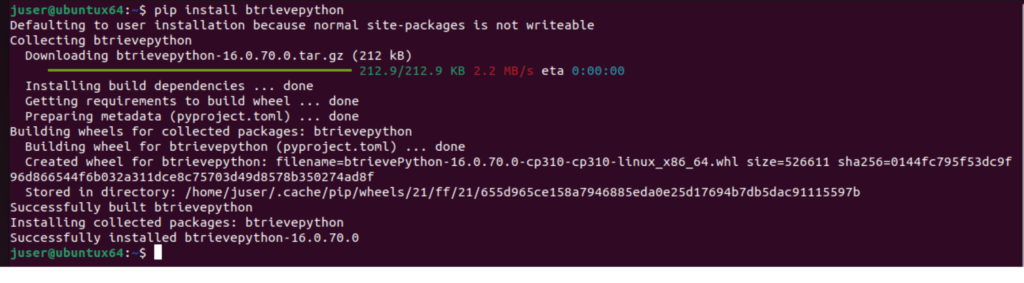

- Install BtrievePython: Execute sudo pip install btrievePython. As on Windows, this step requires an installed Zen 16.0 client or engine.

- Verify Installation: Run pip show btrievePython to confirm the installation.

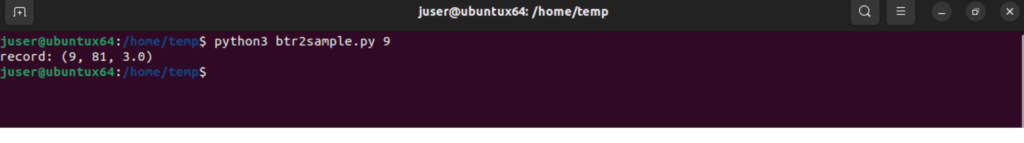

- Run a Btrieve2 Python Sample: After downloading the sample from the Actian documentation, run the sample with python3 btr2sample.py 9.
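
If the install or the sample fails on Linux, one thing worth checking is whether the export step took effect in the current shell. Here is a minimal sketch of that check in Python. The directory comes from the export command above; path_contains is a hypothetical helper written for this example.

```python
import os

# Directory taken from the export step above.
ZEN_BIN = "/usr/local/actianzen/bin"


def path_contains(directory, path_value):
    """Return True if directory is one of the entries in a PATH-style string."""
    entries = [entry.rstrip("/") for entry in path_value.split(os.pathsep) if entry]
    return directory.rstrip("/") in entries


if __name__ == "__main__":
    if path_contains(ZEN_BIN, os.environ.get("PATH", "")):
        print("Zen bin directory is on PATH")
    else:
        print("Zen bin directory is missing from PATH")
```

Because export only affects the current shell session, this check should be run in the same terminal where the sample will be run.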


Visual Guide

The setup process includes several steps that are best followed with visual aids. The screenshots embedded in the steps above cover the following stages:

For the Windows Setup:

- Python download site: downloading and setting up Python.

- Command Prompt operations: installing BtrievePython.

- Verification and execution: verifying the installation and running the Btrieve2 sample application.

For the Linux Setup:

- Installation commands: installing python3-pip.

- BtrievePython setup: opening a terminal window as a non-root user, exporting PATH=$PATH:/usr/local/actianzen/bin, and installing BtrievePython.

- Sample execution: running the Btrieve2 sample app.

Conclusion

This guide has provided a thorough walkthrough on using BtrievePython with Actian Zen to run Btrieve2 Python applications. Whether you’re working on Windows or Linux, these steps will help you set up your environment efficiently and get your applications running smoothly. Actian Zen’s compatibility with multiple platforms ensures that you can manage your data seamlessly, regardless of your operating system.

For further details and visual guides, refer to the Actian Academy and the comprehensive documentation. Happy coding!
