Get to Know Actian’s 2024 Interns

Katie Keith

July 24, 2024

We want to celebrate our interns worldwide and recognize the incredible value they are bringing to our company. As a newly inducted intern myself, I am honored to have the opportunity to introduce our new cohort of interns!

Andrea Brown (She/Her)

Cloud Operations Engineer Intern

Andrea is a Computer Science major at the University of Houston-Downtown. She lives in Houston and in her free time enjoys practicing roller skating and learning French. Her capstone project focuses on using Grafana for monitoring resources and testing them with k6 synthetics.

What she likes most about the intern program so far is the culture. “Actian has done such a great job cultivating a culture where everyone wants to see you succeed,” she notes. “Everyone is helpful and inspiring.” From the moment she was contacted for an internship to meeting employees and peers during orientation week, she felt welcome and knew right away she had made the right choice. She has no doubt this will be a unique and unforgettable experience, and she is looking forward to learning more about her capstone project and connecting with people across the organization.

Claire Li (She/Her)

UX Design Intern

Claire is based in Los Angeles and is studying interaction design at ArtCenter College of Design. For her capstone project, she will create interactive standards for the Actian Data Platform and apply them to reusable components and the onboarding experience to enhance the overall user experience.

“Actian fosters a positive and supportive environment for interns to learn and grow,” she says.

Claire enjoys the collaborative atmosphere and the opportunity to tackle real-world challenges. She looks forward to seeing how she and her fellow interns will challenge themselves to problem-solve, present their ideas, and bring value to Actian in their unique final presentations. Outside of work, she spends most of her weekends hiking and capturing nature shots.

Prathamesh Kulkarni (He/Him)

Cloud QA Intern

Prathamesh is working toward his master’s degree in Computer Science at The University of Texas at Dallas. He is originally from Pune, India.

His capstone project aims to streamline the development of Actian’s in-house API test automation tool and research the usability of GitHub Copilot in API test automation.

By automating these tasks, he and his team can reduce manual effort and expedite the creation of effective and robust test automation solutions. The amazing support he has received and the real value of the work he has been involved in have been highlights of his internship so far. He says it’s been a rewarding experience to apply what he has learned in a practical setting and see the impact of his contributions.

A fun fact about him is that he loves washing dishes—it’s like therapy to him, and he even calls himself a professional dishwasher! He is also an accomplished Indian classical percussion musician, having graduated in that field.

Marco Brodkorb

Development Vector Intern

Hailing from Thuringia, Germany, Marco is working on his master’s degree in Computer Science at Technische Universität Ilmenau. He began his work as an Actian intern by writing unit tests and then began integrating a new compression method for strings called FSST.

He is working on integrating a more efficient range join algorithm that uses ad hoc generated UB-Trees, as part of his master’s thesis.

Naomi Thomas (She/Her)

Education Team Intern

Naomi is from Florida and is a graduate student at the University of Central Florida pursuing a master’s degree in Instructional Design & Technology. She has five years of experience working in the education field with an undergraduate degree in Education Sciences.

For her capstone project, Naomi is diving into the instructional design process to create a customer-facing course on DataConnect 12.2 for Actian Academy. She is enjoying the company culture and the opportunity to learn from experienced instructional designers and subject matter experts. “Everyone has been incredibly welcoming and supportive, and I’m excited to be working on a meaningful project with a tangible impact!” she says.

A fun fact about her is that she has two adorable dogs named Jax and King. She enjoys reading and collecting books in her free time.

Linnea Castro (She/Her)

Cloud Operations Engineer Intern

Linnea is majoring in Computer Science at Washington State University. She is working with the Cloud Operations team to convert Grafana observability dashboards into source code—effective observability helps data tell a story, while converting these dashboards to code will make the infrastructure that supports the data more robust.

She has loved meeting new people and collaborating with the Cloud team. Their morning sync meetings bring together people across the U.S. and U.K. She says that getting together with the internship leaders and fellow interns during orientation week set a tone of connection and possibility that continues to drive her each day. Linnea is looking forward to continuing to learn about Grafana and get swifter with querying. To that end, she is eager to learn as much as she can from the Cloud team and make a meaningful contribution.

She has three daughters who are in elementary school and is a U.S. Coast Guard veteran. Her favorite book is “Mindset” by Dr. Carol Dweck because it introduced her to the concept and power of practicing a growth mindset.

Alain Escarrá García (He/Him)

Development Vector Intern

Alain is from Cuba and just finished his first year of bachelor studies at Constructor University in Bremen, Germany, where he is majoring in Software, Data, and Technology. Working with the Actian Vector team, his main project involves introducing microservice architecture for user-defined Python functions. In his free time, he enjoys music, both listening to it and learning to play different instruments.

Matilda Huang (She/Her)

CX Design Intern

Matilda is pursuing her master’s degree in Technology Innovation at the University of Washington. She is participating in her internship from Seattle. Her capstone project focuses on elevating the voice of our customers. She aims to identify friction points in our current feedback communication process and uncover areas of opportunity for CX prioritization.

Matilda is enjoying the opportunity to collaborate with members from various teams and looks forward to connecting with more people across the company.

Liam Norman (He/Him)

Generative AI Intern

Liam is a senior at Harvard studying Computer Science. His capstone project involves converting natural language queries into SQL queries to assist Actian’s sales team.

So far, his favorite part of the internship was meeting the other interns at orientation week. A fun fact: In his free time, he likes to draw cartoons and play the piano.

Laurin Martins (He/Him)

Development Vector Intern

Laurin is from a small village near Frankfurt, Germany, called Langebach and is studying for a master’s degree in IT at TU Ilmenau. His previous work for Actian includes his bachelor’s thesis, “Multi-key Sorting in Vectorized Query Execution.”

After that, he completed an internship to implement the proposed algorithms for a wide variety of data types. He is currently working on his master’s thesis titled “Elastic Query Processing in Stateless x100.” He plans to further develop the ideas and implementation presented in his master’s thesis in a Ph.D. program in conjunction with TU Ilmenau.

In his free time, he discovered that Dungeons and Dragons is a great evening board game to play with friends. He is also the software development lead at a startup company (https://healyan.com).

Kelsey Mulrooney (She/Her)

Cloud Security Engineer Intern

Kelsey is from Wilmington, Delaware, and majoring in Cybersecurity at the Rochester Institute of Technology. She is involved in implementing honeypots—simulated systems designed to attract and analyze hacker activities.

Kelsey’s favorite part about the internship program so far is the welcoming environment that Actian cultivates. She looks forward to seeing how much she can accomplish in the span of 12 weeks. Outside of work, Kelsey enjoys playing percussion, specifically the marimba and vibraphone.

Justin Tedeschi (He/Him)

Cloud Security Engineer Intern

Justin is from Long Island, New York, and an incoming senior at the University of Tampa. He’s majoring in Management Information Systems with a minor in Cybersecurity. At Actian, he’s learning about vulnerabilities in the cloud and how to spot them, understand them, and also prevent them.

The internship program allows access to a variety of resources, which he’s definitely taking advantage of, including interacting with people he finds to be knowledgeable and understanding. A fun fact about Justin is that he used to be a collegiate runner—one year at the University of Buffalo, a Division 1 school, then another year at the college he’s currently attending, which is Division 2.

Guillermo Martinez Alacron

Development Vector Intern

Hailing from Mexico, Guillermo is studying Industrial Engineering and participating in an exchange at TU Ilmenau in Germany. As part of his internship, he is working on the design and implementation of a quality management system in order to obtain the ISO 9001 certification for Actian. He enjoys Star Wars, rock music, and sports—and is especially looking forward to the Olympics!

Joe Untrecht (He/Him)

Cloud Operations Engineer Intern

Joe is from Portola Valley, California, which is a small town near Palo Alto. He is heading into his senior year at the University of Wisconsin-Madison, majoring in Computer Science. He loves and cannot recommend this school enough. One interesting fact about him is that he loves playing Hacky Sack and is about to start making custom hacky sacks. Another interesting fact is that he loves all things Star Wars and believes “Revenge of the Sith” is clearly the best movie. His favorite dessert is cookies and milk.

His capstone project involves cloud resource monitoring. He has been learning how to use the various services on Amazon Web Services, Google Cloud, and Microsoft Azure while practicing how to visualize the data and use the services on Grafana. He has had an immense amount of fun working with these platforms and doesn’t think he has ever learned more than in the first three weeks of his internship. He views the internship as a great opportunity to improve his skills and build new ones. He is “beyond grateful” for this opportunity and excited to continue learning about Actian and working on his capstone project.

Jon Lumi (He/Him)

Software Development Intern

Jon is from Kosovo and is a second-year Computer Science student at Constructor University in Bremen, Germany. He is working at the Actian office in Ilmenau, Germany, and previously worked as a teaching assistant at his university for first-year courses.

His experience as an Actian intern has been nothing short of amazing because he has not only had the opportunity to grow professionally through the guidance of supervisors and the challenges he faced, but also to learn in a positive and friendly environment. Jon is looking forward to learning and experiencing even more of what Actian offers, and having a good time along the way.

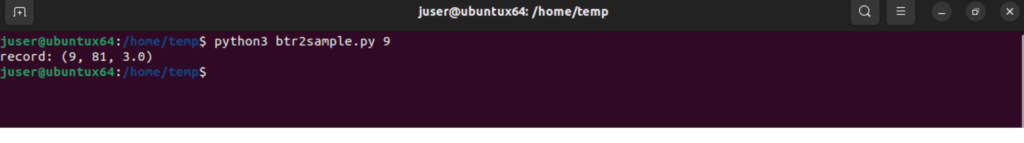

Davis Palmer (He/Him)

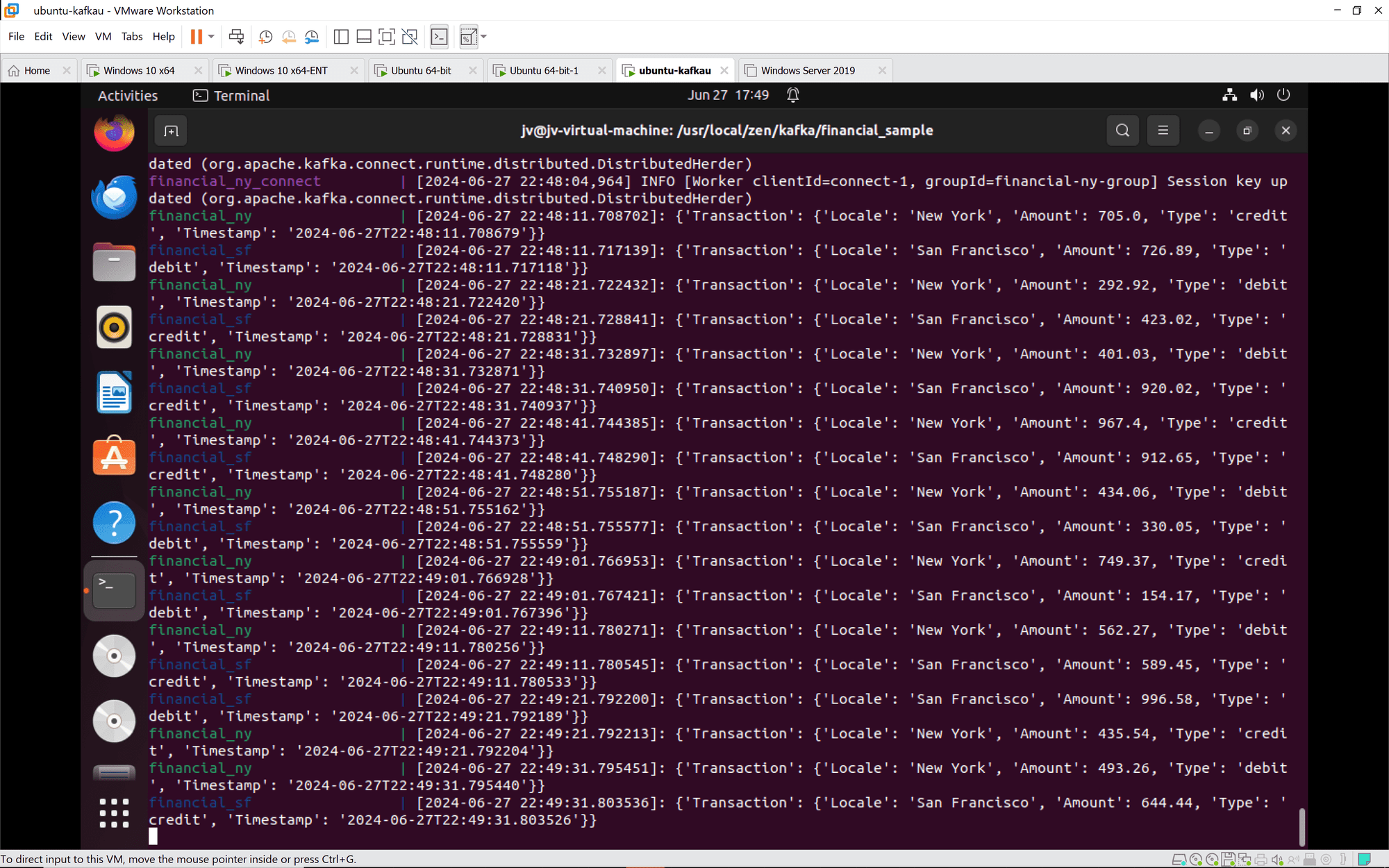

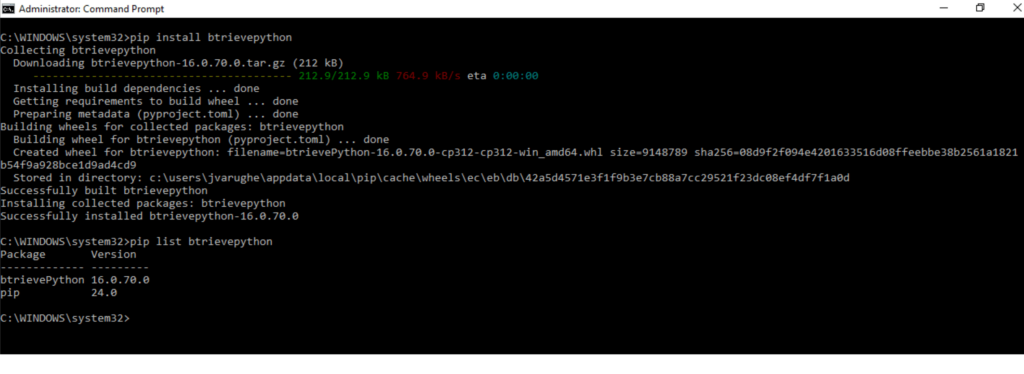

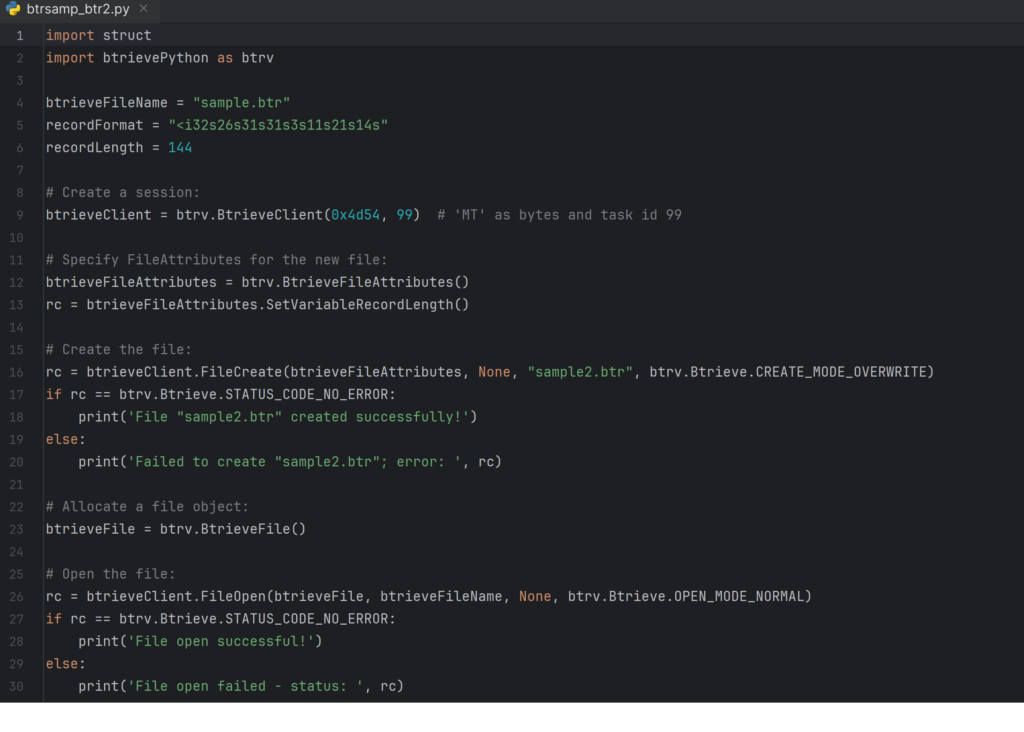

Engineering Intern, Zen Hardware

Davis is double majoring in Mechanical Engineering and Applied Mathematics. He’s also earning a minor in Computer Science at Texas A&M University.

His capstone project consists of designing and constructing a smart building with a variety of IoT devices with the Actian Zen team. He “absolutely loves” the work he has been doing and all the people he has interacted with. Davis is looking forward to all of the intern events for the rest of the summer.

Matthew Jackson (He/Him)

Engineering Intern, Zen Hardware

Matthew is working with the Actian Zen team. He grew up only a few miles from Actian’s office in Round Rock, Texas. Going into his junior year at Colorado School of Mines in Golden, Colorado, he’s working on two majors: Computer Science with a focus on Data Science, and Electrical Engineering with a focus on Information & Systems Sciences (ISS).

Outside of school, he plays a bit of jazz and other genres as a keyboardist and trumpeter. He is a huge fan of winter sports like hockey, skiing, and snowboarding. This summer at Actian, he is working alongside Davis Palmer, another hardware engineering intern for Actian Zen, to build a smart model office building to act as a tech demo for Zen databases. His part of the project covers all the high-level development, which includes web development, projects with facial recognition AI, and other tasks at that level of abstraction. He is super interested in the project assigned to him and is excited to see where it goes.

Fedor Gromov

Development Vector Intern

Fedor is from Russia and works at the Actian office in Germany. He is studying Computer Science in a master’s program at Constructor University in Bremen. He’s working with a microservices team on adding ONNX microservice support. His current hobby is bouldering.

Katie Keith (She/Her)

Employee Experience Intern

Katie is from Vail, Colorado, and an upcoming senior at Loyola University in Chicago. She is receiving her BBA in Finance with a minor in Psychology. For her capstone project, she is working with the Employee Experience team to put together a Pilot Orientation Program for the new go-to-market strategy employees.

She has really enjoyed Actian’s company culture and getting to learn from her team. Katie is looking forward to cheering on her fellow interns during their capstone presentations at the completion of the internship program. In her free time, she enjoys seeing stage productions and reading. She is super thankful to be part of the Actian team!
