On-Premises vs. Cloud Data Warehouses: 7 Key Differences

Actian Corporation

July 31, 2024

In the constantly evolving landscape of data management, businesses face a critical decision: whether to choose an on-premises or a cloud data warehouse. This decision affects everything from scalability and cost to security and performance.

Understanding deployment options is crucial for data analysts, IT managers, and business leaders looking to optimize their data strategies. At a basic level, stakeholders need to understand how on-premises and cloud data warehouses are different—and why those differences matter.

Detailed knowledge of the advantages and disadvantages of each option allows data-driven organizations to make informed buying decisions based on their strategic goals and operational needs. For example, decision-makers often consider factors such as:

- Control over the data environment.

- Security and compliance needs.

- The ability to customize and scale.

- Capital expenditure vs. operational expense.

- Maintenance and management of the data warehouse.

The pros and cons, along with their potential impact on data management and usage, should be considered when implementing or expanding a data warehouse. It’s also important to consider future needs to ensure the data warehouse meets current, emerging, and long-term data requirements.

Location is the Biggest—But Not the Only—Differentiator

On-premises data warehouses have supported enterprises since the 1980s. Early versions could integrate and store large data volumes and run analytical queries.

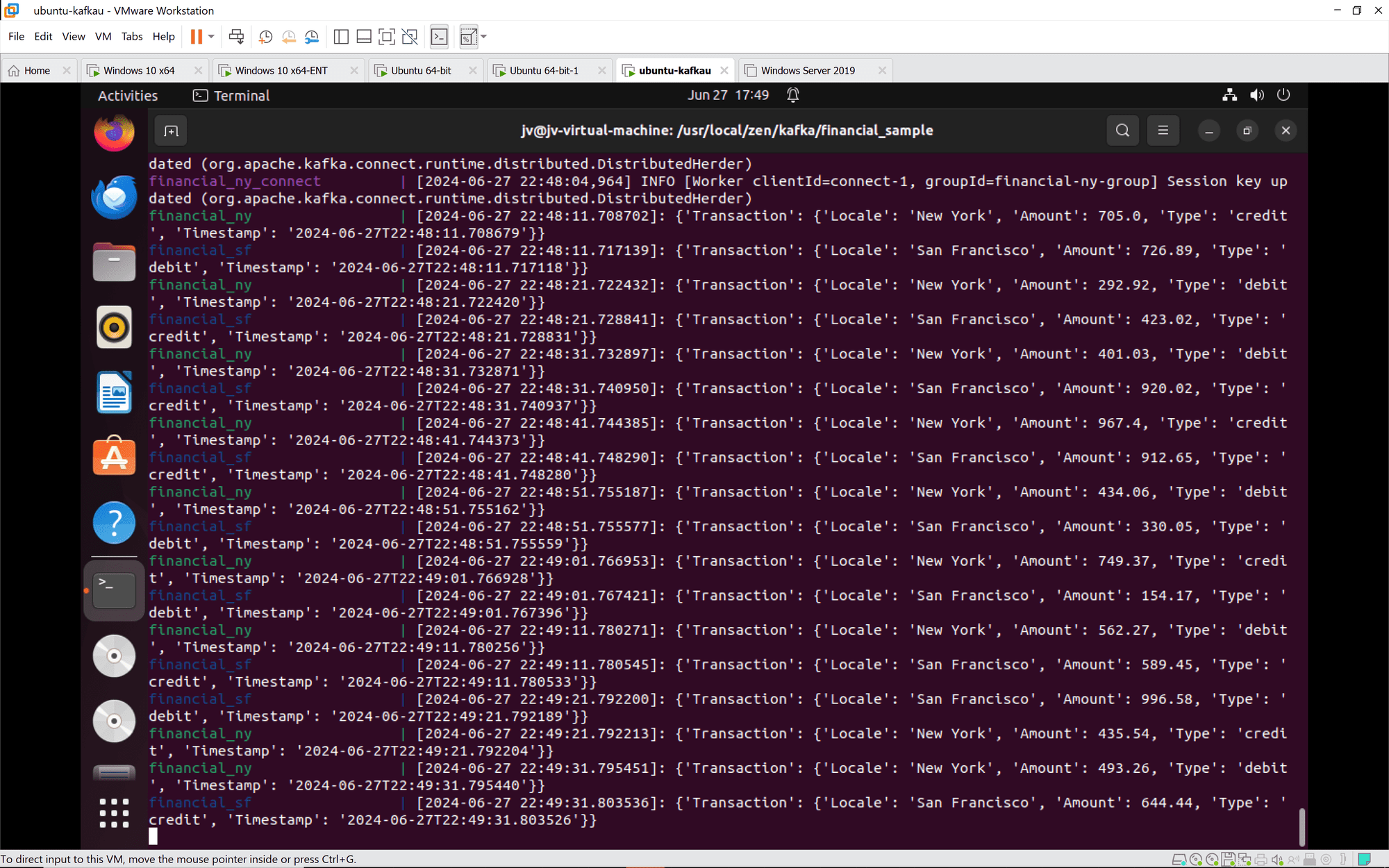

By the 2010s, as organizations became more data-driven, data volumes exploded (popularizing the term “big data”), and storage, processing power, and analytics speed all advanced. The data warehouse, usually residing in on-premises environments, became a mainstay for innovative businesses. During the same period, the public cloud gained popularity, and cloud data storage, including cloud-based data warehouses, became available.

The biggest difference between an on-premises data warehouse and a cloud version is where the infrastructure is hosted and managed. An on-premises data warehouse runs on infrastructure physically located within the organization’s facilities, whereas a cloud data warehouse runs on infrastructure in a hyperscaler’s environment.

With on-premises data warehouses, the company is responsible for purchasing, setting up, and maintaining the hardware and software—which requires the proper skillset and resources to perform effectively. With a cloud data warehouse, the infrastructure is hosted by a cloud service provider. The provider manages the hardware and software, including maintenance, updates, and scaling. Here’s a look at other key differences.

7 Primary Differences and Their Business Impact

The fundamental difference in where the infrastructure is located has several implications:

Overall Cost Structure

On-premises data warehouses typically require a significant upfront capital expenditure for hardware and software. This is in addition to ongoing costs for maintenance, upgrades, power, and cooling.

Cloud solutions operate on a subscription or pay-as-you-go model, which replaces large capital expenditures with operational expenses. Having cloud service providers handle routine maintenance, backups, and disaster recovery can reduce the operational burden on an organization’s internal IT teams. The cloud option can ultimately be more cost-effective, particularly for stable, predictable workloads whose usage-based charges are unlikely to produce unexpected bills.
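To make the capital-versus-operational-expense trade-off concrete, here is a minimal Python sketch that compares cumulative spending under the two models and finds the first month in which an upfront on-premises investment costs no more overall than a cloud subscription. All figures and function names are hypothetical placeholders, not real vendor pricing, and the model deliberately ignores hardware refresh cycles, staffing, discounts, and month-to-month variation in cloud usage.

```python
# Hypothetical cost model: every number used with these functions is an
# illustrative assumption, not real vendor pricing.
from typing import Optional


def cumulative_on_prem(months: int, capex: float, monthly_opex: float) -> float:
    """Upfront hardware/software purchase plus ongoing maintenance, power, and cooling."""
    return capex + monthly_opex * months


def cumulative_cloud(months: int, monthly_subscription: float) -> float:
    """Pay-as-you-go: no upfront purchase, only a recurring operational expense."""
    return monthly_subscription * months


def break_even_month(
    capex: float,
    monthly_opex: float,
    monthly_subscription: float,
    horizon_months: int = 120,
) -> Optional[int]:
    """First month in which cumulative on-prem cost is at or below cumulative cloud cost.

    Returns None if that never happens within the planning horizon.
    """
    for month in range(1, horizon_months + 1):
        on_prem = cumulative_on_prem(month, capex, monthly_opex)
        cloud = cumulative_cloud(month, monthly_subscription)
        if on_prem <= cloud:
            return month
    return None


if __name__ == "__main__":
    # Placeholder inputs: $500k upfront, $10k/month to run on-prem, $25k/month in the cloud.
    print(break_even_month(500_000, 10_000, 25_000))  # 34
```

With these placeholder inputs, the cloud option has the lower cumulative cost for the first 33 months, and on-premises catches up in month 34. If the monthly subscription is no higher than the on-premises running costs, the function returns `None` because the upfront investment is never recovered.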

Scalability

Scaling an on-premises data warehouse can be complex and time-intensive, often requiring the organization to install additional hardware. Cloud data warehouses offer elastic, on-demand scalability, allowing organizations to scale up or down quickly based on demand. This is one of the primary benefits of the cloud option.

Deployment and Management

With an on-premises data warehouse, deployment can be time-consuming, involving physical setup and extensive configuration that can take weeks or months. Managing the data warehouse also requires specialized IT staff to handle day-to-day operations, security, and troubleshooting.

The cloud speeds up deployment, often requiring just a few clicks to provision resources. The cloud provider largely handles management, freeing up internal IT staff for other tasks. Because cloud data warehouses can be up and running quickly, organizations can start deriving value sooner.

Control and Customization

Operating the data warehouse on-premises gives organizations complete control over their data and infrastructure, along with extensive options for customization to meet specific business and data needs.

One trade-off with cloud solutions is that they do not offer the same level of control and customization as on-premises infrastructure. As a result, organizations may face limitations when fine-tuning specific configurations and ensuring complete data sovereignty in the cloud.

Flexibility to Meet Workloads

An on-premises data warehouse is typically limited by its physical infrastructure and the capacity that was initially implemented, unless the environment is expanded. Upgrades and changes can be cumbersome and slow. A cloud-based data warehouse, by contrast, allows quick adjustments to compute and storage resources to meet changing workload demands.
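The capacity limit described above can be illustrated with a small Python sketch that contrasts a fixed-size on-premises system with an idealized elastic one. It assumes hypothetical monthly demand in arbitrary capacity units and assumes the elastic side can be resized every month in fixed increments (the hypothetical `step` parameter); real cloud services have their own scaling units, limits, and delays.

```python
# Idealized comparison of fixed vs. elastic capacity. Demand figures and the
# scaling increment are hypothetical, in arbitrary "capacity units" per month.
from typing import List, Tuple


def fixed_capacity_usage(demand: List[int], capacity: int) -> Tuple[int, int]:
    """Return (unmet demand, idle capacity) summed over all months for a fixed-size system."""
    unmet = sum(max(d - capacity, 0) for d in demand)
    idle = sum(max(capacity - d, 0) for d in demand)
    return unmet, idle


def elastic_usage(demand: List[int], step: int) -> Tuple[int, int]:
    """Return (unmet demand, idle capacity) when capacity is resized each month.

    Capacity is rounded up to the next multiple of `step`, so demand is always met
    and idle capacity is limited to the rounding slack.
    """
    unmet = 0  # provisioning always covers demand in this idealized model
    idle = 0
    for d in demand:
        provisioned = -(-d // step) * step  # integer ceiling to a multiple of step
        idle += provisioned - d
    return unmet, idle


if __name__ == "__main__":
    monthly_demand = [40, 55, 90, 120, 70]
    print(fixed_capacity_usage(monthly_demand, capacity=100))  # (20, 145)
    print(elastic_usage(monthly_demand, step=10))  # (0, 5)
```

Sizing the fixed system at 100 units leaves 20 units of demand unserved in the peak month and 145 units idle across the quieter months, while the elastic model meets every month’s demand with only 5 units of rounding slack. Sizing the fixed system for the peak would remove the shortfall but increase idle capacity, which is the trade-off the paragraph above describes.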

Security and Compliance

Security is managed internally with on-premises solutions, giving organizations full control, but also full responsibility. Compliance with changing industry regulations may require significant effort and resources to stay current. At the same time, organizations in industries such as finance and healthcare, where data privacy and security are paramount, may want to keep data on-prem for security reasons.

With cloud data warehouses, security and compliance of the physical hardware are managed by the cloud service provider, which often holds security certifications. However, under the shared responsibility model, organizations must choose a provider that meets their specific compliance requirements and have internal staff knowledgeable enough about cloud configuration to ensure the infrastructure is configured correctly.

Performance and Latency

Performance and latency are critical factors for data warehousing, especially when seconds, or even milliseconds, matter. On-premises solutions are known for high performance because of their dedicated resources, and latency is minimized because data processing occurs locally. Cloud solutions may see higher latency, for example when data must travel over the network between on-premises systems and the cloud, but they benefit from the continuous optimization and upgrades provided by cloud vendors.

Make Informed Buying Decisions With Confidence

When deciding between on-premises and cloud data warehouses, organizations should consider specific requirements for current and future usage. Considerations include:

- Data Volume and Growth Projections. Cloud solutions are better suited for businesses expecting rapid data growth because they offer immediate scalability.

- Regulatory and Compliance Needs. On-premises solutions may be beneficial for organizations with strict compliance requirements because they offer complete control over data security, access, and compliance measures. This helps ensure that sensitive information is handled according to specific regulatory standards.

- Budget and Financial Considerations. Cloud solutions offer lower initial costs and financial flexibility, which is beneficial for organizations with limited capital.

- Business Agility. The cloud’s ability to rapidly scale and deploy resources makes it a good option for organizations that prioritize agility. Scalability allows them to respond swiftly to market changes, efficiently manage workloads, and accelerate the development and deployment of new applications and services.

- Performance Requirements. On-premises solutions may be preferred by businesses needing high performance and low latency for workloads. Due to the proximity of data storage and computing resources, along with dedicated hardware, on-prem data warehouses can offer a performance advantage, although it’s important to note that cloud versions can offer real-time insights, too.

Consider Both Approaches With a Hybrid Solution

Choosing between on-premises and cloud data warehouses involves weighing the benefits and trade-offs of each option. Although the primary difference is the location and management of the infrastructure, that distinction cascades into myriad other factors, such as costs, scalability, flexibility, and security.

By understanding key differences, data professionals, IT managers, and business decision-makers can make informed choices that align with their strategic goals. Organizations can ensure optimal data management and business success while having complete confidence in their data outcomes.

Organizations that want the benefits of both on-prem and cloud data warehouses can take a hybrid approach. A hybrid cloud data warehouse combines the scalability and flexibility of cloud with the control and security of on-premises solutions, enabling organizations to efficiently manage and analyze large volumes of data. This approach allows for seamless data integration and optimizes costs by utilizing current on-premises investments while benefiting from the scalability and flexibility offered by the cloud.

What does the future of data warehousing look like? Visit the Actian Academy for a look at where data warehousing began and where it is today.
