Summary

This blog explains why strong data quality is essential within a data governance framework, detailing how establishing standards and processes for accuracy, consistency, and monitoring ensures reliable, compliant, and actionable data across the organization.

- Core dimensions define trusted data – Data quality relies on metrics like accuracy, completeness, consistency, timeliness, conformance, uniqueness, and usability, each requiring governance policies and validation processes to maintain trust.

- Governance tools and automation streamline quality – Automated profiling, validation, and cleansing integrate with governance frameworks to proactively surface anomalies, reduce manual rework, and free up teams for strategic initiatives.

- Governance + quality = AI-ready, compliant data – Combining clear standards, metadata management, and continuous monitoring ensures data is reliable, compliant, and fit for advanced analytics like AI and ML.

Informed decisions depend on the quality and reliability of the underlying data. As organizations work to extract more value from their data assets, the interplay between data quality and data governance has become critical. Managed together, these two pillars of data management can surface better insights, improve operational efficiency, and position enterprises for sustained success.

Understanding Data Quality

At the heart of any data-driven initiative lies the need for accurate, complete, and timely information. Data quality encompasses the set of attributes that determine whether data is trustworthy and fit for its intended purpose. A robust data quality framework protects data integrity and consistency, minimizes errors, and is essential for realizing the value of an organization’s data assets.

Organizations can automate data profiling, validation, and standardization by leveraging advanced data quality tools. This improves the overall quality of the information and streamlines data management processes, freeing up valuable resources for strategic initiatives.

How Data Quality Relates to Data Governance

Data quality is a fundamental pillar of data governance, ensuring that data is accurate, complete, consistent, and reliable for business use. A strong data governance framework establishes policies, processes, and accountability to maintain high data quality across an organization. This includes defining data standards, validation rules, monitoring processes, and data cleansing techniques to prevent errors, redundancies, and inconsistencies.

Without proper governance, data quality issues such as inaccuracies, duplicates, and inconsistencies can lead to poor decision-making, compliance risks, and inefficiencies. By integrating data quality management into data governance, organizations can ensure that their data remains trustworthy, well-structured, and optimized for analytics, reporting, and operational success.

The Key Dimensions of Data Quality in Data Governance

Effective data governance hinges on understanding and addressing the critical dimensions of data quality. These dimensions guide how organizations define, manage, and maintain data to ensure it is useful, accurate, and accessible. Below are the essential aspects of data quality that should be considered when creating a data governance strategy:

- Accuracy: Data must accurately reflect the real-world entities it represents. Inaccurate data leads to faulty conclusions, making it crucial for governance policies to verify and maintain correctness throughout the data lifecycle.

- Completeness: Data should capture all necessary attributes required for decision-making. Missing or incomplete information can compromise insights and analyses, so governance practices should ensure comprehensive data coverage across all relevant systems and processes.

- Consistency: Data needs to be presented in a uniform way across various platforms and departments. Inconsistent data can create confusion and hinder integration, which is why governance should enforce standards for formatting and data structures.

- Timeliness: The value of data diminishes over time, so it must be up to date and relevant for current analysis. Governance efforts should define how fresh each dataset needs to be, using real-time updates where required and scheduled refreshes elsewhere.

- Conformance: Data should comply with predefined syntax rules and meet specific business logic requirements. Without conformance, data could lead to process errors, so governance should focus on maintaining compliance with validation rules and predefined formats.

- Uniqueness: Each real-world entity should be represented by a single record, with no duplicate entries. A strong data governance framework establishes processes to detect and prevent duplication that could skew analytics.

- Usability: Data must be easily accessible, understandable, and actionable for users. Governance frameworks should prioritize user-friendly interfaces, clear documentation, and efficient data retrieval systems to ensure that data is not only accurate but also usable for business needs.

Addressing these key dimensions through a comprehensive data governance framework helps organizations maintain high-quality data that is reliable, consistent, and actionable, ensuring that data becomes a strategic asset for informed decision-making.
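Several of these dimensions can be expressed as simple ratios that a governance team tracks over time. The following is a minimal sketch, not a prescribed method: the sample records, field names, email pattern, and 30-day freshness window are all hypothetical and chosen only for illustration.

```python
from datetime import date
import re

# Hypothetical customer records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "updated": date(2024, 5, 2)},
    {"id": 2, "email": "bob@example", "updated": date(2023, 1, 15)},
    {"id": 4, "email": "cy@example.com", "updated": date(2024, 4, 28)},
]

# Deliberately simple syntax rule (an assumption, not a full email validator).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where the field is populated."""
    return sum(r[field] is not None for r in rows) / len(rows)


def uniqueness(rows, key):
    """Ratio of distinct key values to total rows (1.0 means no duplicates)."""
    return len({r[key] for r in rows}) / len(rows)


def conformance(rows, field, pattern):
    """Share of populated values that match the expected syntax."""
    values = [r[field] for r in rows if r[field] is not None]
    return sum(bool(pattern.match(v)) for v in values) / len(values)


def timeliness(rows, field, as_of, max_age_days):
    """Share of rows refreshed within the allowed age window."""
    return sum((as_of - r[field]).days <= max_age_days for r in rows) / len(rows)


scores = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(id)": uniqueness(records, "id"),
    "conformance(email)": conformance(records, "email", EMAIL_PATTERN),
    "timeliness(updated)": timeliness(records, "updated", date(2024, 5, 3), 30),
}

for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

Accuracy and usability are harder to score this way, since they usually require comparison against a trusted reference source or feedback from data consumers.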

How to Achieve Data Quality in Data Governance

Achieving high data quality within a data governance framework is essential for making informed, reliable decisions and maintaining compliance. It involves implementing structured processes, tools, and roles to ensure that data is accurate, consistent, and accessible across the organization.

Let’s explore key strategies for ensuring data quality, such as defining standards, using data profiling techniques, and setting up monitoring and validation processes.

Define Clear Standards

One of the most effective strategies for ensuring data quality is to define clear standards for how data should be structured, processed, and maintained. Data standards establish consistent rules and guidelines that govern everything from data formats and definitions to data collection and entry processes. These standards help eliminate discrepancies and ensure that data across the organization is uniform and can be easily integrated for analysis.

For instance, organizations can set standards for data accuracy, defining acceptable levels of error, or for data completeness, specifying which fields must always be populated. Additionally, creating data dictionaries or data catalogs allows teams to agree on terminology and definitions, ensuring everyone uses the same language when working with data. By defining these standards early in the data governance process, organizations create a solid foundation for maintaining high-quality, consistent data that can be relied upon for decision-making and reporting.
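One way to make such standards enforceable is to record them in a machine-readable form that checks can run against. The sketch below assumes a hypothetical `DataStandard` structure; the dataset name, required fields, 2% threshold, and dictionary entry are illustrative values, not recommendations.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataStandard:
    """Hypothetical container for one dataset's agreed standards."""
    dataset: str
    required_fields: tuple   # fields that must always be populated
    max_error_rate: float    # acceptable share of records failing the check
    definitions: dict = field(default_factory=dict)  # data dictionary entries


CUSTOMER_STANDARD = DataStandard(
    dataset="customers",
    required_fields=("customer_id", "email"),
    max_error_rate=0.02,
    definitions={"customer_id": "Unique identifier assigned at account creation"},
)


def missing_required(record, standard):
    """Return the required fields that are absent or empty in a record."""
    return [name for name in standard.required_fields
            if record.get(name) in (None, "")]


def meets_standard(records, standard):
    """True when the share of incomplete records stays within the threshold."""
    if not records:
        return True
    failures = sum(bool(missing_required(r, standard)) for r in records)
    return failures / len(records) <= standard.max_error_rate
```

Keeping the thresholds and definitions in one declared object means the same standard can drive documentation, validation, and reporting, rather than being restated in each tool.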

Profile Data With Precision

Before quality rules can be applied, teams need to understand the underlying data structures and patterns. Automated data profiling tools, such as those offered by Actian, let organizations analyze their data quickly, uncovering potential quality issues and identifying areas for improvement. Using pattern recognition, these solutions help businesses tailor data quality rules to their specific requirements so that data meets the defined standards.
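To illustrate what a profiling pass typically reports, here is a minimal sketch in plain Python. It is not how Actian's or any other vendor's tooling works; the function names and sample rows are hypothetical, and a real profiler would also examine value distributions, formats, and cross-column relationships.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: null rate, distinct count, types, top values."""
    non_null = [v for v in values if v is not None]
    return {
        "count": len(values),
        "null_rate": (len(values) - len(non_null)) / len(values) if values else 0.0,
        "distinct": len(set(non_null)),
        "types": dict(Counter(type(v).__name__ for v in non_null)),
        "top_values": Counter(non_null).most_common(3),
    }


def profile_table(rows):
    """Profile every column that appears in a list of dict records."""
    columns = sorted({key for row in rows for key in row})
    return {col: profile_column([row.get(col) for row in rows]) for col in columns}


# Hypothetical sample with a casing inconsistency, a null, and a mixed-type column.
rows = [
    {"country": "US", "age": 34},
    {"country": "us", "age": "41"},
    {"country": None, "age": 29},
    {"country": "US", "age": 34},
]

report = profile_table(rows)
for column, stats in report.items():
    print(column, stats)
```

In this sample, the profile would flag `age` as holding both integers and strings and `country` as containing inconsistent casing, which are the kinds of findings that feed into validation rules.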

Validate and Standardize Data

With a clear picture of the data's current state, the next step is implementing robust data validation and standardization processes. Data quality solutions provide tools to cleanse, standardize, and deduplicate data so that information is consistent, accurate, and ready for analysis. By integrating these capabilities, organizations can improve their insights and make more informed decisions.
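The cleanse, standardize, and deduplicate steps can be sketched as a small pipeline. This is an illustrative example under stated assumptions: the email pattern, the country alias table, and the choice of `email` as the deduplication key are all hypothetical, and production tools usually apply fuzzier matching.

```python
import re

# Illustrative rules only; a real deployment would take these from agreed standards.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
COUNTRY_ALIASES = {"usa": "US", "united states": "US", "us": "US",
                   "germany": "DE", "de": "DE"}


def standardize(record):
    """Trim and lowercase emails; map country names to two-letter codes."""
    cleaned = dict(record)
    cleaned["email"] = (record.get("email") or "").strip().lower()
    country = (record.get("country") or "").strip()
    cleaned["country"] = COUNTRY_ALIASES.get(country.lower(), country.upper())
    return cleaned


def is_valid(record):
    """Check the standardized record against simple syntax rules."""
    return bool(EMAIL_PATTERN.match(record["email"])) and len(record["country"]) == 2


def deduplicate(records, key="email"):
    """Keep the first record seen for each key value."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def clean(records):
    """Standardize, split valid from rejected, then deduplicate the valid set."""
    standardized = [standardize(r) for r in records]
    valid = [r for r in standardized if is_valid(r)]
    rejected = [r for r in standardized if not is_valid(r)]
    return deduplicate(valid), rejected


raw = [
    {"email": " Ana@Example.com ", "country": "usa"},
    {"email": "ana@example.com", "country": "US"},
    {"email": "not-an-email", "country": "Germany"},
]
accepted, rejected = clean(raw)
```

Standardizing before deduplicating matters here: the first two records only become recognizable as duplicates once casing and whitespace have been normalized. Rejected records are returned rather than dropped so that data stewards can review them.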

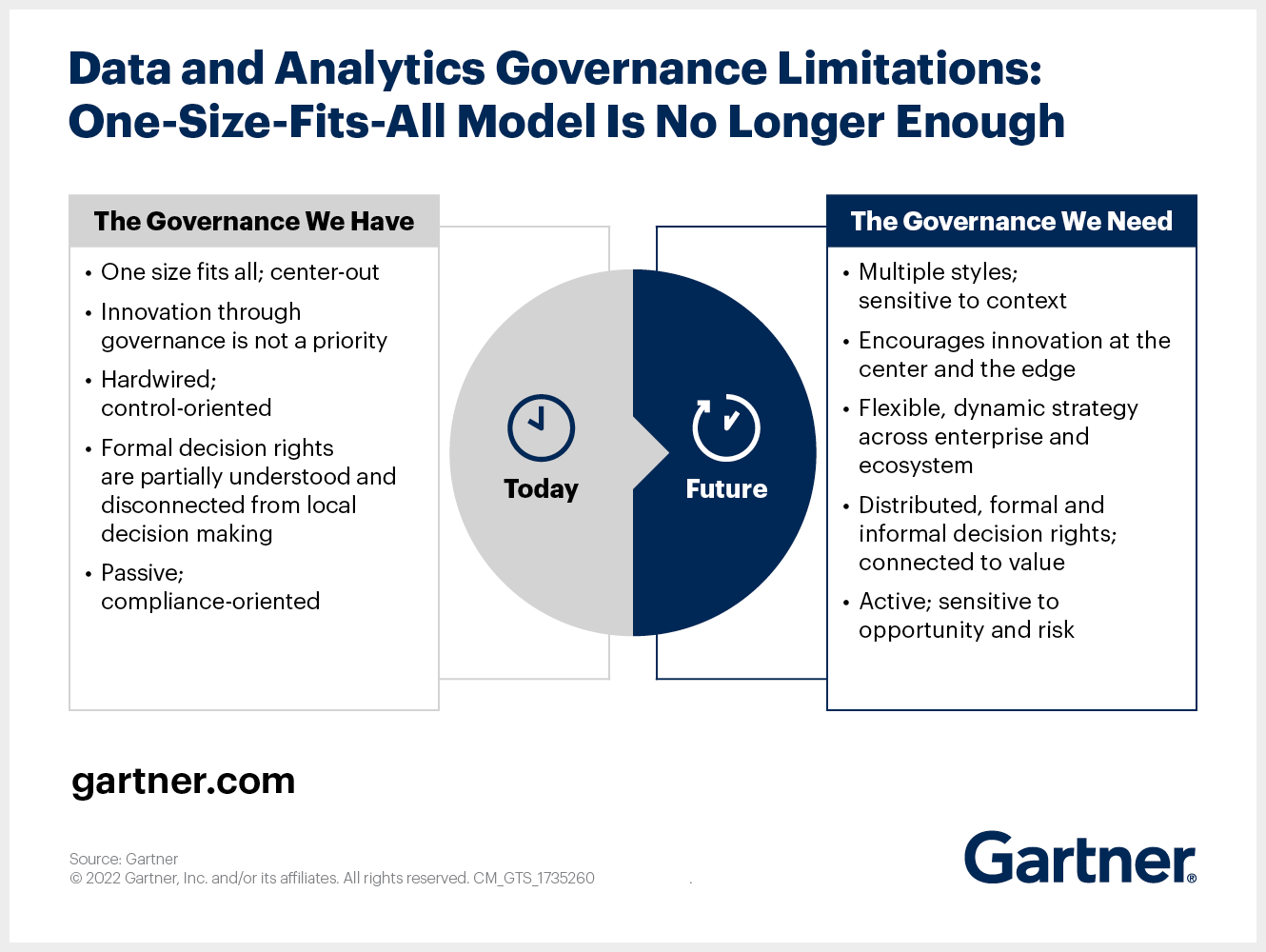

The Importance of Data Governance

While data quality is the foundation for reliable and trustworthy information, data governance provides the overarching framework to ensure that data is effectively managed, secured, and leveraged across the enterprise. Data governance encompasses a range of policies, processes, and technologies that enable organizations to define data ownership, establish data-related roles and responsibilities, and enforce data-related controls and compliance.

Unlocking the Power of Metadata Management

Metadata management is central to effective data governance. Solutions like the Actian Data Intelligence Platform provide a centralized hub for cataloging, organizing, and managing metadata across an organization’s data ecosystem. These platforms enable enterprises to create a comprehensive, 360-degree view of their data assets and associated relationships by connecting to a wide range of data sources and leveraging advanced knowledge graph technologies.

Driving Compliance and Risk Mitigation

Data governance is critical to ensuring compliance with industry standards and data privacy regulations. Robust data governance frameworks, underpinned by strong metadata management capabilities, help organizations implement effective data controls, monitor data usage, and reduce the risk of data breaches and non-compliance.

The Synergistic Relationship Between Data Quality and Data Governance

While data quality and data governance are distinct disciplines, they are interdependent. Robust data quality underpins the effectiveness of data governance: policies, processes, and controls only yield reliable, trustworthy information when the data they are applied to is sound. Conversely, a strong data governance framework helps to maintain and continuously improve data quality, creating a virtuous cycle.

Organizations can streamline data discovery and access by integrating data quality and governance. Coupled with data quality assurance, this approach ensures that users can find trusted data and use it to make informed decisions and drive business success.

Why Data Quality Matters in Data Governance

As organizations embrace transformative technologies like artificial intelligence (AI) and machine learning (ML), the need for reliable, high-quality data becomes even more pronounced. Data governance and data quality work in tandem to ensure that the data feeding these advanced analytics solutions is accurate, complete, and fit-for-purpose, unlocking the full potential of these emerging technologies to drive strategic business outcomes.

In the age of data-driven transformation, the synergistic relationship between data quality and data governance is a crucial competitive advantage. By seamlessly integrating these two pillars of data management, organizations can unlock unprecedented insights, enhance operational efficiency, and position themselves for long-term success.

About Traci Curran

Traci Curran is Director of Product Marketing at Actian, focusing on the Actian Data Platform. With 20+ years in tech marketing, Traci has led launches at startups and established enterprises like CloudBolt Software. She specializes in communicating how digital transformation and cloud technologies drive competitive advantage. Traci's articles on the Actian blog demonstrate how to leverage the Data Platform for agile innovation. Explore her posts to accelerate your data initiatives.