Part Two: Rethinking What Client-Server Means for Edge Data Management

Over the past few weeks, our SQLite blog series has considered the performance deficiencies of SQLite when handling local persistent data and looked at the performance complications created by the need for ETL when sharing SQLite data with back-end databases. In our last installment, “Mobile may be IoT but IoT is not Mobile,” we started to understand why the SQLite serverless architecture doesn’t serve IoT environments very well. SQLite became the most popular database on the planet because it was inexpensive (read: free) and seemingly sufficient for the single-user embedded applications emerging on mobile smartphones and tablets.

That was yesterday. Tomorrow is a very different story.

The IoT is expanding at an explosive rate, and what’s happening at the edge—in terms of applications, analytics, processing demands, and throughput—will make the world of single-user SQLite deployments seem quaint. As we’ll see in this and the next installment of this blog, the data requirements for modern edge use cases lie far outside SQLite’s wheelhouse.

SQLite Design-Ins for the IoT: Putting the Wrong Foot Forward

As we’ve noted, SQLite is based on an elegant but simple B-tree architecture. It can store any type of data, is implemented in C, and has a very small footprint—a few hundred KBs—which makes it portable to virtually any environment with minimal resourcing. And while it’s not fully ANSI-standard SQL, it’s close enough for horseshoes, hand grenades, and mobile applications.

For all these reasons, and because it has been used ubiquitously as mobile devices have proliferated over the past decade, IoT developers naturally adopted SQLite into many early IoT applications. These early design-ins were almost mirror images of mobile applications (minus the need for much effort at the presentation layer). Data was captured and cached on the device, with the expectation that it would be moved to the cloud for data processing and analytics.

But that expectation was simply an extrapolation of the mobile world that we knew, and it was shortsighted. It didn’t consider how much processing power could be packed into an ever-smaller CPU package nor where those packages might end up. It didn’t envision the edge as a locus for analytics (wasn’t that the domain of the cloud and the data center?). It didn’t envision the true power of AI and ML and the role those would soon begin to play throughout the IoT. And it didn’t count on the sheer volume of data that would soon be washing through the networks like a virtual tsunami.

Have you been to an IoT trade show recently? Three to five years ago, many of the sessions described PoCs and small pilots in which all data was sent up into the cloud. Engineers and developers we spoke to on the trade show floor expressed skepticism about the need for anything more than SQLite. Some even questioned the need for a database at all (let alone databases that were consistent across clients and servers). In the last three years, though, the common theme of the sessions has changed. They began to center on scaling up pilots to full production and infusing ML routines into local devices and gateways. The conversations started to consider more robust local data management needs. Discussions, in hushed tones at first, about client-server configurations (OMG!) began to appear. The realization that the IoT is not the same as mobile was beginning to sink in.

Rethinking Square Pegs and Round Holes

Of course, the rationale for not using a client-server database in an IoT environment (or, for that matter, any embedded environment) made perfect sense, as long as the client-server model you were eschewing was the enterprise client-server model that had been in use since the ’80s. In that client-server paradigm, databases were designed for the data center. They were built to run on big iron and to support enterprise applications like ERP, with tens, hundreds, even thousands of concurrent users interacting from barely sentient machines. Collect these databases, add in sophisticated management overlays, an army of DBAs, maybe an outside systems integrator, and steep them in millions of dollars of investment monies, and soon you’ve got yourself a nice little enterprise data warehouse.

That’s not something you’re going to squeeze into an embedded application. Square peg, round hole. And that explains why developers and line-of-business technical staff tended to announce that they had pressing business elsewhere whenever the words “client-server” began to pop up in conversations about the IoT. The use cases emerging in what we began to think of as the IoT were not human end-user centric. Unless someone were prototyping or doing some sort of test and maintenance on a device or gateway or some complex instrumentation, little or no ad hoc querying was taking place. Client-server was serious overkill.

In short, given a very limited set of use cases, limited budgets, and an awareness of the cost and complexity of traditional client-server database environments, relying on SQLite made perfect sense.

Reimagining Client-Server With the IoT in Mind

The dynamics of modern edge data management demand that we reframe our notions of client-server, for the demands of the IoT differ from those of distributed computing as envisioned in the ’80s. The old client-server paradigm involved a lot of ad hoc database interaction, both directly for ad hoc queries and indirectly through applications that involved human end users. In IoT use cases, data access is more prescribed, often repeated and event-driven; you know exactly which data needs to be accessed, as well as when (or at least under which circumstances) an event will generate the request.
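To picture what “prescribed” access looks like in practice, here is a minimal Python sketch. Everything in it is hypothetical and exists only for illustration: the sensor names, the event names, the in-memory `READINGS` dictionary standing in for a local edge database, and the `on_event` and `handle` helpers. The point is only that each event type is bound in advance to exactly one data access, so nothing is left to ad hoc querying.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory readings, standing in for a local edge database.
READINGS: Dict[str, List[float]] = {
    "boiler_temp": [71.5, 72.0, 88.5],
    "line_pressure": [2.0, 2.5, 3.0],
}

# Each event type is bound ahead of time to exactly one data access.
ACCESS_PLAN: Dict[str, Callable[[str], float]] = {}


def on_event(event_type: str):
    """Register the single, predefined access that an event triggers."""
    def register(func: Callable[[str], float]) -> Callable[[str], float]:
        ACCESS_PLAN[event_type] = func
        return func
    return register


@on_event("threshold_exceeded")
def latest_reading(sensor: str) -> float:
    # An alert only ever needs the most recent value.
    return READINGS[sensor][-1]


@on_event("hourly_rollup")
def mean_reading(sensor: str) -> float:
    # A rollup only ever needs the average of the stored values.
    values = READINGS[sensor]
    return sum(values) / len(values)


def handle(event_type: str, sensor: str) -> float:
    """Run the access registered for this event; reject unknown events."""
    if event_type not in ACCESS_PLAN:
        raise KeyError(f"no data access defined for event {event_type!r}")
    return ACCESS_PLAN[event_type](sensor)


alert_value = handle("threshold_exceeded", "boiler_temp")
rollup_value = handle("hourly_rollup", "line_pressure")
print(alert_value, rollup_value)
```

An event that was never planned for raises an error instead of triggering an improvised query, which is the opposite of the human-driven, ad hoc pattern of the old paradigm.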

Similarly, in a given IoT use case there are no unknowns about how many applications are running on a device or about how many external devices will be requesting data from (or sending data to) an application and its database pairing (and here, whether the database is embedded or standalone doesn’t really matter). While these numbers vary among use cases and deployments, a virtual team of developers, systems integrators, product managers, and others will design structure, repeatability, and visibility into the system, even if it’s stateless (and more so if it’s stateful).

In the modern IoT space, client-server database requirements are more like well-defined publish and subscribe relationships (post by publisher/read by subscriber and access from publisher/write to subscriber). They operate as automated machine-to-machine relationships, in which publishing/broadcasting and parallel multichannel intake activities often take place concurrently. Indeed, client-server in the IoT is like publish-subscribe—except that everything needs to perform both operations, and most complex devices (including gateways and intelligent equipment) will need to be able to perform both operations not just simultaneously but also across parallel channels.
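As a rough illustration of that two-way relationship, the sketch below models two devices that each publish and subscribe at the same time. It is a toy built on Python’s standard `threading` and `queue` modules, not a description of any particular product or protocol; the `EdgeNode` class and the `gateway` and `pump` names are made up for the example.

```python
import queue
import threading


class EdgeNode:
    """Hypothetical device that is both a publisher and a subscriber."""

    def __init__(self, name):
        self.name = name
        self.inbox = queue.Queue()  # intake channel for incoming messages
        self.subscribers = []       # nodes that receive what this node publishes
        self.received = []          # messages this node has read so far

    def subscribe_to(self, other):
        other.subscribers.append(self)

    def publish_many(self, count):
        # Publishing side: push `count` payloads to every subscriber.
        for payload in range(count):
            for node in self.subscribers:
                node.inbox.put((self.name, payload))

    def drain(self, expected):
        # Subscribing side: read `expected` messages from the inbox.
        for _ in range(expected):
            self.received.append(self.inbox.get(timeout=2))


def run_exchange(count=3):
    gateway, pump = EdgeNode("gateway"), EdgeNode("pump")
    gateway.subscribe_to(pump)  # gateway reads what pump publishes
    pump.subscribe_to(gateway)  # pump reads what gateway publishes

    # Every node publishes and drains on separate threads at the same time.
    threads = []
    for node in (gateway, pump):
        threads.append(threading.Thread(target=node.drain, args=(count,)))
        threads.append(threading.Thread(target=node.publish_many, args=(count,)))
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return gateway, pump


gateway, pump = run_exchange()
print(gateway.received)
print(pump.received)
```

Each node runs its intake and its publishing on separate threads, which is the behavior the data layer underneath has to tolerate: reads and writes arriving concurrently from more than one channel.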

Let me repeat that for emphasis: most complex IoT devices (read: pretty much anything other than a sensor) are going to need to be able to read and write simultaneously, across parallel channels.

SQLite cannot do this.
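One way to see the limitation for yourself is the minimal sketch below, which uses Python’s built-in `sqlite3` module. SQLite permits only one writer per database file at a time, so a second connection that asks for the write lock while the first still holds it is turned away. The `readings` table, the `edge.db` file name, and the `second_writer_blocked` helper are hypothetical; `timeout=0` is set so the second connection fails immediately instead of waiting for the lock.

```python
import os
import sqlite3
import tempfile


def second_writer_blocked(db_path):
    """Return True if a second connection is refused the write lock
    while a first connection holds an open write transaction."""
    # isolation_level=None: we issue BEGIN/COMMIT ourselves.
    # timeout=0: fail at once instead of waiting for the lock.
    writer_a = sqlite3.connect(db_path, timeout=0, isolation_level=None)
    writer_b = sqlite3.connect(db_path, timeout=0, isolation_level=None)
    try:
        writer_a.execute(
            "CREATE TABLE IF NOT EXISTS readings (sensor TEXT, value REAL)"
        )
        writer_a.execute("BEGIN IMMEDIATE")  # first writer takes the write lock
        writer_a.execute("INSERT INTO readings VALUES ('temp', 21.5)")
        try:
            writer_b.execute("BEGIN IMMEDIATE")  # second writer asks for it too
        except sqlite3.OperationalError:
            blocked = True   # SQLite reports that the database is locked
        else:
            writer_b.execute("ROLLBACK")
            blocked = False
        writer_a.execute("COMMIT")
        return blocked
    finally:
        writer_a.close()
        writer_b.close()


with tempfile.TemporaryDirectory() as tmp:
    blocked = second_writer_blocked(os.path.join(tmp, "edge.db"))
    print("second writer blocked:", blocked)
```

In a real deployment the second writer would usually wait and retry instead of failing outright, but the writes are still serialized; they do not proceed in parallel.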

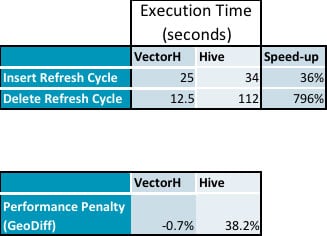

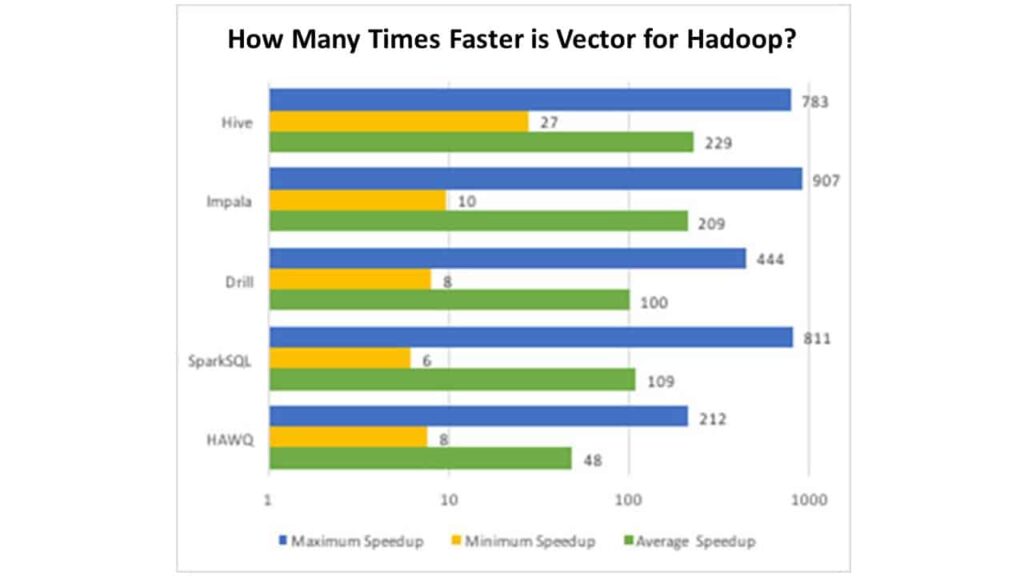

Traditional client-server databases can, but they were not designed with a small footprint in mind. Most cloud and data center client-server databases require hundreds of megabytes, even gigabytes, of storage space. However, the core functions needed to handle simultaneous reads and writes efficiently take up far less space. The Actian Zen edge database, for example, has a footprint of less than 50MB. And while this is 100X the installed footprint of SQLite, it’s merely a sliver of the space attached to the 64-bit ARM and Intel embedded processor-based platforms we see today. Moreover, Actian Zen edge’s footprint provides all the resources necessary for multi-user management, integration with external applications through ODBC and other standards, security management, and other functionality that is a must once you jump from serverless to client-server. A serverless database like SQLite does not provide those services because their need—like the edge itself—was simply not envisioned at the time.

If we look at the difference between Actian Zen edge and Actian Zen enterprise (with its footprint under 200MB), we can see that most of the difference has to do with human end-user enablement. For example, Actian Zen enterprise includes an SQL editor that enables ad hoc queries and other data management operations from a command line. While most of that same functionality resides in Zen edge, it is accessed and executed through API calls from an application rather than a CLI.

But Does Every IoT Edge Scenario Need a Server?

Those of you who have been following closely will now sit up and say, Hey, wait: Didn’t you say that not every IoT edge data management scenario needs a client-server architecture?

Yes, I did. Props to you for paying attention. Not all scenarios do, but that’s not really the question you should be asking. The salient question is, do you really want to master one architecture, implementation, and vendor solution for those serverless use cases and separate architectures, implementations, and vendor solutions for the edge, cloud, and data center? And from which direction do you approach this question?

Historically, the vast majority of data architects and developers have approached this question from the bottom up. That’s why we started with flat files and then moved to SQLite. Rather than looking from the bottom up, I’m arguing that we need to step back, embrace a new understanding of what client-server can be, and then revisit the question from the top down. Don’t just try to force-fit serverless into a world for which it was never intended or, worse, kludge up from serverless to a jury-rigged implementation of a late-20th-century client-server configuration.

That way madness lies, as we’ll see in the final installment of this series, where we’ll look at what happens if developers decide to use SQLite anyway.

Ready to reconsider SQLite? Learn more about Actian Zen. Or you can just kick the tires for free with Zen Core, which is royalty-free for development and distribution.

About Actian Corporation

Actian empowers enterprises to confidently manage and govern data at scale. Actian data intelligence solutions help streamline complex data environments and accelerate the delivery of AI-ready data. Designed to be flexible, Actian solutions integrate seamlessly and perform reliably across on-premises, cloud, and hybrid environments. Learn more about Actian, the data division of HCLSoftware, at actian.com.