Actian Vector and Vector in Hadoop are powerful tools for efficiently running queries. However, most users of data analytics platforms seek to find ways to optimize performance to gain incremental query improvements.

The Actian Service and Support team works with our customers to identify common areas that should be investigated when trying to improve query performance. Most of our recommendations apply equally well to Actian Vector (single node) as to Actian Vector in Hadoop (VectorH, a multi-node cluster on Hadoop).

Actian has recently published an in-depth overview of technical insights and best practices to help all Vector and VectorH users optimize performance, with a special focus on VectorH.

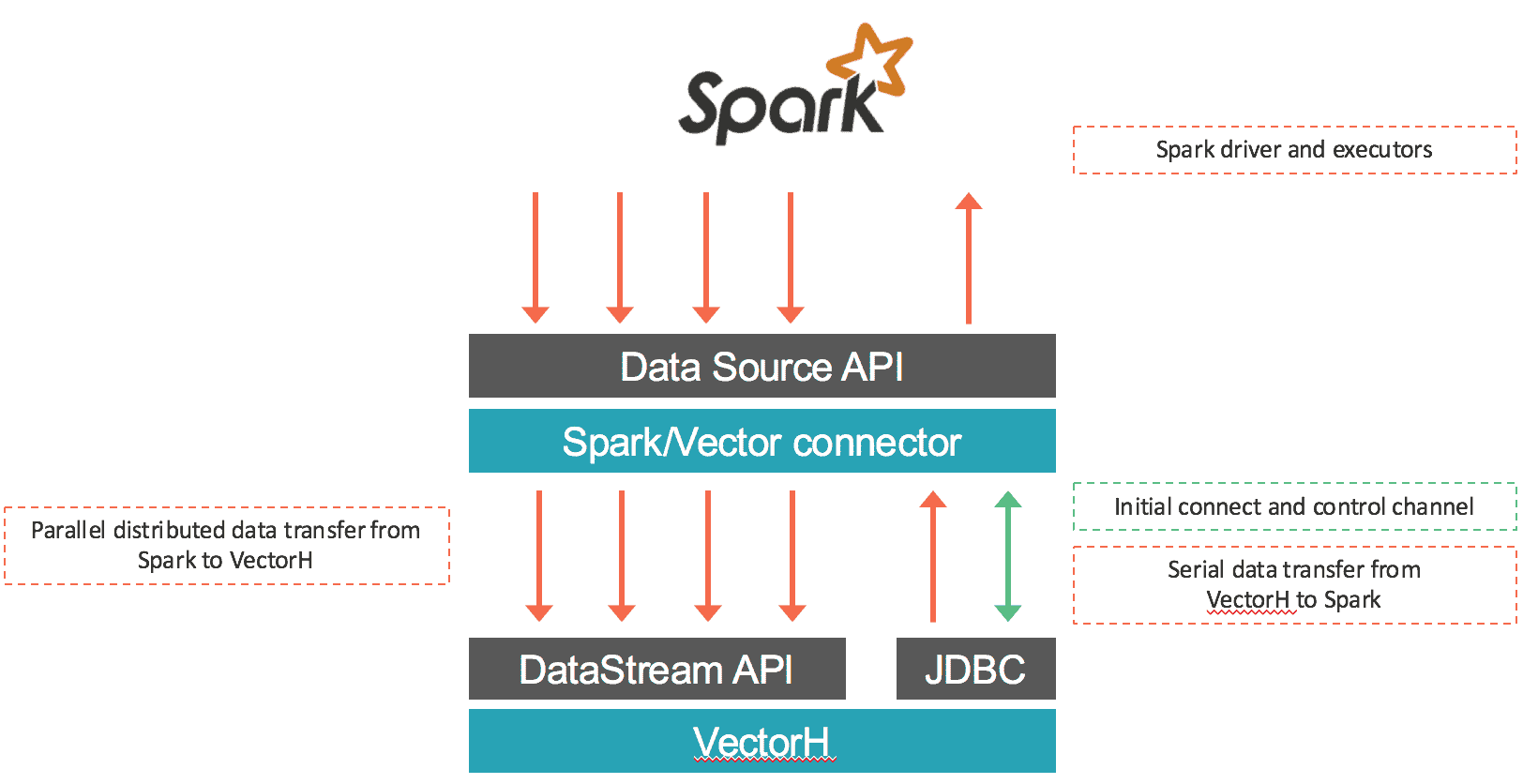

Unlike SQL query engines on Hadoop (Hive, Impala, Spark SQL, etc.) VectorH is a true columnar, MPP, RDBMS with full capabilities of SQL, ACID transactions (i.e. support for updates and deletes in place), built-in robust security options, etc. This flexibility allows VectorH to be optimized for complex workloads and environments.

Note that Vector and VectorH are very capable of running queries efficiently without using any of the examined techniques. But these techniques will come in handy for demanding workloads and busy Hadoop environments and will allow you to get the best results from your platform.

Through our work with customers, we have found the following areas should be investigated to achieve maximum performance.

Partition Your Tables

One very important consideration in schema design for any Massively Parallel Processing (MPP) system like VectorH is how to spread data around a cluster so as to balance query execution evenly across all of the available resources. If you do not explicitly partition your tables when they are created, VectorH will by default create non-partitioned tables – but for best performance, you should always partition the largest tables in your database.

Avoid Data Skew

Unbalanced data, where a small number of machines have much more data than most of the others, is known as data skew. Data skew can cause severe performance problems for queries, since the machine with the disproportionate amount of data governs the overall query speed and can become a bottleneck.

Missing Statistics

Data distribution statistics are essential to the proper creation of a good query plan. In the absence of statistics, the VectorH query optimizer makes certain default assumptions about things like how many rows will match when two tables are joined together. When dealing with larger data sets, it is much better to have real data about the actual distribution of data, rather than to rely on these estimates.

Sorting Data

The relational model of processing does not require that data is sorted on disk – instead, an ORDER BY clause is used on a query that needs the data back in a particular sequence.

However, by using what is known as MinMax indexes (maintained automatically within a table’s structure without user intervention), VectorH is able to use ordered data to more efficiently eliminate unnecessary blocks of data from processing and hence speed up query execution, when queries have a WHERE clause or join restriction on a column that the table is sorted on.

Using the Most Appropriate Data Types

Like any database, choosing the right data type for your schema and queries can make a big difference to VectorH’s performance, so don’t make it a practice to use the maximum column size for convenience. Instead, consider the largest values you are likely to store in a VARCHAR column, for example, and size your columns accordingly.

Because VectorH compresses column data very effectively, creating columns much larger than necessary has minimal impact on the size of data tables. As VARCHAR columns are internally stored as null-terminated strings, the size of the VARCHAR actually has no effect on query processing times. However, it does influence the frontend communication times, as the data is stored as the maximum defined length after it leaves the engine. Note however that storing data that is inherently numeric (IDs, timestamps, etc) as VARCHAR data is very detrimental to the system, as VectorH can process numeric data much more efficiently than character data.

Memory Management for Small Changes

VectorH has a patent-pending mechanism for efficiently dealing with many small changes to data, called Positional Delta Trees (PDTs). These also allow the use of update and delete statements on data stored in an append-only file system like HDFS.

However, if a lot of update, insert or delete statements are being executed, memory usage for the PDT structures can grow quickly. If large amounts of memory are used, the system can get slower to process future changes, and eventually memory will be exhausted. Management of this memory is handled automatically, however the user can also directly issue a ‘combine’ statement, which will merge the changes from the PDT back into the main table in a process called update propagation. There are a number of triggers that make the system perform this maintenance automatically in the background (such as thresholds for the total memory used for PDTs, or the percentage of rows updated), so this is usually transparent for the user.

Optimizing for Concurrency

VectorH is designed to allow a single query to run using as many parallel execution threads as possible to attain maximum performance. However, perhaps atypically for an MPP system, it is also designed to allow for high concurrency with democratic allocation of resources when there is a high number of queries present to the system. VectorH will handle both these situations with “out-of-the-box” settings, but can be tuned to suit the needs of the application (for example if wanting to cater for a higher throughput of heavy duty queries by curtailing the maximum resources any one query can acquire).

The number of concurrent connections (64 by default) that a given VectorH instance will accept is governed by the connect_limit parameter, stored in config.dat and managed through the CBF utility. But there are usually more connections than executing queries, so how are resources allocated among concurrent queries?

By default, VectorH tries to balance single-query and multi-query workloads. The key parameters in balancing this are:

- The number of CPU cores in the VectorH cluster.

- The number of threads that a query is able to use.

- The number of threads that a query is granted by the system.

- The number of queries currently executing in the system.

Summary

Vector and VectorH are very capable of running queries efficiently without using any of the techniques and tips described here. But the more demanding your workload, in terms of either data volumes, query complexity or user concurrency, the more that applying some the tips defined in the full report will allow you to get the best results from your platform.

About Actian Corporation

Actian empowers enterprises to confidently manage and govern data at scale, streamlining complex data environments and accelerating the delivery of AI-ready data. The Actian data intelligence approach combines data discovery, metadata management, and federated governance to enable smarter data usage and enhance compliance. With intuitive self-service capabilities, business and technical users can find, understand, and trust data assets across cloud, hybrid, and on-premises environments. Actian delivers flexible data management solutions to 42 million users at Fortune 100 companies and other enterprises worldwide, while maintaining a 95% customer satisfaction score.