Avoid the Potholes Along Your Migration Journey From Netezza

Actian Corporation

August 20, 2019

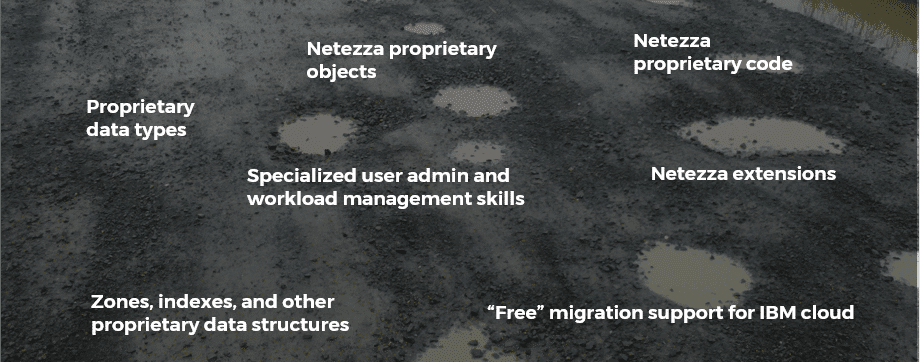

With certain models of Netezza reaching end-of-life, you may be considering your options for a new, modern data warehouse. But don’t make migration an afterthought. Migrations of terabytes of data, thousands of tables and views, specialized code and data types, and other proprietary elements do not happen overnight.

Given the dependencies and complexities involved with data warehouse migrations, it’s no wonder that, according to Gartner’s Adam Ronthal, over 60% of these projects fail to achieve their intended goals.

Here are some common pitfalls to watch out for when you are ready to start on your migration journey.

1. Pressure to ‘Lift and Shift’

One of the key decisions in designing your journey is whether to “lift and shift” the entire data warehouse or to offload incrementally, project by project, gradually reducing the burden on your legacy system. IBM is promoting “lift and shift” of Netezza to their IBM Cloud. But think back to how prior forklift upgrades have gone, whether they involved mainframes, data center consolidation, service-oriented architecture, or any other legacy technical debt, and you can quickly see the high risk of a “lift and shift” migration strategy.

To mitigate risk and realize faster time-to-value, choose an incremental approach that lets you transition gradually from Netezza, offloading your simplest workloads first.

2. “Free” Migration Support From IBM

IBM is offering free migration assistance for Netezza TwinFin and Striper customers to move to the IBM Integrated Analytics System (IIAS), which is based on Db2 technology. But what in life is ever truly free? Moving to IIAS locks you in yet again to the expensive IBM ecosystem, which requires specialized skills and knowledge to operate and manage.

And you will certainly be disappointed by the difference in functionality offered by Db2.

3. Mishandling Proprietary Elements

There are broadly two classes of workloads running on Netezza systems: Business Intelligence (BI)-led systems and Line of Business (LoB) applications. BI applications are relatively straightforward to migrate from one platform to another. LoB applications, however, often contain a significant amount of complex logic and represent the most challenging workloads to migrate to a new platform. We frequently find that customers have written some of the logic of these systems using stored procedures or user-defined functions, which are the least portable way of building an application.

If your application estate consists of such complex code, your target data warehouse should adhere to SQL, Spark, JDBC/ODBC, and other open standards and have a wealth of partners capable of automatically identifying and converting proprietary Netezza elements to standards-based views, code, data, etc.
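As a rough first step toward sizing this problem, you can inventory the stored procedures and user-defined functions in an exported DDL script. The sketch below is only an illustration, not a conversion tool: the function name `inventory_routines`, the regular expression, and the sample DDL are assumptions made for this example, and real exports will need more careful parsing.

```python
import re
from collections import Counter

# Matches "CREATE [OR REPLACE] PROCEDURE|FUNCTION <name>", case-insensitively.
# This is a deliberately simple pattern and an assumption of this sketch.
ROUTINE_RE = re.compile(
    r"\bCREATE\s+(?:OR\s+REPLACE\s+)?(PROCEDURE|FUNCTION)\s+([A-Za-z_][\w.]*)",
    re.IGNORECASE,
)


def inventory_routines(ddl_text):
    """Return a sorted list of (kind, name) pairs for routine definitions."""
    found = {(kind.upper(), name) for kind, name in ROUTINE_RE.findall(ddl_text)}
    return sorted(found)


# Illustrative DDL only; the object names are hypothetical.
SAMPLE_DDL = """
CREATE TABLE sales (id INTEGER, amount NUMERIC(12,2));
CREATE OR REPLACE PROCEDURE load_sales() RETURNS INTEGER LANGUAGE NZPLSQL AS
BEGIN_PROC BEGIN RETURN 0; END; END_PROC;
create function margin_pct(NUMERIC, NUMERIC) RETURNS NUMERIC;
CREATE VIEW v_sales AS SELECT * FROM sales;
"""

routines = inventory_routines(SAMPLE_DDL)
counts = Counter(kind for kind, _ in routines)

for kind, name in routines:
    print(f"{kind:<10} {name}")
print(dict(counts))
```

A count like this does not measure how hard each routine is to port, but it gives an early indication of how much of the estate falls into the least portable category.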

4. Rushing the Business Assessment

A thorough assessment of your legacy environment is a critically important step in the migration journey. Your company has most likely invested decades of logic into your Netezza platform. Over that time, a lot of junk data will have accumulated: tables that have not been touched for years, and countless queries and workloads that are no longer relevant to the business. These objects should not be moved during the migration.

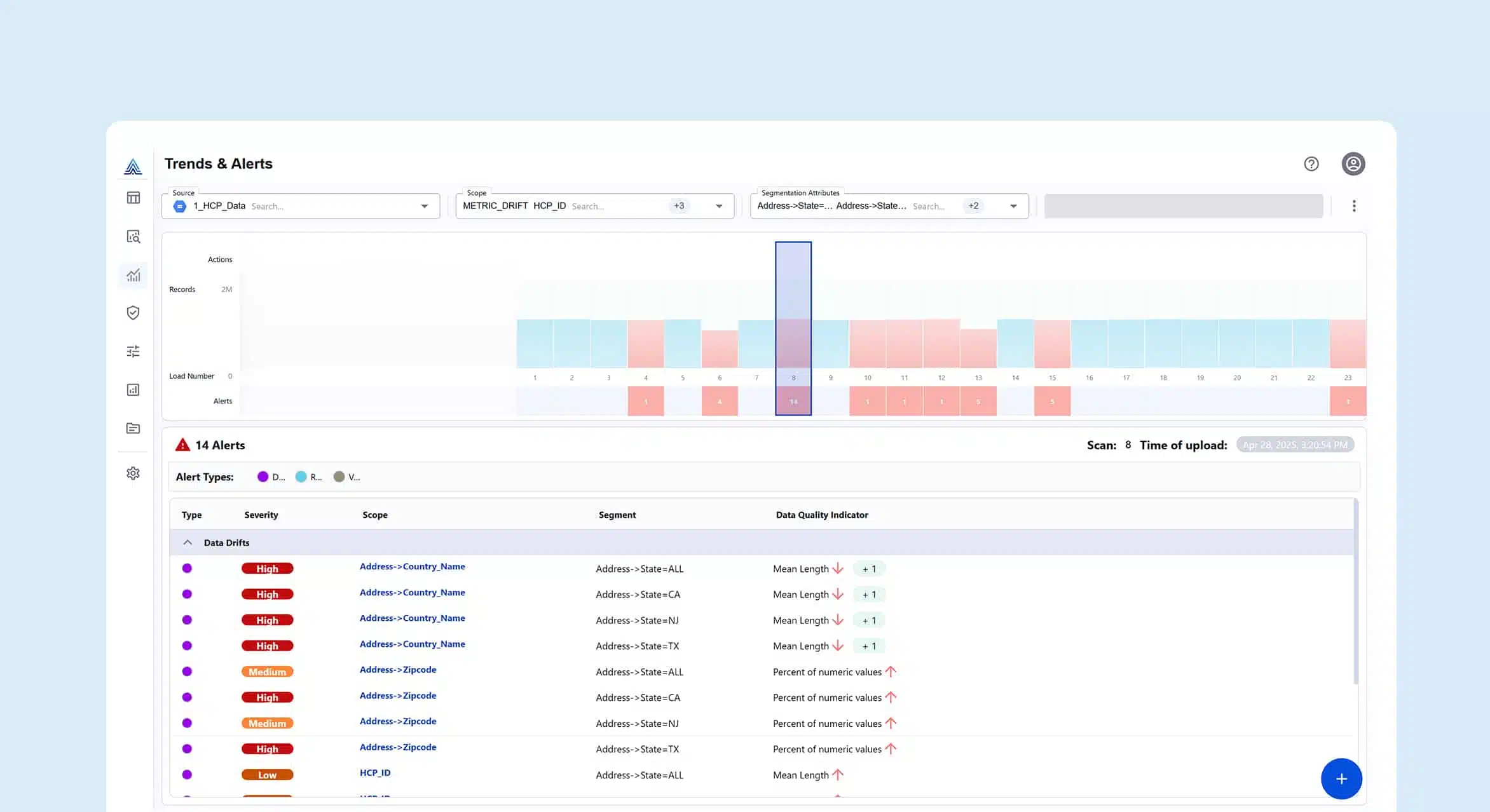

You can reduce your migration risk by using an automated tool to analyze the logs from your Netezza data warehouse and gain a complete understanding of your current environment. Based on numerous factors, the tool can identify redundancies that should not be migrated, determine what should be migrated and in what priority, and suggest how to structure phased migrations.
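To make the idea concrete, here is a minimal sketch of one such check: separating tables that were accessed recently from tables that were not. It assumes you have already extracted (table, access date) pairs from your query logs. The input format, the function names, the one-year threshold, and the sample data are all hypothetical, and a real assessment tool would weigh many more factors.

```python
from datetime import date, timedelta


def latest_access(log_rows):
    """Collapse (table, access_date) rows into the latest access per table."""
    latest = {}
    for table, accessed_on in log_rows:
        if table not in latest or accessed_on > latest[table]:
            latest[table] = accessed_on
    return latest


def split_by_usage(catalog, log_rows, as_of, max_idle_days=365):
    """Split catalog tables into (active, stale) lists.

    A table is stale if it never appears in the log or if its latest
    access is older than max_idle_days before as_of.
    """
    cutoff = as_of - timedelta(days=max_idle_days)
    latest = latest_access(log_rows)
    active, stale = [], []
    for table in sorted(catalog):
        last = latest.get(table)
        if last is not None and last >= cutoff:
            active.append(table)
        else:
            stale.append(table)
    return active, stale


# Hypothetical sample data for illustration.
CATALOG = ["orders", "customers", "tmp_export_2014", "audit_old"]
LOG_ROWS = [
    ("orders", date(2019, 8, 1)),
    ("orders", date(2019, 7, 15)),
    ("customers", date(2019, 6, 30)),
    ("audit_old", date(2016, 3, 2)),
]

active, stale = split_by_usage(CATALOG, LOG_ROWS, as_of=date(2019, 8, 20))
print("Candidates to migrate:", active)
print("Review before migrating:", stale)
```

Stale tables are flagged for review rather than dropped automatically, since some rarely used objects may still be needed for compliance or year-end reporting.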

5. Being Locked in Without Options

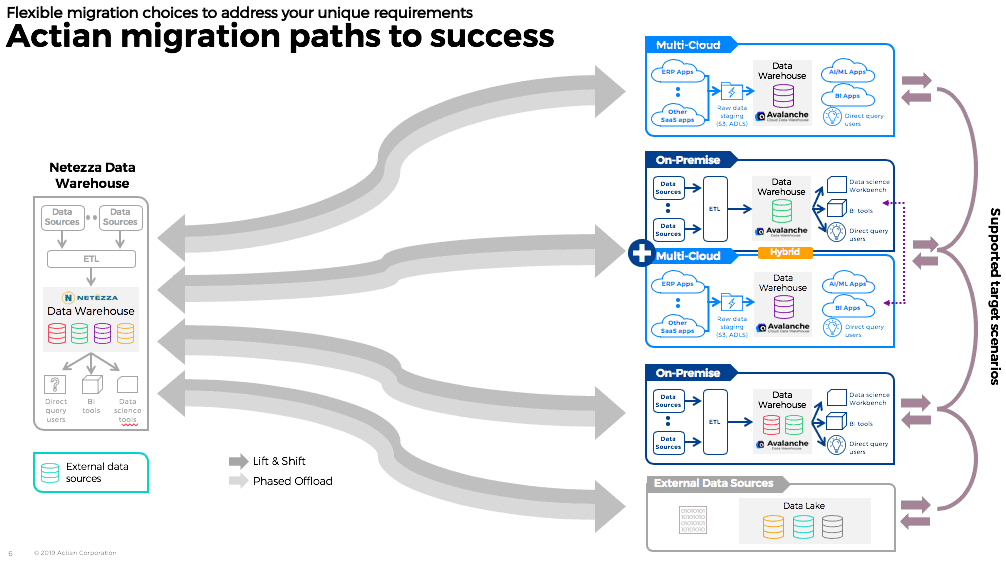

Be sure to choose a solution that provides the flexibility for you to chart any path you desire without compromise. For instance, do you want to move all at once to the cloud as part of a cloud-first strategy, or conduct your data warehouse migration in stages? Businesses with rigorous compliance or privacy demands often prefer to store some data on-premises. Do you want to be locked into a particular cloud platform, or go with AWS now but have the option to move some apps to Azure later? Whatever your situation is, don’t trade in your current vendor lock-in for another.

Learn more migration best practices by watching our on-demand webinar, “Top 7 tips for Netezza migration success,” featuring a former Director of Tech Services at Netezza.

Migrate With Confidence

Actian Data Platform can help you incrementally migrate or offload from your Netezza data warehouse until it can be retired in a managed fashion—according to your timeframe and your terms. Choose the path that is best for you – cloud, on-premises, or a combination of both, with a seamlessly architected hybrid solution. Prior studies from our customers and prospects have shown typical gap analysis to resolve to over 95% on average for migrations.
