How to Deploy Effective Data Governance, Adopted by Everyone

Actian Corporation

October 8, 2020

It is no secret that the recent global pandemic has completely changed the way people do business. In March 2020, France was placed in total lockdown, and many companies had to adapt to new ways of working, whether by introducing remote working, changing the production schedule, or even shutting down operations completely. This health crisis led companies to ask themselves: how are we going to deal with the financial, technological, and compliance risks following COVID-19?

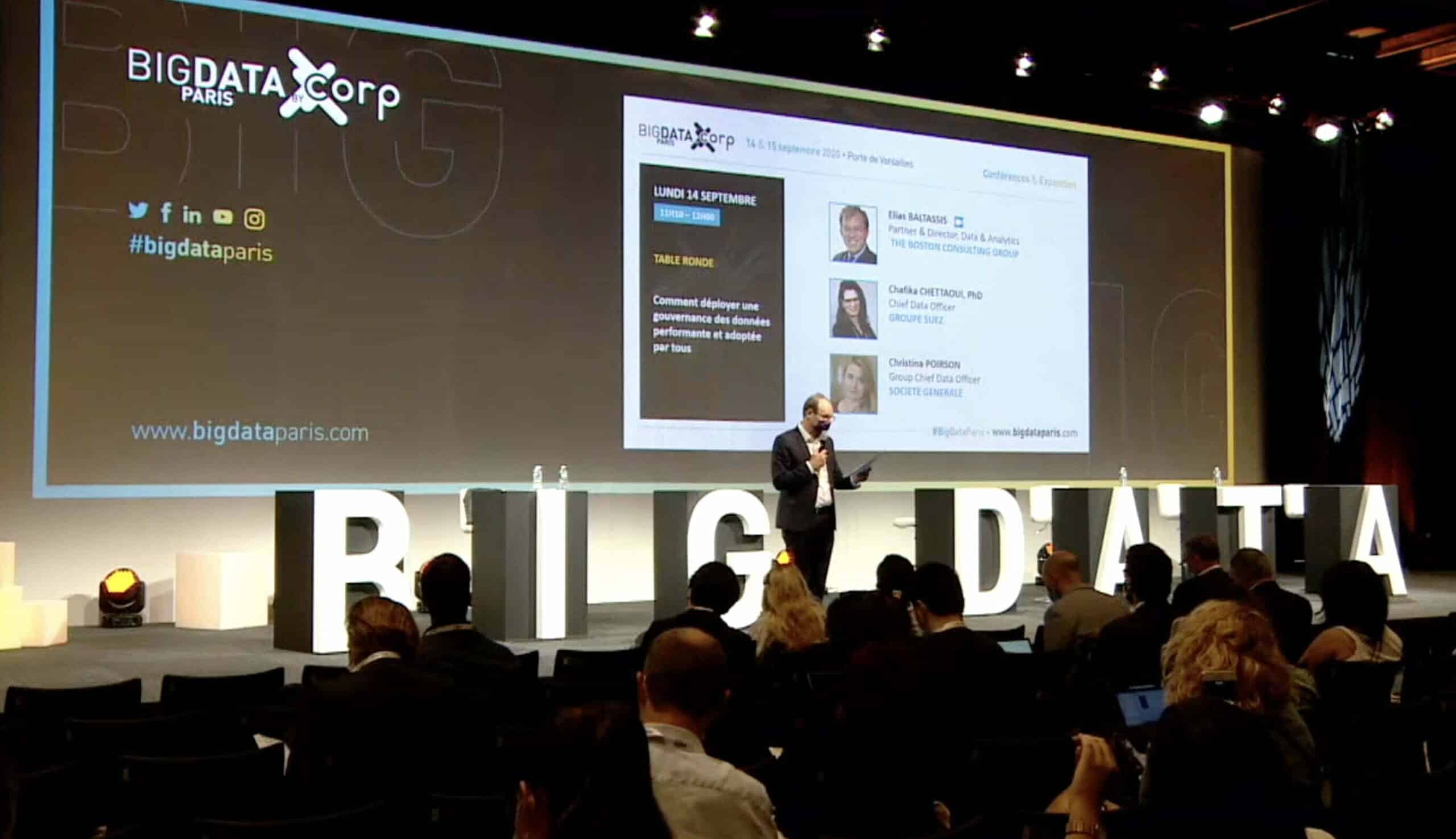

At Big Data Paris 2020, we had the pleasure of attending the roundtable “How to deploy effective data governance that is adopted by everyone” led by Christina Poirson, CDO of Société Générale, Chafika Chettaoui, CDO of the Suez Group, and Elias Baltassis, Partner & Director, Data & Analytics of the Boston Consulting Group. In this roundtable of approximately 35 minutes, the three data experts explain the importance of data governance and the best practices for implementing it.

First Steps to Implementing Data Governance

COVID-19 has underlined the essential challenge of knowing, collecting, preserving, and transmitting quality data. So, has the lockdown pushed companies to put a data governance strategy in place? Answering this first question, Elias Baltassis confirms the strong increase in demand for implementing data governance in France:

“The lockdown certainly accelerated the demand for implementing data governance! It was already a topic for the majority of these companies long before the lockdown, but the health crisis has, of course, pushed companies to strengthen the security and reliability of their data assets”.

So, what is the objective of data governance? And where do you start? Elias explains that the first thing to do is to diagnose the data assets in the enterprise, and identify the sticking points: “Identify the places in the enterprise where there is a loss of value because of poor data quality. This is important because data governance can easily drift into a bureaucratic exercise, which is why you should always keep as a “guide” the value created for the organization, which translates into better data accessibility, better quality, etc”.

Once the diagnosis is done and the sources of value are identified, Elias explains that there are four methodological steps to follow:

- Know your company’s data, its structure, and who owns it (via a data glossary, for example).

- Set up a data policy targeted at the points of friction.

- Choose the right tool to deploy these policies across the enterprise.

- Establish a data culture within the organization, starting with hiring data-driven people, such as Chief Data Officers.

The above methodology is therefore essential before starting any data governance project which, according to Elias, can be implemented fairly quickly: “Data governance can be implemented quickly, but increasing data quality will take more or less time, depending on the complexity of the business; a company working with one country will take less time than a company working with several countries in Europe for example”.

The Role of the Chief Data Officer in the Implementation of Data Governance

Christina Poirson explains that for her and Société Générale, data governance played a very important role during this exceptional period: “Fortunately, we had data governance in place that ensured the quality and protection of data during lockdown for our professional and private customers. We realized the importance of the pairing of digitization and data, which has been vital not only for our work during the crisis, but also for tomorrow’s business.”

So how did a company as large and old as Société Générale, with thousands of data records, implement a new data governance strategy? Christina explains that data at Société Générale is not a recent topic. Indeed, since its very beginnings, the firm has been asking clients for information in order to advise them on what type of loan to put in place, for example.

However, Société Générale’s CDO tells us that today, with digitization, there are new types, formats and volumes of data. She confirms what Elias Baltassis said earlier: “The implementation of a data office and Chief Data Officers was one of the first steps in the company’s data strategy. Our role is to maximize the value of data while respecting the protection of sensitive data, which is very important in the banking world.”

To do this, Christina explains that Société Générale supports this strategy throughout the data’s life cycle: from its creation to its end, including its qualification, protection, use, anonymization and destruction.

For her part, Chafika Chettaoui, CDO of the Suez Group, explains that she sees herself as a conductor:

“What Suez lacked was a conductor who had to organize how IT can meet the business objectives. Today, with the increasing amount of data, the CDO has to be the conductor for the IT, business, and even HR and communication departments, because data and digital transformation is above all a human transformation. They have to be the organizer to ensure the quality and accessibility of the data as well as its analysis”.

But above all, the two speakers agreed that a CDO has two main missions:

- Implementing standards for data quality and protection.

- Breaking down data silos by creating a common language around data, or data fluency, in all parts of the enterprise.

Data Acculturation in the Enterprise

We don’t need to remind you that building a data culture within the company is essential to create value with its data. Christina Poirson explains that data acculturation was quite a long process for Société Générale:

“To implement data culture, we went through what we call “data mapping” at all levels of the managerial structure, from top management to employees. We also had to set up coaching sessions, coding training and other dedicated awareness sessions. We have also made available all the SG Group’s use cases in a catalog of ideas so that every company in the group can be inspired: it’s a library of use cases that is there to inspire people”.

She goes on to explain that they have other ways of acculturating employees at Société Générale:

- Setting up a library of algorithms to reuse what has already been set up.

- Implementing specific tools to assess whether the data complies with the regulations.

- Making data accessible through a group data catalog.

Data acculturation was therefore not an easy task for Société Générale. But Christina remains positive and offers a little analogy: “Data is like water, CIOs are the pipes, and businesses make demands related to water. There must therefore be a symbiosis between the IT, CIO and the business departments”.

Chafika Chettaoui adds: “Indeed, it is imperative to work with and for the business. Our job is to appoint people in the business units who will be responsible for their data. We have to give the responsibility back to everyone: the IT for building the house, and the business for what we put inside. By putting this balance in place, there are back and forth exchanges and it is not just the IT’s responsibility”.

Roles in Data Governance

Although roles and responsibilities vary from company to company, in this roundtable discussion, the two Chief Data Officers explain how role allocation works within their data strategy.

At Société Générale, they have fairly strong convictions. First of all, they set up “Data Owners”, who are part of the business and are responsible for:

- The definition of their data.

- Its main uses.

- Its associated quality level.

However, a data user who wants to use that data does not have to ask the Data Owner for permission; otherwise the whole system breaks down. Société Générale has therefore put in place measures that check compliance with rules and regulations without having to go through the Data Owner: “The data at Société Générale belongs either to the customer or to the whole company, but not to a particular BU or department. We manage to create value from the moment the data is shared”.

At Suez, Chafika Chettaoui confirms that they have the same definition of Data Owner, but she adds another role, that of the Data Steward. At Suez, the Data Steward is the one who is on site, making sure that the data flows work.

She explains: “The Data Steward is someone who will coordinate the so-called Data Producers (the people who collect the data in the systems), make sure they are well trained and understand the quality of the data, and be the one who maintains the dashboards and analyzes whether there are any inconsistencies. It’s someone in the business, but with a real affinity for data and an understanding of the data and its value”.

What are the Key Best Practices for Implementing Data Governance?

When implementing data governance, never forget that data does not belong to one part of the organization but must be shared with all. It is therefore imperative to standardize the data. To do this, Christina Poirson explains the importance of a data dictionary: “By adding a data dictionary that includes the name, definition, data owner, and quality level of the data, you already have a first brick in your governance”.
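To make the idea concrete, here is a minimal sketch in Python of what such a data dictionary could look like. It is only an illustration and not how Société Générale built theirs: the four fields come from the quote above, while the class names, the quality scale ("low", "medium", "high"), and the sample entry are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DictionaryEntry:
    """One data dictionary entry with the four fields named in the quote."""
    name: str
    definition: str
    data_owner: str
    quality_level: str  # assumed scale: "low", "medium" or "high"


class DataDictionary:
    """Hypothetical in-memory data dictionary, keyed by normalized name."""

    def __init__(self):
        self._entries = {}  # maps normalized name -> DictionaryEntry

    @staticmethod
    def _key(name):
        # Normalize names so lookups ignore case and surrounding spaces.
        return name.strip().lower()

    def add(self, entry):
        key = self._key(entry.name)
        if key in self._entries:
            # One shared definition per data item: reject duplicates.
            raise ValueError(f"'{entry.name}' is already defined")
        self._entries[key] = entry

    def lookup(self, name):
        return self._entries[self._key(name)]

    def owned_by(self, owner):
        # List the data items a given Data Owner is responsible for.
        return sorted(
            e.name for e in self._entries.values() if e.data_owner == owner
        )


# Sample entry (invented for illustration only).
dictionary = DataDictionary()
dictionary.add(
    DictionaryEntry(
        name="customer_email",
        definition="Primary contact email address of a customer",
        data_owner="Retail Banking",
        quality_level="high",
    )
)

print(dictionary.lookup("Customer_Email").data_owner)
print(dictionary.owned_by("Retail Banking"))
```

Rejecting duplicate names is a deliberate choice in this sketch: it forces each data item to have a single shared definition and a single owner, which is the point of standardizing the data.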

As mentioned above, the second good practice in data governance is to define roles and responsibilities around data. In addition to a Data Owner or Data Steward, it is essential to define a series of roles to accompany each key stage in the use of the data. Some of these roles can be:

- Data Quality Manager

- Data Protection Analyst

- Data Usages Analyst

- Data Analyst

- Data Scientist

- Data Protection Officer

As a final best practice for successful data governance, Christina Poirson explains the importance of knowing your data environment, as well as your risk appetite and the rules of each business unit, industry and service, to truly facilitate data accessibility and compliance.

…and the Mistakes to Avoid?

To end the roundtable, Chafika Chettaoui talks about the mistakes to avoid in order to succeed in governance. According to her, we must not start with technology. Even if, of course, technology and expertise are essential to implementing data governance, it is very important to focus first on the culture of the company.

She states: “Establishing a data culture with training is essential. On the one hand we have to break the myth that data and AI are ‘magical’, and on the other break the myth of the ‘intuition’ of some experts, by explaining the importance of data in the enterprise. The cultural aspect is key, at every level of the organization.”
