IoT in Manufacturing: Why Your Enterprise Needs a Data Catalog

Actian Corporation

January 12, 2021

Digital transformation has become a priority in organizations’ business strategies, and manufacturing is no exception. Faced with higher customer expectations, growing demand for customization, and an increasingly complex global supply chain, manufacturers need to find new, more innovative products and services. In response to these challenges, manufacturing companies are increasingly investing in the Internet of Things (IoT).

In fact, the IoT market has grown rapidly over the past few years. IDC expects the IoT footprint to grow to $1.2 trillion in 2022, and Statista estimates that its economic impact may be between $3.9 and $11.1 trillion by 2025.

In this article, we define IoT, look at some manufacturing-specific use cases, and explain why the Actian Data Intelligence Platform Data Catalog is an essential tool for manufacturers advancing their IoT implementations.

What is IoT?

According to TechTarget, the Internet of Things (IoT) is “a system of interrelated computing devices, mechanical and digital machines, objects, or people that are provided with unique identifiers and the ability to transfer data over a network without requiring human-to-human or human-to-computer interaction.”

A “thing” in the IoT can therefore be a person with a heart monitor implant, an automobile with built-in sensors that alert the driver when tire pressure is low, or any other object that can be assigned an ID and is able to transfer data over a network.

From a manufacturing point of view, IoT is a way to digitize industry processes. Industrial IoT employs a network of sensors to collect critical production data and uses various software to turn this data into valuable insights about the efficiency of manufacturing operations.

IoT Use Cases in Manufacturing Industries

Currently, many IoT projects deal with facility and asset management, security and operations, logistics, customer servicing, etc. Here is a list of examples of IoT use cases in manufacturing:

Predictive Maintenance

For manufacturers, unexpected downtime and breakdowns are the biggest issues. Manufacturing companies therefore recognize the importance of identifying potential failures, how often they occur, and their consequences. To get ahead of these issues, organizations now use machine learning to make faster, smarter data-driven decisions.

With machine learning, it becomes easier to identify patterns in available data and predict machine outcomes. This works by identifying the right data set and combining it with a real-time data feed from the machine. This kind of information allows manufacturers to estimate the current condition of machinery, determine warning signs, transmit alerts, and activate the corresponding repair processes.
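As a rough illustration of the "determine warning signs" step, the sketch below flags sensor readings that deviate sharply from the readings just before them. It is a deliberately simple statistical stand-in (a rolling z-score), not a trained machine learning model and not any vendor's method. The function name, the vibration values, the window size, and the threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_warning_signs(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate strongly from the
    `window` readings that precede them (rolling z-score check)."""
    alerts = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history gives no scale to compare against,
            # so this simple sketch skips the reading.
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Hypothetical vibration readings (mm/s) from a single machine sensor.
vibration_mm_s = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 4.8, 2.1, 2.0]

for index in find_warning_signs(vibration_mm_s):
    print(f"Warning: reading {index} ({vibration_mm_s[index]} mm/s) is unusual")
```

In a real deployment, an alert like this would typically feed a notification or a maintenance work order rather than a print statement, and the detection logic would be tuned to the equipment in question.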

With IoT-based predictive maintenance, manufacturers can lower maintenance costs, reduce downtime, and extend equipment life, improving production quality by attending to problems before equipment fails.

For instance, Medivators, one of the leading medical equipment manufacturers, successfully integrated IoT solutions across its service operations and saw an impressive 78% increase in the service events that could be easily diagnosed and resolved without any additional human resources.

Asset Tracking

IoT asset tracking is one of the fastest-growing trends across manufacturing industries. It is expected that by 2027, there will be 267 million active asset trackers in use worldwide for agriculture, supply chain, construction, mining, and other markets.

In the past, manufacturers would spend a lot of time manually tracking and checking their products. IoT instead uses sensors and asset management software to track things automatically. These sensors continuously or periodically broadcast their location over the internet, and the software then displays that information. This allows manufacturing companies to reduce the amount of time they spend locating materials, tools, and equipment.
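The broadcast-and-display loop described above can be sketched in a few lines. This is a minimal in-memory illustration, assuming each sensor sends an asset ID, a zone name, and a timestamp. The class names, asset IDs, and zones are hypothetical, and a real system would sit behind a message broker and a database.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class LocationPing:
    """One location broadcast from a tracker (hypothetical shape)."""
    asset_id: str
    zone: str
    seen_at: datetime


class AssetTracker:
    """Keeps only the most recent ping per asset."""

    def __init__(self):
        self._latest = {}

    def record(self, ping):
        current = self._latest.get(ping.asset_id)
        # Ignore pings that arrive out of order and are older than what we hold.
        if current is None or ping.seen_at >= current.seen_at:
            self._latest[ping.asset_id] = ping

    def locate(self, asset_id):
        ping = self._latest.get(asset_id)
        return ping.zone if ping else None

    def stale_assets(self, now, max_age):
        """Assets whose last ping is older than max_age, sorted by ID."""
        return sorted(
            asset_id
            for asset_id, ping in self._latest.items()
            if now - ping.seen_at > max_age
        )


t0 = datetime(2021, 1, 12, 8, 0)
tracker = AssetTracker()
tracker.record(LocationPing("forklift-07", "dock-A", t0))
tracker.record(LocationPing("torque-wrench-12", "line-3", t0))
tracker.record(LocationPing("forklift-07", "warehouse-2", t0 + timedelta(minutes=30)))

print(tracker.locate("forklift-07"))
print(tracker.stale_assets(now=t0 + timedelta(minutes=45), max_age=timedelta(minutes=20)))
```

Keeping only the latest ping per asset answers the "where is it now?" question cheaply. Storing the full history instead would support the trend analysis mentioned later in this section.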

A striking example can be found in the automotive industry, where IoT has helped significantly in tracking data for individual vehicles. Volvo Trucks, for instance, introduced connected-fleet services that include smart navigation with real-time road conditions based on information from other local Volvo trucks. In the future, more real-time data from vehicles will help weather analytics work faster and more accurately: windshield wiper and headlight use during the day, for example, indicates weather conditions. These updates can help maximize asset usage by rerouting vehicles in response to the weather.

Another tracking example is seen at Amazon, which uses WiFi robots to scan QR codes on its products to track and triage its orders. Imagine being able to track your inventory, including the supplies you have in stock for future manufacturing, at the click of a button. You’d never miss a deadline again! And again, all that data can be used to find trends that make manufacturing schedules even more efficient.

Driving Innovation

By collecting manufacturing data and keeping an audit trail of it, companies can better track production processes and gather ever-growing amounts of data. That knowledge helps develop innovative products, services, and new business models. For example, JCDecaux Asia has developed its display strategy thanks to data and IoT. Its objective was to get a precise idea of people’s interest in the campaigns it ran, and to attract more of their attention through animations. “On some screens, we have installed small cameras, which allow us to measure whether people slow down in front of the advertisement or not,” explains Emmanuel Bastide, Managing Director for Asia at JCDecaux.

In the future, will display advertising be tailored to individual profiles? JCDecaux says that in airports, for example, it is possible to better target advertising according to the time of day or the landing of a plane coming from a particular country! Because the displays are connected to the airport’s arrival systems, arrival data can be sent to the display terminals, which can then show a specific advertisement to the arriving passengers.

Data Catalog: One Way to Rule Data for any Manufacturer

To enable advanced analytics, collect data from sensors, guarantee digital security, and use machine learning and artificial intelligence, manufacturing industries need to “unlock data,” which means centralizing it in a smart and easy-to-use corporate “Yellow Pages” of the data landscape. For industrial companies, extracting meaningful insights from data is made simpler and more accessible with a data catalog.

A data catalog is a central repository of metadata that enables anyone in the company to access, understand, and trust the data they need to achieve a particular goal.
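To make the "Yellow Pages" idea concrete, here is a toy sketch of a metadata repository: each entry describes a data set (where it lives, what it contains, who owns it) without holding the data itself. This is purely illustrative and is not the Actian Data Intelligence Platform API. Every class, field, and data set name below is a hypothetical chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata about one data set; the data itself stays in its source system."""
    name: str
    source: str
    description: str
    owner: str
    tags: set = field(default_factory=set)


class DataCatalog:
    """A central, searchable registry of catalog entries."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, keyword):
        """Return names of entries whose name or description contains the
        keyword, or that carry it as a tag (case-insensitive), sorted by name."""
        kw = keyword.lower()
        return sorted(
            entry.name
            for entry in self._entries.values()
            if kw in entry.name.lower()
            or kw in entry.description.lower()
            or kw in {tag.lower() for tag in entry.tags}
        )


catalog = DataCatalog()
catalog.register(CatalogEntry(
    "press_line_vibration", "plant-historian",
    "Vibration readings from stamping presses", "maintenance-team",
    {"iot", "sensor"},
))
catalog.register(CatalogEntry(
    "asset_locations", "tracking-gateway",
    "Latest known zone for tagged tools and vehicles", "logistics-team",
    {"iot", "tracking"},
))
catalog.register(CatalogEntry(
    "supplier_invoices", "erp",
    "Monthly invoices per supplier", "finance-team",
    {"finance"},
))

print(catalog.search("iot"))
print(catalog.search("vibration"))
```

Even in this toy form, the owner and description fields show how a catalog supports the "understand and trust" part of the definition: a search result tells the user what a data set means and who to ask about it.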

Actian Data Intelligence Platform Data Catalog x IoT: The Perfect Match

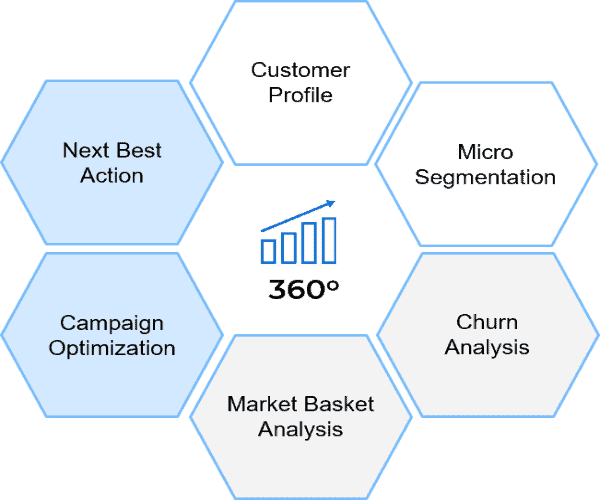

Actian Data Intelligence Platform helps industries build an end-to-end information value chain. Our data catalog allows you to manage a 360° knowledge base using the full potential of the metadata of your business assets.

Success Story in the Manufacturing Industry

In 2017, Renault Digital was born with the aim of transforming the Renault Group into a data-driven company. Today, this entity is made up of a community of experts in digital practices, capable of innovating while providing agile delivery and maximum value to the company’s business IT projects. Jean-Pierre Huchet, Head of Renault’s Data Lake, states that their main data challenges were:

- Data was too siloed.

- Complicated data access.

- No clear and shared definitions of data terms.

- Lack of visibility on personal/sensitive data.

- Weak data literacy.

By choosing the Actian Data Intelligence Platform Data Catalog as their data catalog software, they were able to overcome these challenges and more. The Actian Data Intelligence Platform has become an essential building block in Renault Digital’s data projects. Its success shows in:

- Its integration into Renault Digital’s onboarding: mastering the data catalog is part of their training program.

- Resilient documentation processes & rules implemented via the Actian Data Intelligence Platform.

- Hundreds of active users.

Now, the Actian Data Intelligence Platform is their main data catalog. Renault Digital’s objectives are to have a clear view of the data upstream and downstream of the hybrid data lake, to gain a 360-degree view of how their data is used, and to create several thousand Data Explorers.

Actian Data Intelligence Platform’s Unique Features for Manufacturing Companies

Our data catalog has the following features to address your challenges:

- Universal connectivity to all technologies used by leading manufacturers.

- Flexible metamodel templates adapted to manufacturers’ use-cases.

- Compliance with specific manufacturing standards through automatic data lineage.

- A smooth transition in becoming data literate through compelling user experiences.

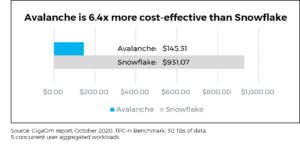

- An affordable platform with a fast return on investment (ROI).

Are You Interested in Unlocking Data Access for Your Company?

Are you in the manufacturing industry? Get the keys to unlocking data access for your company by downloading our new whitepaper, “Unlock Data for the Manufacturing Industry.”
