Data Privacy: Five Tips to Help Your Cloud Data Platform Keep Up

Teresa Wingfield

December 29, 2022

Gartner estimates that 65% of the world’s population will have its personal information covered under modern privacy regulations by 2023, up from 10% in 2020. The General Data Protection Regulation (GDPR) opened the floodgates with its introduction in 2018. Since then, countries across the globe have enacted their own laws. The privacy landscape in the United States is growing increasingly complex as individual states such as California, Colorado, Connecticut, Utah, Virginia, and Wisconsin have each passed their own privacy bills, and more states have pending legislation in the works. Plus, there’s industry compliance to worry about, such as the Payment Card Industry Data Security Standard (PCI DSS) and the Health Insurance Portability and Accountability Act (HIPAA).

In a fragmented privacy compliance environment, organizations are scrambling to make sure they comply with all the different rules and regulations. There’s no getting around the need to understand constantly evolving data privacy legislation and to develop appropriate policies. To deal with the tremendous scope of the work involved and the regulatory requirements for this role, many organizations are hiring a dedicated Data Privacy Officer/Data Protection Officer.

Implementing a compliant cloud data platform is also hard, particularly as organizations strive to make data available to anyone in their organization who can use it to gain valuable insights that produce better business outcomes. These five tips can make cloud data privacy easier:

1. Choose a Platform That Includes Integration

Data silos add to data compliance complexity and introduce more non-compliance risks. With built-in data integration, businesses can quickly create and reuse pipelines to ingest and transform data from any source, which breaks down existing silos and removes the need to build new ones in the future. With integration as part of a single solution, businesses will be able to migrate data into the platform more quickly and to reflect changes in source systems sooner.

Further, integrating data to get a 360-degree view of the customer will help you better understand what sensitive information you’re collecting, where you’re sourcing it from, and how and where you are using it.

2. Understand What Data Your Users Really Need

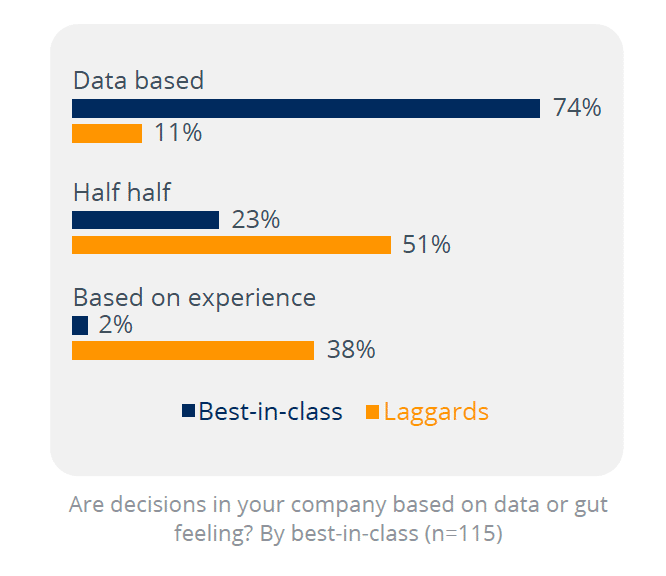

Collecting too much data also increases risk exposure because there’s more data to protect. Delivering only the data that users need, rather than taking a kitchen-sink approach, not only improves decision-making but also enhances cloud data privacy. When users are simply asked “what data do you need?”, the answer is often “everything,” but this is rarely the right answer. Getting to know your users and understanding what specific data they really require to do their jobs is a better approach.

3. Ensure That Users Only See the Data They Should

What can a business’s users see? The answer should not be “everything,” nor should it be “nothing.” Business users need visibility into some data to do their jobs, but identities shouldn’t be exposed unless necessary.

Cloud data platforms need to provide fine-grained techniques such as column-level de-identification and dynamic data masking to prevent inappropriate access to personally identifiable information (PII), sensitive personal information, and commercially sensitive data, while still allowing visibility into the data attributes the worker needs. Column-level de-identification protects sensitive fields at rest, while dynamic data masking applies protection on read depending on the role of the user. Businesses will also need role-based policies that they can quickly update so that they can flexibly enforce the wide range of data access requirements across users.
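To make the distinction between the two techniques concrete, here is a minimal Python sketch. It is an illustration only, not any platform's actual API: the role names, the column names, the `MASKING_POLICY` table, and the helper functions are all hypothetical, and a real platform would normally enforce this inside the database rather than in application code.

```python
import hashlib
import hmac

# Hypothetical key for pseudonymizing values at rest. In practice this would
# come from a key management service, never from source code.
PSEUDONYM_KEY = b"example-key-not-for-production"

# Hypothetical set of columns treated as sensitive.
SENSITIVE_COLUMNS = {"email", "ssn"}

# Hypothetical role-based policy: how each sensitive column appears on read.
MASKING_POLICY = {
    "analyst": {"email": "partial", "ssn": "redact"},
    "support": {"email": "clear", "ssn": "partial"},
    "privacy_officer": {"email": "clear", "ssn": "clear"},
}


def deidentify(value: str) -> str:
    """Column-level de-identification: replace a value with a keyed hash before it is stored."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_value(value: str, mode: str) -> str:
    """Apply one masking mode to a single value."""
    if mode == "clear":
        return value
    if mode == "partial":
        # Keep only the last four characters visible.
        return "*" * max(len(value) - 4, 0) + value[-4:]
    return "REDACTED"


def read_row(row: dict, role: str) -> dict:
    """Dynamic data masking: apply the role's policy at read time; the stored row is unchanged."""
    policy = MASKING_POLICY.get(role, {})
    masked = {}
    for column, value in row.items():
        if column in SENSITIVE_COLUMNS:
            # Unknown roles and unlisted columns fall back to full redaction.
            masked[column] = mask_value(value, policy.get(column, "redact"))
        else:
            masked[column] = value
    return masked
```

Because the policy is a plain lookup table, changing what a role may see is a one-line update, which is the kind of quickly adjustable role-based policy described above. Defaulting to redaction means a newly added role sees no sensitive values until someone explicitly grants access.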

4. Isolate Your Sensitive Data

Many privacy laws require that businesses protect their data from various Internet threats. There are lots of security measures to consider when protecting any data, but protecting sensitive data requires advanced capabilities such as those mentioned above as well as the ability to restrict physical access. When drawing up a platform evaluation checklist, businesses should be sure to include support for isolation capabilities such as:

- On-premises support in addition to the cloud so that sensitive data can remain in the data center.

- The ability to limit the data warehouse to specific IP ranges.

- Separate data warehouse tenants.

- Use of a cloud service’s virtual private cloud (VPC) to isolate a private network.

- Platform access control for metadata, provisioning, management, and monitoring.
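As an illustration of the IP-range item in the list above, the following Python sketch checks a client address against an allowlist using the standard library's `ipaddress` module. The network ranges and the `is_allowed` function are assumptions made for the example; in a real deployment this restriction is typically configured in the platform or the cloud provider's network settings rather than written by hand.

```python
import ipaddress

# Hypothetical allowlist: one private corporate range and one documentation range.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if client_ip parses and falls inside an allowed network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Malformed addresses are rejected rather than raising.
        return False
    return any(address in network for network in ALLOWED_NETWORKS)
```

A connection handler could call `is_allowed(remote_address)` before accepting a session and reject anything that returns `False`.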

5. Recognize the Importance of Data Quality in Cloud Data Privacy

Data leaders widely recognize the importance of high-quality data to enable accurate decision-making but think of it less often as a compliance issue. Some data privacy regulations specifically call for improving quality. For instance, GDPR requires businesses to correct inaccurate or incomplete personal data. Make sure your cloud data platform lets you easily configure data quality rules to ensure data is accurate, consistent, and complete.
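What configurable data quality rules might look like can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the rule names, the record fields, and the deliberately loose email pattern are hypothetical, and a real platform would offer its own rule syntax.

```python
import re
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]

# Intentionally loose pattern for illustration: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def _country_is_iso2(record: dict) -> bool:
    """Consistency check: country must be a two-letter uppercase code."""
    country = record.get("country")
    return isinstance(country, str) and len(country) == 2 and country.isupper()


# Hypothetical rule set covering completeness, accuracy, and consistency.
RULES: Dict[str, Rule] = {
    "email_present": lambda r: bool(r.get("email")),
    "email_well_formed": lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")),
    "country_is_iso2": _country_is_iso2,
}


def failed_rules(record: dict) -> List[str]:
    """Return the names of every rule the record fails, in rule-definition order."""
    return [name for name, rule in RULES.items() if not rule(record)]
```

Records with a non-empty result can be routed to a correction queue, which gives inaccurate or incomplete personal data a defined path to being fixed.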

Final Thoughts

Non-compliance is costly and can cause considerable damage to your brand reputation. For GDPR alone, data protection authorities have handed out $1.2 billion in fines since Jan. 28, 2021. To avoid becoming part of the mounting penalties, and then part of the next day’s news cycle, always keep compliance in mind when evaluating your cloud data platform and how it meets cloud data privacy requirements.

Also, here are a few blogs on security, governance, and privacy that discuss protecting databases and cloud services.
