Exploiting Analytics in the Cloud Without Moving All Your Data There

Actian Corporation

July 29, 2019

Your company has competing data needs. You need IT systems optimized for real-time transaction processing to serve as the engine for digitally transformed business processes. You also need your data aggregated and optimized for analytics so you can both generate real-time insights and perform deep data-mining activities. Balancing these competing needs in your solution architecture can be difficult if the only approach you are considering is to “find a middle-of-the-road solution that minimally satisfies both needs.”

As the phrasing suggests, that approach is a compromise: both needs are met in the short term, but no one is happy, and the company can’t excel over the long term. The alternative (and recommended) approach is to develop separate, specialized and optimized solutions for each business challenge. Specialization not only leads to better performance but also provides a path to long-term scalability and business agility.

Your transactional systems must be optimized for real-time processing. They may reside on-premises or in the cloud, or they may be Software-as-a-Service (SaaS) solutions managed by third parties. Each has its own underlying data structures and processing capabilities, carefully crafted to provide optimum performance for the business processes that rely on it. Let these systems do the job they were designed for, and don’t slow them down to satisfy analytics needs.

Your analytics systems must aggregate, analyze and present data in a consumable format. Digital business processes rely on this data to tell operators and staff which actions to take to keep the company running smoothly and to take advantage of business opportunities.

The challenge of effective real-time analytics is putting the data in a common place for analysis and then having enough processing power to convert raw data into meaningful information and actionable insights in real time. The cloud provides an ideal platform for your analytics needs because of its massively parallel processing (MPP) capabilities, consumption-based billing models and access to low-cost, shared infrastructure resources.

The question many solution architects and IT leaders ask is “If I want to do analytics in the cloud, must I move all of my data there?” The answer is “No.” The data you have moved to the cloud should be processed in the cloud. The data you have stored on-premises or in your private cloud should similarly be processed there. Most analytic processing occurs in memory, which means you can simply pull in the data you need from source systems when you are ready to process it.

Co-locating data and processing is important because moving large volumes of data can be slow and expensive. Once the analysis is complete, you can discard the raw data from the analytics system. This approach lets you take advantage of the cloud’s processing power and scale without moving all of your data there.
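The idea of processing data where it lives can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Actian’s query engine: the two sites, the `Partial` summary type and the order amounts are all hypothetical. Each site computes a partial sum and count next to its own data, only those two numbers are shipped to a coordinator, and the raw rows never leave the site.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Partial:
    """Hypothetical summary a source site ships instead of its raw rows."""
    total: float
    count: int


def local_aggregate(amounts: Iterable[float]) -> Partial:
    # Runs next to the data (on-premises or in the cloud); only the
    # resulting sum and count leave the site.
    total = 0.0
    count = 0
    for amount in amounts:
        total += amount
        count += 1
    return Partial(total, count)


def merge(partials: Iterable[Partial]) -> Partial:
    # The coordinator combines small summaries, never raw rows.
    total = 0.0
    count = 0
    for p in partials:
        total += p.total
        count += p.count
    return Partial(total, count)


def global_average(partials: Iterable[Partial]) -> float:
    merged = merge(partials)
    if merged.count == 0:
        raise ValueError("no rows in any source")
    return merged.total / merged.count


# Hypothetical order amounts held in two separate systems.
on_prem_orders = [120.0, 80.0, 100.0]
cloud_orders = [40.0, 60.0]

partials = [local_aggregate(on_prem_orders), local_aggregate(cloud_orders)]
print(global_average(partials))  # 80.0
```

Shipping a sum and a count, rather than a per-site average, keeps the merged result exact: averaging the two site averages here would give 75.0 instead of the true 80.0.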

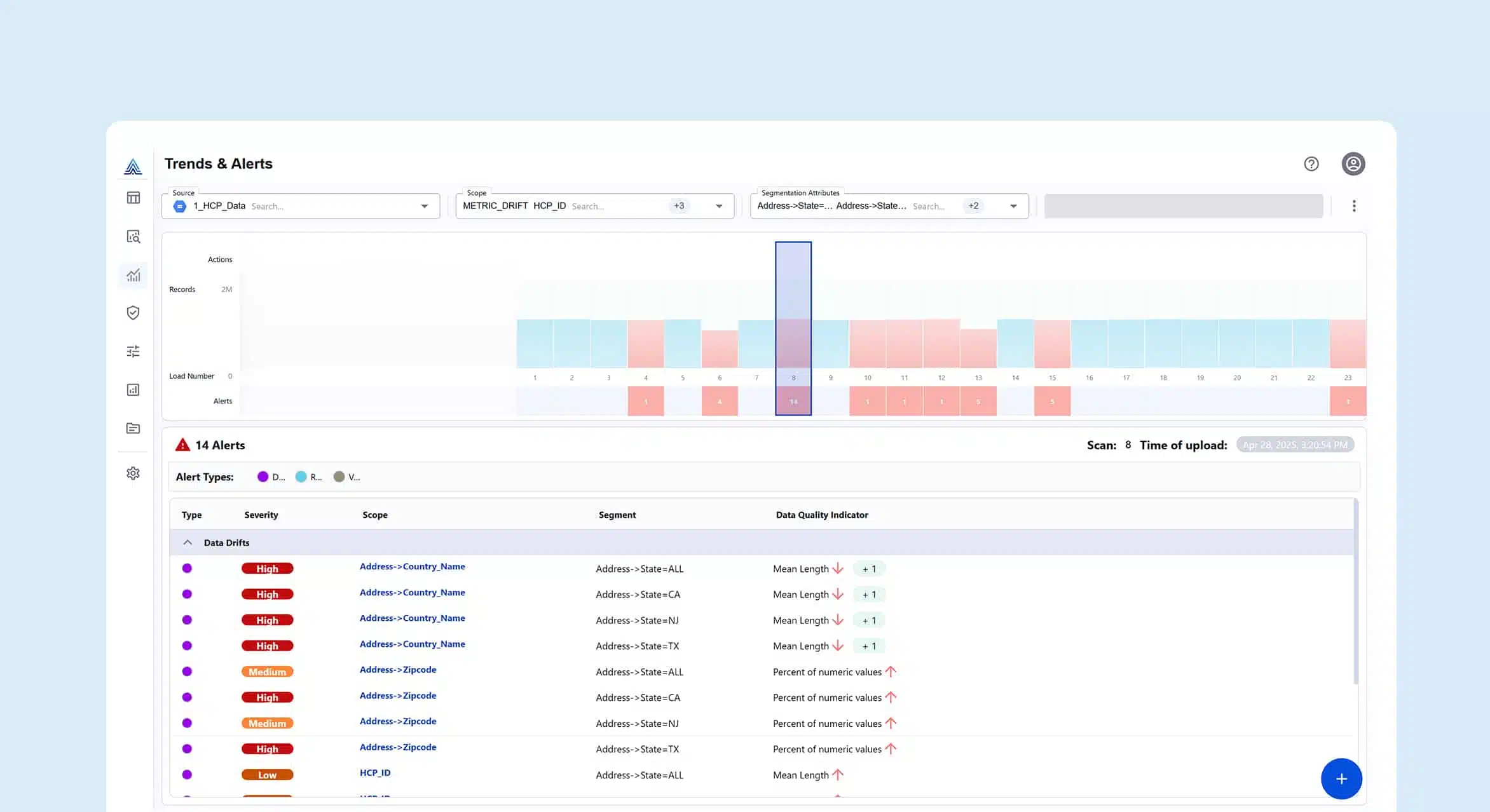

The key to an effective cloud-analytics solution is a set of data-management capabilities: connections to your source systems and tools to aggregate data into a common hybrid data model. Actian provides multi-cloud and on-premises analytics with a robust distributed query capability that lets you put the compute wherever the data resides.
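One way to picture a common data model is as a set of per-source mappings onto a single shared record type. In this minimal Python sketch, the `Customer` type, the ERP and CRM field names and the connector functions are hypothetical illustrations, not an Actian API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Customer:
    """Hypothetical common data model shared by all sources."""
    customer_id: str
    name: str
    country: str


# Hypothetical connectors: each maps one source system's native record
# layout onto the common model. All field names are illustrative.
def from_on_prem_erp(row: dict) -> Customer:
    return Customer(customer_id=f"erp-{row['CUST_NO']}",
                    name=row["CUST_NAME"].strip(),
                    country=row["CTRY"].upper())


def from_saas_crm(record: dict) -> Customer:
    return Customer(customer_id=f"crm-{record['id']}",
                    name=record["displayName"].strip(),
                    country=record["address"]["countryCode"].upper())


CONNECTORS: Dict[str, Callable[[dict], Customer]] = {
    "erp": from_on_prem_erp,
    "crm": from_saas_crm,
}


def to_common_model(source: str, records: Iterable[dict]) -> List[Customer]:
    # Look up the mapping for this source and apply it to every record.
    mapper = CONNECTORS[source]
    return [mapper(r) for r in records]


# Hypothetical sample records from two differently shaped systems.
erp_rows = [{"CUST_NO": 1001, "CUST_NAME": " Acme Corp ", "CTRY": "us"}]
crm_records = [{"id": "a7", "displayName": "Globex",
                "address": {"countryCode": "de"}}]

customers = to_common_model("erp", erp_rows) + to_common_model("crm", crm_records)

# Once normalized, records from both systems can be analyzed together.
by_country: Dict[str, int] = {}
for c in customers:
    by_country[c.country] = by_country.get(c.country, 0) + 1
print(by_country)  # {'US': 1, 'DE': 1}
```

Prefixing each identifier with its source is one simple way to avoid key collisions when records from different systems land in the same model.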

Actian is the leading provider of hybrid data-management solutions to support real-time, cloud-based and on-premises analytics and operational data processing. To learn more, visit www.actian.com/data-platform.
