An organization’s data catalog enriches its data assets by combining two types of information: on the one hand, purely technical information that is automatically synchronized from the data sources; on the other hand, business information produced by Data Stewards. Because the latter is updated manually, it carries its own share of risks for the entire organization.

A permission management system is therefore essential to define and control the access rights of catalog users. In this article, we detail the fundamental characteristics of an efficient permission management system, the possible approaches to building one, and the solution implemented in the Actian Data Intelligence Platform Data Catalog.

Permission Management System: An Essential Tool for the Entire Organization

For data catalog users to trust the information they are viewing, the documentation of cataloged objects must be relevant, of high quality, and, above all, reliable. Your users must be able to easily find, understand, and use the data assets at their disposal.

The Origin of Catalog Information and Automation

A data catalog generally integrates two types of information. On the one hand, there is purely technical information that comes directly from the data source. This information is synchronized automatically and continuously between the data catalog and each data source to guarantee its accuracy and freshness. On the other hand, the catalog contains all the business or organizational documentation, which comes from the work of the Data Stewards. This documentation cannot be produced automatically; it is updated manually by the company’s data management teams.

A Permission Management System is a Prerequisite for Using a Data Catalog

To manage this second category of information, the catalog must include access and input control mechanisms. You do not want any user of your organization’s data catalog to be able to create, edit, import, export, or delete information without prior authorization. A user-based permission management system is therefore a prerequisite: it acts as a gatekeeper that enforces each user’s access rights.

The 3 Fundamental Characteristics of a Data Catalog’s Permission Management System

The implementation of an enterprise-wide permission management system is subject to a number of expectations that must be taken into account in its design. In this article, we focus on three of them, which we consider fundamental: granularity and flexibility, readability and auditability, and ease of administration.

Granularity and Flexibility

First of all, a permission management system must have the right level of granularity and flexibility. Some actions should be available across the entire catalog for ease of use. Other actions should be restricted to certain parts of the catalog only. Some users will have global rights on all objects in the catalog, while others will be limited to editing only the perimeter assigned to them. The permission management system must therefore support this whole range, from catalog-wide permissions down to permissions on a single object.
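To make the range from global to object-level rights concrete, here is a minimal Python sketch. The `Grant` class and `is_allowed` function are hypothetical names chosen for illustration, not part of any product API. A grant with no perimeter applies to the whole catalog, and anything not explicitly granted is denied.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Grant:
    """Hypothetical grant: one action, either catalog-wide or limited to a perimeter."""
    action: str                                  # e.g. "edit", "delete", "export"
    object_ids: Optional[FrozenSet[str]] = None  # None means the whole catalog

    def covers(self, action: str, object_id: str) -> bool:
        if action != self.action:
            return False
        return self.object_ids is None or object_id in self.object_ids


def is_allowed(grants: list[Grant], action: str, object_id: str) -> bool:
    """Deny by default; allow only if some grant covers the request."""
    return any(g.covers(action, object_id) for g in grants)


# A steward with catalog-wide edit rights, and one limited to two tables.
global_steward = [Grant("edit")]
scoped_steward = [Grant("edit", frozenset({"table:sales", "table:orders"}))]
```

Here `global_steward` can edit any object, while `scoped_steward` can edit only the two tables in its perimeter and cannot perform any other action on them.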

Our clients are of all sizes, with very heterogeneous levels of maturity regarding data governance. Some are start-ups, others are large companies. Some have a data culture that is already well integrated into their processes, while others are only at the beginning of their data acculturation process. The permission management system must therefore be flexible enough to adapt to all types of organizations.

Readability and Auditability

Second, a permission management system must be readable and easy to follow. During an audit or a review of the system’s permissions, an administrator who explores an object must be able to quickly determine who can modify it. Conversely, when an administrator looks at the details of a user’s permission set, they must be able to quickly determine the scope assigned to that user and the actions they are authorized to perform on it.

This is what makes it possible to verify that the right people have access to the right perimeters, with the right level of permission for their role in the company.

Have you ever faced a permission system so complex that it was impossible to understand why a user was allowed to access a piece of information? Or, conversely, why they were not?
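The two audit questions described above can be answered from the same permission data. The sketch below assumes a hypothetical flat store of `(user, action, object)` triples; `who_can` answers the object-centric question and `scope_of` the user-centric one. All names and data are illustrative.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical flat permission store: (user, action, object_id) triples.
PERMISSIONS = {
    ("alice", "edit", "table:sales"),
    ("alice", "edit", "table:orders"),
    ("bob", "edit", "table:sales"),
    ("bob", "view", "table:orders"),
}


def who_can(action: str, object_id: str) -> set[str]:
    """Object-centric audit: which users may perform `action` on this object?"""
    return {u for (u, a, o) in PERMISSIONS if a == action and o == object_id}


def scope_of(user: str) -> dict[str, set[str]]:
    """User-centric audit: for each action, which objects can this user touch?"""
    scope: dict[str, set[str]] = defaultdict(set)
    for (u, a, o) in PERMISSIONS:
        if u == user:
            scope[a].add(o)
    return dict(scope)
```

A real system would index this data in both directions rather than scanning it, but the requirement is the same: both views must be cheap to produce and easy to read.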

Simplicity of Administration

Finally, a permission management system must remain manageable as the catalog grows. We live in a world of data: 2.5 exabytes of data were generated per day in 2020, and an estimated 463 exabytes per day will be generated in 2025. New projects, new products, new uses: companies must deal with the explosion of their data assets on a daily basis.

To remain relevant, a data catalog must evolve with the company’s data. The permission management system must therefore be resilient to changes in content or even to the movement of employees within the organization.

Different Approaches to Designing a Data Catalog Permission Management System

There are different approaches to designing a data catalog permission management system, each of which meets the characteristics described above to a different degree. In this article, we detail three of them.

Crowdsourcing

First, the crowdsourcing approach, where the collective is trusted to self-correct. A handful of administrators moderate the content and all users can contribute to the documentation. An auditing system usually complements this setup to make sure that no information is lost by mistake or malice. In this case, there is no control before documenting, but collective correction afterwards. This is typically the system chosen by online encyclopedias such as Wikipedia. Such systems depend on the number of contributors and their knowledge to work well, since self-correction is only effective when the collective is large enough.

This system perfectly meets the need for readability: all users have the same level of rights, so there is no ambiguity about what each user can do. It is also simple to administer: any new user has the same rights as everyone else, and any new object in the data catalog is accessible to everyone. On the other hand, there is no way to manage the granularity of rights. Everyone can see and do everything.
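A minimal sketch of the crowdsourcing model, assuming a hypothetical `CrowdDoc` class: edits are never checked beforehand, and the append-only revision history is what allows moderators to correct mistakes or vandalism afterwards.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CrowdDoc:
    """Hypothetical crowdsourced entry: anyone may edit, every revision is kept."""
    revisions: list[tuple[str, str]] = field(default_factory=list)  # (author, text)

    @property
    def text(self) -> str:
        return self.revisions[-1][1] if self.revisions else ""

    def edit(self, author: str, new_text: str) -> None:
        # No upfront permission check: every user is trusted to contribute.
        self.revisions.append((author, new_text))

    def revert(self, moderator: str, index: int) -> None:
        # Correction after the fact: an earlier revision is restored as a new
        # revision, so the faulty one stays in the history for auditing.
        self.revisions.append((moderator, self.revisions[index][1]))


doc = CrowdDoc()
doc.edit("alice", "Sales table, refreshed daily")
doc.edit("mallory", "")   # accidental or malicious blanking
doc.revert("admin", 0)    # a moderator restores the first revision
```

Nothing prevents the bad edit from happening; the design relies entirely on it being visible and reversible.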

Permission Attached to the User

The second approach to designing the permission management system is using solutions where the scope is attached to the user’s profile. When a user is created in the data catalog, the administrators assign a perimeter that defines the resources that they will be able to see and modify. In this case, all controls are done upstream and a user cannot access a resource inadvertently. This is the type of system used by an OS such as Windows for example.

This system has the advantage of being very secure: there is no risk that a new resource will be visible or modifiable by people who do not have the right to see or modify it. This approach also meets the need for readability: for each user, all accessible resources are easy to find. The level of granularity is also good, since access can be granted resource by resource.

On the other hand, administration is more complex: each time a new resource is added to the catalog, it must be added to the perimeters of the relevant users. This limitation can be overcome by creating dynamic scopes, that is, rules that assign resources to users, for example "all PDF files are accessible to a given user". But contradictory rules can easily appear, making the system harder to read.
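The following sketch illustrates how contradictory dynamic-scope rules can arise. `ScopeRule`, `ALICE_RULES`, and `conflicting_rules` are hypothetical; the first rule mirrors the PDF example above, and the second is a plausible rule added later for the same user.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ScopeRule:
    """Hypothetical dynamic-scope rule attached to a user profile."""
    name: str
    matches: Callable[[str], bool]  # predicate on a resource path
    allow: bool


# Alice's profile: two rules, possibly written at different times.
ALICE_RULES = [
    ScopeRule("all PDFs", lambda path: path.endswith(".pdf"), allow=True),
    ScopeRule("no finance docs", lambda path: path.startswith("/finance/"), allow=False),
]


def conflicting_rules(rules: list[ScopeRule], path: str) -> list[str]:
    """Return the names of the rules matching `path` if they disagree on allow/deny."""
    hits = [r for r in rules if r.matches(path)]
    if len({r.allow for r in hits}) > 1:
        return [r.name for r in hits]
    return []
```

For `/finance/budget.pdf` both rules match and disagree, so the system needs a precedence policy (for example, "deny wins") to decide. That policy is one more thing an auditor must understand to explain a user's access.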

Permission Attached to the Resource

The last major approach to designing a data catalog’s permission management system is to attach the authorized actions to the resource to be modified. For each resource, permissions are defined user by user, so each resource carries its own permission set. By looking at a resource, it is then possible to know immediately who can view or edit it. This is, for example, the model used by UNIX-like operating systems.

The need for readability is perfectly fulfilled: an administrator can immediately see the permissions of different users when viewing the resource. The same goes for granularity: this approach allows permissions to be granted at the most macro level through an inheritance system, or at the most micro level directly on the resource. Finally, in terms of ease of administration, each new user must be attached to the various resources, which can be tedious. However, group mechanisms can mitigate this complexity.
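Below is a minimal sketch of resource-attached permissions, assuming a hypothetical tree of `Resource` nodes, each carrying its own ACL, and a hypothetical `GROUPS` directory. Inheritance here is purely additive (a grant on a parent applies to all its descendants, and there are no deny entries), which is a deliberate simplification.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Resource:
    """Hypothetical catalog node that carries its own ACL."""
    name: str
    parent: Optional["Resource"] = None
    acl: dict[str, set[str]] = field(default_factory=dict)  # principal -> actions


GROUPS = {"finance-stewards": {"alice", "bob"}}  # hypothetical group directory


def principals_for(user: str) -> set[str]:
    # A user acts as themself and as every group they belong to.
    return {user} | {g for g, members in GROUPS.items() if user in members}


def can(user: str, action: str, resource: Resource) -> bool:
    """Walk up the hierarchy: a grant on an ancestor is inherited by descendants."""
    who = principals_for(user)
    node: Optional[Resource] = resource
    while node is not None:
        if any(action in node.acl.get(p, set()) for p in who):
            return True
        node = node.parent
    return False


def readable_acl(resource: Resource) -> dict[str, set[str]]:
    """Effective permissions on a resource, read directly off its ancestor chain."""
    merged: dict[str, set[str]] = {}
    node: Optional[Resource] = resource
    while node is not None:
        for p, actions in node.acl.items():
            merged.setdefault(p, set()).update(actions)
        node = node.parent
    return merged


catalog = Resource("catalog", acl={"admin": {"view", "edit"}})
finance = Resource("finance", parent=catalog, acl={"finance-stewards": {"edit"}})
ledger = Resource("ledger", parent=finance, acl={"carol": {"view"}})
```

Adding a new steward to `finance-stewards` gives them edit rights on the whole finance branch without touching any resource, which is the group mitigation mentioned above.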

The Data Catalog Permission Management Model: Simple, Readable and Flexible

Among these approaches, let’s detail the one chosen by the Actian Data Intelligence Platform and how it is applied.

The Resource Approach was Preferred

Let’s summarize the advantages and disadvantages of each approach. In both resource-based and user-based permission management systems, the need for granularity is well addressed: these systems allow permissions to be assigned resource by resource. In contrast, the basic philosophy of crowdsourcing is that anyone can access anything. Readability is clearly better in crowdsourcing systems and in systems where permissions are attached to the resource. It remains adequate in systems where permissions are attached to the user, but often at the expense of simplicity of administration. Finally, simplicity of administration is highest with the crowdsourcing approach; for the other two, it depends on what you will modify most often: the resources or the users.

Since the need for granularity is not met by the crowdsourcing approach, we eliminated it. That left two options: resource-based or user-based permission models. Since readability is slightly better with resource-based permissions, and since the content of the catalog will evolve faster than the number of users, the user-based option seemed the less relevant of the two.

The option we have chosen at the Actian Data Intelligence Platform was therefore the third one: user permissions are attached to the resource.

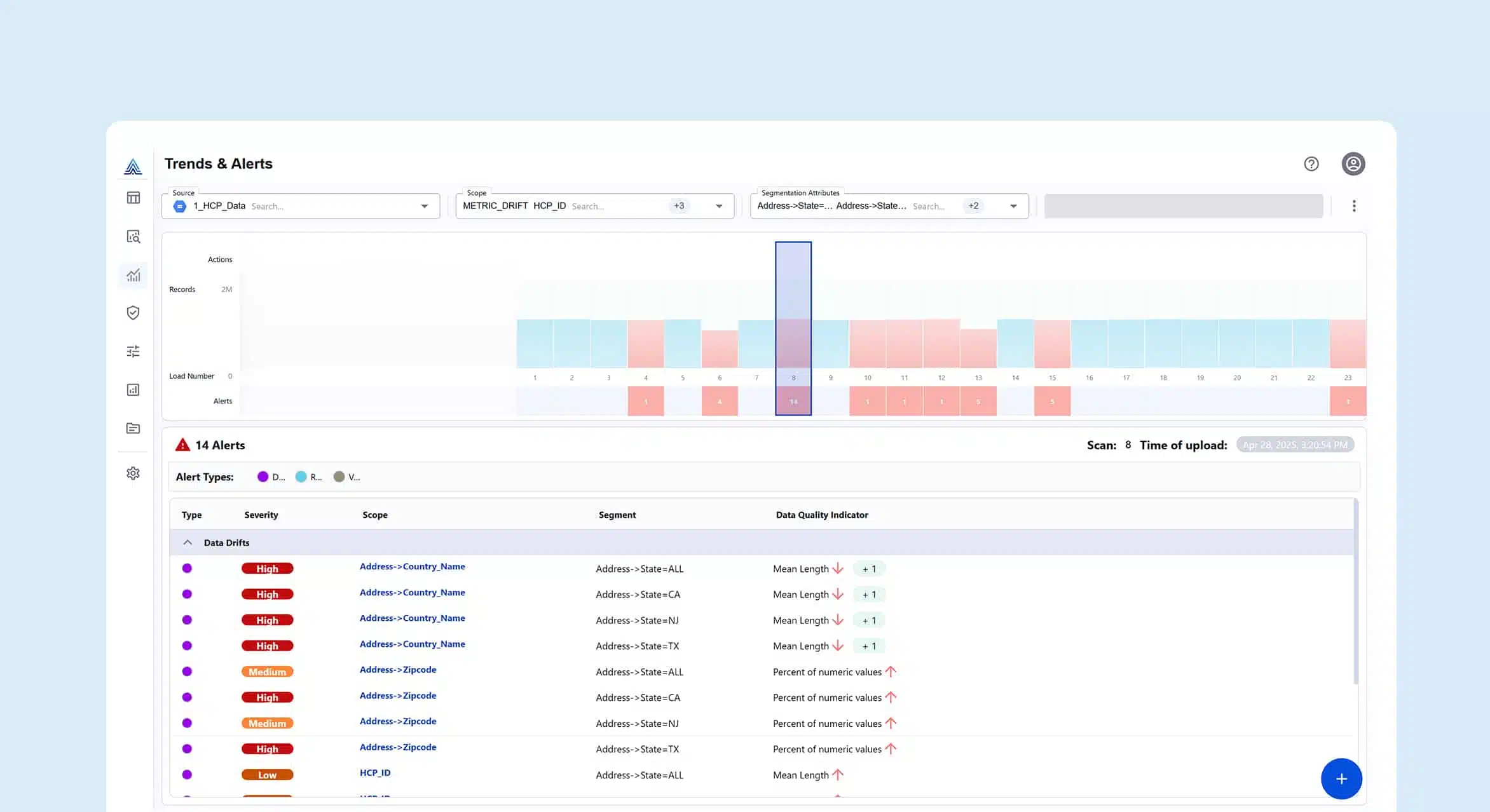

How the Data Catalog Permission Management System Works

In the Actian Data Intelligence Platform Data Catalog, it is possible to define for each user whether they have the right to manipulate the objects of the whole catalog, of one or several types of objects, or only those within their perimeter. This allows for the finest granularity, but also for more global roles. For example, "super-stewards" could have permission to act on entire parts of the catalog, such as the glossary.

We then associate a list of Curators with each object in the catalog, i.e., those responsible for documenting that object. Thus, simply by exploring the details of the object, one can immediately know who to contact to correct or complete the documentation, or to answer a question about it. The system is therefore readable and easy to understand. The users’ scope of action is precisely determined through a granular system, right down to the object in the catalog.
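The model described above can be sketched as follows. This is a hypothetical illustration of the three scope levels and the per-object curator list, not the Actian Data Intelligence Platform's actual data model or API; `UserScope`, `CatalogObject`, `can_edit`, and `contacts_for` are names invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CatalogObject:
    id: str
    type: str                                        # e.g. "dataset", "glossary_item"
    curators: set[str] = field(default_factory=set)  # users responsible for documenting it


@dataclass
class UserScope:
    """Hypothetical per-user scope, from broadest to narrowest."""
    whole_catalog: bool = False
    object_types: set[str] = field(default_factory=set)
    # If neither applies, the user may only edit objects they curate.


def can_edit(user: str, scope: UserScope, obj: CatalogObject) -> bool:
    if scope.whole_catalog:
        return True
    if obj.type in scope.object_types:
        return True
    return user in obj.curators


def contacts_for(obj: CatalogObject) -> set[str]:
    """Readability: the curator list on the object tells you who to ask."""
    return set(obj.curators)


SCOPES = {
    "dana": UserScope(whole_catalog=True),               # catalog-wide role
    "sam": UserScope(object_types={"glossary_item"}),    # "super-steward" of the glossary
    "lee": UserScope(),                                   # own perimeter only
}
term = CatalogObject("term:churn", "glossary_item")
sales = CatalogObject("dataset:sales", "dataset", curators={"lee"})
```

The object-type level is what lets a super-steward act on the whole glossary without being listed as a curator on every glossary item.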

When a new user is added to the catalog, their scope of action must then be defined. For the moment, this is configured through bulk editing of objects. To simplify management even further, it will soon be possible to define groups of users, so that when a new collaborator arrives there is no longer any need to add them by name to each object in their scope. Instead, they simply need to be added to the group, and the group’s scope will automatically apply to them.
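The group mechanism described as upcoming can be sketched as one level of indirection in the curator list. Everything here is hypothetical, including the `group:` prefix convention and the `onboard` helper; it only illustrates why a single membership change can replace per-object edits.

```python
from __future__ import annotations

# Hypothetical: curator lists may name users or groups (prefixed "group:").
GROUPS: dict[str, set[str]] = {"group:sales-stewards": {"lee"}}
OBJECT_CURATORS: dict[str, set[str]] = {
    "dataset:sales": {"group:sales-stewards"},
    "dataset:orders": {"group:sales-stewards", "kim"},
}


def effective_curators(object_id: str) -> set[str]:
    """Expand group entries into their current members."""
    result: set[str] = set()
    for entry in OBJECT_CURATORS.get(object_id, set()):
        if entry.startswith("group:"):
            result |= GROUPS.get(entry, set())
        else:
            result.add(entry)
    return result


def onboard(user: str, group: str) -> None:
    # One membership change instead of editing every object in the group's scope.
    GROUPS.setdefault(group, set()).add(user)
```

After `onboard("nina", "group:sales-stewards")`, Nina becomes a curator of both datasets without either object being edited.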

Finally, we deliberately chose not to implement a documentation validation workflow in the catalog. We believe that team accountability is one of the keys to successful data catalog adoption. This is why the only control we put in place is the one that determines each user’s rights and scope. Once these two elements have been set, the people responsible for the documentation are free to act. The system is complemented by an event log of modifications, which allows complete auditability, and by a discussion system on objects, which allows everyone to suggest changes or report errors in the documentation.
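The combination described above (a rights check as the only gate, changes applied immediately, and a complete event log) can be sketched as follows. `Catalog`, `ChangeEvent`, and their fields are hypothetical names, not the product’s actual schema.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical audit entry for one documentation change."""
    at: datetime
    user: str
    object_id: str
    attribute: str
    old: str
    new: str


class Catalog:
    def __init__(self, editors: dict[str, set[str]]) -> None:
        self.editors = editors                     # object_id -> users allowed to edit
        self.docs: dict[str, dict[str, str]] = {}  # object_id -> attribute -> value
        self.log: list[ChangeEvent] = []           # append-only event log

    def update(self, user: str, object_id: str, attribute: str, value: str) -> None:
        # The only gate is the rights/scope check: no review or approval step.
        if user not in self.editors.get(object_id, set()):
            raise PermissionError(f"{user} cannot edit {object_id}")
        doc = self.docs.setdefault(object_id, {})
        old = doc.get(attribute, "")
        doc[attribute] = value                     # takes effect immediately
        self.log.append(ChangeEvent(datetime.now(timezone.utc), user,
                                    object_id, attribute, old, value))

    def history(self, object_id: str) -> list[ChangeEvent]:
        """Every logged change on one object, oldest first."""
        return [e for e in self.log if e.object_id == object_id]
```

Because each event records the previous value, any change can be traced and undone without a prior validation step slowing curators down.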


About Actian Corporation

Actian empowers enterprises to confidently manage and govern data at scale. Actian data intelligence solutions help streamline complex data environments and accelerate the delivery of AI-ready data. Designed to be flexible, Actian solutions integrate seamlessly and perform reliably across on-premises, cloud, and hybrid environments. Learn more about Actian, the data division of HCLSoftware, at actian.com.