What Makes a Data Catalog “Smart”? #4 – The Search Engine

Actian Corporation

February 16, 2022

A data catalog harnesses enormous amounts of very diverse information, and its volume will grow exponentially. This raises two major challenges:

- How to feed and maintain the volume of information without tripling (or more) the cost of metadata management?

- How to find the most relevant datasets for any specific use case?

We think that a data catalog should be Smart to answer these two questions, with technological and conceptual features that go beyond the mere integration of AI algorithms.

In this respect, we have identified 5 areas in which a data catalog can be “Smart” – most of which do not involve machine learning:

- Metamodeling

- The data inventory

- Metadata management

- The search engine

- User experience

A Powerful Search Engine for an Efficient Exploration

Given the enormous volumes of data involved in an enterprise catalog, we consider the search engine the principal mechanism through which users can explore the catalog. The search engine needs to be easy to use, powerful, and, most importantly, efficient – the results must meet user expectations. Google and Amazon have raised the bar very high in this respect, and the search experience they offer has become a reference in the field.

This second-to-none search experience can be summed up thus:

- I write a few words in the search bar, often with the help of a suggestion system that offers frequent associations of terms to help me narrow down my search.

- The near-instantaneous response provides results in a specific order and I fully expect to find the most relevant one on page one.

- Should this not be the case, I can simply add terms to narrow the search down even further or use the available filters to cancel out the non-relevant results.

Alas, the best search capabilities currently on offer in the data cataloging market seem to be limited to capable indexing, scoring, and filtering systems. This approach is satisfactory when the user has a specific idea of what they are looking for (high intent search) but can prove disappointing when the search is more exploratory (low intent search) or when the idea is simply to spontaneously suggest relevant results to a user (no intent).

In short, simple indexing is great for finding information whose characteristics are well known but falls short when the search is more exploratory. The results often include false positives, and exact matches are over-represented at the top of the ranking.

A Multidimensional Search Approach

We decided from the get-go that a simple indexing system would prove limited and would fall short of providing the most relevant results for users. We therefore chose to isolate the search engine in a dedicated module on the platform and to make it a major area of innovation (and investment).

We naturally took an interest in the work of Google's founders on PageRank. PageRank itself scores a page from the links that point to it; Google's ranking combines it with several dozen other aspects (called features), among them the density of the relations between graph objects (hypertext links in the case of web pages), the linguistic treatment of search terms, and the semantic analysis of the knowledge graph.
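To make the link-density idea concrete, here is a minimal sketch of the published PageRank power iteration applied to a tiny graph of catalog objects. It is an illustration only, not Google's production ranking and not our engine's implementation; the dataset names, the `LINKS` graph, the damping factor of 0.85, and the iteration count are all assumptions chosen for the example.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Basic power iteration. Every link target must also be a key of `links`."""
    nodes = list(links)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node starts each round with the "random jump" share.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node, targets in links.items():
            if targets:
                # A node splits its damped rank evenly among the objects it links to.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A node without outgoing links spreads its rank over all nodes.
                for target in nodes:
                    new_rank[target] += damping * rank[node] / n
        rank = new_rank
    return rank


# Hypothetical catalog graph: "orders" references "customers", and so on.
LINKS = {
    "orders": ["customers"],
    "invoices": ["customers", "orders"],
    "customers": [],
}

ranks = pagerank(LINKS)
most_referenced = max(ranks, key=ranks.get)
```

In this toy graph the object that other objects point to most often ends up with the highest score, which is the intuition behind using relation density as one ranking feature among others.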

Of course, we do not have the means Google has, nor its expertise in search result optimization. But we have integrated into our search engine several features that provide highly relevant results, and those features are constantly evolving.

We have integrated the following core features:

- Standard, flat indexing of all the attributes of an object (name, description, and properties), weighted according to the type of property.

- An NLP (Natural Language Processing) layer that tolerates near misses (typing or spelling errors).

- A semantic analysis layer that relies on the processing of the knowledge graph.

- A personalization layer that currently relies on a simple user classification according to their uses, and will in the future be enriched by individual profiling.
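The first two features in the list above can be sketched in a few lines. The example below is a simplified illustration and not our actual implementation: the field weights, the similarity threshold, and the sample catalog are assumptions, and Python's standard `difflib.SequenceMatcher` stands in for a real NLP layer.

```python
from difflib import SequenceMatcher

# Hypothetical weights: a match on the name counts more than one on the description.
FIELD_WEIGHTS = {"name": 3.0, "properties": 2.0, "description": 1.0}
FUZZY_THRESHOLD = 0.8  # assumed similarity cut-off for accepting a near miss


def term_similarity(query_term: str, token: str) -> float:
    """Return 1.0 for an exact match and a lower ratio for a near miss."""
    return SequenceMatcher(None, query_term.lower(), token.lower()).ratio()


def score(query: str, item: dict) -> float:
    """Add, for each query term and field, the best token similarity times the field weight."""
    total = 0.0
    for term in query.split():
        for field, weight in FIELD_WEIGHTS.items():
            tokens = str(item.get(field, "")).split()
            best = max((term_similarity(term, t) for t in tokens), default=0.0)
            if best >= FUZZY_THRESHOLD:
                total += weight * best
    return total


def search(query: str, catalog: list[dict]) -> list[dict]:
    """Return the matching items, best score first."""
    scored = [(score(query, item), item) for item in catalog]
    hits = [(s, item) for s, item in scored if s > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in hits]


# Hypothetical catalog entries.
CATALOG = [
    {"name": "customer orders", "description": "daily order lines per customer", "properties": "sales"},
    {"name": "web sessions", "description": "clickstream for each customer visit", "properties": "marketing"},
    {"name": "supplier invoices", "description": "accounts payable", "properties": "finance"},
]

results = search("custmer", CATALOG)  # note the typo in the query
```

Despite the misspelled query, the dataset with "customer" in its name ranks ahead of the one that only mentions it in its description, and the unrelated dataset is left out. The semantic and personalization layers would add further terms to the score and are not covered by this sketch.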

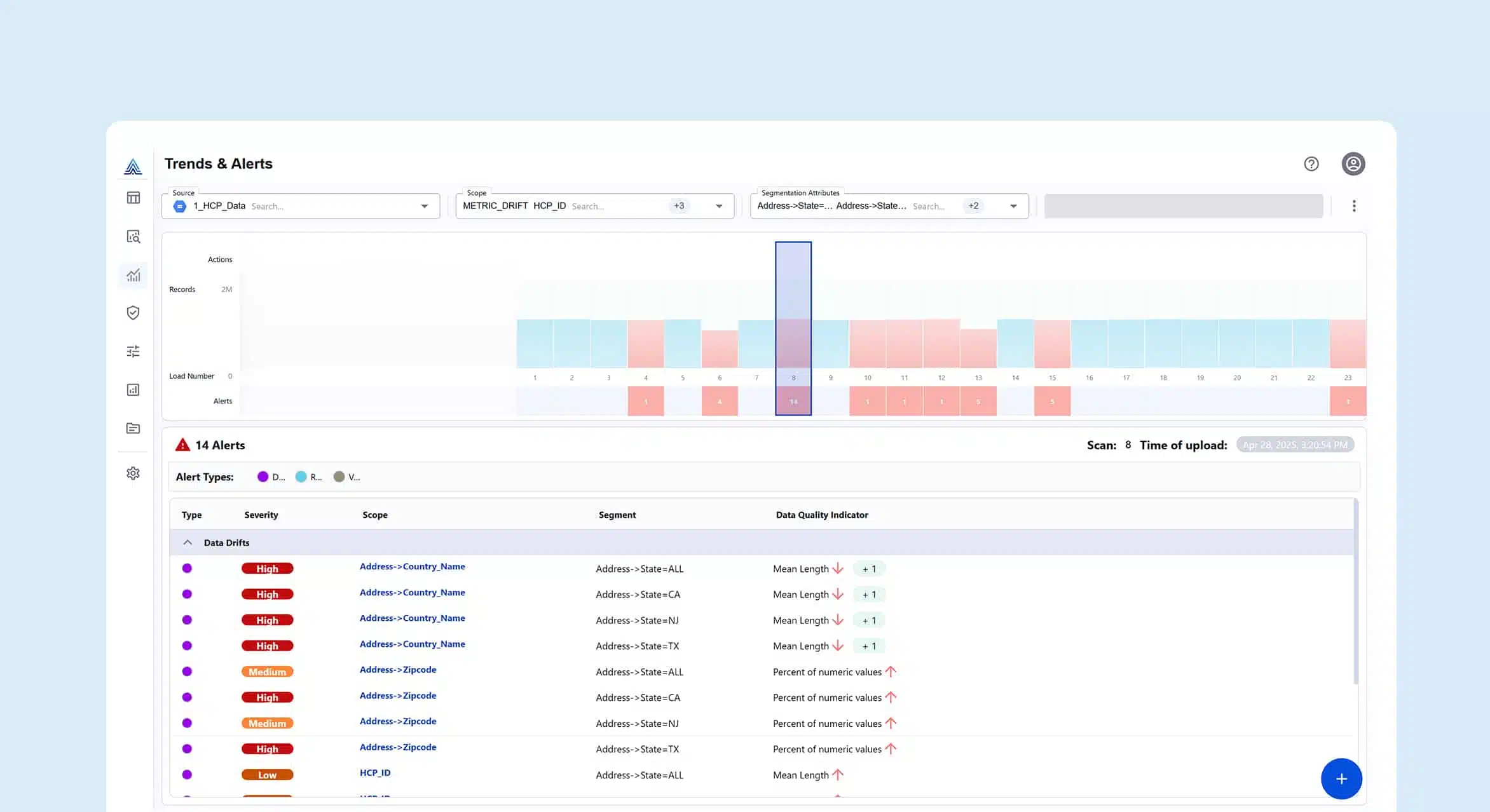

Smart Filtering to Contextualize and Limit Search Results

To complete the search engine, we also provide what we call a smart filtering system. Smart filtering is something we often find on e-commerce websites (such as Amazon, booking.com, etc.), and it consists of providing contextual filters to narrow down the search results. These filters work in the following way:

- Only those properties that help reduce the list of results are offered in the list of filters – non-discriminating properties do not show up.

- Each filter shows its impact – meaning the number of residual results once the filter has been applied.

- Applying a filter refreshes the list of results instantaneously.
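The three rules above can be illustrated with a short sketch. It is a simplified example under stated assumptions, not our implementation: the function names `smart_filters` and `apply_filter`, the property names, and the sample results are all hypothetical.

```python
from collections import Counter


def smart_filters(results: list[dict], properties: list[str]) -> dict[str, dict[str, int]]:
    """For each property, count the results per value and keep only values that narrow the list."""
    filters = {}
    for prop in properties:
        counts = Counter(r[prop] for r in results if prop in r)
        # A value is discriminating only if selecting it leaves fewer results than are shown now.
        useful = {value: n for value, n in counts.items() if n < len(results)}
        if useful:
            filters[prop] = useful
    return filters


def apply_filter(results: list[dict], prop: str, value: str) -> list[dict]:
    """Keep only the results whose property has the selected value."""
    return [r for r in results if r.get(prop) == value]


# Hypothetical search results.
RESULTS = [
    {"name": "customer orders", "domain": "sales", "format": "table", "status": "published"},
    {"name": "customer churn", "domain": "marketing", "format": "table", "status": "published"},
    {"name": "customer tickets", "domain": "support", "format": "file", "status": "published"},
]
PROPERTIES = ["domain", "format", "status"]

initial = smart_filters(RESULTS, PROPERTIES)
narrowed = apply_filter(RESULTS, "format", "table")
refreshed = smart_filters(narrowed, PROPERTIES)
```

Here `status` never appears as a filter because every result shares the same value, each offered value carries the number of results that would remain, and recomputing the filters after a selection drops `format`, which can no longer reduce the list.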

With this combination of multidimensional search and smart filtering, we believe we offer a search experience superior to that of our competitors. Our decoupled architecture also lets us explore new approaches continuously and rapidly integrate those that prove effective.

For more information on how a Smart search engine enhances a Data Catalog, download our eBook: “What is a Smart Data Catalog?”.
