The Consequences of Poor Data Quality: Uncovering the Hidden Risks

Actian Corporation

June 23, 2024

Summary

Poor data quality quietly drains millions in revenue, productivity, and trust. This blog outlines the hidden financial, operational, and compliance risks that stem from inaccurate or incomplete data.

- The average business loses $15 million annually due to poor data quality; in the U.S., this impact reaches $3.1 trillion across the economy.

- Employees spend up to 27% of their time correcting bad data, which slows decision-making and increases operational costs.

- Poor data undermines compliance efforts, damages brand reputation, and leads to missed market opportunities.

The quality of an organization’s data has become a critical determinant of its success. Accurate, complete, and consistent data is the foundation upon which crucial decisions, strategic planning, and operational efficiency are built. However, the reality is that poor data quality is a pervasive issue, with far-reaching implications that often go unnoticed or underestimated.

Defining Poor Data Quality

Before delving into the impacts of poor data quality, it’s essential to understand what constitutes subpar data. Data that is inaccurate, incomplete, duplicated, or inconsistently formatted all counts as poor-quality data. These problems can stem from various sources, such as data integration challenges, data capture inconsistencies, data migration pitfalls, data decay, and data duplication.
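To make these categories concrete, the following minimal sketch counts three of them (incomplete, duplicated, and inconsistently formatted values) in a handful of made-up customer records. The field names, the sample records, the phone format, and the `profile_records` helper are all hypothetical and not taken from any particular tool. Accuracy is left out because checking it would require a trusted reference source to compare against.

```python
import re
from collections import Counter

# Hypothetical sample records; the field names are illustrative assumptions.
RECORDS = [
    {"id": 1, "email": "ana@example.com", "phone": "555-0101"},
    {"id": 2, "email": "", "phone": "555-0102"},                 # missing email
    {"id": 3, "email": "Ana@Example.com ", "phone": "5550101"},  # repeats id 1, odd phone format
    {"id": 4, "email": "bob@example.com", "phone": "555-0104"},
]

# Assumed "house" phone format for this example only.
PHONE_PATTERN = re.compile(r"^\d{3}-\d{4}$")


def profile_records(records):
    """Count incomplete, duplicated, and inconsistently formatted records."""
    issues = Counter()
    seen_emails = set()
    for record in records:
        email = record["email"].strip().lower()
        if not email:
            issues["incomplete"] += 1
        elif email in seen_emails:
            issues["duplicated"] += 1
        else:
            seen_emails.add(email)
        if not PHONE_PATTERN.match(record["phone"]):
            issues["inconsistent_format"] += 1
    return dict(issues)


print(profile_records(RECORDS))
```

In this sample, each of the three categories is flagged once. A real profiling pass would cover more fields and rules, but the shape of the check is similar.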

The Hidden Costs of Poor Data Quality

- Loss of Revenue

Poor data quality can directly impact a business’s bottom line. Inaccurate customer information, misleading product details, and incorrect order processing can lead to lost sales, decreased customer satisfaction, and damaged brand reputation. Gartner estimates that poor data quality costs organizations an average of $15 million per year.

- Reduced Operational Efficiency

When employees waste time manually correcting data errors or searching for accurate information, it significantly reduces their productivity and the overall efficiency of business processes. This can lead to delayed decision-making, missed deadlines, and increased operational costs.

- Flawed Analytics and Decision-Making

Data analysis and predictive models are only as reliable as the data they are based on. Incomplete, duplicated, or inaccurate data can result in skewed insights, leading to poor strategic decisions that can have far-reaching consequences for the organization.

- Compliance Risks

Stringent data privacy regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), require organizations to maintain accurate and up-to-date personal data. Failure to comply with these regulations can result in hefty fines and reputational damage.

- Missed Opportunities

Poor data quality can prevent organizations from identifying market trends, understanding customer preferences, and capitalizing on new product or service opportunities. This can allow competitors with better data management practices to gain a competitive edge.

- Reputational Damage

Customers are increasingly conscious of how organizations handle their personal data. Incidents of data breaches, incorrect product information, or poor customer experiences can quickly erode trust and damage a company’s reputation, which can be challenging to rebuild.

Measuring the Financial Impact of Poor Data Quality

- Annual Financial Loss: Organizations face an average annual loss of $15 million due to poor data quality. This includes direct costs like lost revenue and indirect costs such as inefficiencies and missed opportunities (Data Ladder).

- GDP Impact: Poor data quality costs the U.S. economy approximately $3.1 trillion per year. This staggering figure reflects the extensive nature of the issue across various sectors, highlighting the pervasive economic burden (Experian Data Quality) (Anodot).

- Time Wasted: Employees can waste up to 27% of their time dealing with data issues. This includes time spent validating, correcting, or searching for accurate data, significantly reducing overall productivity (Anodot).

- Missed Opportunities: Businesses can miss out on 45% of potential leads due to poor data quality, including duplicate data, invalid formatting, and other errors that hinder effective customer relationship management and sales efforts (Data Ladder).

- Audit and Compliance Costs: Companies may need to spend an additional $20,000 annually on staff time to address increased audit demands caused by poor data quality. This highlights the extra operational costs that come with maintaining compliance and accuracy in financial reporting (CamSpark).
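As a rough illustration of how the time-wasted figure translates into money, the sketch below applies the 27% upper bound cited above to a hypothetical team. The headcount, the fully loaded cost per employee, and the `wasted_labor_cost` helper are invented for the example and do not come from the sources cited here.

```python
def wasted_labor_cost(headcount, annual_cost_per_employee, wasted_share):
    """Estimate the yearly labor cost spent dealing with data issues."""
    if not 0 <= wasted_share <= 1:
        raise ValueError("wasted_share must be between 0 and 1")
    return headcount * annual_cost_per_employee * wasted_share


# Hypothetical inputs: 50 employees at a fully loaded cost of $80,000 each.
# 0.27 is the "up to 27%" upper bound, so this is a worst-case estimate.
estimate = wasted_labor_cost(
    headcount=50,
    annual_cost_per_employee=80_000,
    wasted_share=0.27,
)
print(f"Estimated annual cost of time lost to data issues: ${estimate:,.0f}")
```

With these assumed inputs the estimate comes to $1,080,000 per year. Substituting an organization's own headcount, labor cost, and measured share of time lost gives a more realistic number.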

Strategies for Improving Data Quality

Addressing poor data quality requires a multi-faceted approach encompassing organizational culture, data governance, and technological solutions.

- Fostering a Data-Driven Culture

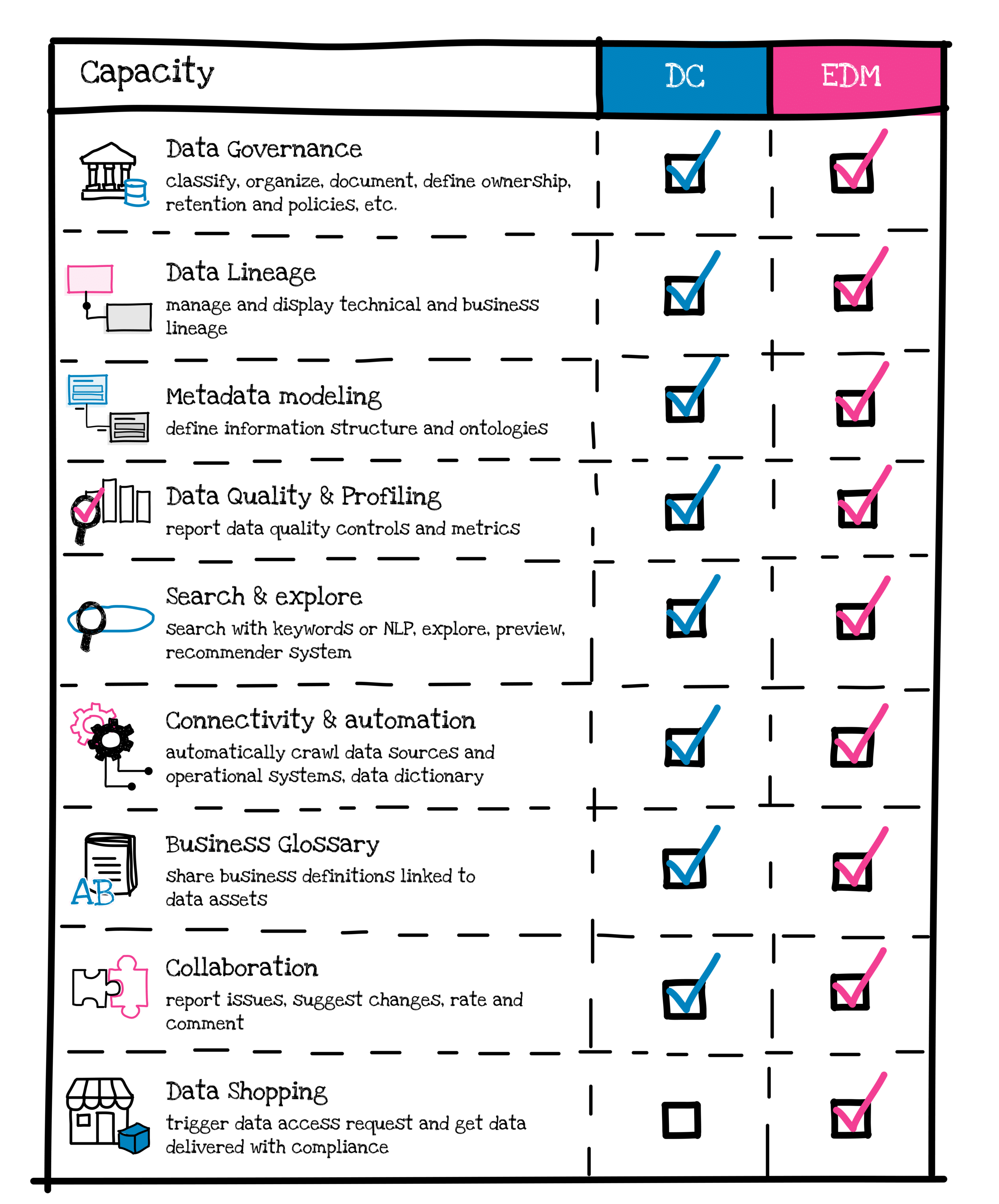

Developing a workplace culture that prioritizes data quality is essential. This involves establishing clear data management policies, standardizing data formats, and assigning data ownership responsibilities to ensure accountability.

- Implementing Robust Data Governance

Regularly auditing data quality, cleaning and deduplicating datasets, and maintaining data currency are crucial to maintaining high-quality data. Automated data quality monitoring and validation tools can greatly enhance these processes.

- Leveraging Data Quality Solutions

Investing in specialized data quality software can automate data profiling, cleansing, matching, and deduplication tasks, significantly reducing the manual effort required to maintain data integrity.
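As a minimal sketch of what the cleansing, matching, and deduplication steps involve, the example below normalizes a few hypothetical contact records and keeps one record per matching key. The record fields, the `normalize` and `deduplicate` helpers, and the exact-match-on-email rule are assumptions made for illustration; dedicated tools may apply looser, fuzzier matching rules than this.

```python
def normalize(record):
    """Cleanse one record: tidy the name, lowercase the email, keep only phone digits."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
        "phone": "".join(ch for ch in record["phone"] if ch.isdigit()),
    }


def deduplicate(records):
    """Keep the first record seen for each normalized email (the matching key)."""
    unique = {}
    for record in records:
        cleaned = normalize(record)
        unique.setdefault(cleaned["email"], cleaned)
    return list(unique.values())


# Hypothetical input: the first two rows describe the same person.
contacts = [
    {"name": "ana  lopez", "email": "Ana@Example.com ", "phone": "(555) 010-1000"},
    {"name": "Ana Lopez", "email": "ana@example.com", "phone": "555-010-1000"},
    {"name": "Bob Chen", "email": "bob@example.com", "phone": "555 010 2000"},
]

for contact in deduplicate(contacts):
    print(contact)
```

Normalizing before matching is the key design choice here: the two "Ana Lopez" rows only collapse into one because their emails are trimmed and lowercased first.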

The risks and costs associated with poor data quality are far-reaching and often underestimated. By recognizing the hidden impacts, quantifying the financial implications, and implementing comprehensive data quality strategies, organizations can unlock the true value of their data and position themselves for long-term success in the digital age.
