Data Literacy: The Foundation for Effective Data Governance

Actian Corporation

November 2, 2021

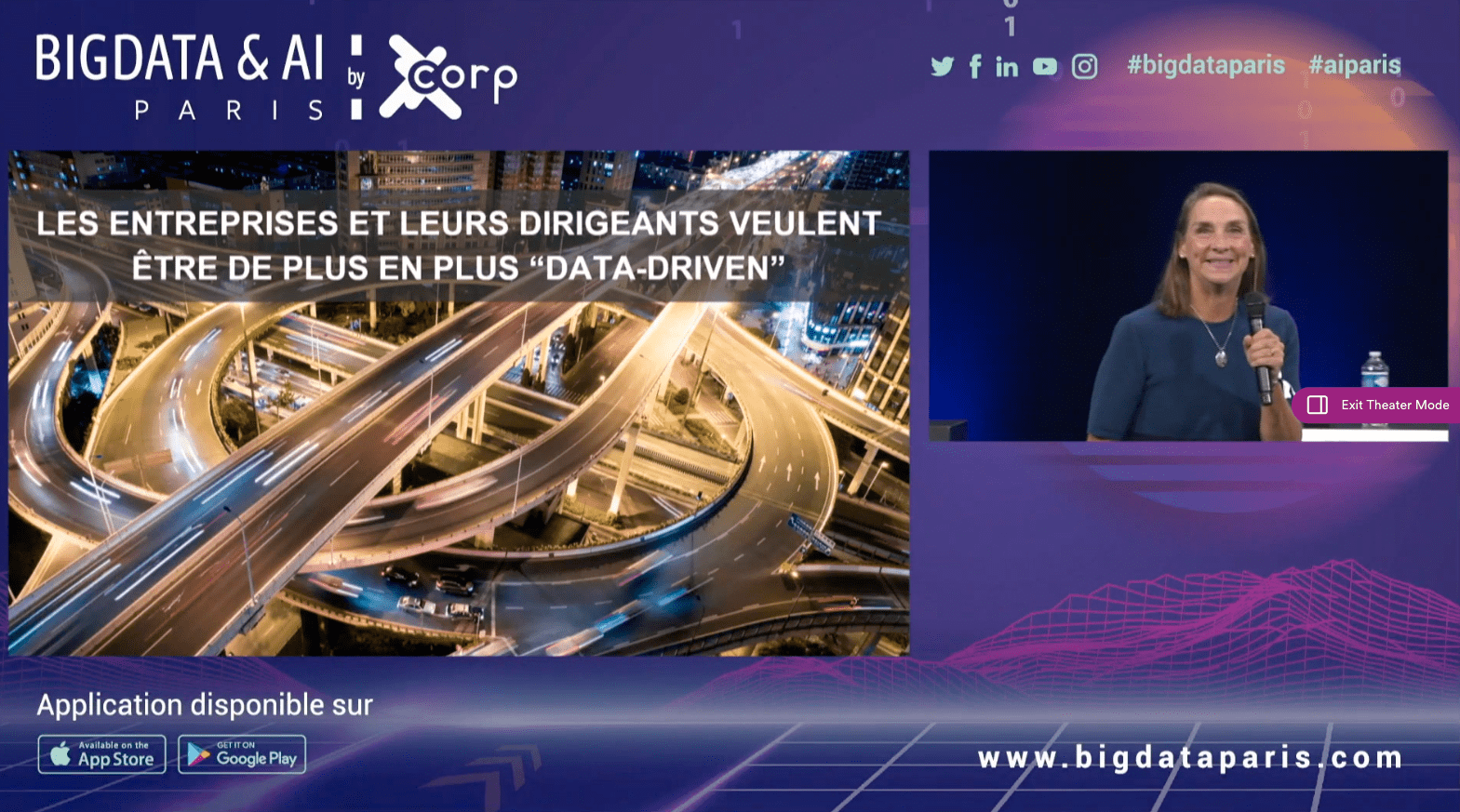

On September 28th and 29th, we attended several conferences at Big Data & AI Paris 2021. One of them caught our attention because it covered a very trendy topic: data literacy. In this article, we present the best practices for implementing data literacy that Jennifer Belissent, former Forrester analyst and now Principal Data Strategist at Snowflake, shared during her presentation. She also explained why this practice is essential for effective data governance.

The Data-Driven Enterprise

It’s no secret that today, every company wants to become data-driven, and everyone is looking for data. Data is no longer reserved for a particular person or team; it concerns every department of the organization. From reporting to predictive analytics to machine learning algorithms, data must be built into the company’s applications and processes so that it directly informs the organization’s strategic decisions.

To do this, Jennifer says: “Silos must be broken down throughout the company! We need to give access to internal data, of course, but we must not neglect external data, such as data from suppliers, customers, and partners. We use it, and today we are even dependent on it.”

What is Data Literacy?

Data literacy is the ability to identify, collect, process, analyze, and interpret data in order to understand the transformations, processes, and behaviors it reflects.

However, many employees lack knowledge about data and analytics because they do not recognize what data is or the value it brings to the company. Yet every employee has a role to play. To achieve better data governance, organizations must establish a data literacy program.

The Challenges of Data Governance

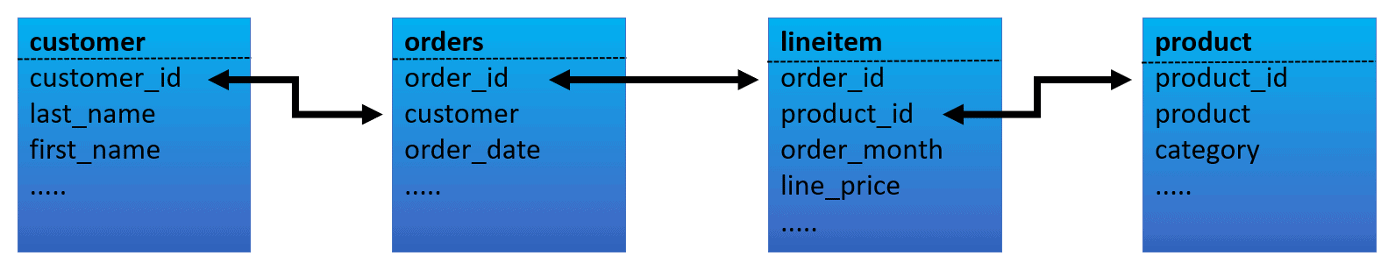

The colossal amounts of data an organization generates must be properly managed and governed to extract maximum value from them. Jennifer presented the three major challenges identified at Snowflake:

- Data is Everywhere: Whether it sits in analytics systems, storage locations, or Excel files, it is hard to get a complete picture of a company’s data when that data isn’t shared.

- Data Management is Complex: It’s hard to manage all this data from various sources. Where is the data? What does it contain? Who owns it? The answers to these questions require centralized visibility and control.

- Security and Governance are Rigid: Data security is very often tied to the organization’s data silos. Securing and governing this data requires a unified, consistent, and flexible policy.

But that’s not all! There is a fourth challenge: the lack of data literacy.

The Consequences of a Lack of Data Literacy in an Organization

To illustrate what data literacy means, Jennifer told us an anecdote. In early 2020, during the first lockdown in France, she was talking with the Chief Data Officer at Sodexo. The CDO told her that an analysis of data related to their website had revealed an interesting fact: a morning spike in sausage purchases.

The CDO found this increase strange, since “breakfast sausages” are not a usual part of the French breakfast.

Upon further investigation, the CDO discovered that the spike coincided with Sodexo replacing its traditional point-of-sale cash registers with automated kiosks. These kiosks had a button for each item to make orders easier to manage. The cause was soon identified: the cashier in charge of the new kiosks had no idea what the buttons represented and kept pressing them, unaware that each press was recording data! Fortunately, Sodexo noticed the anomaly; otherwise, the company would have ordered a huge stock of sausages…

Following this story, Jennifer described a qualitative study she conducted with Forrester, which asked three questions:

1. Do you work with data?

2. Are you comfortable with data?

3. If not, what training would help you feel more comfortable with data?

The answers were surprising! Jennifer explained that Forrester had expected the last question to be the most important one. Instead, it was the answers to the first question that stood out: many respondents said they didn’t work with data at all because “they didn’t work with spreadsheets or calculations.”

On the other hand, those who said they were comfortable with data deeply distrusted their colleagues: they saw themselves as the only ones who understood the data and therefore worried about the mistakes their coworkers might make.

“So there were two major problems with data: getting useful and reliable data, but more importantly, most people in this study didn’t even know they were working with data!” says Jennifer.

Lack of Data Literacy Undermines Data Governance

According to Jennifer, a data-literate person is usually defined as someone who can read, understand, create, and communicate data. But she doesn’t think that’s enough: “You also have to be able to recognize data. As we’ve seen, many people today don’t know what data is.”

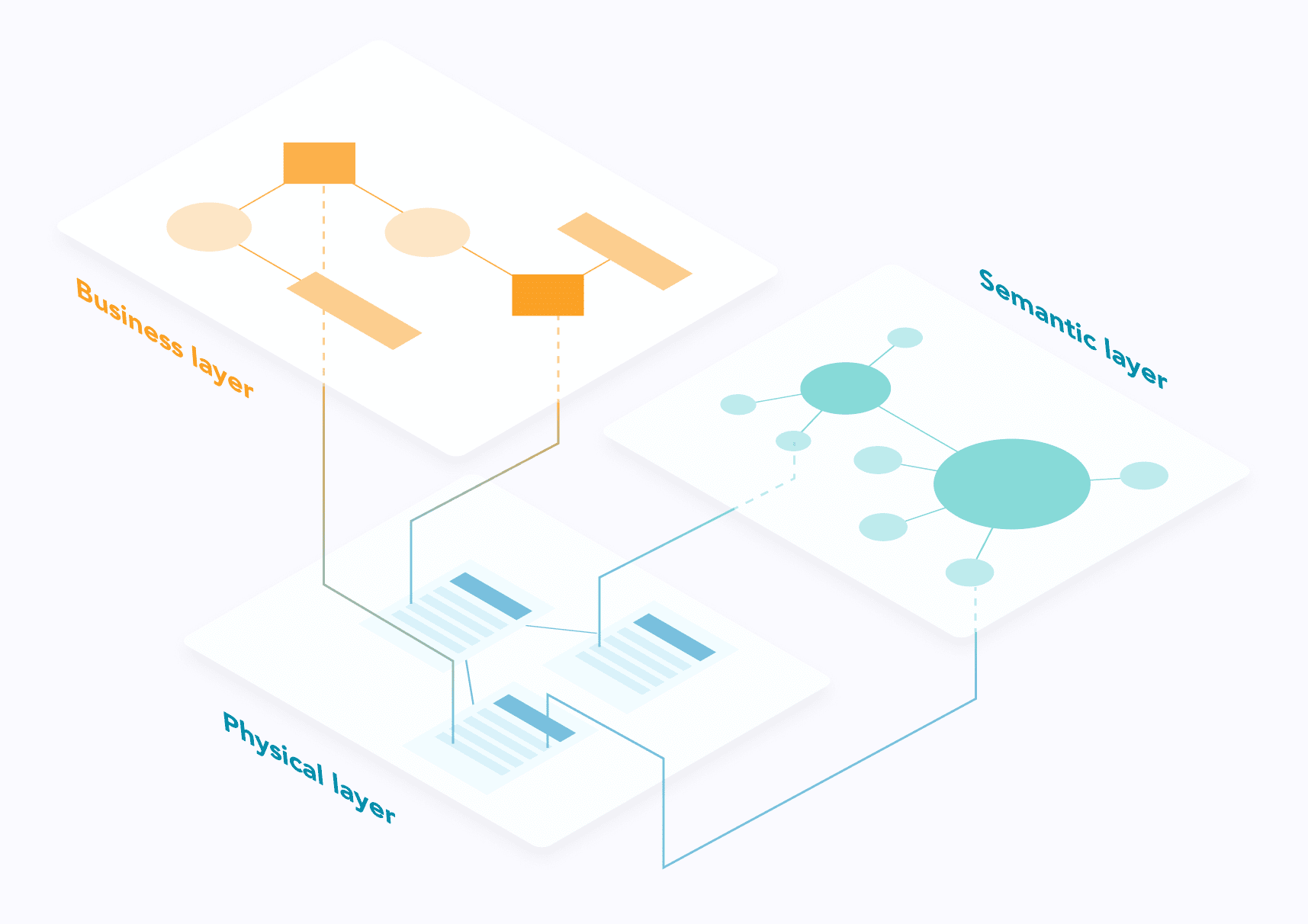

For many, data governance is associated only with security. In reality, governance spans the entire value chain and the entire life cycle of data. According to Jennifer, data governance rests on three pillars:

- Know the Data: Understand, classify, and track data and its use; know who owns it, whether it is of good quality, whether it is sensitive, and so on.

- Protect Data: Secure sensitive data with access controls based on internal policies and external regulations.

- Liberate Data: Convey the potential of data and enable teams to share it.

Data literacy surrounds and supports these three pillars: better data literacy leads to better data governance.

Best Practices in Data Literacy

A data literacy program should not be reserved for experts; it should start at the bottom of the pyramid, for example as early as a new employee’s onboarding.

Jennifer suggests that companies wishing to become data-driven rely on a data literacy program that meets four objectives:

- Raise Awareness: Make all employees aware of what data is, why it matters, what role each person plays with regard to data, and, above all, the value it brings to the company.

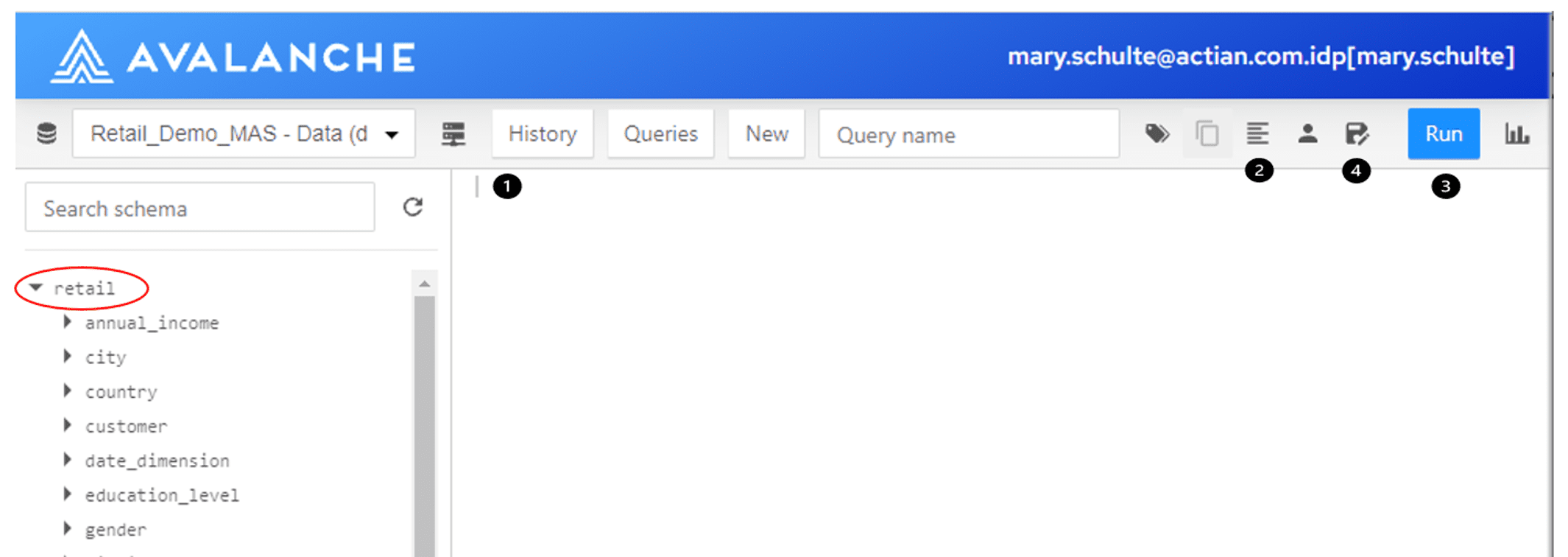

- Improve Understanding: Employees who are expected to use data are often intimidated by it and do not always understand it. It is therefore important to give them the right tools, help them ask the right questions, and explain the logic behind analyses so they can make better decisions.

- Enrich Expertise: This means putting the best technical tools and practices in place, but also making sure they are actually used.

- Enable Scaling: Your company’s data experts are what make scaling possible, helping to build a data community and a data culture. To do so, these experts must pass on their knowledge to the whole company.

To conclude, Jennifer shares one last analogy:

“For data-driven companies, data governance represents the traffic laws, and data literacy is the foundation.”
