Modern enterprises face challenges governing vast datasets across hybrid‑cloud environments while maintaining compliance and enabling AI‑driven insights. Knowledge graphs have emerged as a strategic solution, offering federated metadata management, intelligent discovery, and automated governance. This guide examines leading platforms and explains how the Actian Data Intelligence Platform addresses governance pain points through its unified, AI‑ready architecture.

Who Should Consider a Knowledge Graph for Data Governance

Governance pain points that signal a graph is needed

Several governance challenges indicate that a knowledge graph can deliver meaningful impact:

-

Data silos hide lineage and ownership, making impact analysis impossible. Knowledge graphs visualize relationships across domains, mapping upstream dependencies to downstream analytics.

-

Stale or conflicting metadata occurs when teams maintain separate definitions for the same concepts, leading to inconsistent reporting. Knowledge graphs enable automated synchronization, ensuring definitions remain current through real‑time metadata propagation.

-

Incomplete impact analysis impedes confident decision‑making around data changes. Graph‑based platforms allow “what‑if” queries across dependent assets, showing the ripple effect of proposed changes.

67% of enterprises cite “metadata fragmentation” as their top governance challenge. Organizations using graph-based governance report a 40% faster time-to-insight compared to catalog-only approaches.

Data lineage records data’s origins, movements, transformations, and dependencies — essential for compliance and impact analysis.

Ideal organization size, data volume, and cloud footprint

Knowledge graphs deliver value to enterprises managing substantial data volumes across complex infrastructures:

- Large enterprises with over 10 TB of structured and unstructured data.

- Multi‑cloud organizations managing over 5 PB across AWS, Azure, and GCP.

- Hybrid environments requiring real‑time synchronization between on‑premises and cloud systems.

Mid‑size to large firms benefit from hybrid‑cloud scalability, where federated architectures eliminate the need to centralize all metadata.

| Data Volume | Recommended Solution | Key Considerations |

|---|---|---|

| < 5 TB | Traditional catalog | Simpler tools may suffice |

| 5–10 TB | Graph pilot project | Test with critical use cases |

| > 10 TB | Full graph implementation | Graph becomes essential |

| > 100 TB | Federated graph architecture | Requires distributed approach |

Regulatory triggers that drive graph adoption

Compliance mandates increasingly require sophisticated lineage tracking and automated governance controls:

- GDPR and CCPA demand precise data subject access and “right‑to‑be‑forgotten” capabilities. Knowledge graphs support these requirements through traceable lineage.

- HIPAA and healthcare regulations require detailed audit trails and access controls for protected health information. Graph‑based governance automatically tracks data access.

- Industry‑specific mandates like Basel III for banking require demonstrable data quality and lineage documentation. Knowledge graphs provide automated evidence collection.

Organizations treating compliance as a strategic differentiator consistently outperform peers in risk management and innovation velocity.

Actian Data Intelligence Platform – A Strategic Advantage

Federated knowledge graph unifies edge to multi‑cloud

Actian Data Intelligence Platform governs distributed data without requiring centralization, delivering a unified governance layer across hybrid and multi‑cloud environments. At its core is a federated knowledge graph that connects metadata wherever it resides — from edge systems to enterprise clouds.

Unlike traditional catalogs that require aggregating metadata into a single repository, Actian’s federated approach creates a semantic overlay that links operational, analytical, and domain‑specific metadata. Each domain retains ownership of its metadata through localized graph stores, with changes synchronized automatically via Actian’s global metadata service.

This real‑time synchronization ensures consistent definitions, lineage, and governance policies without manual effort — enabling faster impact analysis and stronger compliance across complex infrastructures.

Example: A global enterprise uses Actian’s federated knowledge graph to unify governance for datasets spanning multiple clouds and on‑prem systems, achieving complete lineage and automated compliance without moving sensitive data from its source.

CI/CD‑integrated data contracts enforce quality

Data contracts shift governance from reactive to proactive. Actian embeds schema definitions, quality rules, and service‑level agreements into CI/CD pipelines, automating governance in the development process.

A typical workflow involves:

- Developer commits code changes to a Git repository.

- Pipeline runs contract validation tests.

- Quality checks verify schema compatibility and data freshness.

- Successful validation triggers automatic publication to the data catalog.

- Failed validation blocks deployment and notifies stakeholders.

This approach reduces data quality incidents by up to 60% after implementing contract‑driven governance.

Data contracts formalize agreements between data producers and consumers, codifying schema expectations, quality requirements, and service‑level commitments.

Built‑in lineage, security, and compliance controls

Actian offers enterprise‑grade governance controls that address demanding compliance requirements:

- End‑to‑end lineage tracking captures data movement from source to consumption.

- Role‑based access controls enforce least‑privilege principles.

- Encryption at rest and in flight protects sensitive data.

- Comprehensive audit logs provide tamper‑proof records for compliance.

- Automated data classification identifies and tags sensitive information.

- Policy enforcement applies governance rules based on data classification.

These controls create a governance‑by‑design approach where compliance is built‑in and automatic.

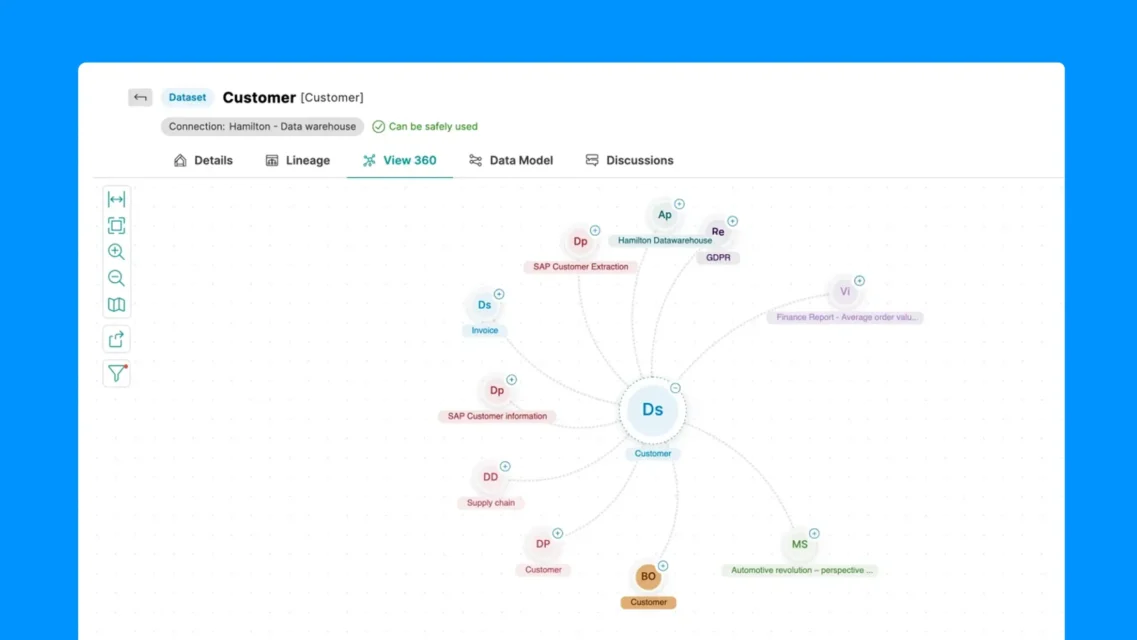

Real‑time discovery with the Explorer app

The Explorer application transforms data discovery into an intuitive, Google‑like experience. Users can perform instant graph traversal, semantic search, and visual lineage exploration through a single interface.

For example, a business analyst searching “customer‑order‑status” receives a ranked list of related data products, including relevant datasets, dependencies, and visual lineage maps. This capability accelerates time‑to‑insight for both technical and business users.

Evaluation Criteria for Selecting a Knowledge Graph

Scalability and performance in hybrid‑cloud environments

Evaluate knowledge graph platforms based on their ability to:

- Scale horizontally to over 100 billion edges without performance degradation.

- Maintain query latency under 1 second for complex graph traversals.

- Support distributed deployment across multiple cloud regions and on‑premises data centers.

- Handle concurrent users with consistent performance.

Automated metadata synchronization and governance automation

Require platforms that provide:

- Bi‑directional synchronization with catalog tools like Collibra and Alation.

- Support for metadata standards including ISO 11179 and FAIR principles.

- API‑first architecture for custom integrations.

- Real‑time change propagation to update dependent systems.

- Conflict resolution for inconsistent metadata.

Automated synchronization eliminates the manual effort that makes traditional governance unsustainable.

AI‑ready semantics, search, and inference capabilities

Evaluate platforms based on built‑in AI capabilities:

- Natural language processing for automatic metadata enrichment.

- Embedding generation for semantic similarity search.

- Graph‑based inference for discovering hidden relationships.

- Machine learning integration with frameworks like TensorFlow.

- Automated ontology construction from existing data schemas.

Knowledge graphs are essential infrastructure for AI initiatives, with 78% of organizations planning to implement graph‑based AI solutions within two years.

Integration ecosystem and API‑first design

Essential integration capabilities include:

- REST, GraphQL, and SPARQL endpoints for flexible API access.

- Pre‑built connectors for major data warehouses.

- Lakehouse integration with Delta Lake and Apache Iceberg.

- Streaming platform support for Kafka and Kinesis.

- Business intelligence tools including Tableau and Power BI.

API‑first design ensures the knowledge graph can adapt to evolving technology stacks.

Feature‑by‑Feature Comparison – Actian vs. Leading Vendors

While graph databases and governance tools address isolated aspects of metadata management, Actian unifies both. The table below summarizes where Actian’s federated knowledge graph differentiates itself.

| Feature | Actian | Neo4j | Amazon Neptune |

|---|---|---|---|

| Graph model | Multi‑model (property graphs + RDF triples) | Property graph only | Must choose one model per cluster |

| Data productization | Studio app with embedded data contracts, CI/CD governance | Requires external tooling | Limited governance capabilities |

| Lineage | Real‑time, interactive lineage maps via Explorer app | Static lineage; manual updates required | Limited lineage features |

| Deployment | Hybrid, cloud, SaaS | Managed service only, limited on‑prem | AWS‑only managed |

| Pricing | Transparent node‑based subscription | Consumption‑based, premium tiers | Pay‑per‑instance, hidden fees |

Cost, ROI, and Total Cost of Ownership

Licensing structures and hidden fees

Actian offers transparent node‑based subscriptions that include enterprise‑grade governance features. Competitor models often require premium tiers or additional services that increase cost.

Implementation effort and time‑to‑value

Implementation timelines vary by scope:

- Large enterprise rollout: 6–9 months.

- Pilot projects: 3–4 months.

- Proof of concept: 4–6 weeks.

Actian’s “zero‑code onboarding” reduces implementation effort by about 30%.

Quantified ROI

Case studies show:

- Data onboarding reduced from days to minutes — saving $1.2 M annually for a major bank.

- 2–3× faster query performance for graph‑based operations vs. relational joins.

- Significant reductions in data discovery time and quality incidents.

Support, services, and ecosystem costs

Actian professional services offer implementation consulting, custom integration development, and training programs. Comprehensive training boosts adoption rates by up to 40%.

How is a Knowledge Graph Used by Businesses?

Businesses use knowledge graphs to unlock the value of data by making it more connected, contextual, and usable. In today’s data-driven economy, companies face the challenge of dealing with siloed data isolated across different departments and systems. A knowledge graph helps break down those silos and create a unified view.

Here are six common business applications:

1. Customer 360-Degree View

By linking customer interactions, transactions, and behaviors across platforms, companies can build a complete, up-to-date view of each customer. This enables more personalized marketing, proactive customer service, and better sales targeting.

2. Product Information Management

Retailers and manufacturers can use knowledge graphs to organize complex product catalogs, connect related items, and manage specifications across brands and categories.

3. Enterprise Searches

Instead of relying on traditional keyword-based searches, businesses can implement semantic searches using knowledge graphs. This lets employees find information based on meaning and relationships, not just text matches.

4. Fraud Detection

By modeling relationships between users, transactions, and accounts, knowledge graphs help detect suspicious patterns that indicate fraud, such as unusual connections between accounts.

5. Recommendation Systems

Whether it’s for individuals choosing movies, books, or products, knowledge graphs enhance recommendations by understanding the relationships between a user’s preferences and available items.

6. Regulatory Compliance

Businesses in finance, healthcare, and pharmaceuticals use knowledge graphs to track data lineage, manage sensitive information, and comply with regulations like GDPR or HIPAA.

In short, knowledge graphs turn raw data into strategic insights, enabling smarter, faster business decisions.

How is a Knowledge Graph Different From a Traditional Database?

Traditional databases and knowledge graphs both store and manage data, but they serve different purposes and have different structures. Here are five key differences:

1. Structure: Tables vs. Graphs

- Traditional databases, like SQL databases, use tables with rows and columns to store data.

- Knowledge graphs use nodes and edges to represent entities and their relationships.

2. Focus: Data vs. Relationships

- Databases are optimized for storing and retrieving data efficiently.

- Knowledge graphs are designed to model relationships and context, making it easier to perform complex queries across connected information.

3. Schema Flexibility

- Traditional databases require a fixed schema, which means changes can be slow and disruptive.

- Knowledge graphs allow for schema evolution. Users can add new types of data or relationships without redesigning the entire structure.

4. Query Language

- SQL is used to query relational databases.

- SPARQL for RDF graphs or Cypher for property graphs like Neo4j is used to query knowledge graphs.

5. Data Integration

- Knowledge graphs are better suited for integrating diverse data sources, thanks to their flexible and semantic nature.

What are Key Components of a Knowledge Graph?

A knowledge graph is made up of several building blocks that work together to create a rich, semantic network of information. Here are the six most important components:

1. Entities (Nodes)

These are the “things” the graph represents. They can be datapoints like people, organizations, locations, products, etc.

2. Relationships (Edges)

These are the connections between entities, such as “works_for,” “founded_by,” or “located_in.” Relationships are as important as the entities themselves.

3. Properties (Attributes)

Each entity or relationship can have metadata or attributes. For example, a “Person” entity might have attributes such as “name,” “birthdate,” or “email.”

4. Ontology (Schema)

This is the underlying model that defines the types of entities, relationships, and their rules. Ontologies provide consistency, helping both machines and humans understand what each part of the graph means.

5. Triples (for RDF Graphs)

In RDF-based graphs, data is stored as triples:

Subject – Predicate – Object

For example:

Steve Jobs – founded – Apple

6. Graph Database or Triple Store

This is the engine that stores and queries the graph. Examples include Neo4j (property graph), GraphDB (RDF), and Amazon Neptune (hybrid).

Together, these components enable knowledge graphs to model complex domains in a highly connected, scalable, and semantically rich way.

How are Knowledge Graphs Used for AI?

Knowledge graphs play a pivotal role in artificial intelligence (AI) by providing structured, interpretable, and explainable knowledge. While AI models like neural networks are often treated as “black boxes,” knowledge graphs offer transparency and reasoning capabilities.

Five key uses for AI include:

1. Knowledge Augmentation for NLP

Natural Language Processing (NLP) applications use knowledge graphs to enhance context. For example, chatbots can reference a knowledge graph to clarify terms, answer questions, or provide consistent definitions.

2. Contextual Reasoning

AI models can use knowledge graphs to make inferences and logical deductions. If a graph knows that “X is a type of Y” and “Y has a feature Z,” it can infer that “X probably has Z.”

3. Semantic Search and Question Answering

Search engines and virtual assistants like Siri or Alexa use knowledge graphs to map user queries to real-world entities and relationships. This improves result accuracy and relevance.

4. Explainability

In machine learning, knowledge graphs help explain why a model made a decision. For example, a recommendation engine might show this reasoning:

“We suggested this book because you liked another book by the same author.”

5. Hybrid AI Systems

Modern AI systems increasingly combine statistical AI such as neural networks with symbolic AI like knowledge graphs. This hybrid approach improves robustness, especially in critical areas like healthcare and finance.

In essence, knowledge graphs give AI systems memory, context, and logic, allowing them to reason more like humans.

How is Data Quality Maintained in a Knowledge Graph?

Maintaining data quality in a knowledge graph is crucial because inaccurate or inconsistent data can corrupt relationships and lead to faulty insights. Here are six key strategies used to ensure data integrity:

1. Schema Validation

The graph’s ontology enforces rules about what types of entities and relationships are allowed. Violations can be flagged automatically.

2. Entity Resolution

Also known as deduplication, this involves identifying when different data entries refer to the same real-world entity. For example, “IBM” and “International Business Machines” likely refer to the same company.

3. Data Provenance and Lineage

Tracking where data comes from, or provenance, and how it has changed over time, shown in data lineage, helps ensure trust and accountability.

4. Automated Inference and Consistency Checks

Graphs can use reasoning engines to infer missing data or detect contradictions. For instance, if a person’s birthdate is after their graduation date, the graph can flag it.

5. Curation Tools

Many organizations offer user-friendly interfaces or knowledge graph editors to allow data stewards to manually review and correct graph content.

6. Integration from Trusted Sources

Feeding the knowledge graph with validated, high-quality sources like Wikidata, authoritative databases, or internal master data helps reduce errors at the source.

Quality control in knowledge graphs isn’t just a technical challenge. It’s also a governance and process issue that requires collaboration across teams.

Can a Knowledge Graph Help With Data Governance?

Absolutely. In fact, knowledge graphs are becoming a foundational component of modern data governance frameworks. They help organizations understand, control, and trust their data.

Here are five ways knowledge graphs support data governance:

1. Data Lineage and Impact Analysis

Knowledge graphs make it easier to track where data comes from, how it’s transformed, and where it’s used. This is essential for audits, troubleshooting, and compliance.

2. Metadata Management

They can integrate and represent metadata, or data about data, in a way that is interconnected and allows for querying. This capability is much more powerful than traditional spreadsheets or wikis.

3. Policy Enforcement

By associating data elements with governance policies, like retention rules or access restrictions, knowledge graphs help enforce compliance automatically.

4. Ownership and Stewardship

Organizations can assign ownership to entities or datasets directly in the graph, ensuring accountability and clear lines of responsibility.

5. Semantic Clarity

Governance is often hampered by inconsistent definitions, such as “What does ‘active customer’ mean?” A knowledge graph captures and shares standardized business terms and definitions across an organization.

Can Knowledge Graphs Work With Large Language Models?

Yes, and this integration is one of the most promising frontiers in AI today. Large Language Models (LLMs) like GPT-4 or Claude are excellent at generating and understanding human language, but they have limitations in accuracy, memory, and reasoning. Knowledge graphs can close those gaps. Here are five ways:

1. Grounding and Fact-Checking

LLMs can “hallucinate,” or generate false or unverified information. Integrating a knowledge graph allows the model to ground its outputs in verified data, improving factual accuracy.

2. Semantic Search Over Graphs

LLMs can act as a natural language interface to query a knowledge graph. For example, instead of writing SPARQL queries, a user could ask, “Who are all the employees who joined in 2023?” and the LLM can translate that into a graph query.

3. Personalized Responses

With access to a user or product knowledge graph, LLMs can tailor responses more intelligently, taking into account relationships, history, and preferences.

4. Contextual Memory

While LLMs have short-term memory limits, knowledge graphs can act as a long-term contextual memory, helping chatbots and agents remember user preferences or ongoing workflows.

5. Symbolic Reasoning

LLMs excel at language but struggle with formal logic. Knowledge graphs provide structured logic and reasoning paths, enabling hybrid systems to reason more effectively.

Together, knowledge graphs and LLMs offer the potential for deep, explainable, and context-rich AI systems. These are ideal for enterprise applications, research, and intelligent digital assistants.

Choosing the Right Platform – Use‑Case Scenarios

Finance

Fraud detection, regulatory reporting, risk analytics — mapping complex transaction networks and identifying suspicious patterns in real time.

Life Sciences

Patient data integration, drug discovery — integrating disparate sources for unified patient profiles and semantic linking.

Manufacturing

Predictive maintenance, supply‑chain visibility — using graph analytics to identify failure patterns and enhance supply‑chain resilience.

Cross‑industry

Data mesh enablement, self‑service analytics — enabling decentralized data ownership with centralized semantic trust for faster AI delivery.

Request a demo to explore how the Actian Data Intelligence Platform meets your organization’s needs.

FAQ

A knowledge graph is a structured data model that represents entities (people, products, systems), their attributes, and the relationships between them. It provides contextual meaning so machines can understand how data points connect across an organization.

Knowledge graphs unify siloed data sources and provide a semantic layer that improves data discovery, lineage tracking, governance, and analytics. They give AI models the context needed for more accurate search, recommendations, and reasoning.

Export current catalog metadata in standard formats, map entities to graph nodes, and use Actian’s bulk import API to ingest the metadata. Migration typically takes 4-6 weeks, providing immediate value through enhanced search and lineage visualization.

Define contract schemas in the Studio app, commit them to your Git repository, and configure your CI/CD pipeline to run contract validation tests. Successful validation publishes the product to the catalog, while failures block deployment and notify stakeholders.

Yes, Actian’s architecture ingests IoT events in real time and updates the graph structure immediately, enabling instantaneous querying and alerting based on current device states.

The platform includes pre-configured privacy policies, automated workflows, and audit-ready lineage tracking that satisfy compliance requirements. Automated retention policies support “right-to-be-forgotten” requests.

A phased rollout typically spans 6-9 months, with early value delivered within the first 3 months. Organizations with existing governance programs often achieve faster timelines.

Build a comprehensive TCO model including license fees, infrastructure costs, integration effort, and hidden costs. Normalize costs by projected annual data volume and request detailed pricing from each vendor, factoring in implementation services.