Data ingestion is the process of collecting and importing data from various sources into a central repository for storage and analysis. It is critical to any data management strategy because it ensures businesses can access and use data efficiently across different systems. Key aspects of data ingestion include:

- Collecting data from diverse sources (applications, cloud, databases, IoT devices, etc.).

- Supporting batch or real-time ingestion, depending on business needs.

- Maintaining data consistency, quality, and security during the ingestion process.

- Integrating data into downstream systems for analysis or operational use.

What is Data Ingestion?

Data ingestion is essential for any modern business that relies on data to drive decision-making. In its simplest form, it is the first step in moving data from various sources into a centralized data platform for storage, processing, or analysis. Whether the source is a cloud service, an on-premises system, or an IoT device, data ingestion ensures that information is properly formatted and organized for later use in a data pipeline.

Without adequate data ingestion, businesses face many challenges, from incomplete datasets to inefficient processing that can delay analysis. The flexibility to ingest data at scale, whether in real time or through batch processing, directly affects an organization’s ability to gain competitive insights and optimize its operations.

Where Data Ingestion Fits in the Data Pipeline

Data ingestion forms the foundational layer of the data pipeline, which moves data from sources to repositories where it can be analyzed or stored. Within the broader pipeline, ingestion is the process that ensures all relevant data from various inputs is brought into a system in a consistent and usable format.

In a typical data pipeline, ingestion is followed by data transformation: the ingested data is cleaned, formatted, and enriched before being stored in a data warehouse, data lake, or other storage system, or passed on to a target application. From there, it can be processed, queried, or used to generate insights through analytics tools. Without proper data ingestion, the entire data pipeline can break down and cause delays, incomplete datasets, or errors in business reporting.
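The ingest, transform, and store sequence described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `RAW_ROWS` sample data, the `orders` table, and the function names `ingest`, `transform`, and `load` are hypothetical, and an in-memory SQLite database stands in for a real warehouse or data lake.

```python
import sqlite3

# Hypothetical raw rows as they might arrive from a source system.
RAW_ROWS = [
    {"order_id": "1", "amount": " 19.99 "},
    {"order_id": "2", "amount": "5.00"},
]

def ingest(source):
    """Ingestion: bring raw rows into the pipeline unchanged."""
    return list(source)

def transform(rows):
    """Transformation: trim whitespace and convert strings to typed values."""
    return [(int(r["order_id"]), float(r["amount"].strip())) for r in rows]

def load(records, conn):
    """Storage: write the cleaned records to the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", records)
    conn.commit()

# An in-memory database stands in for the real storage system (assumption).
conn = sqlite3.connect(":memory:")
load(transform(ingest(RAW_ROWS)), conn)
row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(row_count)
```

Keeping each stage in its own function makes it easier to see where a failure occurred when a pipeline run produces incomplete or incorrect data.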

Types of Data Ingestion

Data ingestion occurs in two primary forms, depending on the speed and frequency of data collection:

Batch Data Ingestion

This method collects and processes data at scheduled intervals. It is ideal for businesses that don’t require real-time updates, such as companies running nightly reports or processing large volumes of historical data.

Real-Time Data Ingestion

As the name suggests, this method ingests data as it is created, providing near-instantaneous updates. Real-time ingestion is essential for applications like fraud detection, where immediate action is required based on the latest data.

The choice between batch and real-time ingestion depends on how the data is used. In practice, most enterprises use a combination of both, depending on the specific data streams involved.
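The difference between the two forms can be illustrated with a small Python sketch. It is a simplification, not a production design: the function names `batch_ingest` and `stream_ingest` are hypothetical, and batches are cut by record count here, whereas a real batch job would more likely be triggered by a schedule.

```python
from typing import Callable, Iterable, Iterator, List

def batch_ingest(source: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Batch style: accumulate records and hand them over in groups."""
    batch: List[dict] = []
    for record in source:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        # Emit the final, partially filled batch.
        yield batch

def stream_ingest(source: Iterable[dict], handler: Callable[[dict], None]) -> None:
    """Real-time style: pass each record to the handler as soon as it arrives."""
    for record in source:
        handler(record)

# Hypothetical events used only for demonstration.
events = [{"id": i} for i in range(5)]

batches = list(batch_ingest(events, batch_size=2))

seen: List[dict] = []
stream_ingest(events, seen.append)
```

With five events and a batch size of two, the batch path yields three groups, while the streaming path invokes the handler five times, once per event.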

Methods of Data Ingestion

Several data ingestion methods can be applied to meet diverse business requirements. Which data ingestion framework is ultimately used depends on the data sources and the business’s ingestion needs. Below is a breakdown of common data ingestion methods:

- Ingestion Through Parameters: This method allows data ingestion based on pre-defined parameters, like time intervals or specific triggers. For example, a company may set parameters so that sales data is ingested every 24 hours.

- Ingestion of Array Data: Ingesting structured array data — such as tables or matrices — typically involves handling multiple rows and columns of information. This is commonly used in database or spreadsheet ingestion processes.

- Transaction Record Entry: Common in banking and financial services, this method ingests individual transaction records as they are generated. Real-time ingestion is typically required for these systems due to the critical nature of the data.

- File Record Ingestion: Many businesses rely on ingesting data from flat files — like CSV or JSON — which store structured or semi-structured data. This method is often used to ingest archived data.

- Ingesting Cloud Data: With the rise of cloud computing, ingesting data directly from cloud-based services or applications has become common. Cloud data ingestion involves capturing data from cloud services like AWS or Google Cloud, and it often supports data integration for further analysis.

- Ingesting Trading and Gaming Data: Both industries rely heavily on real-time data ingestion. For example, a stock trading platform may ingest real-time market data to update prices instantly, while gaming platforms capture player interaction data to improve UX and personalization.

- Ingesting Database Records: Ingestion from relational or non-relational databases involves pulling data from multiple tables, ensuring data is consistent and up to date. This can be done either in batch or in real-time, depending on the system’s needs.

- Ingesting Data into a Database: For many businesses, the destination for ingested data is a database. This process involves transforming raw data into structured formats that can be efficiently organized.

- Streaming Data Ingestion: This method is used when data is generated continuously and needs to be processed in real-time. For example, businesses can use Apache Kafka to handle continuous streams of log data, social media updates, or sensor data.

- IoT Data Ingestion: As IoT devices become increasingly common, ingesting data from sensors, devices, and applications has become crucial. IoT data ingestion enables companies to capture and analyze device data in real time for insights into machine health, energy consumption, or user behavior.
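As one example from the list above, file record ingestion from CSV and JSON sources might look roughly like the following Python sketch. The sample data, the field names `sensor` and `reading`, and the helper names are assumptions made for illustration; in-memory text buffers stand in for real files, and the JSON input is assumed to contain one object per line.

```python
import csv
import io
import json

def read_csv_records(handle):
    """Read a CSV source into a list of dicts keyed by the header row."""
    return [dict(row) for row in csv.DictReader(handle)]

def read_json_lines(handle):
    """Read a JSON Lines source (one JSON object per line), skipping blank lines."""
    return [json.loads(line) for line in handle if line.strip()]

# In-memory buffers stand in for files on disk (assumption for this sketch).
csv_file = io.StringIO("sensor,reading\nA,21.5\nB,19.0\n")
jsonl_file = io.StringIO('{"sensor": "C", "reading": 22.1}\n')

records = read_csv_records(csv_file) + read_json_lines(jsonl_file)
```

Note that CSV values arrive as strings while JSON values keep their types, so a later transformation step would normally convert them to a common type before analysis.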

Challenges and Best Practices in Data Ingestion

Despite its importance, the data ingestion process has its share of challenges. Ensuring efficient, accurate, and scalable ingestion requires addressing several hurdles:

- Data Consistency: When dealing with multiple data sources, ensuring data remains consistent can be difficult, especially when using real-time ingestion.

- Real-Time Processing: As the need for immediate insights grows, real-time data ingestion can strain system resources, requiring infrastructure changes to handle continuous streams.

- Data Quality: Ingesting raw data often leads to inconsistencies or incomplete datasets, and it can add unnecessary cost when unneeded or inaccurate data is processed. Implementing proper cleansing and validation processes is critical.
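A validation step of the kind mentioned under data quality could be sketched as follows. The rules shown (a non-empty `id` and a non-negative numeric `amount`) and the function names are hypothetical; real rules would come from the target schema and business requirements.

```python
def validate(record):
    """Return a list of problems found in one record (empty if it is clean)."""
    errors = []
    if not record.get("id"):
        errors.append("missing id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("invalid amount")
    return errors

def partition(records):
    """Split records into accepted ones and rejected ones with their reasons."""
    accepted, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(record)
    return accepted, rejected

# Hypothetical incoming records used only for demonstration.
incoming = [
    {"id": "a1", "amount": 10.0},
    {"id": "", "amount": 5.0},
    {"id": "a3", "amount": -2},
]
accepted, rejected = partition(incoming)
```

Keeping rejected records together with the reason for rejection, rather than silently dropping them, makes it possible to trace quality problems back to the source system.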

Best practices for successful data ingestion include:

- Using data integration platforms that streamline the ingestion of data from diverse sources.

- Prioritizing scalability so that the ingestion process continues to run efficiently as data volumes and complexity grow.

- Implementing monitoring systems to detect bottlenecks or errors during the ingestion process.

By following these practices, businesses can ensure their ingestion processes are efficient, reliable, and scalable.
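The monitoring practice can be illustrated with a minimal Python sketch that counts successes and failures and measures elapsed time for an ingestion run. The `IngestionStats` class, the `monitored_ingest` function, and the `strict_sink` example are all hypothetical names; a real deployment would more likely export such figures to a dedicated monitoring system.

```python
import time
from dataclasses import dataclass, field

@dataclass
class IngestionStats:
    succeeded: int = 0
    failed: int = 0
    errors: list = field(default_factory=list)
    elapsed_seconds: float = 0.0

def monitored_ingest(records, sink):
    """Send each record to the sink, recording outcomes instead of stopping on errors."""
    stats = IngestionStats()
    start = time.perf_counter()
    for record in records:
        try:
            sink(record)
            stats.succeeded += 1
        except Exception as exc:
            stats.failed += 1
            stats.errors.append(repr(exc))
    stats.elapsed_seconds = time.perf_counter() - start
    return stats

stored = []

def strict_sink(record):
    """Hypothetical sink that rejects negative values."""
    if record["value"] < 0:
        raise ValueError("negative value")
    stored.append(record)

stats = monitored_ingest([{"value": 1}, {"value": -1}, {"value": 3}], strict_sink)
```

A rising failure count or a growing elapsed time between runs is the kind of signal that points to errors or bottlenecks in the ingestion process.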

The Role of Data Integration in Simplifying Ingestion

Data integration is crucial in simplifying the ingestion process by combining data from different sources into a unified system. For businesses handling multiple data streams — such as cloud, IoT, and databases — an integrated approach to data ingestion eliminates silos and improves data accessibility across an organization.

For example, using an enterprise-level data integration platform, a company can automate the ingestion of data from cloud services and internal databases, creating a seamless data pipeline. Integration platforms can also handle the transformation of data to ensure that it adheres to the necessary target format. This speeds up the ingestion process and ensures that data is consistently formatted, cleansed, and ready for analysis.
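The idea of transforming data from different sources into one target format can be sketched in Python as a simple field mapping. This is not how any particular integration platform works; the source field names, the mappings `CRM_FIELDS` and `BILLING_FIELDS`, and the sample rows are assumptions made for illustration.

```python
# Hypothetical mappings from each source's field names to the target schema.
CRM_FIELDS = {"CustomerName": "name", "EmailAddress": "email"}
BILLING_FIELDS = {"cust_name": "name", "mail": "email"}

def to_target(record, field_map):
    """Rename source fields to the target schema and normalize the email value."""
    unified = {target: record[source] for source, target in field_map.items()}
    unified["email"] = unified["email"].strip().lower()
    return unified

# Hypothetical rows from two separate systems.
crm_rows = [{"CustomerName": "Ada Lopez", "EmailAddress": "Ada@Example.com "}]
billing_rows = [{"cust_name": "Ben Ito", "mail": "ben@example.com"}]

unified_rows = (
    [to_target(row, CRM_FIELDS) for row in crm_rows]
    + [to_target(row, BILLING_FIELDS) for row in billing_rows]
)
```

After mapping, records from both systems share the same field names and formatting, so downstream analysis does not need to know which source a row came from.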

Data integration serves as the backbone of a well-optimized ingestion process, reducing the complexity of managing various data streams and ensuring timely, accurate data delivery.

Actian and Data Ingestion

The Actian Data Platform offers businesses a powerful solution for managing the complexities of data ingestion. Actian provides robust capabilities for ingesting data from a wide range of sources, including cloud services, IoT devices, and legacy databases. By automating a significant portion of the ingestion process, the platform reduces the burden on IT teams while ensuring that data is readily available for analysis and decision-making.

One of Actian’s key strengths is its ability to handle both batch and real-time data ingestion, offering flexibility for businesses with diverse data needs. Additionally, Actian’s big data integration capabilities allow organizations to seamlessly combine data from multiple sources, delivering a unified view of the business.

For companies looking to integrate, transform, and manage their data, Actian’s comprehensive platform provides the scalability, performance, and security necessary to support data-driven business growth. It can even help with a business’s data integration and quality efforts.

For modern organizations, the data ingestion process ensures that data from various sources is collected, processed, and made available for analysis. Whether it involves batch or real-time ingestion, organizations must prioritize scalability, efficiency, and integration to manage increasing data volumes effectively.

By addressing the challenges of data consistency, real-time processing, and data quality, businesses can optimize their data ingestion pipelines to deliver timely, actionable insights. With solutions like the Actian Data Platform, companies have the tools to build efficient, scalable ingestion processes supporting long-term success in today’s data-driven economy.

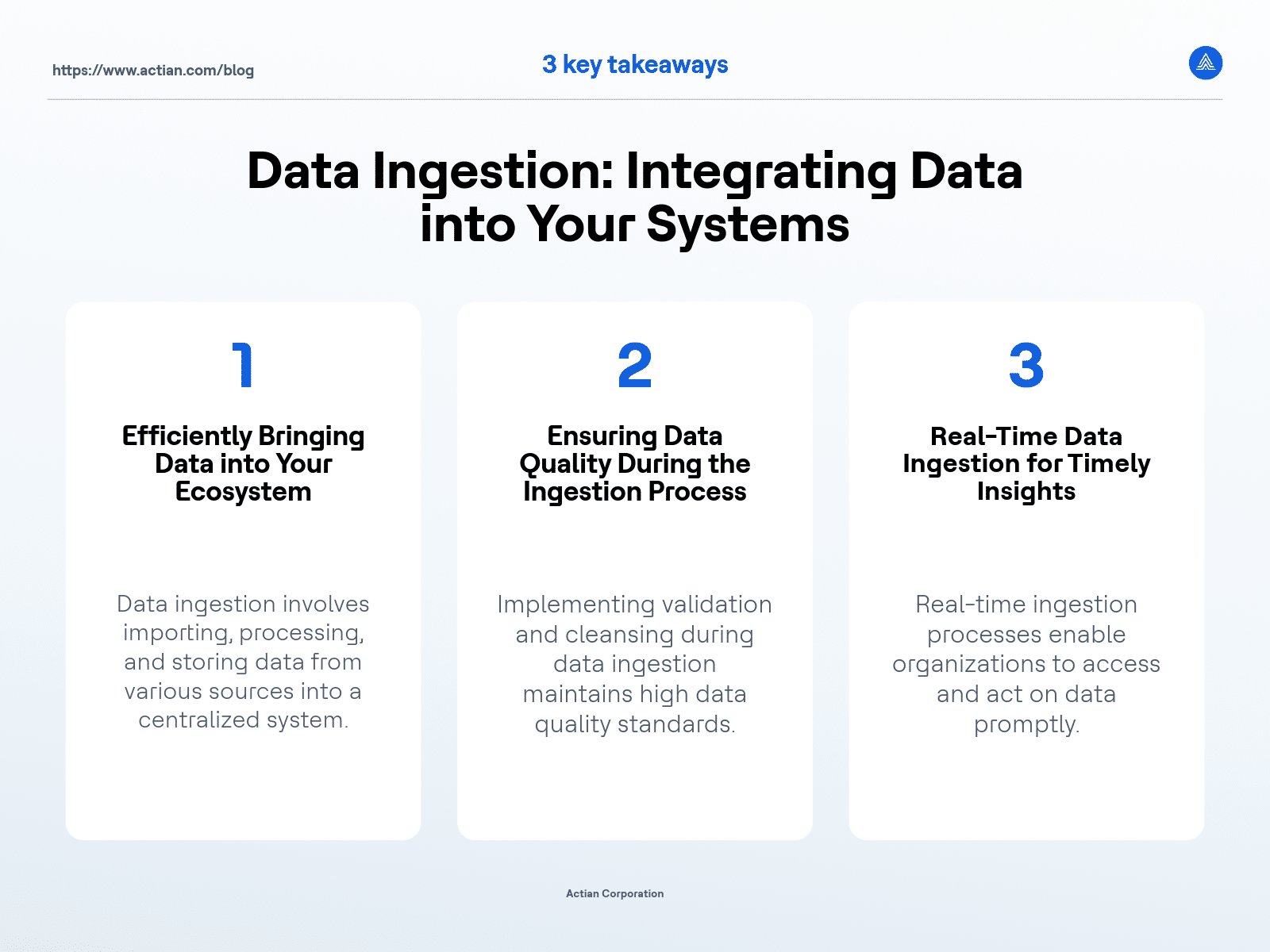

Key Takeaways