DataOps: Data Catalogs Enable Better Data Discovery in a Big Data Project

Actian Corporation

May 6, 2020

Big Data environments are increasingly complex and difficult to manage. We believe that Big Data architectures should, among other things:

- Retrieve information on a wide spectrum of data.

- Use advanced analytics techniques such as statistical algorithms, machine learning, and artificial intelligence.

- Enable the development of data-oriented applications such as a recommendation system on a website.

To build a successful Big Data architecture, enterprises typically store their data in a centralized data lake intended to serve many purposes. However, a massive, continuous flow of varied data from different sources can turn a data lake into a data swamp. So, as business functions increasingly work with data, how can we help them find their way?

For your Big Data to be exploited to its full potential, it must be well documented.

Data documentation is key here. However, documenting attributes such as a dataset’s business name, description, owner, tags, and level of confidentiality can be extremely time-consuming, especially with millions of data points available in your lake!
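To make the documentation attributes above concrete, here is a minimal sketch of what one documentation record could look like. The class name `DatasetDoc`, its field names, and the example values are hypothetical illustrations, not the schema of any particular catalog product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DatasetDoc:
    """Documentation record for one dataset (hypothetical field names)."""
    technical_name: str
    business_name: Optional[str] = None
    description: Optional[str] = None
    owner: Optional[str] = None
    tags: List[str] = field(default_factory=list)
    confidentiality: Optional[str] = None  # e.g. "public", "internal", "restricted"

    def missing_fields(self) -> List[str]:
        """Return the names of documentation fields that are still empty."""
        missing = []
        for name in ("business_name", "description", "owner", "confidentiality"):
            if getattr(self, name) is None:
                missing.append(name)
        if not self.tags:
            missing.append("tags")
        return missing


# A partially documented dataset: three attributes still need attention.
doc = DatasetDoc(
    technical_name="crm_cust_v2",
    business_name="Customers",
    owner="sales-data-team",
)
print(doc.missing_fields())
```

Even a small helper like `missing_fields` shows why manual documentation does not scale: every dataset in the lake needs the same checks repeated.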

DataOps is an agile framework focused on improving the communication, integration, and automation of data flows between data managers and data consumers across an organization. With this approach, enterprises can carry out their projects incrementally. Supported by a data catalog, they can map and leverage their data assets in an agile, collaborative, and intelligent manner.

How Does a Data Catalog Support a DataOps Approach in Your Big Data Project?

Let’s go back to the basics: what is a data catalog?

A data catalog automatically captures and updates technical and operational metadata from an enterprise’s data sources and stores it in a single source of truth. Its purpose is to democratize data understanding: to allow your collaborators to find the data they need via one easy-to-use platform that sits above your data systems. With a data catalog, users don’t need technical expertise to discover what is new and seize opportunities.
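As a rough illustration of capturing technical metadata automatically, the sketch below reads table and column definitions from a single SQLite database using Python’s standard `sqlite3` module. The function name `harvest_schema` and the sample tables are assumptions made for this example; a real catalog would rely on its own connectors for each source type.

```python
import sqlite3
from typing import Dict, List, Tuple


def harvest_schema(conn: sqlite3.Connection) -> Dict[str, List[Tuple[str, str]]]:
    """Return {table_name: [(column_name, declared_type), ...]} for one SQLite source."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    schema = {}
    for table in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        schema[table] = [(col[1], col[2]) for col in columns]
    return schema


# Hypothetical source with two tables, created in memory for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
schema = harvest_schema(conn)
print(schema)
```

Running the same harvest on a schedule keeps the stored metadata in step with the source without anyone typing it in by hand.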

Effective Data Lake Documentation for Your Big Data

Think of Legos. Legos can be built into anything you want, but at their core, they are still just a set of bricks. These blocks can be shaped to fit any need, desire, or resource.

To make your data lake easier to navigate, it is important to create effective documentation through the following:

- Customizable layouts.

- Interactive components.

- A set of pre-created templates.

By offering modular templates, a data catalog lets Data Stewards simply and efficiently configure documentation templates according to their business users’ data lake search queries.

Monitor Big Data With Automated Capabilities

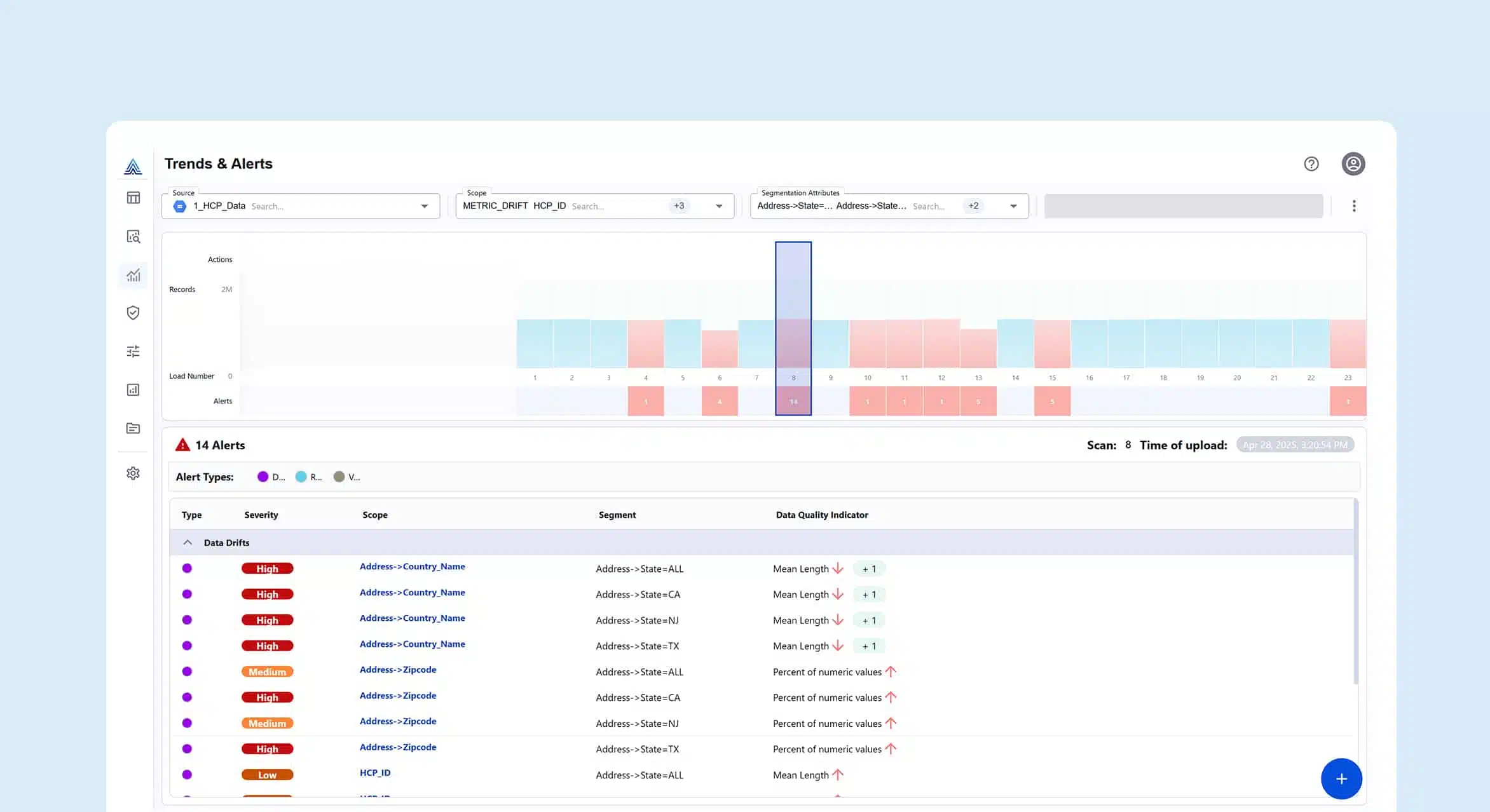

Through an innovative architecture and connectors, data catalogs can connect to your Big Data sources so that the IT department can monitor its data lake. IT teams are able to map new incoming datasets, be notified of any deleted or modified datasets, or even report errors to referring contacts.

Users are able to access up-to-date information in real time.

These automated capabilities notify users when new datasets appear, when datasets are deleted, when errors occur, when datasets were last updated, and so on.
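One simple way to picture this kind of monitoring is to compare two snapshots of the lake and report what changed. The sketch below assumes each snapshot is a plain mapping from dataset name to a last-modified date; `diff_snapshots` and the sample data are hypothetical and only illustrate the idea.

```python
from typing import Dict, List


def diff_snapshots(previous: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two {dataset_name: last_modified} snapshots of a data lake."""
    added = sorted(set(current) - set(previous))
    deleted = sorted(set(previous) - set(current))
    # A dataset counts as modified when it exists in both snapshots
    # but its last-modified value differs.
    modified = sorted(
        name
        for name in set(previous) & set(current)
        if previous[name] != current[name]
    )
    return {"added": added, "deleted": deleted, "modified": modified}


yesterday = {"orders": "2020-05-04", "customers": "2020-05-01", "legacy_logs": "2019-12-31"}
today = {"orders": "2020-05-05", "customers": "2020-05-01", "web_events": "2020-05-05"}

changes = diff_snapshots(yesterday, today)
print(changes)
```

Each of the three lists could then drive a notification to the relevant contacts.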

Support Big Data Documentation With Augmented Capabilities

Intelligent data catalogs are essential for data documentation. They rely on artificial intelligence and machine learning techniques, one being “fingerprinting” technology. This feature offers users who are responsible for a particular data set suggestions for its documentation. These recommendations can, for example, be tags, contacts, or even business terms drawn from other data sets, based on:

- The analysis of the data itself (statistical analysis).

- The schema’s resemblance to other data sets.

- The links to other data sets’ fields.
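The schema-resemblance criterion can be illustrated with a very simple similarity measure. The sketch below uses Jaccard similarity over field names to propose tags from the most similar documented data set. It is a stand-in for illustration only, not the fingerprinting technology itself; the function names, the 0.5 threshold, and the sample data are all assumptions.

```python
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of field names two schemas have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def suggest_tags(
    new_fields: Set[str],
    documented: Dict[str, Tuple[Set[str], List[str]]],
    threshold: float = 0.5,
) -> List[str]:
    """Suggest the tags of the most similar documented data set, if similar enough."""
    best_tags: List[str] = []
    best_score = 0.0
    for fields, tags in documented.values():
        score = jaccard(new_fields, fields)
        if score > best_score:
            best_score, best_tags = score, tags
    return list(best_tags) if best_score >= threshold else []


# Hypothetical, already documented data sets: (field names, tags).
documented = {
    "customers": ({"customer_id", "email", "country", "signup_date"}, ["crm", "personal-data"]),
    "orders": ({"order_id", "customer_id", "amount"}, ["sales"]),
}

suggestions = suggest_tags({"customer_id", "email", "country"}, documented)
print(suggestions)
```

Here the new data set shares three of four field names with `customers`, so that data set’s tags are offered as suggestions for a steward to accept or reject.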

An intelligent data catalog also detects personal/private data in any given data set and reports it in its interface. This feature helps enterprises respond to the requirements of the GDPR, which took effect in May 2018, and alerts potential users to a data set’s sensitivity level.
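As a deliberately simplified picture of personal data detection, the sketch below flags columns whose sampled values mostly look like email addresses. The regular expression is a loose approximation, and `flag_email_columns`, the 0.75 ratio, and the sample values are assumptions for this example; real detection would cover many more data types and use more robust techniques.

```python
import re
from typing import Dict, List

# Loose pattern: something@something.something, with no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def flag_email_columns(samples: Dict[str, List[str]], min_ratio: float = 0.75) -> List[str]:
    """Return the columns where at least min_ratio of sampled values look like emails."""
    flagged = []
    for column, values in samples.items():
        if not values:
            continue
        hits = sum(1 for value in values if EMAIL_RE.match(value))
        if hits / len(values) >= min_ratio:
            flagged.append(column)
    return flagged


# Hypothetical sample values pulled from two columns of a data set.
samples = {
    "contact": ["ana@example.com", "li@example.org", "n/a", "sam@example.net", "jo@example.com"],
    "city": ["Paris", "Lyon", "Nice"],
}

flagged = flag_email_columns(samples)
print(flagged)
```

A flagged column could then be surfaced in the catalog with a sensitivity marker for stewards to review.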

Enrich Your Big Data Documentation With a Data Catalog

Enrich your data’s documentation with the Actian Data Intelligence Platform. Our metadata management platform was designed for Data Stewards and centralizes all data knowledge in a single, easy-to-use interface.

Whether metadata is automatically imported, generated, or added by an administrator, Data Stewards are able to efficiently document their data directly within our data catalog. Give meaning to your data with metadata.
