Data Quality and Data Observability: Why You Need Both

Actian Corporation

May 26, 2025

As data becomes more central to decision-making, two priorities are taking precedence for data leaders: data quality and data observability. Each plays a distinct role in maintaining the reliability, accuracy, and compliance of enterprise data.

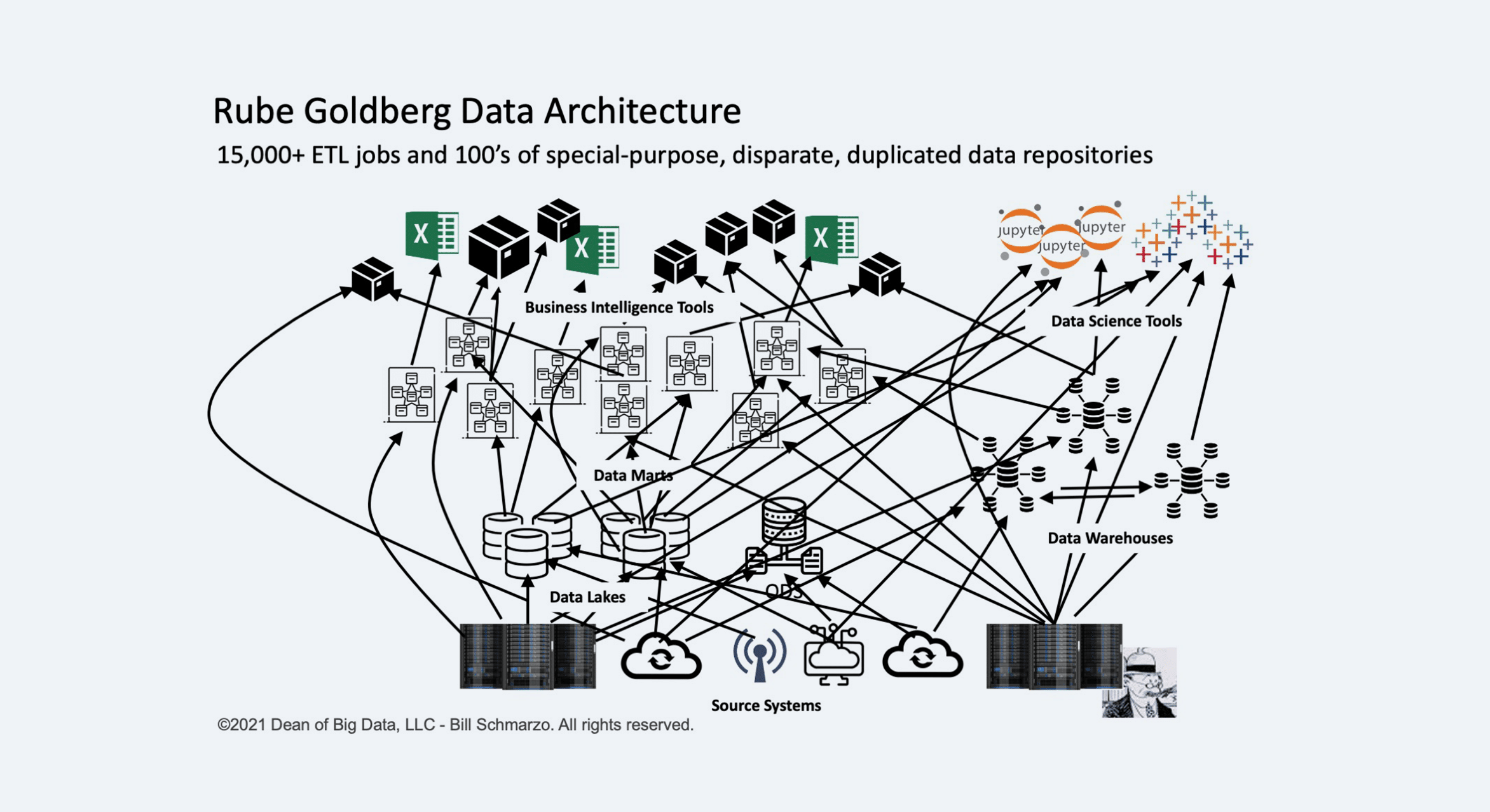

When used together, data quality and data observability tools provide a strong foundation for delivering trustworthy data for AI and other use cases. As data volumes grow rapidly, organizations are finding that their data environments are becoming more complex as well.

Data pipelines often span a wide range of sources, formats, systems, and applications. Without the right tools and frameworks in place, even small data issues can quickly escalate—leading to inaccurate reports, flawed models, and costly compliance violations.

Gartner notes that by 2026, 50% of enterprises implementing distributed data architectures will have adopted data observability tools to improve visibility over the state of the data landscape, up from less than 20% in 2024. Here’s how data quality and observability help organizations:

Build Trust and Have Confidence in Data Quality

Every business decision that stakeholders make hinges on the trustworthiness of their data. When data is inaccurate, incomplete, inconsistent, or outdated, that trust is broken. For example, incomplete data can negatively impact the patient experience in healthcare, and false positives that incorrectly flag legitimate credit card purchases as fraudulent erode customer confidence and trust.

That’s why a well-designed data quality framework is foundational. It ensures data is usable, accurate, and aligned with business needs.

With strong data quality processes in place, teams can:

- Identify and correct errors early in the pipeline.

- Ensure data consistency across various systems.

- Monitor critical dimensions such as completeness, accuracy, and freshness.

- Align data with governance and compliance requirements.

Embedding quality checks throughout the data lifecycle allows teams and stakeholders to make decisions with confidence. That’s because they can trust the data behind every report, dashboard, and model. When organizations layer data observability into their quality framework, they gain real-time visibility into their data’s health, helping to detect and resolve issues before they impact decision-making.
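As a rough illustration of monitoring two of the dimensions listed above, completeness and freshness, the sketch below uses only the Python standard library. The record layout, the field names (`email`, `updated_at`), and the six-hour freshness threshold are assumptions made for this example; they do not come from any particular product.

```python
from datetime import datetime, timedelta, timezone


def completeness(records, field):
    """Fraction of records where `field` is present and not None or empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def is_fresh(records, ts_field, max_age, now=None):
    """True if the newest timestamp in `ts_field` is within `max_age` of `now`."""
    now = now or datetime.now(timezone.utc)
    timestamps = [r[ts_field] for r in records if r.get(ts_field) is not None]
    if not timestamps:
        return False  # no timestamps at all is treated as stale
    return now - max(timestamps) <= max_age


# Hypothetical sample data; a fixed "now" keeps the example deterministic.
now = datetime(2025, 5, 26, 12, 0, tzinfo=timezone.utc)
rows = [
    {"patient_id": "p1", "email": "a@example.com", "updated_at": now - timedelta(hours=2)},
    {"patient_id": "p2", "email": None, "updated_at": now - timedelta(hours=30)},
    {"patient_id": "p3", "email": "c@example.com", "updated_at": now - timedelta(hours=5)},
    {"patient_id": "p4", "email": "", "updated_at": now - timedelta(hours=1)},
]

email_completeness = completeness(rows, "email")
fresh = is_fresh(rows, "updated_at", timedelta(hours=6), now=now)
print(f"email completeness: {email_completeness:.0%}, fresh: {fresh}")
```

In practice, checks like these would typically run on a schedule or inside the pipeline itself, with thresholds agreed on with the data's consumers.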

Meet Current and Evolving Data Demands

Traditional data quality tools and manual processes often fall short when applied to large-scale data environments. Sampling methods or surface-level checks may catch obvious issues, but they frequently miss deeper anomalies—and rarely reveal the root cause.

As data environments grow in volume and complexity, the data quality architecture must scale with them. That means:

- Monitoring all data, not just samples.

- Validating across diverse data types and formats.

- Integrating checks into data processes and workflows.

- Supporting open data formats.

Organizations need solutions that can run quality checks across massive, distributed datasets without slowing down production systems or driving up costs. This is where a modern data observability solution delivers value.

Comprehensive Data Observability as a Quality Monitor

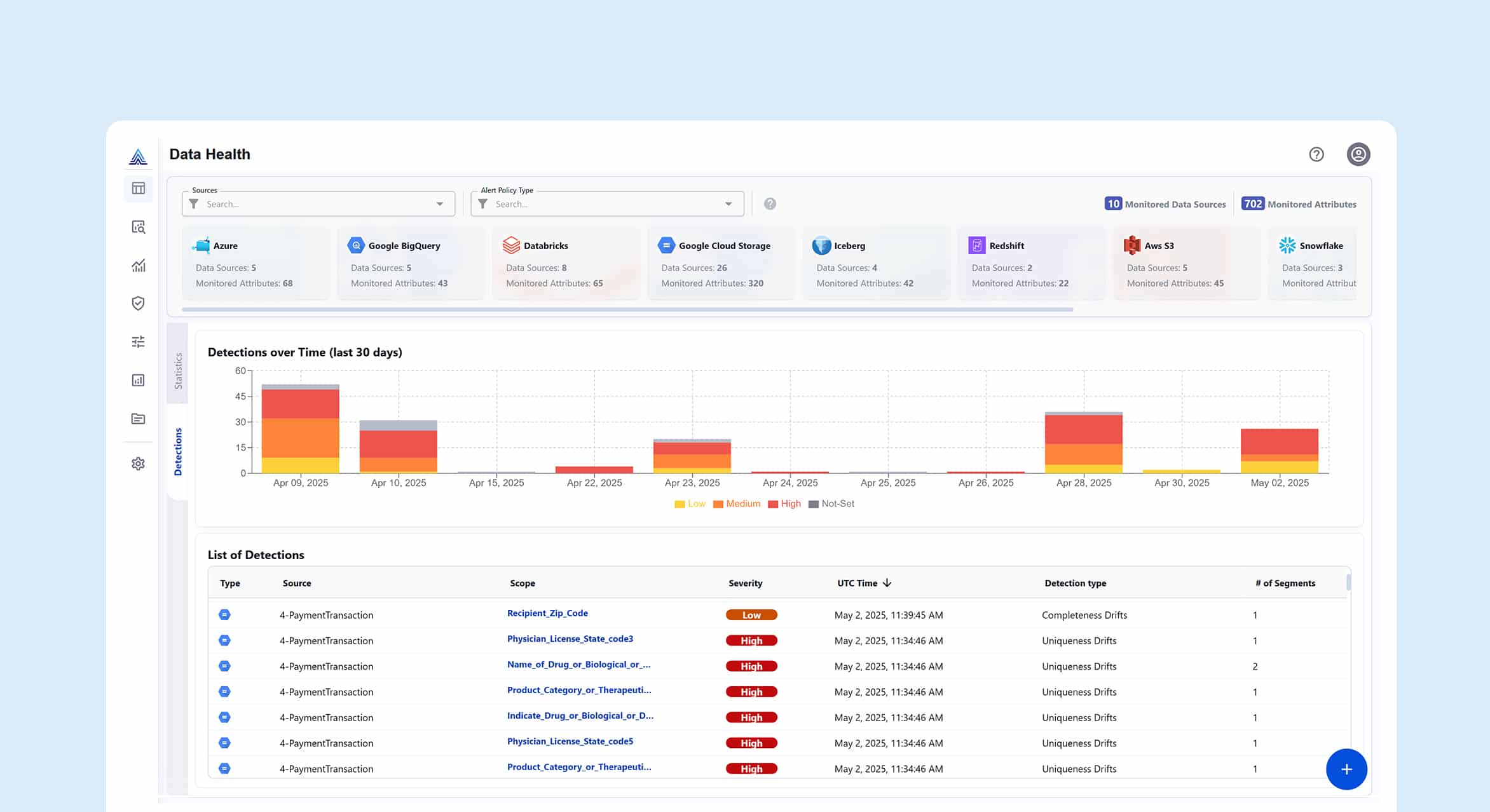

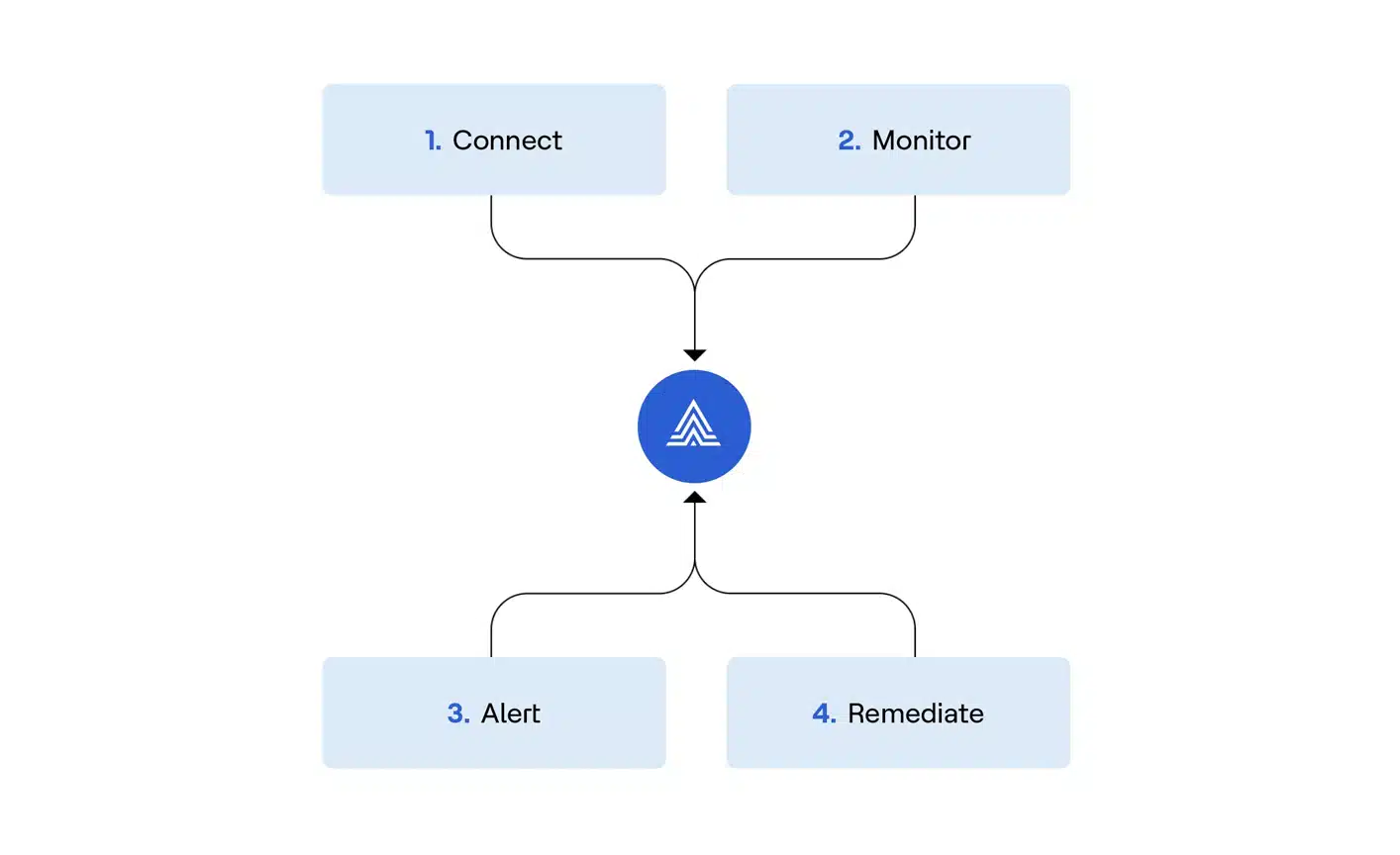

To understand the powerful role of data observability, think of it as a real-time sensor layer across an organization’s data pipelines. It continuously monitors pipeline health, detects anomalies, and identifies root causes before issues move downstream. Unlike static quality checks, observability offers proactive, always-on insights into the state of the organization’s data.

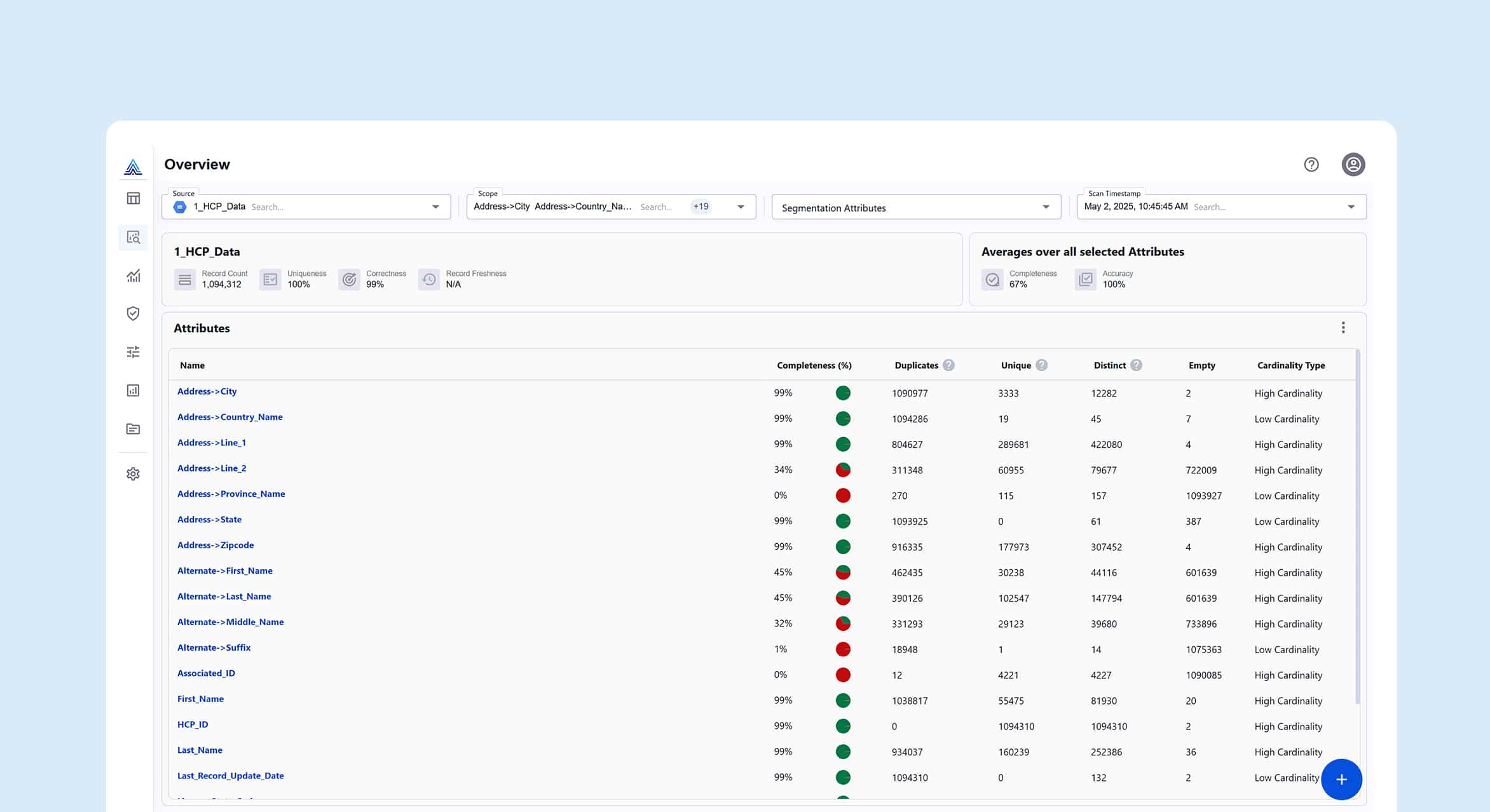

A modern data observability solution, like Actian Data Observability, adds value to a data quality framework in several ways:

- Automated anomaly detection. Identify issues in data quality, freshness, and custom business rules without manual intervention.

- Root cause analysis. Understand where and why issues occurred, enabling faster resolution.

- Continuous monitoring. Ensure pipeline integrity and prevent data errors from impacting users.

- No sampling blind spots. Monitor 100% of the organization’s data, not just a subset.

Sampling methods may seem cost-effective, but they can leave critical blind spots in data. For instance, an anomaly that affects only 2% of records might be missed entirely by the data team until it breaks an AI model or leads to unexpected customer churn.
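To make the 2% example concrete, here is a small back-of-the-envelope sketch. It assumes a uniform random sample with independent draws, which is a simplification chosen for illustration; real sampling schemes and real anomaly distributions will differ. Under that assumption, the chance that a sample of n records contains no affected record at all is (1 - p)^n.

```python
def miss_probability(affected_fraction, sample_size):
    """Probability that a uniform random sample contains no affected records.

    Assumes independent draws (sampling with replacement, or a table much
    larger than the sample). This is an illustrative model only.
    """
    if not 0.0 <= affected_fraction <= 1.0:
        raise ValueError("affected_fraction must be between 0 and 1")
    if sample_size < 0:
        raise ValueError("sample_size must be non-negative")
    return (1.0 - affected_fraction) ** sample_size


# How likely is it that a sample sees none of the 2% affected records?
for n in (10, 50, 100, 500):
    print(f"sample of {n:>3}: {miss_probability(0.02, n):.2%} chance of seeing none")
```

Under these assumptions, a 50-record sample misses the anomaly entirely roughly a third of the time. Even when a sample does contain a few affected records, that may not be enough signal to recognize a pattern, so this estimate is arguably optimistic.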

By providing 100% data coverage for comprehensive and accurate observability, Actian Data Observability eliminates blind spots and the risks associated with sampled data.

Why Organizations Need Data Quality and Observability

Companies don’t have to choose between data quality and data observability—they work together. When combined, they enable:

- Proactive prevention, rather than reactive fixes.

- Faster issue resolution, with visibility across the data lifecycle.

- Increased trust, through continuous validation and transparency.

- AI-ready data that is clean and consistent.

- Enhanced efficiency by reducing time spent identifying errors.

An inability to effectively monitor data quality, lineage, and access patterns increases the risk of regulatory non-compliance. This can result in financial penalties, reputational damage from data errors, and potential security breaches. Regulatory requirements make data quality not just a business imperative, but a legal one.

Implementing robust data quality practices starts with embedding automated checks throughout the data lifecycle. Key tactics include data validation to ensure data meets expected formats and ranges, duplicate detection to eliminate redundancies, and consistency checks across systems.
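The three tactics named above (validation against expected formats and ranges, duplicate detection, and cross-system consistency checks) can be sketched in a few lines of Python. The field names (`order_id`, `amount`), the allowed amount range, and the two example systems (`warehouse`, `billing`) are hypothetical and exist only to make the example runnable.

```python
from collections import Counter


def validate_record(record):
    """Return a list of rule violations for one record (empty if valid)."""
    errors = []
    order_id = record.get("order_id")
    if not isinstance(order_id, str) or not order_id:
        errors.append("order_id missing or not a string")
    amount = record.get("amount")
    is_number = isinstance(amount, (int, float)) and not isinstance(amount, bool)
    if not is_number or not 0 <= amount <= 100_000:
        errors.append("amount missing or outside expected range")
    return errors


def find_duplicates(records, key):
    """Return the key values that appear more than once."""
    counts = Counter(r.get(key) for r in records)
    return sorted(k for k, c in counts.items() if c > 1 and k is not None)


def inconsistent_keys(source_a, source_b, key, field):
    """Return keys present in both sources whose `field` values disagree."""
    b_by_key = {r[key]: r[field] for r in source_b}
    return sorted(
        r[key] for r in source_a
        if r[key] in b_by_key and b_by_key[r[key]] != r[field]
    )


# Hypothetical records from two systems that should agree.
warehouse = [
    {"order_id": "o1", "amount": 25.0},
    {"order_id": "o2", "amount": -5.0},   # fails the range rule
    {"order_id": "o2", "amount": -5.0},   # duplicate of the row above
    {"order_id": "o3", "amount": 40.0},
]
billing = [
    {"order_id": "o1", "amount": 25.0},
    {"order_id": "o3", "amount": 45.0},   # disagrees with the warehouse
]

invalid = {r["order_id"]: validate_record(r) for r in warehouse if validate_record(r)}
duplicates = find_duplicates(warehouse, "order_id")
mismatched = inconsistent_keys(warehouse, billing, "order_id", "amount")
print(invalid, duplicates, mismatched)
```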

Cross-validation techniques can help verify data accuracy by comparing multiple sources, while data profiling uncovers anomalies, missing values, and outliers. These steps not only improve reliability but also serve as the foundation for automated observability tools to monitor, alert, and maintain trust in enterprise data.
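As a minimal sketch of the profiling step, the function below counts missing values in a numeric column and flags outliers. It uses the common 1.5 × IQR fence as the outlier rule; that rule, the function name, and the sample values are assumptions made for this example, and other rules may suit a given dataset better.

```python
import statistics


def profile_column(values, k=1.5):
    """Summarize a numeric column: missing count, range, and IQR-based outliers."""
    present = [v for v in values if v is not None]
    summary = {
        "count": len(values),
        "missing": len(values) - len(present),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "outliers": [],
    }
    if len(present) < 2:
        return summary  # too few values to compute quartiles
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    summary["outliers"] = [v for v in present if v < low or v > high]
    return summary


# Hypothetical column with one missing value and one suspicious reading.
readings = [10, 12, 11, 13, 12, 11, 95, None, 12]
profile = profile_column(readings)
print(profile)
```

A profile like this is a starting point: flagged values still need to be reviewed, since an outlier can be a legitimate record and not an error.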

Without full visibility and active data monitoring, it’s easy for errors, including those involving sensitive data, to go undetected until major problems or violations occur. Implementing data quality practices that are supported by data observability helps organizations:

- Continuously validate data against policy requirements.

- Monitor access, freshness, and lineage.

- Automate alerts for anomalies, policy violations, or missing data.

- Reduce the risk of compliance breaches and failed audits.

By building quality and visibility into data governance processes, organizations can stay ahead of regulatory demands.

Actian Data Observability Helps Ensure Data Reliability

Actian Data Observability is built to support large, distributed data environments where reliability, scale, and performance are critical. It provides full visibility across complex pipelines spanning cloud data warehouses, data lakes, and streaming systems.

Using AI and machine learning, Actian Data Observability proactively monitors data quality, detects and resolves anomalies, and reconciles data discrepancies. It allows organizations to:

- Automatically surface root causes.

- Monitor data pipelines using all data—without sampling.

- Integrate observability into current data workflows.

- Avoid the cloud cost spikes common with other tools.

Organizations that are serious about data quality need to think bigger than static quality checks or ad hoc dashboards. They need real-time observability to keep data accurate, compliant, and ready for the next use case.

Actian Data Observability delivers the capabilities needed to move from reactive problem-solving to proactive, confident data management. Find out how the solution offers observability for complex data architectures.
