Data Storytelling: How to Have Effective Data Communication

Actian Corporation

October 5, 2021

It’s often said that data can bring enormous value to a company. However, for your data to yield strategic insights into your customers, processes, and objectives, it must be understood by everyone! A good way to start is through Data Storytelling.

Let’s take a look at how to effectively communicate and represent complex concepts.

Data is everywhere. In marketing departments, in customer service, on production sites, on your website… Once you’ve managed to collect, centralize, deduplicate, and prioritize all of these information flows, you will need to draw operational lessons from them.

But data is, by nature, dry, complex, and often austere. Because your employees are not all data scientists, it is essential to make data “talk”.

How? By resorting to Data Storytelling. The principle is simple: to make data accessible to everyone, whatever their profile, training, or function, it’s better to tell a good story than to hammer home complex principles. Data Storytelling was born from the convergence of Storytelling and Data Visualization, also called Dataviz.

From Storytelling to Data Storytelling

From the time of Socrates to the present day, there is one constant: human beings love stories. Stories appeal to the imagination, help us grasp even the most elaborate concepts, and draw on mechanisms that carry the listener along. Politicians regularly use storytelling to reinforce the impact of their speeches. The media, too, regularly use this lever to make the most complex events or ideas accessible.

The fact that data appears in many contexts and in many forms can be confusing for non-specialists.

However, data, when properly staged, lends itself perfectly to the creation of a narrative that will then be more easily accessible.

Although Dataviz has long been used to popularize the lessons and trends derived from data, these graphic representations could still remain obscure to many employees. Going from numbers to images was no longer enough! From then on, the case for Data Storytelling in the business world became obvious.

Data Storytelling: Benefits All Around

The first benefit of well-designed Data Storytelling is that the message you want to convey to your teams, your prospects or your customers will be easily understandable.

Forget the technical jargon and the IT experts’ vocabulary: when faced with a story, we are all equal. But this is not the only advantage of Data Storytelling.

Beyond its accessibility, it is also a way to synthesize information. The more condensed the message is, the more impactful and effective it is. But there is a third benefit. A good story is easily memorized and stays in the mind longer. Why is that? Simply because Data Storytelling, like any good story, places the message in an emotional dimension. The story makes listeners react. It speaks to them and sticks in the mind, either because it is funny, because it calls upon personal memories, or because it lets them project themselves into the future.

This is the challenge of Data Storytelling: to move from raw data to a story that arouses emotion, reaction, empathy and, ultimately, involvement.

The Keys to Effective Data Storytelling

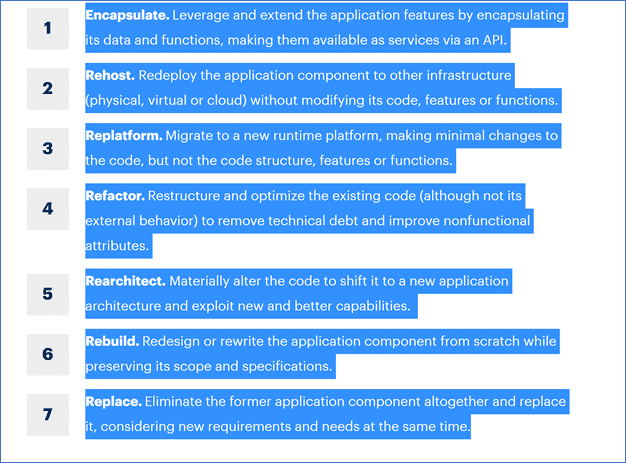

To be impactful, Data Storytelling must be adapted to the target audience. We don’t talk the same way to a marketing team, a sales force, or the customer service department. The Data Storytelling mechanism is based on three pillars. The first is your data, of course. Depending on the type of information you have, you will need to define the other two pillars.

The second pillar is the form of the narrative. The choice of lexical field, the nature of the story, and the tone chosen (humorous, dramatic, satirical) are all elements that must be defined according to the audience you are targeting. Finally, the third key element of your project is the visual representation.

Move away from purely aesthetic considerations: your challenge is to convey the message and the information effectively. On the basis of these three essential elements, you can build your Data Storytelling and thus get even more value from your data capital.
