Hybrid Cloud Benefits and Risks

Traci Curran

September 21, 2021

Hybrid cloud adoption is on the rise. As companies look to accelerate their cloud journey, it’s becoming clear that blending private on-premises clouds with public off-premises clouds brings both advantages and constraints. Striking a strategic balance between the two can often yield more favorable results for the business, but it’s important to carefully consider the hybrid cloud benefits and risks associated with data that moves between public and private infrastructures.

What is a Hybrid Cloud?

A hybrid cloud environment or architecture combines a public cloud and a private cloud to deliver or support a service. A single application can be architected to take advantage of both types of cloud, which is the basic concept of a hybrid cloud. The hybrid model does create complexity that has to be managed, but it often increases the application’s agility and performance.

All clouds share five essential characteristics: resource pooling, on-demand self-service, broad network access, rapid elasticity, and measured service. A hybrid cloud has these five characteristics and can also allow better management of workloads, improved big data processing, improved data ownership, and improved overall performance.

Hybrid Cloud Benefits

Hybrid cloud benefits are closely tied to the management of data. Data is an organization’s lifeblood; if it becomes compromised or corrupted, the organization is in danger of failure. With a hybrid cloud architecture, organizations can design solutions that hold sensitive data in a private cloud and store non-sensitive data in a public cloud. Hybrid cloud storage can also improve the organization’s analytical ability, both to run the day-to-day business and to analyze business trends.
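The split between sensitive and non-sensitive data described above can be illustrated with a small placement rule. This is only a sketch under stated assumptions: the label names, the `Dataset` type, and the `choose_cloud` function are hypothetical and not part of any product or standard, and a real classification scheme would be defined by the organization itself.

```python
from dataclasses import dataclass

# Hypothetical sensitivity labels; real schemes vary by organization.
SENSITIVE_LABELS = {"pii", "financial", "health"}


@dataclass(frozen=True)
class Dataset:
    name: str
    labels: frozenset


def choose_cloud(dataset: Dataset) -> str:
    """Return 'private' if the dataset carries any sensitive label, else 'public'."""
    return "private" if dataset.labels & SENSITIVE_LABELS else "public"


datasets = [
    Dataset("customer_records", frozenset({"pii"})),
    Dataset("web_clickstream", frozenset()),
    Dataset("invoices", frozenset({"financial"})),
]

# Map each dataset name to the cloud it would be stored in.
placement = {d.name: choose_cloud(d) for d in datasets}
print(placement)
```

In practice the rule would likely take more inputs, such as regulatory requirements or data residency, but the principle stays the same: classify first, then place.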

Benefits of hybrid clouds include:

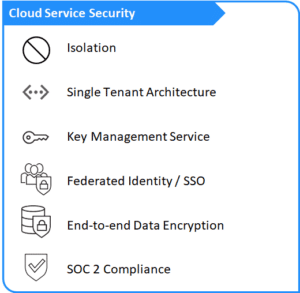

- Better security options for data and applications. Data that needs increased security can be kept in a private cloud and managed by the organization.

- Better control of data allows for better management of data agility for services.

- Better control of demand with separation of transactional workloads between public and private clouds.

- Improved service innovation by taking advantage of the capabilities of both private and public clouds.

- Improved network management of data transactions by locating data closer to the user of the data.

- Improved scalability, since a private cloud solution can be extended with public cloud capacity as demand grows.

Business Benefits

When businesses have better control over their data, they also have better control over how decisions are made. Data is everywhere, and in massive volumes. Timely management of that data is essential for organizational decision support. Besides the data management benefits listed above, a hybrid cloud environment offers other business benefits for the organization.

Business benefits of hybrid cloud include:

- Reduced cost. Private data hosting is a cost to the organization, and there is a trade-off between managing data and securing it. Organizations can move non-sensitive data to the public cloud and keep sensitive data in a highly secured cloud, such as one that has FedRAMP authorization. They can also choose to keep certain workloads in on-prem data centers.

- Better management and control of access to data anywhere at any time.

- Improved service continuity through better management of availability and capacity.

- Improved governance, risk, and compliance (GRC) across the strategy, tactics, and operations of the organization’s services when using a hybrid cloud solution.

Disadvantages

The disadvantages of a hybrid cloud will always depend on the organization. Each organization should have a risk management and backup policy. This includes creating risk registers and deciding on appropriate actions based on the risks the organization might experience. Listed below are a few of the general cons and disadvantages associated with hybrid clouds, though mileage may vary depending on your organization.

Cons for the usage of a hybrid cloud solution are:

- Lack of knowledge in organizations for the usage of cloud computing, including public and private cloud knowledge. Organizations must have knowledgeable people or suppliers who understand their business needs and how cloud environments can help with their strategy and overall operations.

- Hybrid cloud complexity can be cumbersome. Current IT solutions need to be positioned adequately for now and the future based on business needs.

- Insufficient budget to invest in cloud transformation efforts because of other urgent business needs.

- Enhanced network security may be needed between the public and private clouds for hybrid cloud service usage.

Disadvantages of hybrid clouds:

- Increased complexity increases cost and the need for organizational expertise to manage public and private cloud environments, including vendors, platforms, and internal IT resources.

- Capital expenses associated with on-prem environments.

- Increased complexity also increases the risk of security attacks.

Disadvantages and cons are in the eye of the organization. Organizations should partner with external vendors to help with organizational assessments to determine challenges and then create actionable business plans and high-level roadmaps for success.
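The risk register mentioned earlier in this section can be as simple as a scored list. The sketch below uses a common likelihood-times-impact scoring approach; the 1 to 5 scales, the thresholds, the example risks, and the names `Risk` and `action_for` are all illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact score.
        return self.likelihood * self.impact


def action_for(risk: Risk) -> str:
    """Map a risk score to an action. Thresholds are illustrative only."""
    if risk.score >= 15:
        return "mitigate now"
    if risk.score >= 8:
        return "plan mitigation"
    return "accept and monitor"


# Example entries drawn from the cons listed in this section.
register = [
    Risk("Misconfigured link between public and private cloud", 3, 5),
    Risk("Shortage of staff with cloud skills", 4, 3),
    Risk("Budget diverted to other urgent needs", 2, 3),
]

# Print the register with the highest-scoring risks first.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.score:>2}  {action_for(risk):<18} {risk.description}")
```

Each organization would substitute its own risks, scales, and thresholds based on its risk tolerance.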

Is Hybrid Cloud Right for Your Business?

There are pros and cons to the usage of a hybrid cloud solution. Many of the reasons, either way, depend on the organization’s desired outcomes. Companies should assess their risk tolerance and carefully consider how risk is managed. Pros for the usage of a hybrid cloud solution are:

- Better management of workloads. Balancing workloads and access to data with a hybrid cloud solution will help improve the availability and performance of services.

- Improved utilization of IT resources. When tracking IT asset utilization, many organizations may find that utilization is very low, and it may be more cost-effective to move those workloads to a public cloud as needed instead of having IT assets that are not used for long periods consuming financial resources.

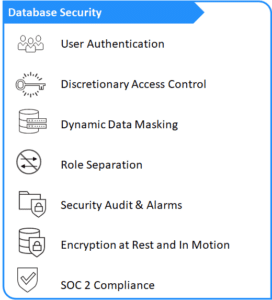

- Management of big data and improving big data analytics for organizational decision support. Big data management is enhanced with hybrid cloud solutions and data warehouses that can be public or private cloud solutions.

- Improved time to market for services. With public cloud IT assets readily available, organizations do not have to go through the time-consuming provisioning of on-premises IT assets and can make services available to customers anywhere around the globe.
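The point above about low IT asset utilization can be made concrete with a small screening check. This is a minimal sketch, assuming utilization is tracked as a list of samples between 0 and 1 per workload; the workload names, the sample values, the 20% threshold, and the function `public_cloud_candidates` are hypothetical.

```python
def public_cloud_candidates(utilization, threshold=0.20):
    """Return names of workloads whose average utilization is below threshold.

    `utilization` maps a workload name to a list of samples in the range 0..1.
    Workloads with no samples are skipped rather than guessed at.
    """
    return sorted(
        name
        for name, samples in utilization.items()
        if samples and sum(samples) / len(samples) < threshold
    )


# Made-up sample data for illustration only.
utilization = {
    "batch-reporting": [0.05, 0.10, 0.02, 0.08],
    "order-database": [0.70, 0.65, 0.80, 0.75],
    "test-environment": [0.01, 0.00, 0.30, 0.03],
}

candidates = public_cloud_candidates(utilization)
print(candidates)
```

A low average is only a starting point for the conversation. Data sensitivity, licensing, and migration cost would still need to be weighed before moving any workload.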

Vendors of cloud solutions make the maintenance of IT components and capabilities easier. Infrastructure is becoming more of a commodity, and many organizations can see cost savings from using the cloud. Shifting the focus to business strategy and treating the hybrid cloud as an enabler of the business increases agility, competitiveness, and customer focus.

The usage of hybrid clouds will continue to grow as organizational trust in these solutions grows. This will allow businesses to focus on core competencies and innovation so they can maintain an edge over the competition.

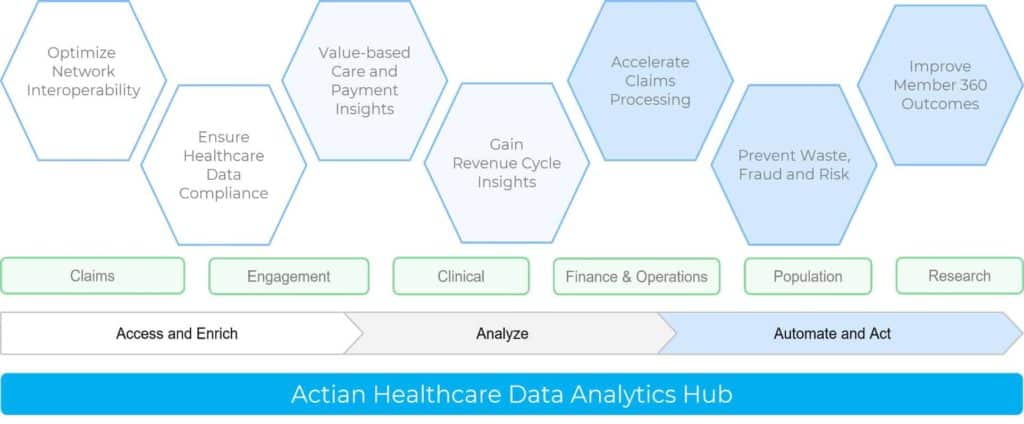

Actian is a fully managed hybrid cloud data warehouse service designed to deliver high performance and scale across all dimensions: data volume, concurrent users, and query complexity. It is a true hybrid platform that can be deployed on-premises as well as on multiple clouds, including AWS, Azure, and Google Cloud, enabling you to migrate or offload applications and data to the cloud at your own pace.
