The Governance Gap: Why 60% of AI Initiatives Fail

Dee Radh

May 1, 2025

Summary

This post makes one central argument: without modern, proactive governance, a majority of AI initiatives will fail to deliver value. It explains what causes governance to break down and how federated, context-aware practices can close the “governance gap.”

- Gartner predicts that by 2027, 60% of organizations will fail to realize the anticipated value of their AI use cases because of incohesive data governance: fragmented, reactive structures that don’t align with business objectives.

- Common pitfalls include compliance-driven rollouts, siloed teams, and outdated tools, all of which hinder scalability and strategic impact.

- A modern solution involves federated data governance via active metadata, context-rich data catalogs, and “shift-left” stewardship at the source, which empowers decentralized teams while preserving oversight.

AI initiatives are surging, and so are the expectations. According to Gartner, nearly 8 in 10 corporate strategists see AI and analytics as critical to their success. Yet there’s a sharp disconnect: Gartner also predicts that by 2027, 60% of organizations will fail to realize the anticipated value of their AI use cases because of incohesive data governance frameworks.

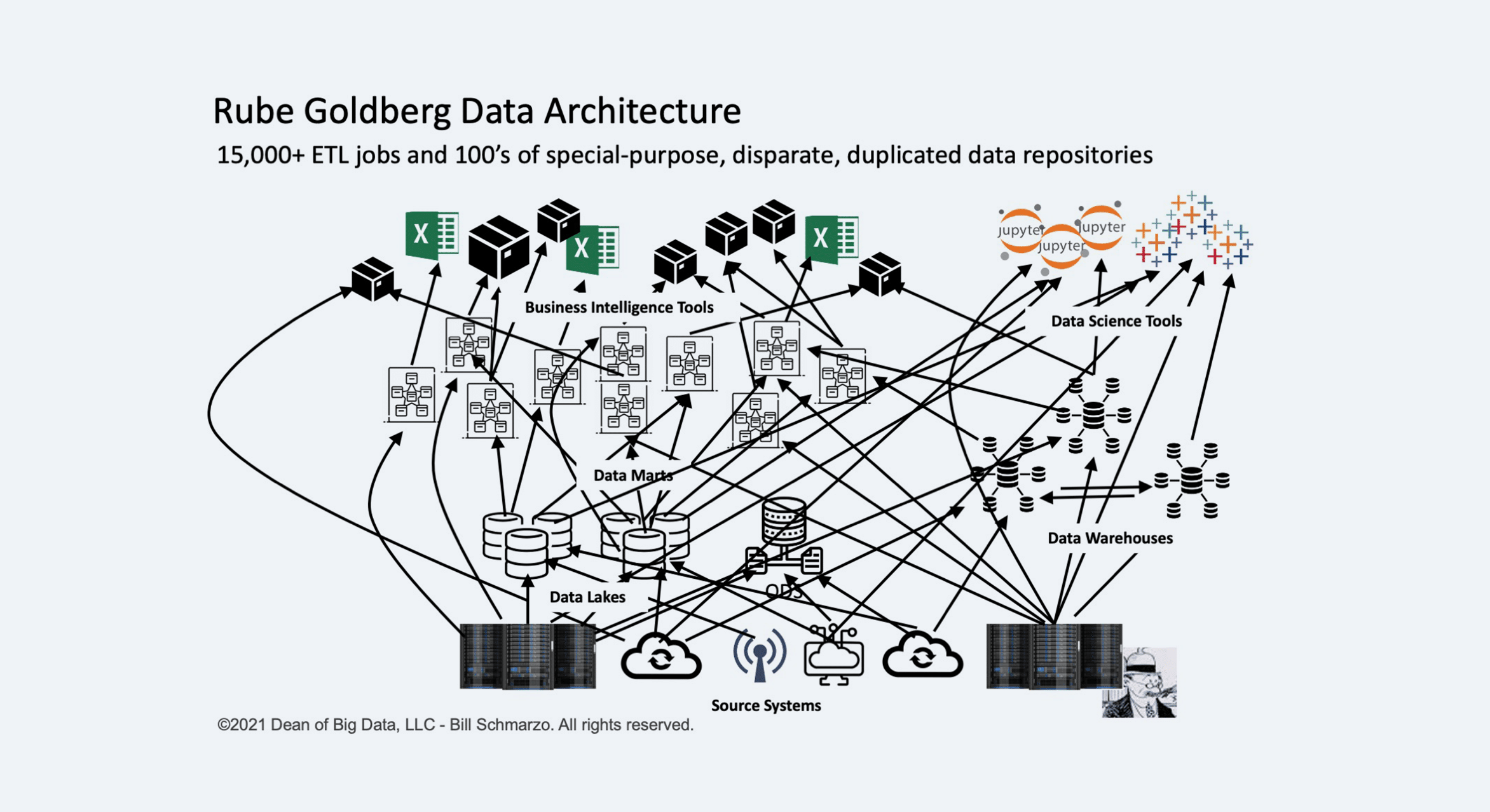

What’s holding enterprises back isn’t intent or even IT investments. It’s ineffective data processes that impact quality and undermine trust. For too many organizations, data governance is reactive, fragmented, and disconnected from business priorities.

The solution isn’t more policies or manual controls. It’s modern technology, with a modern data catalog and data intelligence platform as the cornerstones of data management and governance strategies.

Why Governance Efforts Fail

While many organizations commit to better data governance, they often fall short of their goals. That’s because governance programs typically suffer from one or more of three common pitfalls:

- They’re launched in response to compliance failures, not strategic goals.

- They struggle to scale due to legacy tools and siloed teams.

- They lack usable frameworks that empower data stewards and data users.

According to Gartner, the top challenges to establishing a data governance strategy include talent management (62%), establishing data management best practices (58%), and understanding third-party compliance (43%). With these issues at play, it’s no wonder that data governance remains more aspirational than operational.

Shifting this narrative requires organizations to embrace a modern approach to data governance. This approach entails decentralizing control to business domains, aligning governance with business use cases, and building trust and understanding of data among all users across the organization. That’s where a modern data catalog comes into play.

Going Beyond Traditional Data Catalogs

Traditional data catalogs can provide an inventory of data assets, but that only meets one business need. A modern data catalog goes much further by embedding data intelligence and adaptability into data governance, making it more beneficial and intuitive for users.

Here’s how:

Shift-Left Capabilities for Data Stewards

Moving data governance responsibility upstream puts decisions closer to the people who know the data. This shift-left approach empowers data stewards at the source, where data is created and best understood, and supports context-aware governance and decentralized data ownership.

With granular access controls, flexible metamodeling, and business glossaries, data stewards can apply governance policies when and where they make the most sense. The result? Policies that are more relevant, data that’s more reliable, and teams that gain data ownership and confidence, not friction or bottlenecks.

Federated Data Governance With Active Metadata

A modern data catalog supports federated governance by allowing teams to work within their own domains while maintaining shared standards. Through active metadata, data contracts, and data lineage visualization, organizations gain visibility and control of their data across distributed environments.

Rather than enforcing a rigid, top-down approach to governance, a modern catalog uses real-time insights, shared definitions, and a contextual understanding of data assets to support governance. This helps mitigate compliance risk and promotes more responsible data usage.
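To make the idea of a data contract concrete, here is a minimal sketch of how a domain team might declare the fields it promises for a dataset and check records against that promise. It is an illustration only, not how any particular catalog implements contracts. The names `FieldRule`, `DataContract`, `orders_contract`, and the sample `sales` / `orders` data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldRule:
    """One field the owning domain promises to provide (hypothetical)."""
    name: str
    dtype: type
    required: bool = True


@dataclass(frozen=True)
class DataContract:
    """A shared standard that a domain team owns and publishes (hypothetical)."""
    domain: str
    dataset: str
    fields: tuple

    def violations(self, record: dict) -> list:
        """Return human-readable problems found in a single record."""
        problems = []
        for rule in self.fields:
            value = record.get(rule.name)
            if value is None:
                if rule.required:
                    problems.append(f"{rule.name}: missing required field")
                continue
            if not isinstance(value, rule.dtype):
                problems.append(f"{rule.name}: expected {rule.dtype.__name__}")
        return problems


# Assumed example: the sales domain defines the contract for its own data.
orders_contract = DataContract(
    domain="sales",
    dataset="orders",
    fields=(
        FieldRule("order_id", str),
        FieldRule("amount", float),
        FieldRule("coupon_code", str, required=False),
    ),
)

good = {"order_id": "A-100", "amount": 19.99}
bad = {"order_id": 100, "coupon_code": "SPRING"}

print(orders_contract.violations(good))  # []
print(orders_contract.violations(bad))
# ['order_id: expected str', 'amount: missing required field']
```

In this sketch the domain team owns the contract, while the check itself is generic. That split mirrors the federated model described above: teams work within their own domains, and the shared standard is what everyone validates against.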

Adaptive Metamodeling for Evolving Business Needs

Governance frameworks must evolve as data ecosystems expand and regulations change. Smart data catalogs don’t force teams into a one-size-fits-all model. Instead, they enable custom approaches and metamodels that grow and adapt over time.

From supporting new data sources to aligning with emerging regulations, adaptability helps ensure governance keeps pace with the business, not the other way around. This also promotes governance across the organization, encouraging data users to see it as a benefit rather than a hurdle.
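As a rough illustration of what an adaptable metamodel means in practice, the sketch below lets a team define the attributes an asset type must carry and extend that definition later, for example when a new regulation requires a retention period. This is a simplified assumption for illustration, not a description of a specific product. The `Metamodel` class, its methods, and the `table` asset type are hypothetical.

```python
class Metamodel:
    """A tiny, extensible registry of asset types and their required attributes."""

    def __init__(self):
        self._types = {}

    def define(self, asset_type, attributes):
        """Register a new asset type with its initial required attributes."""
        self._types[asset_type] = set(attributes)

    def extend(self, asset_type, *new_attributes):
        """Add required attributes to an existing type as needs evolve."""
        self._types[asset_type].update(new_attributes)

    def missing(self, asset_type, asset):
        """List required attributes that a documented asset does not yet have."""
        return sorted(self._types[asset_type].difference(asset.keys()))


metamodel = Metamodel()
metamodel.define("table", ["owner", "description"])

orders_table = {"owner": "sales-team", "description": "Orders fact table"}
print(metamodel.missing("table", orders_table))  # []

# A new requirement arrives; the model grows without being redefined.
metamodel.extend("table", "retention_period")
print(metamodel.missing("table", orders_table))  # ['retention_period']
```

The point of the sketch is that existing assets are not invalidated or rebuilt when the model changes. They simply surface as needing one more attribute, which is the kind of incremental evolution the section describes.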

Support Effective Governance With the Right Tools

Adopting a modern data catalog isn’t just about using modern features. It’s also about providing a good user experience. For data governance to succeed, tools must integrate seamlessly and work intuitively for users at all skill levels.

For IT and data stewards, that means simpler metadata collection and policy enforcement. For business users, it means intuitive search and exploration capabilities that make data easy to find, understand, and trust. For every group, the learning curve should be short, so that complex processes don’t discourage people from practicing governance.

By supporting all types of data producers and data consumers, a modern data catalog eliminates the silos that often stall governance programs. It becomes the connective tissue that aligns people, processes, and policies around a shared understanding of data.

Go From Data Governance Aspirations to Outcomes

Most organizations know that data governance is essential, yet few have the right tools and processes to fully operationalize it. By implementing a modern data catalog like the one from Actian, organizations can modernize their governance efforts, empower their teams, and deliver sustainable business value from their data assets.

Organizations need to ask themselves a fundamental question: “Can we trust our data?” With a modern data catalog and strong governance practices, the answer becomes a confident yes.

Find out how to ensure accessible and governed data for AI and other use cases by exploring our data intelligence platform with an interactive product tour or demo.
