Building a Data Management Tech Stack for Tomorrow, Today

Traci Curran

January 24, 2023

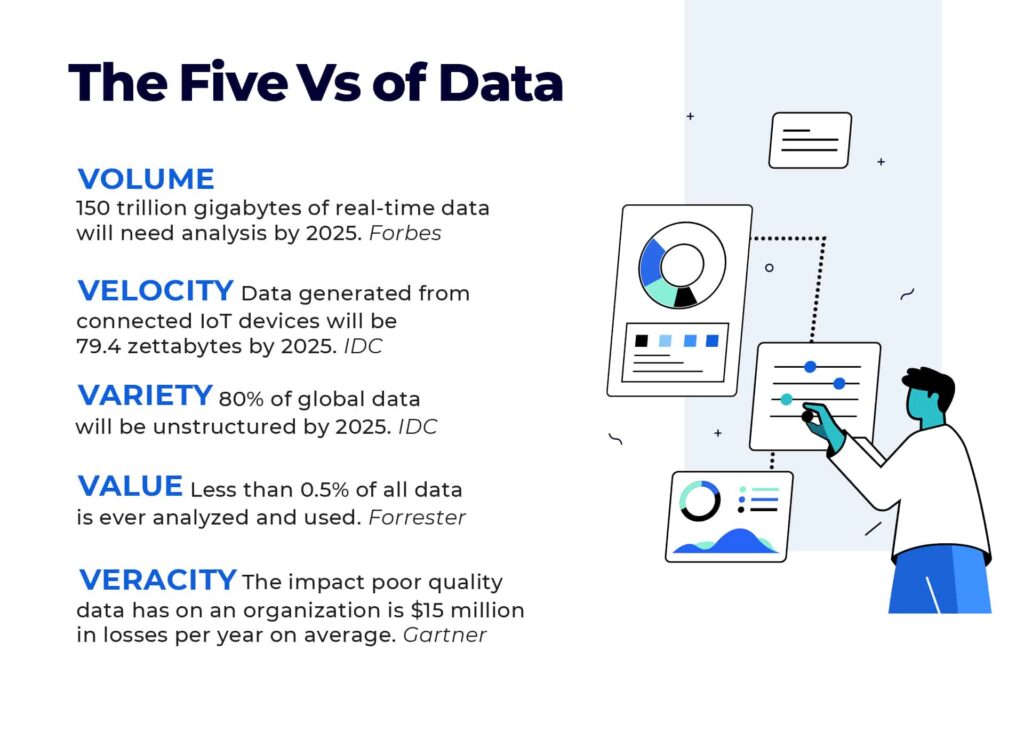

An effective data management tech stack is essential to the health and success of any business, regardless of industry. Data is now generated faster and in higher volumes than ever before, so businesses need unified, agile systems to keep pace. With data volumes surging, now is the time for businesses to reassess whether their stacks are up to the job.

The way a business builds its data management stack has deep implications across the organization. Data constantly needs to be shared, whether from sales teams to customer service teams or from user-facing systems to application development teams. If data is the lifeblood of business, then an effective tech stack is the backbone.

Here, we’ll look at factors to consider when modernizing data management software, including the steps enterprises should take before deciding on a solution, common challenges to adopting new systems, and best practices for bringing new technology into the stack.

What to Consider Ahead of an Upgrade

Modernizing the tech stack can help an enterprise deliver more effective results for customers, cut costs, and increase operational agility. To deliver on the potential of a modern tech stack, however, businesses need to weigh several considerations first.

The first is an audit of which systems are keeping pace with today’s innovation, which are performing only adequately, and which are underperforming. Understanding where efficiencies can be created is essential, because enterprise data management needs vary from business to business. Taking the time to identify which aspects matter most to your business goals keeps the modernization strategy focused on the upgrades that will have the greatest impact.

To kickstart the modernization process, businesses must first assess their data warehouse needs and the types of datasets they will process. This means business leaders asking themselves how well their current data management plan is functioning, and whether it can integrate internal and external data to paint 360-degree views of customers. A working understanding of how the current system functions helps identify the areas where operational agility can be improved, both now and in the future.

When assessing how future-ready the current IT architecture is, businesses must also consider how well it is set up for innovation. This includes being agile enough to collect data from edge devices, IoT devices, sensors, and other connected systems used in smart enterprises. And if the current IT infrastructure can process data from these sources, will that data be available in real time for instant analysis?

As datasets and IT tools continue growing in complexity, businesses often run multiple data processing systems and tools alongside each other. As they assess data management strategies, business leaders need to ask themselves whether their systems can run concurrently with one another, and whether that concurrency will hold up as datasets grow more complex.

A future-proof data management tech stack will give enterprises the clearest picture of their business and its potential for future innovation. To become “future-proof,” businesses must prepare for and overcome certain obstacles.

Challenges to Modernization

Data storage and processing make up one of the largest pieces of the enterprise pie and, as such, take up a lot of IT resources. When businesses identify a part of their data management stack to upgrade, they should be aware that there are some size-related hurdles to clear first.

Though many data management solutions today are cloud-based, enterprises often use a mix of on-premises and cloud solutions. This means that upgrades to a data processing system can sometimes include onsite setup and maintenance costs associated with the data center.

Additionally, if a business opts for a hybrid mix of cloud and on-premises storage, it will need to migrate and integrate data between the two. Depending on how much data is moved from one storage site to the other, this can take some time. Migration is also where data quality issues tend to surface, and affected data must either be scrubbed for use or discarded as unusable.
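As a rough illustration of the scrub-or-discard decision described above, the following Python sketch triages records during a migration. The field names (`customer_id`, `email`) and the cleaning rules are hypothetical assumptions chosen for the example, not part of any particular platform; a real migration would apply rules specific to its own schema.

```python
from typing import Optional

# Hypothetical schema: fields a record must have to be considered usable.
REQUIRED_FIELDS = ("customer_id", "email")


def scrub(record: dict) -> Optional[dict]:
    """Return a cleaned copy of the record, or None if it cannot be repaired."""
    # Trim stray whitespace from every string value.
    cleaned = {
        key: value.strip() if isinstance(value, str) else value
        for key, value in record.items()
    }
    # A record missing any required field is treated as unusable.
    for field in REQUIRED_FIELDS:
        if not cleaned.get(field):
            return None
    email = cleaned["email"]
    # Very loose sanity check, for illustration only.
    if not isinstance(email, str) or "@" not in email:
        return None
    cleaned["email"] = email.lower()
    return cleaned


def triage(records: list) -> tuple:
    """Split records into (usable after scrubbing, discarded as unusable)."""
    usable, discarded = [], []
    for record in records:
        cleaned = scrub(record)
        if cleaned is None:
            discarded.append(record)
        else:
            usable.append(cleaned)
    return usable, discarded


records = [
    {"customer_id": "42", "email": "  Ana@Example.com "},
    {"customer_id": "", "email": "x@y.com"},
    {"customer_id": "7", "email": "not-an-email"},
]
usable, discarded = triage(records)
print(len(usable), "usable;", len(discarded), "discarded")
```

Keeping the discarded records in their original form, rather than dropping them silently, makes it possible to review why they failed before deciding they are truly unusable.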

Finally, modernizing data management systems raises the challenge of compute and storage capacity. As mentioned, data management systems are often the largest IT element in a business, and it’s critical to have storage that can handle the datasets. For on-premises storage sites, this includes having enough power and physical space. For data stored in the cloud, businesses should prepare for costs to vary with data volume.
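To make the point about volume-driven cloud costs concrete, here is a minimal Python sketch that projects monthly storage spend as data grows. The starting volume, growth rate, and price per terabyte are made-up assumptions for illustration; actual cloud pricing is usually tiered and varies by provider, so treat this as a back-of-the-envelope model only.

```python
def project_storage_costs(start_tb, monthly_growth_rate, price_per_tb_month, months):
    """Project monthly storage cost under compound data growth.

    All inputs are illustrative assumptions, not real provider pricing.
    """
    costs = []
    volume_tb = start_tb
    for _ in range(months):
        # Cost for the current month at a flat per-terabyte rate.
        costs.append(round(volume_tb * price_per_tb_month, 2))
        # Data volume compounds month over month.
        volume_tb *= 1 + monthly_growth_rate
    return costs


# Hypothetical scenario: 100 TB today, growing 10% per month, at 20.00 per TB-month.
costs = project_storage_costs(100, 0.10, 20.0, 3)
print(costs)
```

Even a simple projection like this shows how quickly a fixed per-terabyte rate compounds with growth, which is worth factoring into the budget before choosing between cloud and on-premises capacity.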

Upgrading a data management tech stack doesn’t have to be a daunting task. Preparing today for tomorrow’s innovation means taking an honest look at how well prepared your stack is for the future, and whether it can support continued business growth.

There are plenty of choices today for enterprises looking to improve their data management strategies as businesses leverage the cloud more and more. See how cloud-based services like the Actian Data Platform can take your data game to the next level, no matter what the future holds: www.actian.com/data-platform
