Enterprise-grade by design

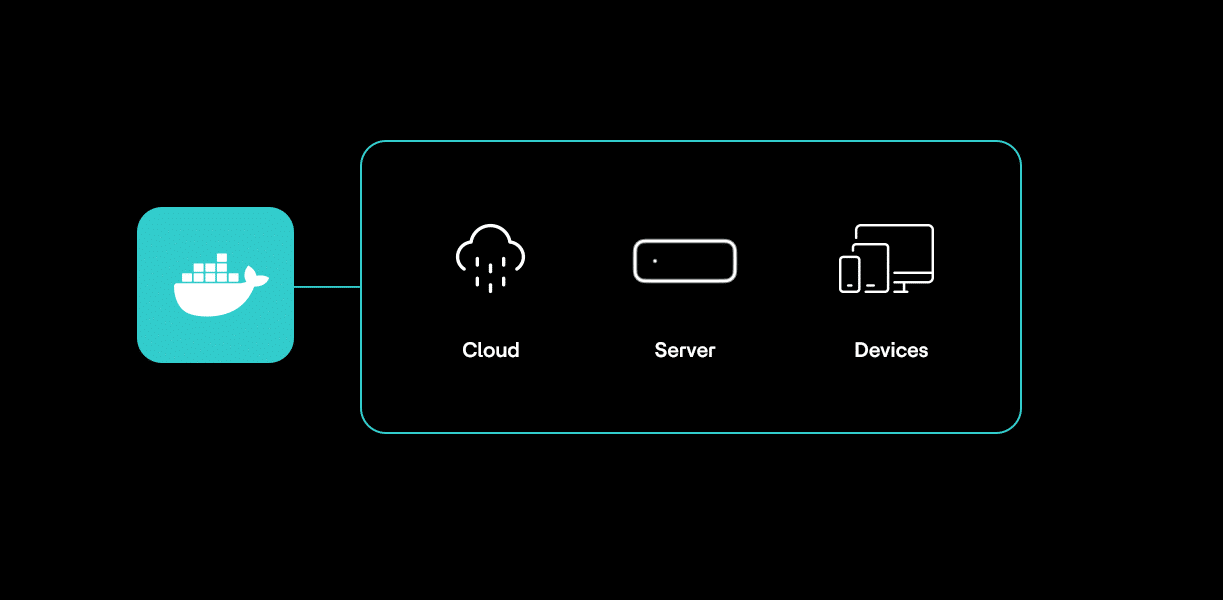

Deploy anywhere: cloud, edge, or fully offline

Run vector search wherever your application lives. Works on your laptop, at the edge, in your data center, or in air-gapped environments. No cloud dependency, no connectivity assumptions.

Developer-first, production ready from day one

Start local, deploy to production without re-platforming. Familiar APIs, standard integrations, and clear documentation get you from prototype to production fast.

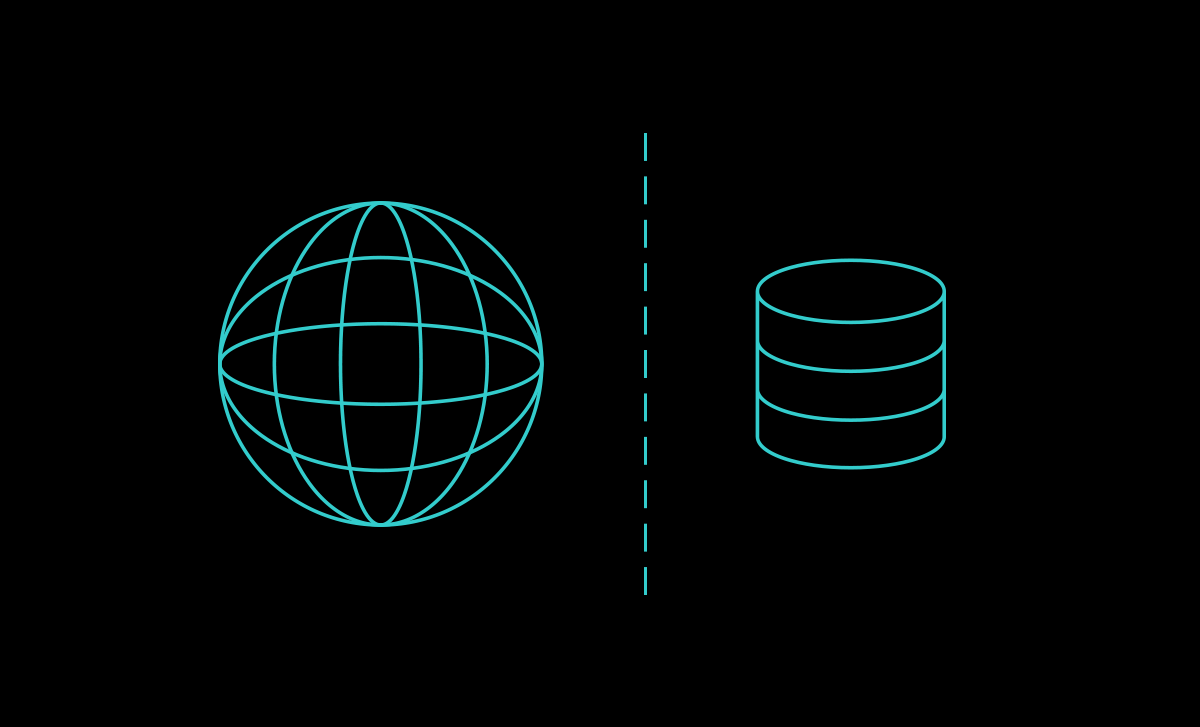

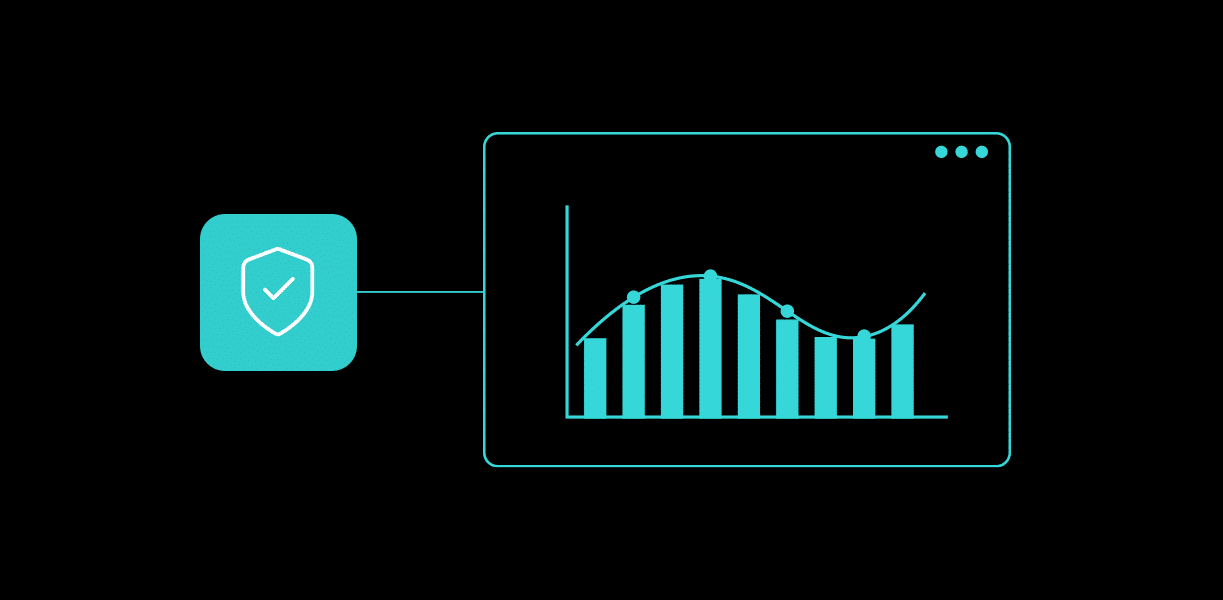

Your data stays yours

Deploy AI where your data resides. Meet privacy, compliance, and residency requirements without sending vector embeddings to third-party cloud services.

Designed to do more

Actian VectorAI DB enables portable AI by offering:

Production deployments in regulated and edge-constrained environments

Deploy AI-powered support agents that answer questions using your knowledge base and support history. Run RAG pipelines on-premises without sending customer data to the cloud.

Power real-time inventory tracking and product search at retail locations and warehouses. Works offline during network outages, syncs when connected.

Run equipment failure prediction and maintenance documentation search directly on factory floors. Works in isolated facilities where cloud connectivity is unreliable or unavailable.

Search and analyze contracts, regulatory filings, and confidential agreements locally. Meet data residency requirements while deploying semantic search across sensitive documents.

Deploy facial recognition for security, access control, and identity verification at the edge. Process biometric data locally without cloud dependencies for privacy and compliance.

Optimized for local development and on-premises deployment

Build locally, deploy securely, and scale without changing your stack.

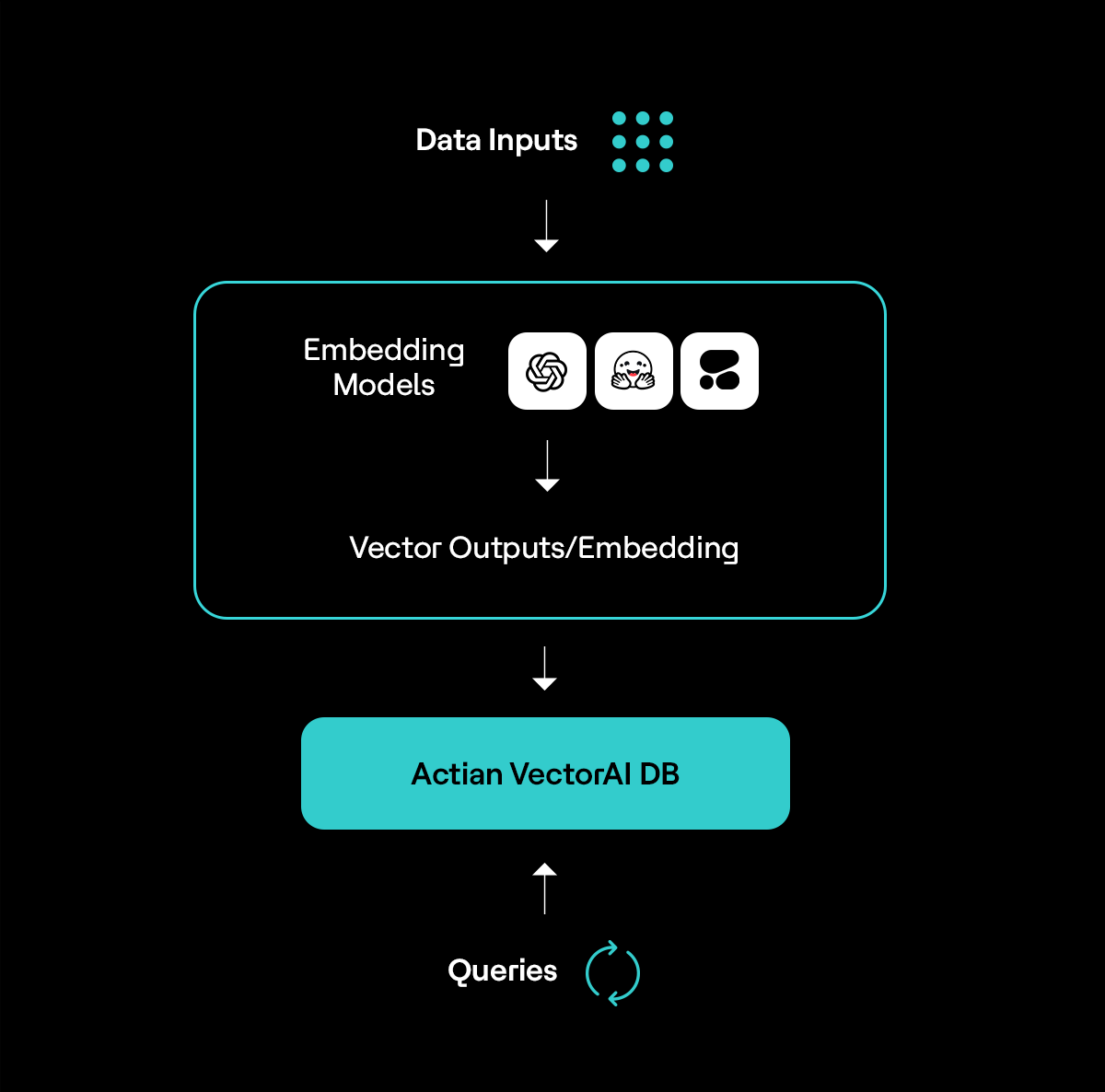

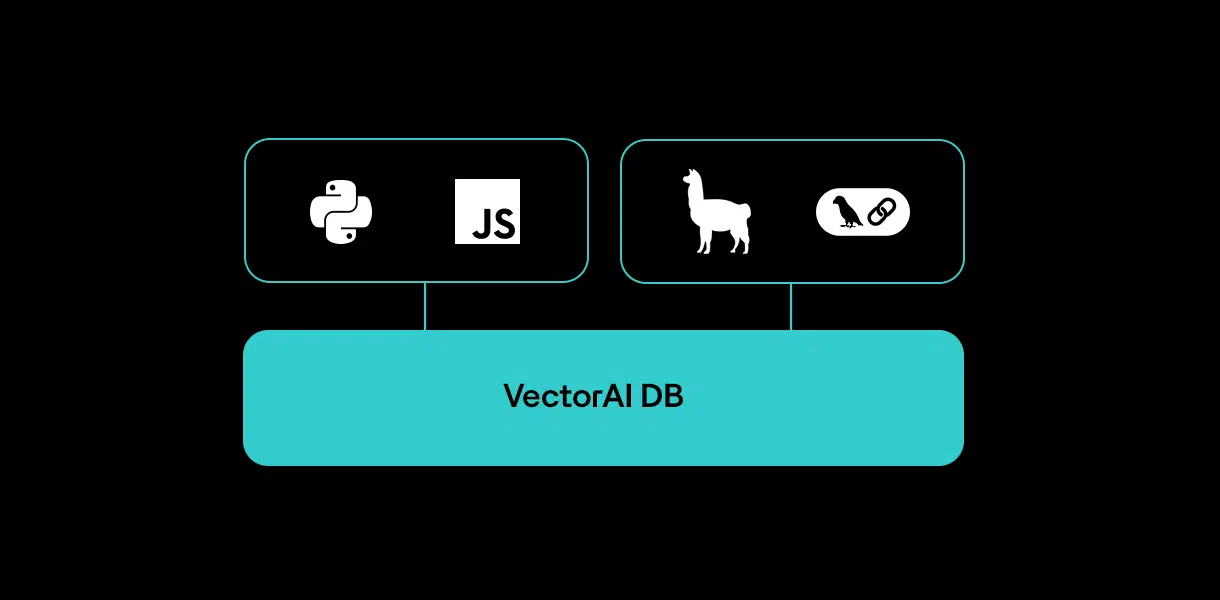

Python & JavaScript SDKs, REST & SQL APIs. Native LangChain & LlamaIndex support with OpenAI & Cohere models.¹

¹ Full integration suite available at public beta launch.

Docker container for local development & production deployment. Linux, Windows, & x86 support. Kubernetes, bare metal, & ARM support coming soon.

Encryption at rest & in transit. Local built-in management UI with monitoring, analytics, & health dashboards.

Works with your existing AI stack

Edge-optimized performance without cloud latency

Sub-15ms query latency

Fast local queries eliminate cloud round-trip overhead for responsive applications.

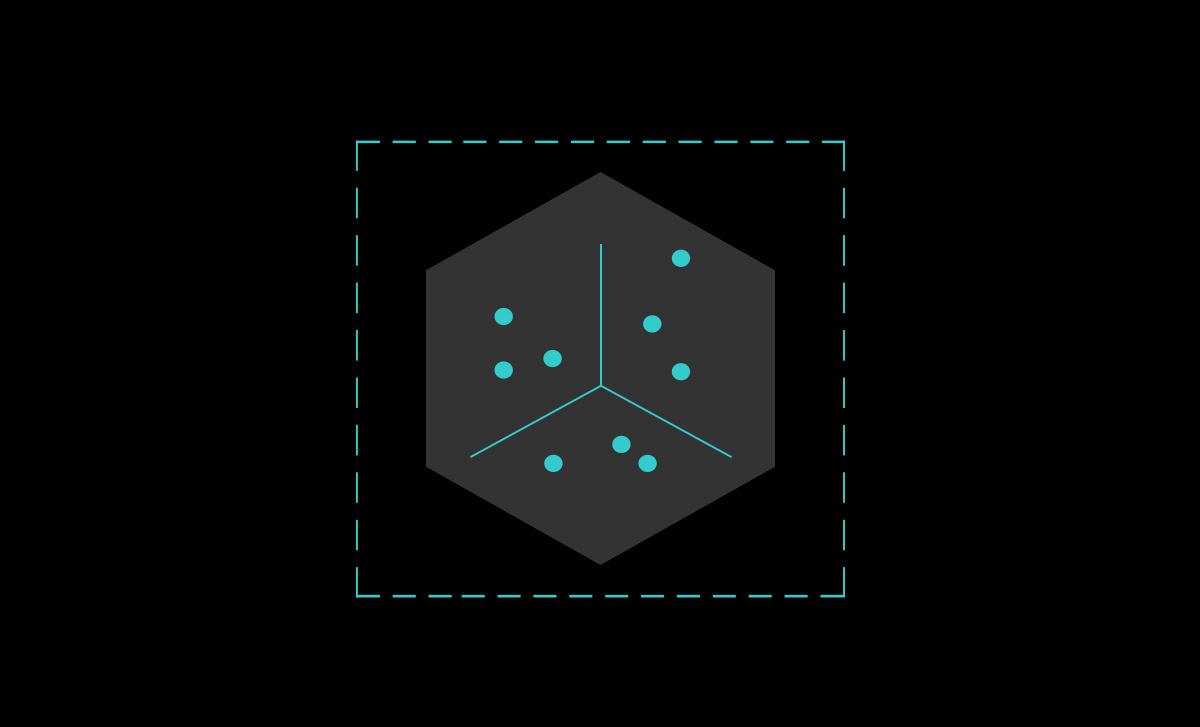

HNSW-based indexing

Optimized HNSW implementation for high recall accuracy and fast load times, validated against industry-standard benchmarks.
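For readers new to HNSW: at query time it runs a best-first search over a proximity graph, one layer at a time. The sketch below shows that single-layer search routine in plain Python. It is a generic illustration, not Actian's implementation. It assumes a toy 2D dataset and swaps HNSW's incremental multi-layer construction for a hypothetical brute-force k-nearest-neighbor graph. The names `knn_graph`, `search_layer`, and the beam width `ef` are chosen for illustration only.

```python
import heapq
import math

def knn_graph(vectors, k):
    """Hypothetical helper: link each point to its k nearest points, in both directions.

    This brute-force graph stands in for HNSW's incremental, multi-layer construction.
    """
    neighbors = {i: set() for i in range(len(vectors))}
    for i, v in enumerate(vectors):
        others = sorted((math.dist(v, w), j) for j, w in enumerate(vectors) if j != i)
        for _, j in others[:k]:
            neighbors[i].add(j)
            neighbors[j].add(i)  # keep edges undirected so the graph stays navigable
    return neighbors

def search_layer(query, entry, vectors, neighbors, ef):
    """Best-first search of one graph layer, keeping the ef closest points found."""
    d0 = math.dist(query, vectors[entry])
    visited = {entry}
    candidates = [(d0, entry)]  # min-heap: nearest unexpanded point first
    results = [(-d0, entry)]    # max-heap via negation: farthest kept result on top
    while candidates:
        d_c, c = heapq.heappop(candidates)
        if d_c > -results[0][0]:
            break  # nearest candidate is farther than every kept result: stop
        for n in neighbors[c]:
            if n in visited:
                continue
            visited.add(n)
            d_n = math.dist(query, vectors[n])
            if len(results) < ef or d_n < -results[0][0]:
                heapq.heappush(candidates, (d_n, n))
                heapq.heappush(results, (-d_n, n))
                if len(results) > ef:
                    heapq.heappop(results)  # drop the farthest kept result
    # Return (distance, index) pairs, closest first.
    return sorted((-neg_d, i) for neg_d, i in results)

# Toy data: a 5x5 grid of 2D points; the search starts from point 0 at (0, 0).
vectors = [(x, y) for x in range(5) for y in range(5)]
graph = knn_graph(vectors, k=4)
query = (3.2, 1.9)
hits = search_layer(query, entry=0, vectors=vectors, neighbors=graph, ef=3)

# Compare against an exact brute-force scan.
exact = min(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
print(hits[0][1] == exact, vectors[hits[0][1]])  # prints: True (3, 2)
```

Raising `ef` widens the beam, trading query time for recall. In the published HNSW algorithm, this routine runs on each layer in turn, and the best result from a sparser upper layer becomes the entry point for the denser layer below it.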

Real-time indexing

Updates are available to queries immediately, with no eventual-consistency delays.

Transparent performance data

Openly published benchmark results on ANN-Benchmarks and VectorDBBench for independent validation.

Flexible deployment for any application architecture

Local development

Install on your laptop or workstation with Docker. Build and test vector search applications locally before deploying to production.

Use for: Prototyping, testing, local development workflows.

Production deployment

Deploy with Docker in your data center, private cloud, or public cloud infrastructure. Run vector search where your data resides.

Use for: Production applications, regulatory compliance, data sovereignty.

Edge & embedded

Single-node deployment at remote locations and on edge devices. Operates independently during network outages, with optional synchronization.

Use for: Retail locations, manufacturing sites, and distributed operations.

Get Notified When VectorAI DB Launches

Join the waitlist for early access to the public beta on April 28th. Deploy local-first vector search for RAG applications and semantic search without cloud dependencies.