An Enterprise Data Platform (EDP) supports analytic applications by providing access to multiple data sources, such as data warehouses and data lakes. Unlike traditional enterprise data warehouses, an EDP does not try to centralize all analytical data in a single location. The EDP acts as an index for all the essential data assets in a business. The EDP catalogs data assets using metadata and hosts its own data warehouses.

Creating the Enterprise Data Platform

An EDP needs to be architected so that users can easily find the data and analytics they need. It also needs a role-based access system that limits each individual or business group to the assets it is authorized to view. Modern data platforms such as the Actian Data Platform can work with existing security frameworks such as Active Directory to map data sets to the user’s security role.
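
The role-to-asset mapping described above can be sketched in a few lines. This is a minimal illustration, not how any particular platform implements it: the `DataAsset` class, the `visible_assets` function, and the asset and role names are all hypothetical, and a real deployment would take the roles from a directory service such as Active Directory rather than from literals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    name: str
    allowed_roles: frozenset[str]  # roles permitted to view this asset


def visible_assets(user_roles: set[str], assets: list[DataAsset]) -> list[str]:
    """Return the names of assets that share at least one role with the user."""
    return [a.name for a in assets if a.allowed_roles & user_roles]


# Hypothetical assets and roles, for illustration only.
ASSETS = [
    DataAsset("sales_warehouse", frozenset({"sales_analyst", "finance"})),
    DataAsset("hr_data_lake", frozenset({"hr"})),
    DataAsset("web_clickstream", frozenset({"marketing", "sales_analyst"})),
]

print(visible_assets({"sales_analyst"}, ASSETS))
# ['sales_warehouse', 'web_clickstream']
```

A user with no matching role sees an empty list, which is the behavior a default-deny policy would expect.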

The EDP must have its own data warehouses that support batch data loading and provide access to streaming data. Data not stored internally, such as some semi-structured and unstructured data, must be accessible through data integration connectors.

Existing data lakes, such as Hadoop clusters, can be connected to the EDP. To use them, the EDP should be able to read the file formats commonly used with Hadoop, such as Parquet and ORC.

Key Functions of an Enterprise Data Platform

- Data ingestion functions ease provisioning with connectors and utilities such as parallel fast loaders.

- Data storage needs to handle different data formats, storing tables in row format for Online Transaction Processing (OLTP) applications and in columnar format for data analytics applications.

- Data processing provides the functions for querying data, parallelizing operations, and managing consistency and concurrency for the EDP.

- User interface functions include connectors to Business Intelligence (BI) tools, APIs such as Open Database Connectivity (ODBC), and direct connectors to operational systems.

- The data pipeline is responsible for the orderly flow of data from various source systems to the analytics database.
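
The difference between row and columnar storage mentioned in the list above can be shown with plain Python structures. This is only a sketch under simplifying assumptions: the order records and the `to_columns` helper are hypothetical, and real engines add compression, typed storage, and on-disk layouts that are not modeled here.

```python
# Three hypothetical order records in a row-oriented layout (one dict per row).
rows = [
    {"order_id": 1, "region": "EMEA", "amount": 120.0},
    {"order_id": 2, "region": "APAC", "amount": 75.5},
    {"order_id": 3, "region": "EMEA", "amount": 40.0},
]


def to_columns(records: list[dict]) -> dict[str, list]:
    """Pivot row-oriented records into one list per column."""
    columns: dict[str, list] = {}
    for record in records:
        for key, value in record.items():
            columns.setdefault(key, []).append(value)
    return columns


columns = to_columns(rows)

# OLTP-style access: fetch one whole record by position.
second_order = rows[1]

# Analytic-style access: aggregate one column without touching the others.
total_amount = sum(columns["amount"])

print(second_order["order_id"], total_amount)
# 2 235.5
```

The row layout keeps each record together, which suits transactional lookups, while the columnar layout keeps each attribute together, which suits scans and aggregates over a few columns.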

Scaling the EDP

An EDP needs to support a hybrid of on-premises and cloud-based data warehouse instances. On-premises instances can be required for compliance reasons. Cloud platforms offer elastic compute and storage that scale on demand to keep pace with growing user loads.

Universal Connectivity

The EDP must be accessible from Structured Query Language (SQL) embedded in applications, so APIs are needed to support scripting and development languages. BI tools must connect to the EDP to allow users to query and visualize analytic data.

Benefits of an Enterprise Data Platform

Below are some reasons for creating an enterprise data platform:

- An EDP makes valuable data assets easy to find. The business can curate the best data sources and encourage their use by including them in the EDP.

- The EDP increases the utilization of the most valued data assets as more users share preferred versions of data rather than creating their own unmanaged copies. Focusing the organization on the highest-quality data sources avoids the cost of maintaining more assets than are needed.

- It reduces the duplication of data sets in silos by promoting the reuse of existing assets that could otherwise be overlooked.

- It offers the flexibility to support multiple data storage formats, adding value to existing big data and data warehouse investments. The EDP does not dictate the wholesale replacement of existing data warehouses. As systems are modernized, they can be included as first-level repositories in the EDP.

- It improves regulatory compliance because the EDP can apply appropriate security controls.

- It enables faster deployment of new data sources using existing instances as templates.

- It creates a foundation for data mesh and data fabric initiatives. Both data meshes and data fabrics help to raise the quality of data assets and reduce management costs.

Leveraging Actian as an Enterprise Data Platform

Below are some key features that make the Actian Data Platform a solid foundation for an EDP:

- Built-in connectors to hundreds of data sources.

- Scheduler for data pipeline operations.

- Columnar storage for relational tables to eliminate the need for traditional database indexes.

- Support for external data sources, including Hadoop and Spark.

- Distributed query processing.

- Vector processing on commodity processors.

- Hybrid-cloud provisioning.

- Multi-cloud support.

- Programming APIs and BI tool integrations.

Central Visibility for Distributed Data

The enterprise data platform can deploy data warehouses on-premises and in cloud environments. Features such as a data catalog, distributed queries, and connectors to external data sources simplify navigation to distributed data.
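
A metadata catalog of this kind can be thought of as a searchable list of entries that record where each asset lives. The sketch below is illustrative only: `CatalogEntry`, `search`, and every asset name, location, and tag are hypothetical and do not reflect any specific product's catalog schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    location: str  # where the asset lives, e.g. "on-premises" or "cloud"
    kind: str      # e.g. "warehouse" or "data lake"
    tags: frozenset[str]


def search(
    catalog: list[CatalogEntry],
    tag: Optional[str] = None,
    location: Optional[str] = None,
) -> list[str]:
    """Return the names of entries that match every filter that was supplied."""
    return [
        e.name
        for e in catalog
        if (tag is None or tag in e.tags)
        and (location is None or e.location == location)
    ]


# Hypothetical entries, for illustration only.
CATALOG = [
    CatalogEntry("finance_dw", "on-premises", "warehouse", frozenset({"finance", "curated"})),
    CatalogEntry("clickstream_lake", "cloud", "data lake", frozenset({"marketing"})),
    CatalogEntry("sales_dw", "cloud", "warehouse", frozenset({"sales", "curated"})),
]

print(search(CATALOG, tag="curated"))
# ['finance_dw', 'sales_dw']
print(search(CATALOG, tag="curated", location="cloud"))
# ['sales_dw']
```

Because the catalog stores only metadata, users can locate an asset from one place without the data itself being moved.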

Data Pipeline

As operational data sources change over time, Extract, Transform, Load (ETL) pipelines can be used to refresh the data warehouses that the EDP manages. Incoming data can be transformed, filtered, and normalized before it is stored in the data warehouses to which the EDP is connected. Extract, Load, Transform (ELT) provides an alternative to ETL, in which uploaded data is cleaned and transformed as needed within a data warehouse. Data integration technology can manage complete data pipelines, from pre-defined connectors to scheduling functions for running pipeline scripts. More comprehensive data integration technology provides enterprise-wide monitoring of data pipelines, with the ability to check operations, retry failed scripts, and raise alerts when problems occur.

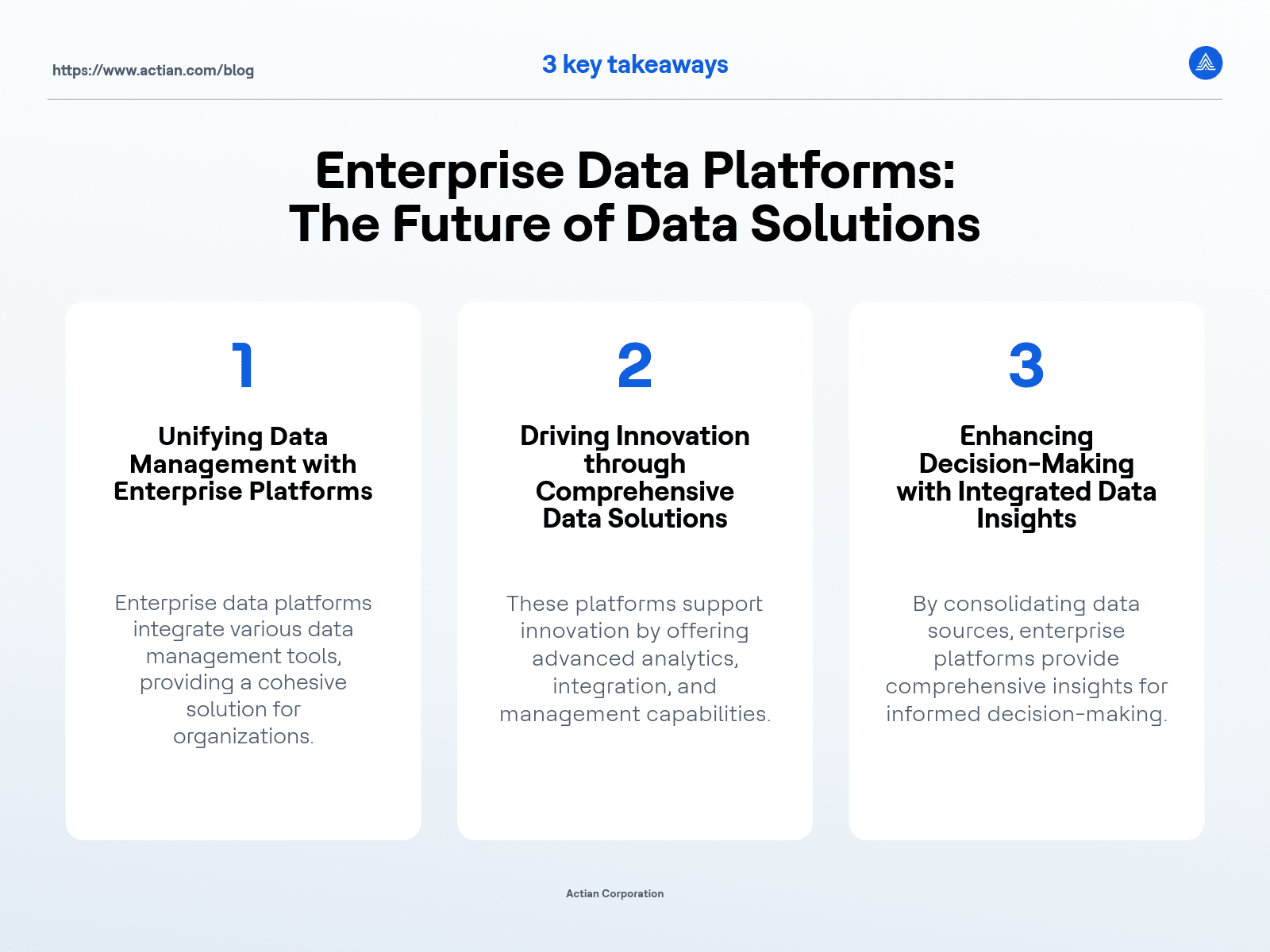
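
The ETL flow and the retry behavior described in this section can be sketched as follows. This is a toy example under stated assumptions: an in-memory SQLite database stands in for a data warehouse, the source records are made up, and `extract`, `transform`, `load`, and `run_with_retry` are hypothetical helper names rather than part of any data integration product.

```python
import sqlite3


def extract() -> list[dict]:
    """Stand-in for reading records from an operational source."""
    return [
        {"customer": " alice ", "amount": "120.50"},
        {"customer": "bob", "amount": "not-a-number"},
        {"customer": "Carol", "amount": "40"},
    ]


def transform(records: list[dict]) -> list[tuple]:
    """Normalize names, parse amounts, and filter out rows that fail to parse."""
    clean = []
    for record in records:
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # drop rows with unparseable amounts
        clean.append((record["customer"].strip().title(), amount))
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Write the cleaned rows into the stand-in warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()


def run_with_retry(step, attempts: int = 3):
    """Run a pipeline step, retrying on failure and re-raising after the last attempt."""
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except Exception as exc:
            print(f"attempt {attempt} failed: {exc}")  # stand-in for an alert
            if attempt == attempts:
                raise


conn = sqlite3.connect(":memory:")
rows = run_with_retry(lambda: transform(extract()))
run_with_retry(lambda: load(conn, rows))
total = conn.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
print(rows, total)
```

In an ELT variant, the raw records would be loaded first and the cleaning in `transform` would instead run as SQL inside the warehouse.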

Key Takeaways