Table of Contents

Basics of Data Observability

What is Data Observability?

The Business Imperative of Data Observability

The Technical Role of Data Observability

The Human Side of Data: Key User Personas and Their Pain Points

Data Observability Use Cases for Modern Organizations

6 Common Business Use Cases

- Financial Services: Risk Management and Fraud Detection

- Healthcare: Patient Safety and Operational Excellence

- Retail and E-commerce: Customer Experience Optimization

- Manufacturing: Production Optimization and Quality Control

- Telecommunications: Network Performance and Customer Service

- Energy and Utilities: Infrastructure Monitoring and Regulatory Compliance

8 Technical Use Cases

- Data Pipeline Monitoring and Automated Recovery

- ML Model Performance and Data Drift Detection

- Regulatory Compliance and Data Governance Automation

- Cost Optimization and Resource Management

- Data Quality Scoring and SLA Management

- Incident Response and Root Cause Analysis

- Data Lineage and Impact Analysis

- Data Quality Monitoring

Basics of Data Observability

Data has never been more important. Companies are generating and handling more data than ever before, and they are making critical business decisions based on that data. This has brought data observability to the forefront of conversations. To help you better understand it, here’s a comprehensive overview of what data observability is and what it looks like to use it day-to-day.

What is Data Observability?

Data observability is the ability to understand the health and state of data in your systems by measuring quality, reliability, and lineage. It enables you to be the first to know when data is wrong, to see what broke, and to understand how to fix it. A healthy data observability system keeps your data ecosystem running smoothly, reducing issues and improving data insights.

Data observability is a holistic approach that automates the identification and resolution of data problems, simplifying data system management and improving performance. It encompasses understanding and managing the health and performance of data, pipelines, and the critical business processes that depend on them.

Prominent examples of data observability platforms include Acceldata, Actian Data Observability, Anomalo, Bigeye, Datafold, Great Expectations, Monte Carlo, and Soda.

Proper data monitoring, quality assessment, and integration with your existing data ecosystem are crucial for a comprehensive data strategy and for visibility into the business. A data observability solution without this holistic view tells an incomplete story, limiting the insights you can draw from your data.

The Business Imperative of Data Observability

Data observability is instrumental in enabling organizations to make informed decisions quickly and efficiently. The stakes couldn’t be higher: Gartner estimates that “through 2025, at least 30% of AI projects will be abandoned after proof of concept due to poor data quality, inadequate risk controls, escalating costs or unclear business value,” and that “93% [of] teams fail to detect data issues before it impacts business.”

Most organizations believe their data is unreliable, and the impact of bad data shouldn’t be underestimated. In May 2022, Unity Software discovered it had been ingesting bad data from a large customer, which led to a 30% plunge in the company’s stock and ultimately cost the business $110 million in lost revenue. The cost of fixing bad data grows exponentially with each stage it passes through.

Data observability is a strategic layer that is essential for any organization looking to maintain competitiveness in a data-driven world. The ability to act quickly on trustworthy data translates to improved operational efficiencies, better customer relationships, and enhanced profitability.

The Technical Role of Data Observability

The primary function of data observability is to facilitate reliable data operations, not just to monitor data itself. Data observability refers to the practice of monitoring, managing, and maintaining data in a way that ensures its quality, availability, and reliability across various processes, systems, and pipelines within an organization.

Achieving the business imperatives of data observability relies heavily on these 4 key technical capabilities:

- Data Monitoring: Continuous, near-real-time monitoring is critical for applications that require immediate action, such as fraud detection systems, customer interaction management, and dynamic pricing strategies.

- Automated Issue Detection and Resolution: Modern data observability platforms must handle large datasets and support complex anomaly detection efficiently. This capability is particularly vital in retail, finance, and telecommunications, where the ability to detect and resolve issues automatically is necessary for maintaining operational efficiency and customer satisfaction.

- Data Quality and Accessibility: The quality of insights directly correlates with the quality of the data ingested and stored throughout your systems. Ensuring data is accurate, clean, and easily accessible is paramount for effective analysis, reporting, and AI model training.

- Advanced Capabilities: Modern data observability platforms are evolving to meet new challenges and opportunities through:

  - AI-Powered Anomaly Detection: Leveraging machine learning to identify unusual patterns automatically.

  - End-to-End Data Lineage: Enabling complete visibility into data flow and dependencies.

  - Automated Root Cause Analysis: Supporting rapid troubleshooting and issue resolution directly within the observability environment.
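To make the monitoring capability more concrete, here is a minimal sketch of two of the most common checks, freshness and volume, assuming table metadata (last load time and row count) is already available. The `TableStats` class, the `check_table_health` function, and the thresholds are hypothetical illustrations, not any platform’s API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class TableStats:
    """Hypothetical metadata snapshot for one monitored table."""
    name: str
    last_loaded_at: datetime  # time of the most recent successful load
    row_count: int            # rows written by that load


def check_table_health(stats, now, max_staleness, expected_rows, tolerance=0.5):
    """Hypothetical freshness and volume check; returns a list of issues.

    max_staleness: how old the latest load may be before it counts as stale.
    expected_rows: typical rows per load (for example, a trailing average).
    tolerance: allowed fractional deviation from expected_rows.
    An empty list means both checks passed.
    """
    issues = []
    age = now - stats.last_loaded_at
    if age > max_staleness:
        issues.append(f"{stats.name}: stale, last load was {age} ago")
    low = expected_rows * (1 - tolerance)
    high = expected_rows * (1 + tolerance)
    if not low <= stats.row_count <= high:
        issues.append(
            f"{stats.name}: row count {stats.row_count} outside expected range "
            f"[{low:.0f}, {high:.0f}]"
        )
    return issues


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    orders = TableStats("orders", now - timedelta(hours=30), 1_200)
    for issue in check_table_health(orders, now, timedelta(hours=24), expected_rows=10_000):
        print(issue)
```

In practice, `expected_rows` would usually be derived from recent history rather than hard-coded, which is where automated anomaly detection takes over from static thresholds.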

The Human Side of Data: Key User Personas and Their Pain Points

Data observability has wide-reaching benefits. Here are some key personas and how each benefits from data observability.

- Data Engineers who are responsible for building and maintaining the data infrastructure and pipelines that feed observability systems. They need comprehensive monitoring of data pipelines to proactively identify and resolve issues before they impact downstream consumers and business processes.

- Data Analysts who specialize in processing and analyzing data to extract insights, relying on high-quality, observable data for accurate reporting. They need confidence that the data they’re analyzing is accurate and up-to-date to provide reliable insights to stakeholders without questioning data quality.

- Data Scientists who use advanced analytics techniques and depend on observable, high-quality data for model training and deployment. They need visibility into data quality and lineage to trust the datasets feeding their models and understand when model performance degrades due to data issues.

- DevOps Engineers who manage the technical infrastructure that supports data observability platforms and ensure system reliability. They need monitoring and alerting systems that help them maintain the infrastructure supporting data operations while ensuring minimal downtime and optimal performance.

- Business Intelligence (BI) Developers who create dashboards and reports that rely on observable, trustworthy data sources. They need data observability tools that integrate with their reporting platforms to deliver accurate dashboards and alert business users when data quality issues might affect their reports.

- Compliance Officers who ensure that data management practices comply with regulatory requirements and benefit from observability into data handling. They need comprehensive data lineage and audit trails down to the field level to demonstrate regulatory compliance and quickly respond to data governance inquiries.

- IT Managers who oversee the technology infrastructure and need visibility into the performance and costs of data observability solutions. They need data observability platforms that provide clear ROI metrics and help optimize data infrastructure costs while maintaining high service levels.

- Chief Data Officers (CDOs) who lead enterprise data strategy and need executive-level visibility into data health across the organization. They need comprehensive metrics on data quality, usage, and business impact to demonstrate the value of their data investments and identify areas for strategic improvement.

Data Observability Use Cases for Modern Organizations

In this section, we’ll feature common use cases for both the business and IT sides of the organization.

6 Common Business Use Cases

This section highlights how data observability directly supports critical business objectives and strategies.

1. Financial Services: Risk Management and Fraud Detection

Enhances risk assessment and fraud prevention by monitoring transaction patterns, data quality, and regulatory compliance metrics in real time.

Examples:

- Banking: Detecting anomalies in transaction volumes and patterns to identify potential fraud, while ensuring compliance with anti-money laundering regulations through comprehensive data lineage tracking.

- Insurance: Monitoring claims data quality and processing times to identify fraudulent patterns and ensure accurate risk assessment models.

- Investment Management: Tracking data freshness and quality in market data feeds to ensure portfolio management decisions are based on accurate, timely information.

2. Healthcare: Patient Safety and Operational Excellence

Enables comprehensive monitoring of patient data quality, medical device outputs, and clinical workflow efficiency to improve patient outcomes and operational performance.

Examples:

- Hospitals: Monitoring electronic health record (EHR) data quality to ensure accurate patient information, medication dosing, and treatment recommendations.

- Pharmaceutical: Tracking clinical trial data integrity and regulatory compliance throughout drug development processes.

- Medical Devices: Ensuring IoT sensor data from patient monitoring equipment maintains accuracy and availability for critical care decisions.

3. Retail and E-commerce: Customer Experience Optimization

Improves customer experience by monitoring customer data, inventory levels, and recommendation engine performance. It’s the business equivalent of having a personal shopper who never sleeps, constantly ensuring your customers get the right products at the right time.

Examples:

- E-commerce Platforms: Monitoring product catalog data quality, inventory accuracy, and recommendation algorithm performance to prevent customer disappointment and lost sales.

- Omnichannel Retail: Ensuring customer data consistency across online, mobile, and in-store touchpoints for seamless shopping experiences.

- Supply Chain: Tracking inventory data quality and supplier performance metrics to optimize stock levels and prevent out-of-stock situations.

4. Manufacturing: Production Optimization and Quality Control

Offers insights into production data quality, equipment performance, and supply chain efficiency to optimize manufacturing processes and ensure product quality.

Examples:

- Automotive: Monitoring sensor data from production lines to ensure quality control standards and predict equipment maintenance needs.

- Aerospace: Tracking component traceability data and quality metrics throughout the manufacturing process to ensure safety and regulatory compliance.

- Consumer Goods: Analyzing production data patterns to optimize batch processing and reduce waste while maintaining quality standards.

5. Telecommunications: Network Performance and Customer Service

Helps organizations manage network performance data, customer usage patterns, and service quality metrics to enhance network reliability and customer satisfaction.

Examples:

- Mobile Networks: Monitoring call quality data, network performance metrics, and customer usage patterns to optimize network capacity and reduce service disruptions.

- Internet Service Providers: Tracking bandwidth utilization, service availability data, and customer satisfaction metrics to improve service delivery.

- Enterprise Communications: Ensuring unified communications data quality and system availability for business continuity.

6. Energy and Utilities: Infrastructure Monitoring and Regulatory Compliance

Analyzes energy consumption data, grid performance metrics, and environmental compliance data to ensure reliable service delivery and regulatory adherence.

Examples:

- Electric Utilities: Monitoring smart meter data quality and grid performance metrics to optimize energy distribution and predict maintenance needs.

- Oil and Gas: Tracking pipeline sensor data and environmental monitoring information to ensure safe operations and regulatory compliance.

- Renewable Energy: Analyzing weather data quality and energy production metrics to optimize renewable energy generation and grid integration.

These use cases demonstrate how data observability has become the backbone of data-driven decision making for organizations across industries. In an era where data is often called “the new oil,” data observability serves as the refinery, turning that raw resource into high-octane business fuel.

8 Technical Use Cases

Ever wonder how business strategies transform into digital reality? This section pulls back the curtain on the technical wizardry of data observability. We’ll explore eight use cases that showcase how data observability technologies turn business visions into actionable insights and competitive advantages.

1. Data Pipeline Monitoring and Automated Recovery

Data observability platforms monitor complex ETL/ELT pipelines and can automatically recover from certain types of failures, providing the computational resilience needed for mission-critical data operations.

Key Features:

- Pipeline health monitoring with automated alerting for job failures, performance degradation, and data quality issues.

- Automated recovery mechanisms for common pipeline failures, including retry logic and fallback data sources.

- Performance optimization recommendations based on historical pipeline execution patterns and resource utilization.
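The retry-and-fallback behavior described above can be sketched in a few lines, assuming each pipeline step can be wrapped as a Python callable. `run_with_recovery` and its parameters are hypothetical; orchestration tools generally provide their own retry configuration.

```python
import time


def run_with_recovery(primary, fallback=None, max_attempts=3, base_delay=1.0,
                      retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Hypothetical wrapper: retry transient failures, then fall back.

    Calls `primary` up to `max_attempts` times, waiting base_delay, 2*base_delay,
    4*base_delay, ... seconds between attempts. Only exceptions in `retry_on`
    are retried. If every attempt fails and `fallback` is given, its result is
    returned; otherwise the last error is re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(max_attempts):
        try:
            return primary()
        except retry_on as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # exponential backoff
    if fallback is not None:
        return fallback()
    raise last_error


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_load():
        # Simulated load step that fails twice before succeeding.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("warehouse unavailable")
        return "loaded from primary source"

    print(run_with_recovery(flaky_load, sleep=lambda seconds: None))
```

The `sleep` parameter is injectable so the backoff can be tested without real waiting. Only errors listed in `retry_on` are retried, so a non-transient failure such as a schema mismatch surfaces immediately instead of being masked by the fallback.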

2. ML Model Performance and Data Drift Detection

Organizations use data observability to monitor machine learning and AI model inputs and outputs, detecting data drift that could degrade model performance over time.

Key Features:

- Automated data drift detection using statistical methods to identify changes in input data distributions.

- Model performance monitoring with tracking of accuracy, precision, recall, and business-specific metrics.

- Feature store observability ensuring consistent data quality for model training and inference pipelines.
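One widely used statistical drift measure is the Population Stability Index (PSI), which compares how a feature’s values are distributed across bins in a baseline sample versus a recent one. The sketch below is a minimal, dependency-free version; the equal-width binning and the `eps` smoothing are simplifying assumptions, and real platforms may use PSI, Kolmogorov-Smirnov tests, or other methods.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample.

    Assumptions for this sketch: bins are equal-width over the baseline's
    range, values outside that range fall into the first or last bin, and
    `eps` replaces empty-bin proportions so the logarithm stays defined.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, though thresholds are usually tuned per feature.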

3. Regulatory Compliance and Data Governance Automation

Many organizations leverage data observability for automated compliance monitoring, ensuring sensitive data handling meets regulatory requirements like GDPR, HIPAA, and SOX. It’s like having a digital compliance officer that never sleeps and catches every data governance issue before auditors even knock on your door.

Key Features:

- Automated compliance reporting with pre-built templates for common regulatory frameworks.

- Data privacy monitoring including PII detection, access tracking, and retention policy enforcement.

- Audit trail generation with comprehensive logging of data access, modifications, and pipeline executions.

- Policy enforcement automation that can block or quarantine data that violates governance rules.
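As an illustration of PII detection feeding policy enforcement, the sketch below scans string fields with two regular expressions and quarantines any record that matches outside an approved column. The patterns, `detect_pii`, and `enforce_policy` are hypothetical and intentionally minimal; pattern matching alone misses many kinds of PII.

```python
import re

# Hypothetical, deliberately simple rule set; real PII detection needs far
# broader coverage (names, addresses, locale-specific IDs) and validation.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def detect_pii(record, allowed_fields=()):
    """Return {field: [pii_types]} for string fields that contain PII.

    Fields in `allowed_fields` (e.g. a column approved to hold emails) are
    skipped, as are non-string values.
    """
    findings = {}
    for field, value in record.items():
        if field in allowed_fields or not isinstance(value, str):
            continue
        hits = [name for name, pattern in PII_PATTERNS.items() if pattern.search(value)]
        if hits:
            findings[field] = hits
    return findings


def enforce_policy(records, allowed_fields=()):
    """Split records into (clean, quarantined) based on PII findings."""
    clean, quarantined = [], []
    for record in records:
        findings = detect_pii(record, allowed_fields)
        if findings:
            quarantined.append({"record": record, "violations": findings})
        else:
            clean.append(record)
    return clean, quarantined
```

Keeping the violation details alongside each quarantined record gives the audit trail something concrete to log.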

4. Cost Optimization and Resource Management

Facilitates intelligent resource allocation and cost optimization by monitoring data processing costs, storage utilization, and compute efficiency across cloud and on-premises infrastructure.

Key Features:

- Resource utilization dashboards that provide visibility into compute, storage, and network costs.

- Automated scaling recommendations based on workload patterns and business requirements.

- Cost anomaly detection that identifies unexpected spikes in data processing expenses.

- Multi-cloud cost optimization with recommendations for workload placement across different cloud providers.
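Cost anomaly detection can start as simply as comparing each day’s spend with a trailing window. This sketch assumes daily cost figures have already been exported from billing data; `cost_anomalies`, the 7-day window, and the z-score threshold of 3 are illustrative choices, not a product’s defaults.

```python
import math
from statistics import mean, stdev


def cost_anomalies(daily_costs, window=7, z_threshold=3.0):
    """Flag days whose cost spikes well above the trailing window.

    For each day after the first `window` days, compare its cost with the
    mean and sample standard deviation of the previous `window` days and
    flag it if the z-score exceeds `z_threshold`. Only increases are
    flagged. Returns a list of (day_index, cost, z_score) tuples.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: treat any increase as a spike (z-score is undefined).
            if daily_costs[i] > mu:
                flagged.append((i, daily_costs[i], math.inf))
            continue
        z = (daily_costs[i] - mu) / sigma
        if z > z_threshold:
            flagged.append((i, daily_costs[i], round(z, 2)))
    return flagged
```

Because a spike stays in the trailing window for the next several days, it inflates the baseline; more robust variants use the median and median absolute deviation, or drop flagged days from the history.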

5. Data Quality Scoring and SLA Management

Organizations implement automated data quality scoring systems that measure data health against predefined service level agreements (SLAs) and business requirements.

Key Features:

- Automated data quality scoring using configurable weights for different quality dimensions.

- SLA tracking and reporting with dashboards showing performance against agreed-upon metrics.

- Proactive alerting when data quality scores fall below acceptable thresholds.

- Historical trend analysis to identify patterns in data quality degradation and improvement.
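Weighted quality scoring amounts to a weighted average of per-dimension scores compared against an SLA threshold. In this sketch, the dimension names, the 0-to-1 scale, and the weights are hypothetical; each organization defines its own dimensions and how they are measured.

```python
def quality_score(dimension_scores, weights):
    """Weighted average of per-dimension scores, each in [0, 1].

    Only dimensions listed in `weights` contribute. A missing measurement
    raises KeyError, so gaps are not silently scored as perfect.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[d] * dimension_scores[d] for d in weights) / total_weight


def check_sla(dataset, dimension_scores, weights, threshold):
    """Return (score, alert_message_or_None) for one dataset."""
    score = quality_score(dimension_scores, weights)
    if score < threshold:
        return score, f"{dataset}: quality score {score:.2f} below SLA threshold {threshold:.2f}"
    return score, None
```

Storing each run’s score per dataset is enough to support the historical trend analysis mentioned above.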

6. Incident Response and Root Cause Analysis

Modern data observability platforms provide automated incident detection, triage, and root cause analysis capabilities that dramatically reduce mean time to resolution (MTTR).

Key Features:

- Intelligent incident correlation that groups related issues and identifies common root causes.

- Automated impact analysis showing which downstream systems and users are affected by data issues.

- Collaborative incident management with integrated communication tools and runbook automation.

- Post-incident analysis with automated report generation and process improvement recommendations.
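One simple way to correlate incidents is to use lineage: if a failing asset has a failing upstream ancestor, it is probably a symptom rather than a cause. The sketch below assumes lineage is available as a mapping from each asset to its direct upstream parents and that the graph is acyclic; `group_incidents` and the asset names are hypothetical.

```python
def upstream_ancestors(node, parents):
    """All transitive upstream dependencies of `node` (iterative DFS)."""
    seen, stack = set(), list(parents.get(node, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents.get(current, ()))
    return seen


def group_incidents(failing, parents):
    """Group failing assets under candidate root causes.

    A failing asset with no failing ancestor is treated as a candidate root
    cause; every other failing asset is attached to the root causes among
    its ancestors. Returns {root_cause: sorted list of affected assets}.
    """
    failing = set(failing)
    roots = {n for n in failing if not (upstream_ancestors(n, parents) & failing)}
    groups = {r: [] for r in roots}
    for n in failing - roots:
        for r in upstream_ancestors(n, parents) & roots:
            groups[r].append(n)
    return {r: sorted(v) for r, v in groups.items()}
```

Real root cause analysis weighs more signals, such as timing, recent changes, and error types, but this upstream-first heuristic can be a useful starting point for collapsing a flood of alerts into a few actionable incidents.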

7. Data Lineage and Impact Analysis

Provides comprehensive data lineage tracking and impact analysis capabilities that help organizations understand data dependencies and assess the potential impact of changes.

Key Features:

- Automated lineage discovery that maps data flow across complex, multi-vendor data ecosystems.

- Column-level lineage tracking showing detailed transformations and dependencies at the field level.

- Change impact analysis that predicts which downstream systems will be affected by upstream changes.

- Business glossary integration connecting technical data lineage with business terminology and definitions.
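Change impact analysis is, at its core, a traversal of the lineage graph downstream from the asset being changed. This minimal breadth-first sketch assumes lineage is stored as a mapping from each node to the nodes that read from it; the same code works for column-level lineage if nodes are `table.column` identifiers. The function and node names are hypothetical.

```python
from collections import deque


def downstream_impact(changed, children):
    """Breadth-first traversal of a lineage graph from a changed asset.

    `children` maps each node to the nodes that read from it. Returns
    {node: hops_from_change} for every node downstream of `changed`.
    """
    impact = {}
    queue = deque([(changed, 0)])
    while queue:
        node, depth = queue.popleft()
        for child in children.get(node, ()):
            if child != changed and child not in impact:
                impact[child] = depth + 1
                queue.append((child, depth + 1))
    return impact
```

Because breadth-first search visits nodes in order of distance, each hop count is the shortest path from the change, which gives a natural order for notifying direct consumers before more distant ones.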

8. Data Quality Monitoring

Enables continuous monitoring of data quality metrics across streaming and batch processing systems, providing visibility into data health as it flows through pipelines.

Key Features:

- Anomaly detection using machine learning algorithms to identify quality issues in streaming data.

- Configurable quality thresholds with business-specific rules for different data types and use cases.

- Integration with data catalogs to automatically update data asset metadata based on quality monitoring results.
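For streaming quality metrics, an online algorithm avoids storing the full history. The sketch below swaps in a simple statistical method, a running z-score maintained with Welford’s algorithm, in place of the machine learning models mentioned above; the class name, threshold, and warm-up length are hypothetical.

```python
import math


class StreamingZScoreMonitor:
    """Online anomaly check for one streaming metric (e.g. null rate per batch).

    Uses Welford's algorithm to keep a running mean and variance in O(1)
    memory. A value is flagged when it lies more than `threshold` standard
    deviations from the mean of earlier accepted values; flagged values are
    not folded into the baseline.
    """

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # need at least 2 points for a sample std
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def _update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def observe(self, x):
        """Return True if x is anomalous; otherwise add it to the baseline."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std == 0:
                anomalous = x != self.mean  # constant baseline: any change stands out
            else:
                anomalous = abs(x - self.mean) / std > self.threshold
            if anomalous:
                return True
        self._update(x)
        return False
```

Because flagged values are excluded from the baseline, a genuine and lasting level shift will keep alerting until the monitor is reset; that is a deliberate trade-off in favor of not quietly normalizing bad data.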

The technical capabilities discussed above show that data observability is not just a nice-to-have; it’s a must-have. As companies increasingly rely on data for decision making and AI model training, a robust data observability framework can be the difference between a good decision and a costly mistake.

Data observability has evolved into an essential foundation for modern business operations. As organizations increasingly rely on data-driven decision making across every aspect of their operations, the cost of unreliable data has become prohibitive. Companies can no longer afford the luxury of reactive data quality management, where issues are discovered only after they impact business outcomes. Data observability provides the proactive monitoring, automated anomaly detection, and comprehensive visibility needed to protect data as a critical business asset. Modern companies that fail to implement robust data observability frameworks risk falling behind competitors who can make faster, more accurate decisions based on trustworthy data.

Adopting data observability becomes even more urgent as organizations embrace artificial intelligence and machine learning. AI models are only as reliable as the data that feeds them, which makes data observability critical for catching data and model drift early, ensuring training data quality, and maintaining consistent AI performance in production environments. As data volumes continue to explode, with global data creation projected to grow from 33 zettabytes in 2018 to 175 zettabytes by 2025, traditional sampling-based monitoring approaches can’t keep up. Organizations need scalable data observability solutions that can monitor 100% of their data without runaway compute costs or performance bottlenecks.

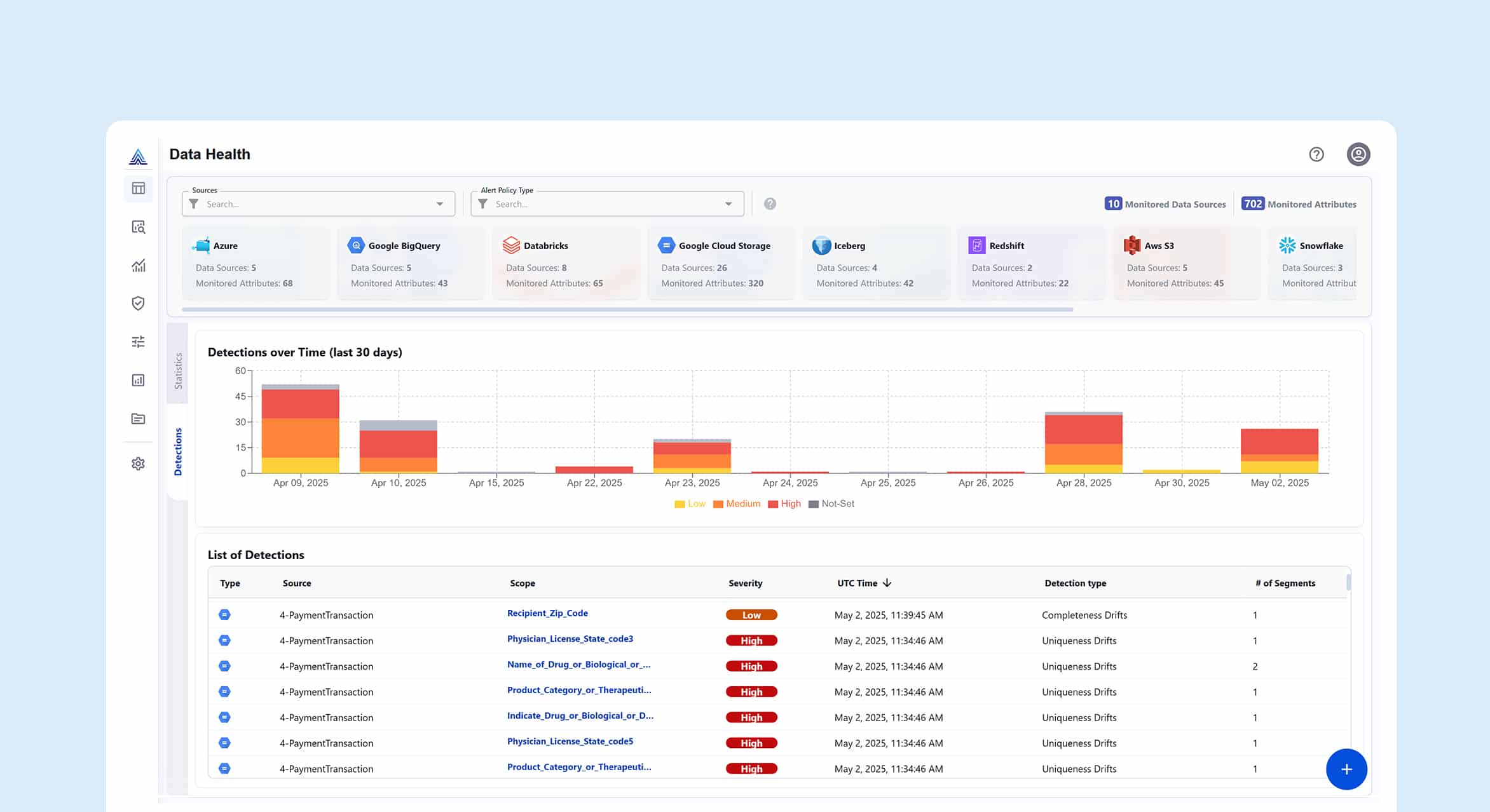

This is where Actian Data Observability shines. It’s engineered to address the operational demands of modern data environments, offering continuous monitoring across the full data lifecycle. It provides granular visibility into pipeline health, schema evolution, data quality metrics, and infrastructure performance across hybrid and multi-cloud architectures, while keeping your data secure by accessing metadata directly where it resides. With built-in anomaly detection, automatic lineage tracing, and root cause diagnostics, Actian enables data teams to detect and resolve issues before they propagate, reducing downtime and minimizing downstream impact. Its scalable architecture is designed to handle high-volume workloads without excessive compute overhead, making it ideal for AI- and analytics-driven organizations that require complete data reliability at enterprise scale.

Experience Actian Data Observability for yourself with a custom demo.