Choose a data discovery tool that matches your business needs, integrates with existing systems, scales securely, and embeds governance to turn scattered data into actionable insights.

Understand Your Business Data Challenges

Map your organization’s specific data obstacles and strategic goals before evaluating tools, so that the tool you select solves real problems rather than simply offering a generic feature list. Common issues include data fragmented across systems, weak governance, heavy reliance on technical staff for analytics, and regulatory compliance gaps.

Account for sector-specific needs: financial services require strict audit trails and privacy controls; life sciences demand detailed lineage for submissions; manufacturing must integrate operational technology with business data while preserving quality across global operations.

Prioritize capabilities that directly address documented pain points: automated policy enforcement for compliance gaps, federation and connectors for data silos, and intuitive self-service for adoption. Involve stakeholders from IT, business units, compliance, and executive leadership to align technical requirements with business objectives and regulatory mandates.

Identify Essential Features of a Data Discovery Tool

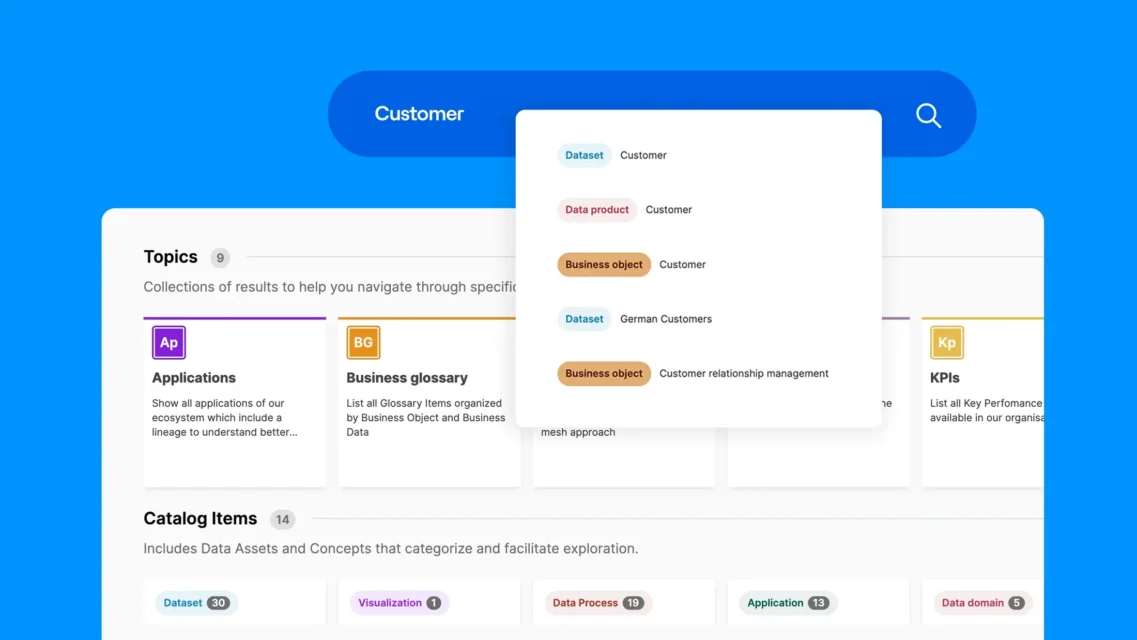

Focus on features that deliver measurable value. Multi-dimensional, intelligent search should surface relevant assets even when queries are vague. Automated classification using machine learning reduces manual effort, applies security labels consistently, and suggests governance actions.

Robust metadata management and knowledge graph technology improve searchability, context, lineage, and relationship discovery. Visualization must serve both business and technical users—drag-and-drop for business users and customizable dashboards for analysts.

Context enrichment, self-service analytics, and clear governance boundaries let users explore and create without constant IT support while protecting sensitive data. Ensure the platform supports varied data types and presents business meaning for technical elements.

Evaluate Integration With Existing Data Ecosystem

A discovery tool must integrate with your current investments—quality systems, security frameworks, analytics, and cloud infrastructure—without introducing duplication or extra complexity. Favor platforms that enhance existing workflows and minimize data movement.

Check for comprehensive APIs, webhook support, and pre-built connectors to enable automated workflows and real-time synchronization. Ensure hybrid and multi-cloud federation so data can remain in place, reducing storage costs, preserving freshness, and simplifying compliance.

Verify support for structured, unstructured, and streaming formats to avoid blind spots. Confirm the tool inherits and enforces your security policies, role-based controls, and audit requirements to prevent gaps and administrative overhead.

Prioritize Automated Data Governance and Compliance

Governance must be embedded and automated. Leading platforms apply policies, security classifications, and lineage tracking across assets to lower manual workload and regulatory risk.

Automated policy enforcement should apply classifications, access controls, and usage rules based on content and context. Comprehensive audit trails and detailed lineage are essential for reporting, forensics, impact analysis, and data quality work.
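As a rough illustration of how lineage supports impact analysis, the sketch below walks a small lineage graph to list everything downstream of a changed source. The asset names and the `LINEAGE` structure are hypothetical; a real platform would expose lineage through its own metadata API.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed as its value.
LINEAGE = {
    "crm.customers": ["staging.customers_clean"],
    "staging.customers_clean": ["marts.customer_360", "marts.churn_features"],
    "marts.customer_360": ["dashboards.executive_kpis"],
    "marts.churn_features": [],
    "dashboards.executive_kpis": [],
}


def downstream_assets(lineage, source):
    """Return every asset reachable from `source`, in breadth-first order."""
    seen = {source}  # guards against cycles and excludes the source itself
    order = []
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Which assets are affected if crm.customers changes?
impacted = downstream_assets(LINEAGE, "crm.customers")
```

Breadth-first order lists the closest dependents first, which is usually the order in which owners need to be notified.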

Use ML-driven sensitive-data detection to identify PII, financial, and regulated content at scale. Federated knowledge graphs, such as those offered by Actian’s platform, enable centralized governance while keeping data in place, supporting data sovereignty and minimizing movement.
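To make the idea of automated sensitive-data detection concrete, here is a deliberately simple stand-in that uses regular expressions rather than machine learning. The pattern set, the labels, and the 80% threshold are assumptions chosen for illustration; production detectors combine many more signals.

```python
import re

# Illustrative patterns only; real detectors cover far more formats.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
}


def classify_column(values, threshold=0.8):
    """Return labels whose pattern fully matches at least `threshold`
    of the non-empty sampled values."""
    non_empty = [v.strip() for v in values if v and v.strip()]
    if not non_empty:
        return []
    labels = []
    for label, pattern in PATTERNS.items():
        hits = sum(1 for v in non_empty if pattern.fullmatch(v))
        if hits / len(non_empty) >= threshold:
            labels.append(label)
    return labels


# Classify a sampled column of values.
labels = classify_column(["ana@example.com", "li@example.org", ""])
```

Sampling a column and applying a threshold, instead of requiring every value to match, keeps a few dirty rows from hiding a sensitive field.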

Empower Users With Self-Service Data Access

Self-service enables authorized users to explore and analyze data without IT bottlenecks while preserving governance. Marketplace-style discovery interfaces, business-term search, and clear data quality and lineage indicators lower technical barriers and boost adoption.

Enforce role-based access so users see only the data they are authorized to view, and log every access for audit purposes. Support multiple search paradigms, with natural language for business users and SQL or metadata filters for technical users, while ensuring both return consistent results.
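A minimal sketch of role-filtered search with access logging might look like the following. The `Asset` and `Catalog` classes, the role names, and the log fields are all hypothetical and do not reflect any specific vendor's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Asset:
    name: str
    allowed_roles: frozenset  # roles permitted to see this asset


@dataclass
class Catalog:
    assets: list
    access_log: list = field(default_factory=list)

    def search(self, user, roles, term):
        """Return names of matching assets visible to the user's roles,
        and record the query in the access log."""
        visible = [
            asset.name
            for asset in self.assets
            if term.lower() in asset.name.lower()
            and asset.allowed_roles & set(roles)
        ]
        self.access_log.append({
            "user": user,
            "term": term,
            "returned": visible,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return visible


catalog = Catalog(assets=[
    Asset("sales_orders", frozenset({"analyst", "finance"})),
    Asset("sales_payroll", frozenset({"hr"})),
])
results = catalog.search("dana", ["analyst"], "sales")
```

Filtering before results are returned, and logging what was actually shown, means the audit trail reflects real exposure instead of raw query text.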

Collaboration features—comments, annotations, ratings, and usage analytics—build institutional knowledge, reduce duplicate efforts, and improve data quality over time.

Review Vendor Support and Documentation

Vendor support and documentation affect deployment speed and operational success. Look for automated metadata extraction that discovers and catalogs sources, suggests classifications, and reduces manual configuration.

Technical documentation should include installation, integration, APIs, code samples, and troubleshooting; user documentation should be role-based and practical. Align support SLAs to your operational needs—response times, escalation paths, and access to specialized expertise matter.

Active user communities, marketplaces, training, and certifications indicate vendor commitment to platform evolution and customer success.

Plan for Scalability and Future Growth

Choose a platform that scales with data volumes, users, and evolving use cases like AI/ML. Automated scaling and robust architecture minimize performance issues and administrative burden.

Centralized policy management with hierarchical delegation maintains enterprise consistency while allowing business-unit autonomy. Knowledge graph architectures support complex relationships and advanced analytics requirements.

Consider vendor recognition and analyst ratings as validation—Actian’s 83% (A-) rating in Product Experience for manageability is an example of demonstrated capability. Confirm the vendor roadmap includes AI/ML integration, data science workflow support, and multi-cloud deployments to avoid lock-in.

Implement a Decision Framework for Tool Selection

Use a structured framework to keep selection objective and aligned with strategy. Start with comprehensive requirements gathering from IT, business units, compliance, and executives.

Map features to requirements, validate use cases with proofs of concept, and assess vendor stability, references, and partnerships. Build a weighted decision matrix covering features, integration, governance, user experience, TCO, and support quality.
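The weighted decision matrix can be sketched in a few lines. The weights, the 1 to 5 scoring scale, and the vendor scores below are illustrative assumptions, not recommendations; set them from your own requirements workshop.

```python
# Illustrative weights for the six criteria named above; they must sum to 1.0.
WEIGHTS = {
    "features": 0.25,
    "integration": 0.20,
    "governance": 0.20,
    "user_experience": 0.15,
    "tco": 0.10,
    "support": 0.10,
}

# Hypothetical scores on a 1 (poor) to 5 (excellent) scale.
CANDIDATES = {
    "Vendor A": {"features": 4, "integration": 5, "governance": 3,
                 "user_experience": 4, "tco": 3, "support": 4},
    "Vendor B": {"features": 5, "integration": 3, "governance": 4,
                 "user_experience": 3, "tco": 4, "support": 3},
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted sum of criterion scores."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = sorted(set(weights) - set(scores))
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_vendors(candidates, weights=WEIGHTS):
    """Return (vendor, score) pairs sorted from highest to lowest score."""
    scored = [(name, round(weighted_score(s, weights), 2))
              for name, s in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


ranking = rank_vendors(CANDIDATES)
```

Fixing the weights before scoring any vendor helps keep the matrix objective, since adjusting weights after seeing the scores tends to rationalize a preferred outcome.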

Include implementation planning, success metrics, change management, and pilot programs to prove value before full-scale rollout. Industry endorsements such as Actian’s ISG Leader rating and Data Breakthrough Award recognition can supplement your evaluation.

Request a demo to explore how Actian Data Intelligence Platform meets your specific needs.

FAQ

Essential features are automated classification, advanced metadata management, user-friendly search for varied skill levels, role-based access controls, visualization and self-service analytics, and integration capabilities that align with your infrastructure.

Calculate the total of license fees, integration effort, training, and governance expenses, then compare against projected time-to-insight savings. Include hidden costs like custom connector development and professional services. Most organizations see ROI within 6-12 months.
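The arithmetic can be sketched as below. Every figure in the usage example is a made-up placeholder, and the function and parameter names are assumptions for illustration; substitute your own estimates.

```python
import math


def total_cost(license_fees, integration, training, governance, hidden=0.0):
    """Sum the first-year cost components, including hidden costs such as
    custom connector development and professional services."""
    return license_fees + integration + training + governance + hidden


def payback_months(upfront_cost, monthly_savings, monthly_running_cost=0.0):
    """Return the number of whole months until savings cover the upfront
    cost, or None if net monthly savings are not positive."""
    net = monthly_savings - monthly_running_cost
    if net <= 0:
        return None
    return math.ceil(upfront_cost / net)


# Placeholder figures for illustration only.
cost = total_cost(license_fees=120_000, integration=40_000,
                  training=15_000, governance=10_000, hidden=15_000)
months = payback_months(cost, monthly_savings=25_000)
```

Returning `None` when net savings are zero or negative makes it explicit that the investment never pays back under those assumptions, instead of producing a misleading number.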

Define data contracts using your platform’s API, establish automated schema validation, and configure quality rule synchronization. Actian’s API enables integration with popular CI/CD tools so that contract updates deploy automatically alongside code changes.
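As a generic illustration of automated schema validation against a data contract, consider the sketch below. It does not use Actian’s API or any vendor’s contract format; the `CONTRACT` layout and column names are hypothetical, and a check like this could run as a CI step before deployment.

```python
# Hypothetical contract: column name mapped to the expected Python type.
CONTRACT = {
    "order_id": int,
    "customer_email": str,
    "amount": float,
}


def validate_record(record, contract=CONTRACT):
    """Return a list of violations; an empty list means the record conforms."""
    violations = []
    for column, expected in contract.items():
        if column not in record:
            violations.append(f"missing column: {column}")
        elif not isinstance(record[column], expected):
            actual = type(record[column]).__name__
            violations.append(
                f"{column}: expected {expected.__name__}, got {actual}"
            )
    for column in record:
        if column not in contract:
            violations.append(f"unexpected column: {column}")
    return violations


# A record with a wrong type and a missing column yields two violations.
problems = validate_record({"order_id": "1001", "amount": 19.99})
```

Collecting all violations in one pass, instead of failing on the first, gives data producers a full list to fix in a single round trip.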

Choose a platform with built-in audit logs, role-based access controls, and automated data masking. Verify compliance certifications and configure automated policy enforcement to prevent unauthorized access.

Use a platform, such as Actian, that offers no-code connector builders or extensible SDKs for custom integrations. Modern platforms often provide REST APIs and standardized frameworks for connecting legacy systems.

Most enterprises see measurable ROI within 6-12 months, driven by faster onboarding and reduced data quality issues. Organizations with strong change management may see benefits within 3-6 months.

Critical capabilities include comprehensive APIs, webhooks, pre-built connectors, hybrid and multi-cloud federation, and support for diverse data formats to enable real-time workflows and preserve existing investments.

Yes. A successful pilot should create a single data product with enforced contracts, validate search usability, and demonstrate automated quality monitoring. Choose a domain with active data consumers and clear success metrics.

A federated knowledge graph links metadata across sources in real time, enabling AI-driven semantic search without manual curation. Unlike traditional catalogs, federated graphs maintain relationships between distributed datasets while respecting security boundaries.