Tackling Complex Data Governance Challenges in the Banking Industry

Actian Corporation

June 19, 2025

The banking industry is one of the most heavily regulated sectors, and as financial services evolve, the work of managing and governing vast amounts of information, and of keeping it compliant, has grown significantly harder. With stringent new regulations, rising data privacy concerns, and growing customer expectations for seamless service, banks face complex data governance challenges. These include managing large volumes of sensitive data, maintaining data integrity, complying with regulatory frameworks, and improving data transparency for both internal and external stakeholders.

In this article, we explore the core data governance challenges faced by the banking industry and how the Actian Data Intelligence Platform helps banking organizations navigate these challenges. From ensuring compliance with financial regulations to improving data transparency and integrity, the platform offers a comprehensive solution to help banks unlock the true value of their data while maintaining robust governance practices.

The Data Governance Landscape in Banking

The financial services sector generates and manages massive volumes of data daily, spanning customer accounts, transactions, risk assessments, compliance checks, and much more. Managing this data effectively and securely is vital to ensure the smooth operation of financial institutions and to meet regulatory and compliance requirements. Financial institutions must implement robust data governance to ensure data quality, security, integrity, and transparency.

At the same time, banks must balance regulatory requirements, operational efficiency, and customer satisfaction. This requires implementing systems that can handle increasing amounts of data while maintaining compliance with local and international regulations, such as GDPR, CCPA, Basel III, and MiFID II.

Key Data Governance Challenges in the Banking Industry

Below are some of the most common data governance challenges facing banking organizations.

Data Privacy and Protection

With the rise of data breaches and increasing concerns about consumer privacy, banks are under immense pressure to safeguard sensitive customer information. Regulations such as the General Data Protection Regulation (GDPR) in the EU and the California Consumer Privacy Act (CCPA) have made data protection a top priority for financial institutions. Storing, accessing, and sharing data appropriately is essential for compliance, and just as essential for maintaining public trust.

Regulatory Compliance

Banks operate in a highly regulated environment, where compliance with numerous financial regulations is mandatory. Financial regulations are continuously evolving, and keeping up with changes in the law requires financial institutions to adopt efficient data governance practices that allow them to demonstrate compliance.

For example, Basel III outlines requirements for the management of banking risk and capital adequacy, while MiFID II requires detailed reporting on market activities and transaction records. In this landscape, managing compliance through data governance is no small feat.

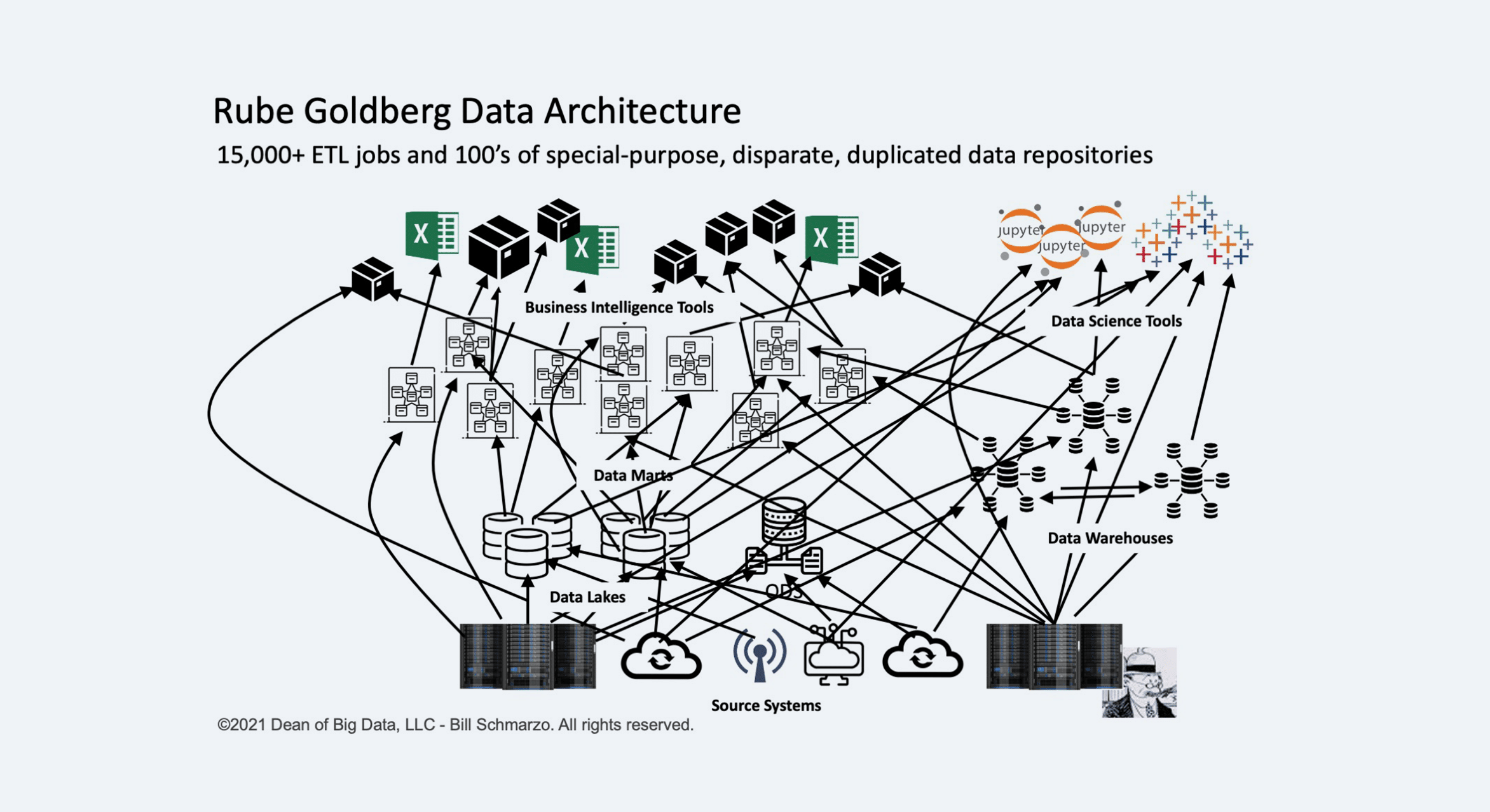

Data Silos and Fragmentation

Many financial institutions operate in a fragmented environment, where data is stored across multiple systems, databases, and departments. This lack of integration can make it difficult to access and track data effectively. For banks, this fragmentation into data silos complicates the management of data governance processes, especially when it comes to ensuring data accuracy, consistency, and completeness.

Data Transparency and Integrity

Ensuring the integrity and transparency of data is a major concern in the banking industry. Banks need to be able to trace the origins of data, understand how it’s been used and modified, and provide visibility into its lifecycle. This is particularly important for audits, regulatory reporting, and risk management processes.

Operational Efficiency

As financial institutions grow and take on more data, keeping data management efficient becomes harder. Ensuring regulatory compliance, conducting audits, and reporting on data use can quickly become burdensome without the right data governance tools in place. Manual processes are prone to errors and inefficiencies, which can have costly consequences for banks.

How the Actian Data Intelligence Platform Tackles Data Governance Challenges in the Banking Industry

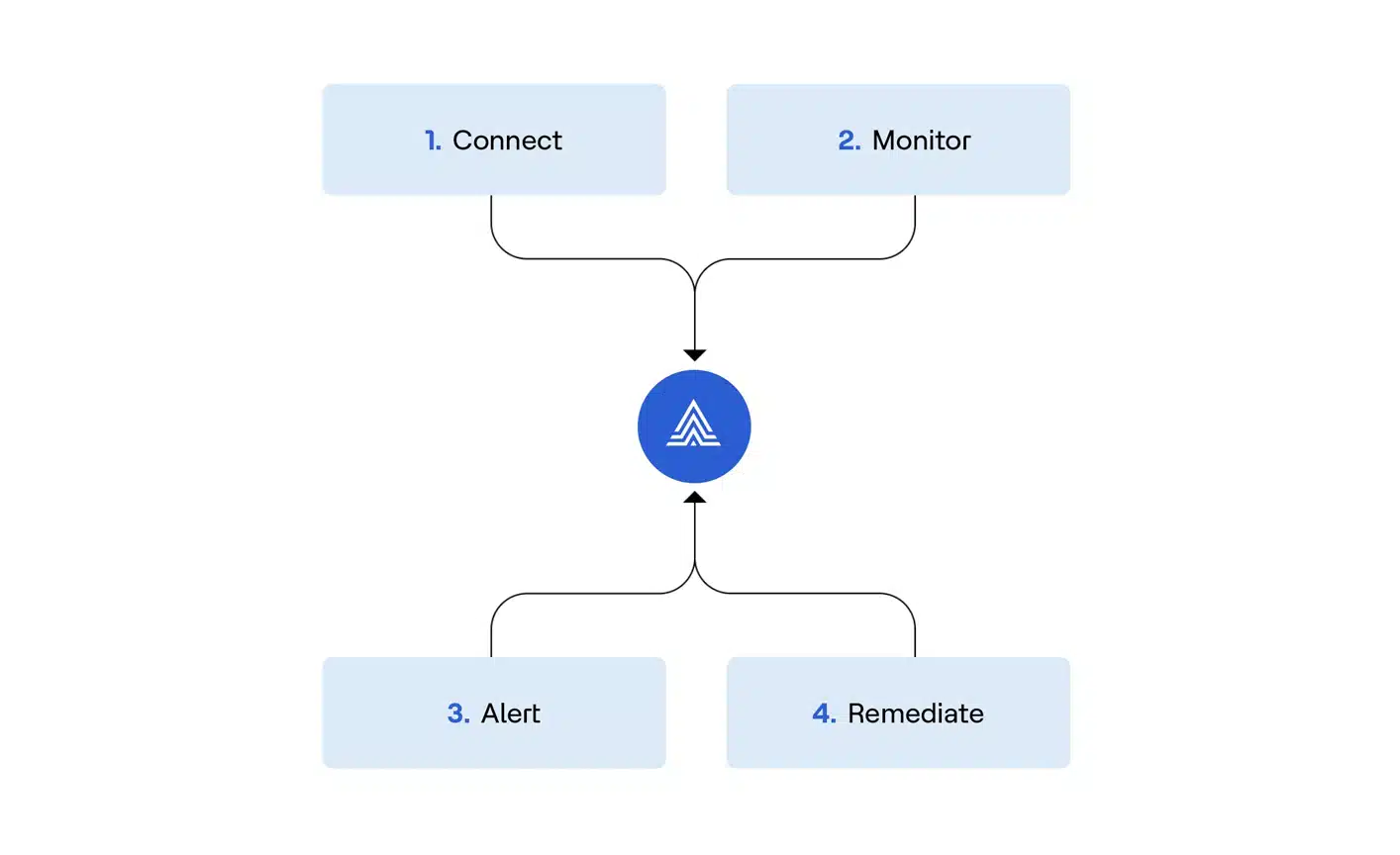

The Actian Data Intelligence Platform is designed to help organizations tackle the most complex data governance challenges. With its comprehensive set of tools, the platform supports banks by helping ensure compliance with regulatory requirements, improving data transparency and integrity, and creating a more efficient and organized data governance strategy.

Here’s how the Actian Data Intelligence Platform helps the banking industry overcome its data governance challenges.

1. Ensuring Compliance With Financial Regulations

The Actian Data Intelligence Platform helps banks achieve regulatory compliance by automating compliance monitoring, data classification, and metadata management.

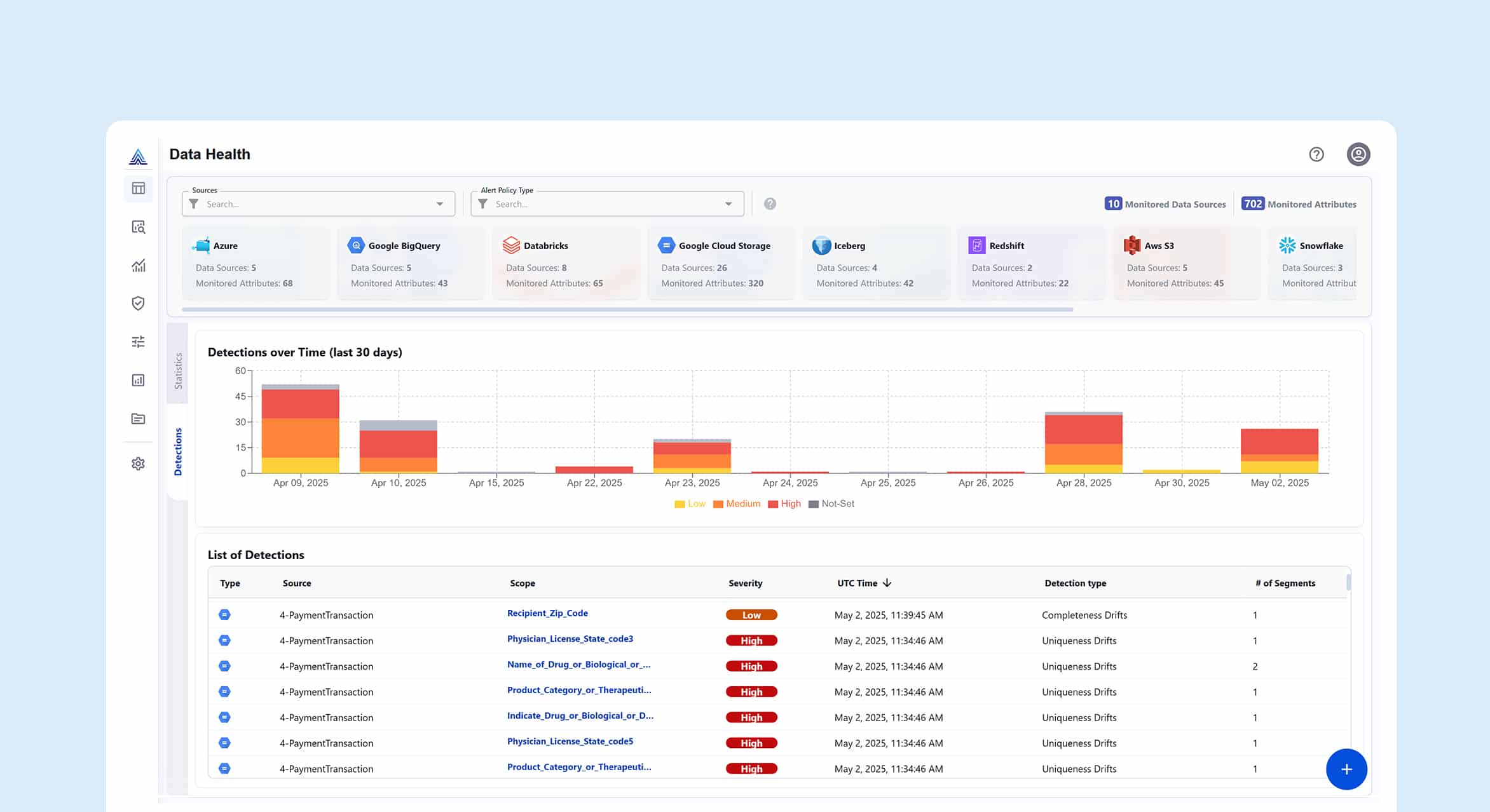

- Regulatory Compliance Automation: The Actian Data Intelligence Platform enables banks to automate compliance tracking and continuously monitor data access and usage. This helps banks consistently meet the requirements of regulatory frameworks like GDPR, Basel III, MiFID II, and others. The platform’s compliance monitoring tools also automatically flag any access to, or change in, data that may violate compliance rules, giving banks the ability to react quickly and mitigate risks.

- Data Classification for Compliance: The Actian Data Intelligence Platform allows banks to classify and categorize data based on its sensitivity and compliance requirements. By tagging data with relevant metadata, such as classification labels (e.g., personal data, sensitive data, financial data), the platform ensures that sensitive information is handled in accordance with regulatory standards.

- Audit Trails and Reporting: The Actian Data Intelligence Platform’s audit trail functionality creates comprehensive logs of data access, usage, and modifications. These logs are crucial for financial institutions when preparing for audits or responding to regulatory inquiries. The Actian Data Intelligence Platform automates the creation of compliance reports, making it easier for banks to demonstrate their adherence to regulatory standards.
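To make the classification idea more concrete, here is a minimal Python sketch of rule-based tagging, in which column names are matched against patterns and given sensitivity labels. This is not the Actian Data Intelligence Platform’s API: the labels, the regular expressions, and the `classify_columns` function are hypothetical, chosen only to illustrate the general technique.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical classification rules: label -> regex matched against column names.
# These patterns are illustrative assumptions, not a regulatory standard.
RULES = {
    "personal_data": re.compile(r"(name|email|phone|address|birth)", re.IGNORECASE),
    "financial_data": re.compile(r"(iban|account|balance|amount|card)", re.IGNORECASE),
}


@dataclass
class ColumnTag:
    table: str
    column: str
    labels: list[str]


def classify_columns(schema: dict[str, list[str]]) -> list[ColumnTag]:
    """Attach every matching label to each column of each table.

    Columns that match no rule get an empty label list, so a reviewer
    can spot data that still needs manual classification.
    """
    tags = []
    for table, columns in schema.items():
        for column in columns:
            labels = [label for label, pattern in RULES.items() if pattern.search(column)]
            tags.append(ColumnTag(table, column, labels))
    return tags


schema = {
    "customers": ["customer_id", "full_name", "email"],
    "payments": ["payment_id", "iban", "amount"],
}
for tag in classify_columns(schema):
    print(tag.table, tag.column, tag.labels)
```

Name-based rules are only a starting point; a real classification process would typically also inspect data values and rely on data stewards to confirm the labels.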

2. Improving Data Transparency and Integrity

Data transparency and integrity are critical for financial institutions, particularly when it comes to meeting regulatory requirements for reporting and audit purposes. The Actian Data Intelligence Platform offers tools that ensure data is accurately tracked and fully transparent, which helps improve data governance practices within the bank.

- Data Lineage: The Actian Data Intelligence Platform’s data lineage functionality provides a visual map of how data flows through the bank’s systems, helping stakeholders understand where the data originated, how it has been transformed, and where it is currently stored. This is essential for transparency, especially when it comes to auditing and compliance reporting.

- Metadata Management: The Actian Data Intelligence Platform’s metadata management capabilities enable banks to organize, track, and maintain metadata for all data assets across the organization. This not only improves transparency but also ensures that data is properly classified and described, reducing the risk of errors and inconsistencies. With clear metadata, banks can ensure that data is correctly used and maintained across systems.

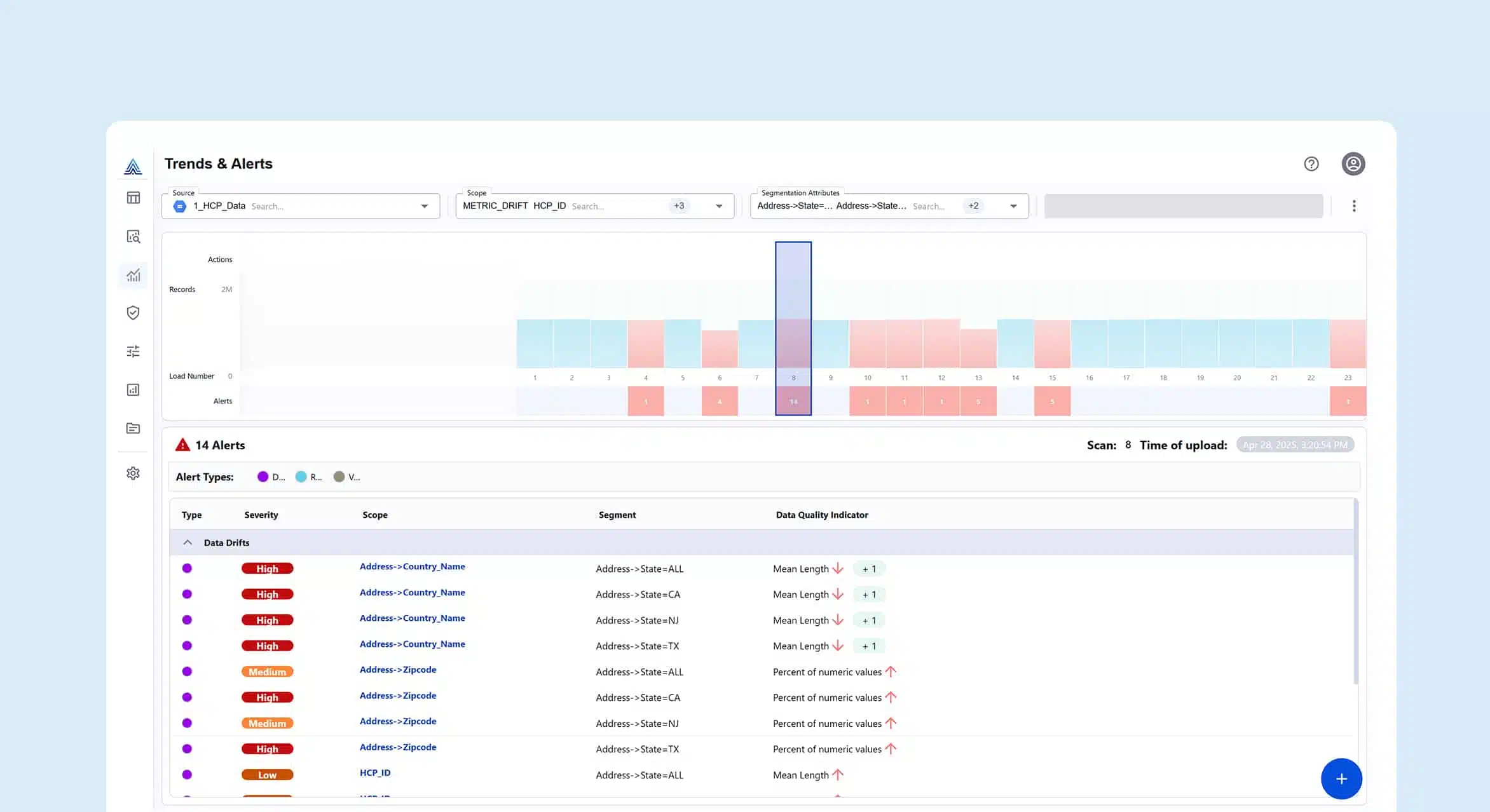

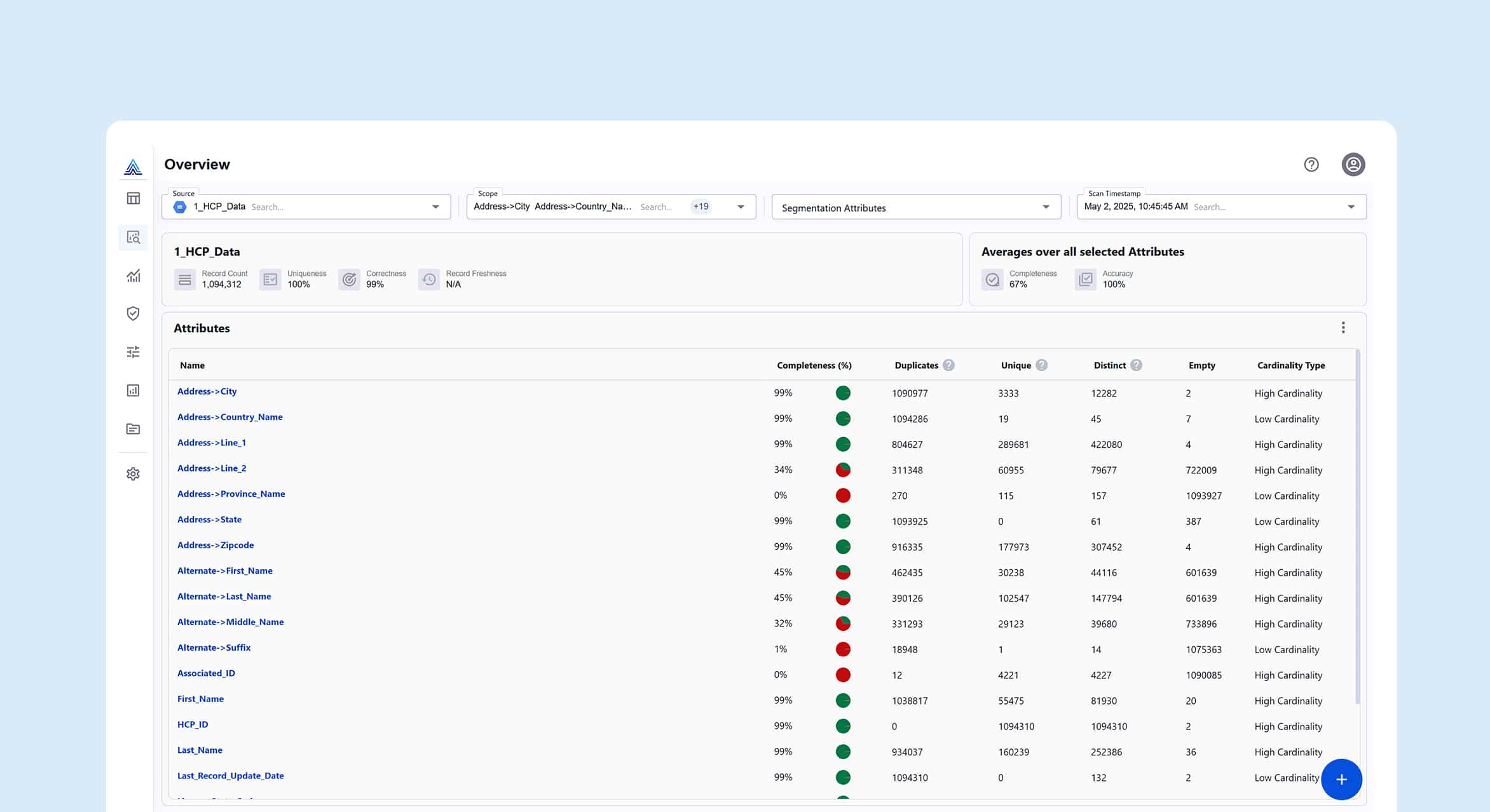

- Data Quality Monitoring: The Actian Data Intelligence Platform continuously monitors data quality, ensuring that data remains accurate, complete, and consistent across systems. Data integrity is crucial for banks, as decisions made based on poor-quality data can lead to financial losses, reputational damage, and non-compliance.
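Data lineage is commonly modeled as a directed graph in which each dataset points to the datasets it was derived from. The following minimal Python sketch traces every upstream source of a report with a breadth-first search. The dataset names and the `upstream_sources` function are hypothetical and do not reflect how the Actian Data Intelligence Platform stores or exposes lineage.

```python
from collections import deque

# Hypothetical lineage edges: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "regulatory_report": ["risk_aggregates"],
    "risk_aggregates": ["loans_clean", "market_prices"],
    "loans_clean": ["loans_raw"],
    "market_prices": [],
    "loans_raw": [],
}


def upstream_sources(dataset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Return every dataset that `dataset` depends on, directly or transitively,
    in breadth-first order (nearest dependencies first).

    The `seen` set guards against cycles in the lineage graph.
    """
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(lineage.get(dataset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        order.append(current)
        queue.extend(lineage.get(current, []))
    return order


print(upstream_sources("regulatory_report", LINEAGE))
# ['risk_aggregates', 'loans_clean', 'market_prices', 'loans_raw']
```

An auditor asking where a reported figure came from is essentially asking for this traversal; reversing the edges answers the opposite question of which reports a change to a raw table would affect.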

3. Eliminating Data Silos and Improving Data Integration

Fragmented data and siloed systems are a common challenge for financial institutions. Data often resides in disparate databases or platforms across different departments, making it difficult to access and track efficiently. The platform provides the tools to integrate data governance processes and eliminate silos.

- Centralized Data Catalog: The Actian Data Intelligence Platform offers a centralized data catalog that enables banks to inventory and organize all their data assets in a single platform. This centralized repository improves the discoverability of data across departments and systems, helping banks streamline data access and reduce inefficiencies.

- Cross-Department Collaboration: With the Actian Data Intelligence Platform, departments across the organization can collaborate on data governance. By centralizing governance policies, data access, and metadata, the platform encourages communication between data owners, stewards, and compliance officers to ensure that data governance practices are consistent across the institution.

4. Enhancing Operational Efficiency

Manual processes in data governance can be time-consuming and prone to errors, making it challenging for banks to keep pace with the growing volumes of data and increasing regulatory demands. The Actian Data Intelligence Platform automates and streamlines key aspects of data governance, allowing banks to work more efficiently and focus on higher-value tasks.

- Automation of Compliance Monitoring: The Actian Data Intelligence Platform automates compliance checks, data audits, and reporting, which reduces the manual workload for compliance teams. Automated alerts and reports help banks quickly identify potential non-compliance issues and rectify them before they escalate.

- Workflow Automation: The Actian Data Intelligence Platform enables banks to automate workflows around data governance processes, including data classification, metadata updates, and access management. By streamlining these workflows, the platform ensures that banks can efficiently manage their data governance tasks without relying on manual intervention.

- Data Access Control: The Actian Data Intelligence Platform helps banks define and enforce fine-grained access controls for sensitive data. With the platform’s robust access control mechanisms, banks can ensure that only authorized personnel can access specific data, reducing the risk of data misuse and enhancing operational security.
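One common way to make access control fine-grained is to tie permissions to the classification labels on each data asset rather than to individual tables. The Python sketch below shows a deny-by-default check of that kind. The roles, labels, and the `can_read` function are hypothetical examples, not Actian configuration or API.

```python
# Hypothetical policy: which data classification labels each role may read.
# Role and label names are illustrative assumptions, not Actian configuration.
POLICY = {
    "compliance_officer": {"personal_data", "financial_data"},
    "risk_analyst": {"financial_data"},
    "marketing": set(),
}


def can_read(role: str, asset_labels: set[str]) -> bool:
    """Deny by default: unknown roles get no access, and a known role may read
    an asset only if it is cleared for every label on that asset.

    Assets with no sensitive labels are readable by any known role.
    """
    allowed = POLICY.get(role)
    if allowed is None:
        return False
    return asset_labels <= allowed  # subset check: every label must be permitted


print(can_read("risk_analyst", {"financial_data"}))
print(can_read("risk_analyst", {"personal_data", "financial_data"}))
```

Because the check reads the same labels that classification assigns, reclassifying a dataset automatically changes who can read it, without editing per-table permissions.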

The Actian Data Intelligence Platform and the Banking Industry: A Perfect Partnership

The banking industry faces a range of complex data governance challenges. To navigate them, banks need robust data governance frameworks and powerful tools to help manage their vast data assets.

The Actian Data Intelligence Platform offers a comprehensive data governance solution that helps financial institutions tackle these challenges head-on. By providing automated compliance monitoring, metadata tracking, data lineage, and a centralized data catalog, the platform ensures that banks can meet regulatory requirements while improving operational efficiency, data transparency, and data integrity.

Actian offers an online product tour of the Actian Data Intelligence Platform as well as personalized demos of how the data intelligence platform can transform and enhance financial institutions’ data strategies.
