Life in an Enterprise Data Team: Before and After Data Intelligence

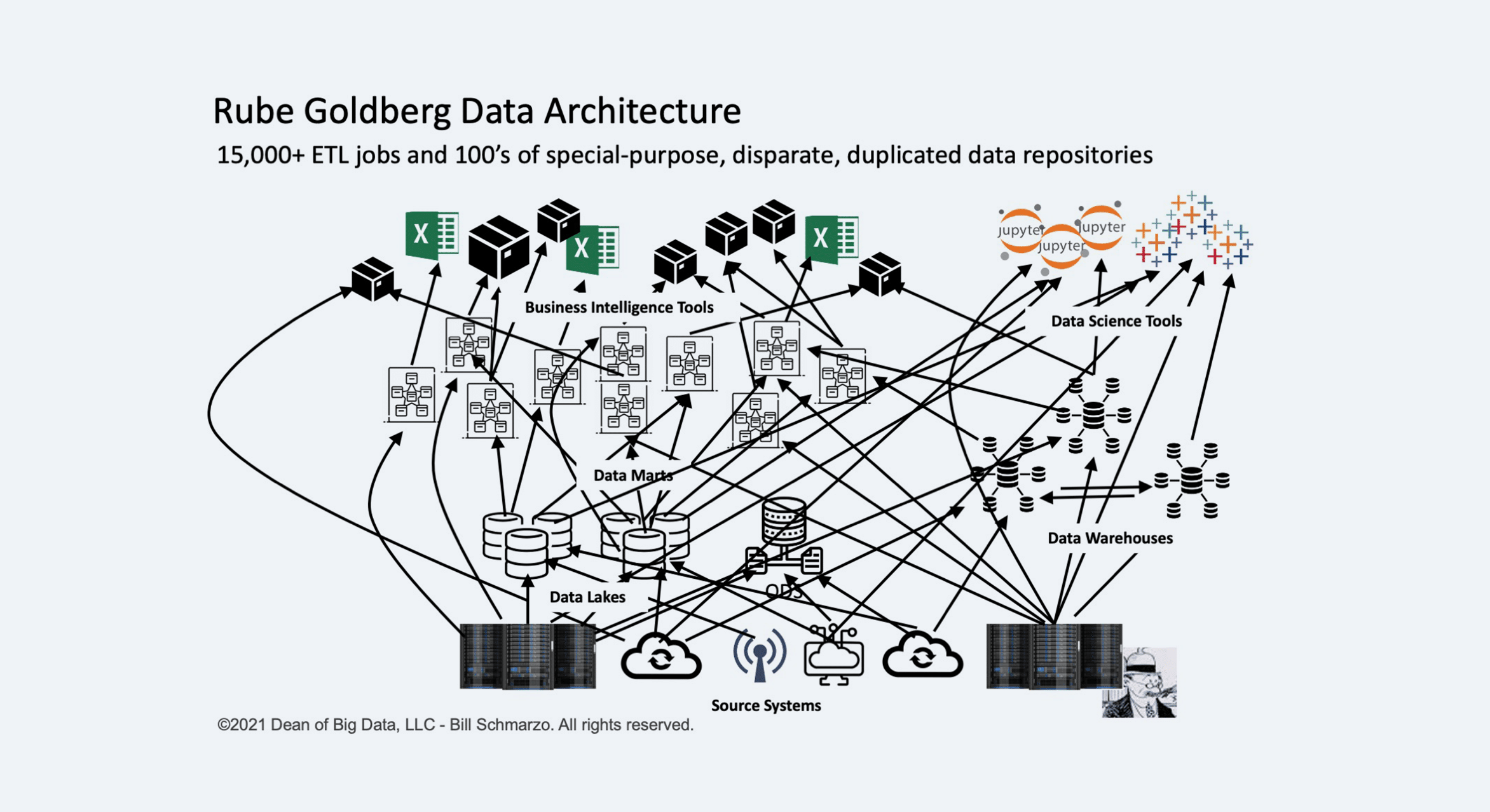

In the world of enterprise data management, there’s perhaps no image more viscerally recognizable to data professionals than the infamous “Rube Goldberg Data Architecture” diagram. With its tangled web of arrows connecting disparate systems, duplicate data repositories, and countless ETL jobs, it perfectly captures the reality many organizations face today: data chaos.

Life Before a Data Catalog

Imagine starting your Monday morning with an urgent request: “We need to understand how customer churn relates to support ticket resolution times.” Simple enough, right?

Without a data catalog or metadata management solution, your reality looks something like this:

The Dig

You start by asking colleagues which data sources might contain the information you need. Each person points you in a different direction. “Check the CRM system,” says one. “I think that’s in the marketing data lake,” says another. “No, we have a special warehouse for customer experience metrics,” chimes in a third.

The Chase

Hours are spent exploring various systems. You discover three different customer tables across separate data warehouses, each with slightly different definitions of what constitutes a “customer.” Which one is the source of truth? Nobody seems to know.

The Trust Crisis

After cobbling together data from multiple sources, you present your findings to stakeholders. Immediately, questions arise: “Are you sure this data is current?” “How do we know these calculations are consistent with the quarterly reports?” “Which department owns this metric?” Without clear lineage, a business glossary, or governance, confidence in your analysis plummets.

The Redundancy Trap

A week later, you discover that a colleague in another department conducted an almost identical analysis last month. Their results differ slightly from yours because they used a different data source. Both of you duplicated effort, and now the organization has conflicting insights.

This scenario reflects what MIT Technology Review described in its article “Evolution of Intelligent Data Pipelines”: complex data environments with “thousands of data sources, feeding tens of thousands of ETL jobs.” The result is what Bill Schmarzo aptly illustrated: a Rube Goldberg machine of data processes that is inefficient and unreliable, and that ultimately undermines the strategic value of your data assets.

Enter the Data Catalog

Now, let’s reimagine the same scenario with a data intelligence solution like Actian in place.

Knowledge Graph-Powered Discovery in Minutes, Not Days

That Monday morning request now begins with an intelligent search in your data catalog. Leveraging knowledge graph technology, the system understands semantic relationships between data assets and business concepts. Within moments, you’ve identified the authoritative customer data source and the precise metrics for support ticket resolution times. The search not only finds exact matches but understands related concepts, synonyms, and contextual meanings, surfacing relevant data you might not have known to look for.

Federated Catalogs With a Unified Business Glossary

Though data resides in multiple systems across your organization, the federated catalog presents a unified view. Every term has a clear definition in the business glossary, ensuring “customer” means the same thing across departments. This shared vocabulary eliminates confusion and creates a common language between technical and business teams, bridging the perennial gap between IT and business users.

Comprehensive Lineage and Context

Before running any analysis, you can trace the complete lineage of the data – seeing where it originated, what transformations occurred, and which business rules were applied. The catalog visually maps data flow across the entire enterprise architecture, from source systems through ETL processes to consumption endpoints. This end-to-end visibility provides critical context for your analysis and builds confidence in your results.
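To make the idea of tracing lineage concrete, here is a minimal sketch of how upstream dependencies could be walked once lineage is stored as a graph. It is not how any particular catalog product implements lineage; the asset names and the `UPSTREAM` mapping are invented for illustration.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it is derived from.
# Every name below is invented for this example.
UPSTREAM = {
    "churn_dashboard": ["churn_support_mart"],
    "churn_support_mart": ["crm.customers", "support.tickets"],
    "crm.customers": [],
    "support.tickets": ["helpdesk_raw_export"],
    "helpdesk_raw_export": [],
}


def trace_upstream(asset, upstream=UPSTREAM):
    """Return every asset that `asset` depends on, nearest first (breadth-first)."""
    seen = []
    queue = deque(upstream.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited via another path
        seen.append(current)
        queue.extend(upstream.get(current, []))
    return seen


if __name__ == "__main__":
    for source in trace_upstream("churn_dashboard"):
        print(source)
```

A breadth-first walk lists the closest sources first, which tends to match how an analyst reads a lineage view: start with the table feeding the report, then work back toward the raw systems.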

Integrated Data Quality and Observability

Quality metrics are embedded directly in the catalog, showing real-time scores for completeness, accuracy, consistency, and timeliness. Automated monitoring continuously validates data against quality rules, with historical trends visible alongside each asset. When anomalies are detected, the system alerts data stewards, while the lineage view helps quickly identify root causes of issues before they impact downstream analyses.
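As a rough illustration of what a quality rule might look like under the hood, the sketch below scores completeness and checks timeliness for a small batch of records, then collects alerts when thresholds are missed. The thresholds, field names, and function names are assumptions made for this example, not part of any specific product.

```python
from datetime import datetime, timedelta


def completeness(rows, required_fields):
    """Fraction of required field values that are present (not None or empty string)."""
    total = len(rows) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for row in rows
        for name in required_fields
        if row.get(name) not in (None, "")
    )
    return filled / total


def is_timely(last_loaded, now, max_age=timedelta(hours=24)):
    """True if the asset was refreshed within `max_age` of `now`."""
    return now - last_loaded <= max_age


def check_asset(rows, required_fields, last_loaded, now, min_completeness=0.95):
    """Return (completeness score, list of alert messages) for one asset."""
    alerts = []
    score = completeness(rows, required_fields)
    if score < min_completeness:
        alerts.append(f"completeness {score:.3f} is below {min_completeness:.3f}")
    if not is_timely(last_loaded, now):
        alerts.append("asset has not been refreshed in the last 24 hours")
    return score, alerts


if __name__ == "__main__":
    # Illustrative support-ticket records; one resolution time is missing.
    tickets = [
        {"ticket_id": 1, "resolved_hours": 4.5},
        {"ticket_id": 2, "resolved_hours": None},
        {"ticket_id": 3, "resolved_hours": 12.0},
        {"ticket_id": 4, "resolved_hours": 2.0},
    ]
    now = datetime(2025, 1, 6, 9, 0)
    score, alerts = check_asset(
        tickets, ["ticket_id", "resolved_hours"], datetime(2025, 1, 4, 9, 0), now
    )
    print(score, alerts)
```

In practice the alerts would be routed to the data steward for the asset; here they are simply returned so the rule logic stays easy to read.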

Data Products and Marketplace

You discover through the catalog that the marketing team has already created a data product addressing this exact need. In the data marketplace, you find ready-to-use analytics assets combining customer churn and support metrics, complete with documentation and trusted business logic. Each product includes clear data contracts defining the responsibilities of providers and consumers, service level agreements, and quality guarantees. Instead of building from scratch, you simply access these pre-built data products, allowing you to deliver insights immediately rather than starting another redundant analysis project.

Regulatory Compliance and Governance by Design

Questions about data ownership, privacy, and compliance are answered immediately. The catalog automatically flags sensitive data elements, shows which regulations apply (GDPR, CCPA, HIPAA, etc.), and verifies your authorization to access specific fields. Governance is built into the discovery process itself – the system only surfaces data you’re permitted to use and provides clear guidance on appropriate usage, ensuring compliance by design rather than as an afterthought.
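One way to picture “only surfaces data you’re permitted to use” is a filter applied to search results before they reach the user. The sketch below assumes a simple model in which fields carry sensitivity tags and users hold entitlements; the tag names, entitlement names, and `REQUIRED_ENTITLEMENT` policy table are hypothetical, and real governance policies are considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogField:
    name: str
    tags: frozenset = frozenset()  # sensitivity tags, e.g. {"PII"}; illustrative only


# Hypothetical policy: the entitlement a user needs for each sensitivity tag.
REQUIRED_ENTITLEMENT = {"PII": "pii_reader", "PHI": "phi_reader"}


def visible_fields(fields, entitlements):
    """Return the fields whose sensitive tags are all covered by the user's entitlements."""
    granted = set(entitlements)
    result = []
    for f in fields:
        needed = {REQUIRED_ENTITLEMENT[t] for t in f.tags if t in REQUIRED_ENTITLEMENT}
        if needed <= granted:  # every required entitlement is held
            result.append(f)
    return result


if __name__ == "__main__":
    fields = [
        CatalogField("customer_id"),
        CatalogField("email", frozenset({"PII"})),
        CatalogField("diagnosis_code", frozenset({"PII", "PHI"})),
    ]
    for f in visible_fields(fields, {"pii_reader"}):
        print(f.name)
```

Filtering at discovery time, rather than rejecting a query later, is what lets an analyst see only the fields they can actually use.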

Augmented Data Stewardship

The catalog shows that the customer support director is the data owner for support metrics, that the data passed its most recent quality checks, and that usage of these specific customer fields is compliant with privacy regulations. Approval workflows, access requests, and policy management are integrated directly into the platform, streamlining governance processes while maintaining robust controls.

Discovery in Minutes, Not Days

That Monday morning request now begins with a quick search in your data catalog. The system shows you which tables hold the authoritative customer data and the support ticket resolution metrics, complete with detailed descriptions.

Tangible Benefits

The MIT Technology Review article highlights how modern approaches to data management have evolved to address exactly these challenges, enabling “faster data operations through both abstraction and automation.” With proper metadata management, organizations experience:

- Reduced time-to-insight: Analysts spend less time searching for data and more time extracting value from it

- Enhanced data governance: Clear ownership, lineage, and quality metrics build trust in data assets

- Automated data quality monitoring: The system continually observes and monitors data against defined quality rules, alerting teams when anomalies or degradation occur

- SLAs and expectations: Clear data contracts between producers and consumers establish shared expectations about the usage and reliability of data products

- Improved collaboration: Teams build on each other’s work rather than duplicating efforts

- Greater agility: The business can respond faster to changing conditions with reliable data access

From Rube Goldberg to Renaissance

The “Rube Goldberg Data Architecture” doesn’t have to be your reality. As data environments grow increasingly complex, data intelligence solutions like Actian become essential infrastructure for modern data teams.

By implementing a robust data catalog, organizations can transform the tangled web depicted in Schmarzo’s illustration into an orderly, efficient ecosystem where data stewards and consumers spend their time generating insights, not hunting for elusive datasets or questioning the reliability of their findings.

The competitive advantage for enterprises doesn’t just come from having data – it comes from knowing your data. A comprehensive data intelligence solution isn’t just an operational convenience; it’s the foundation for turning data chaos into clarity and converting information into impact.

This blog post was inspired by Bill Schmarzo’s “Rube Goldberg Data Architecture” diagram and insights from MIT Technology Review’s article “Evolution of Intelligent Data Pipelines.”