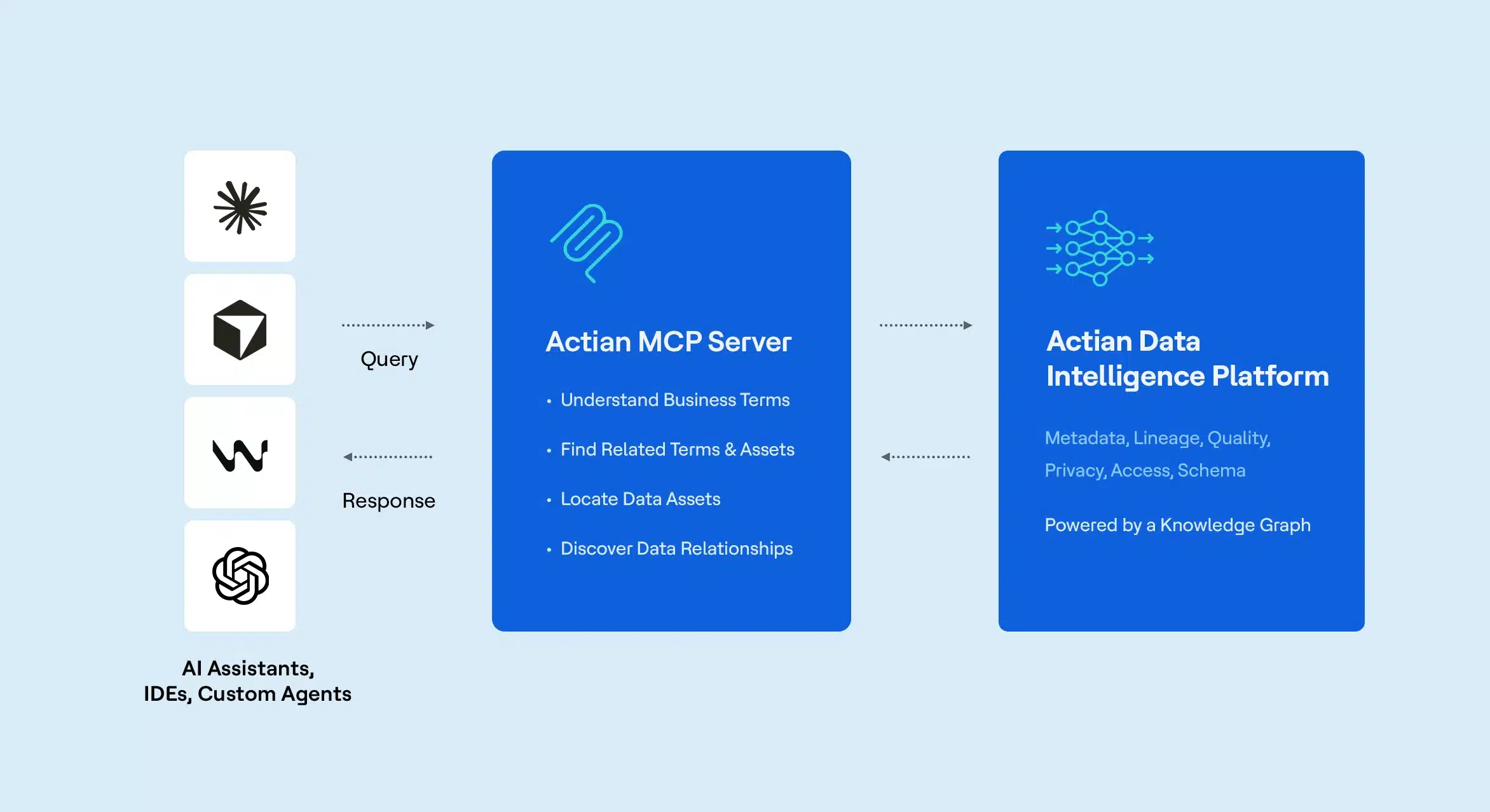

A data catalog is a centralized metadata system that helps organizations discover, understand, and govern their data. It provides automated discovery, lineage, classification, and policy enforcement so teams can find trusted data quickly and use it responsibly.

A modern data catalog combines automated discovery, centralized metadata, and policy enforcement to replace fragmented, risky data governance with proactive, trusted self-service access that improves compliance, quality, and decision-making.

Key Benefits of a Data Catalog

- Centralizes metadata from all sources.

- Improves data quality and trust.

- Automates classification and policy enforcement.

- Speeds analytics and self-service access.

- Strengthens compliance with GDPR, HIPAA, and industry regulations.

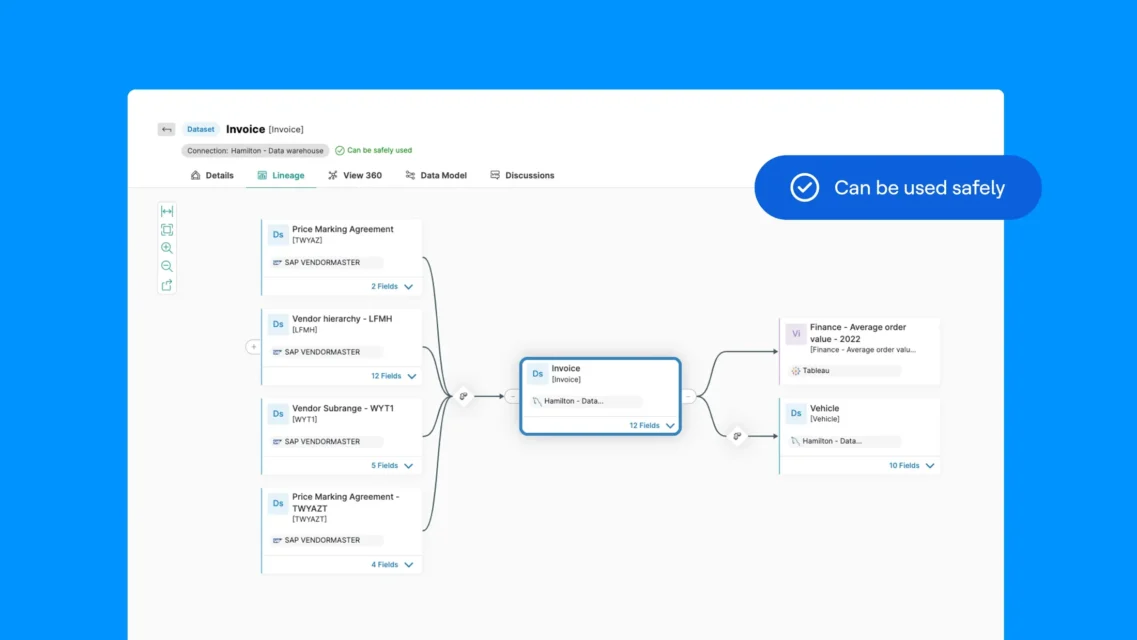

- Provides lineage to understand data origin and usage.

- Reduces operational risk and manual governance work.

Understand Key Data Governance Challenges

Data governance is the set of policies, roles, and processes that ensure data is available, usable, accurate, and secure across the enterprise. As organizations ingest more data from diverse sources, governance grows harder and more important.

Key challenges include fragmented metadata across disconnected systems, entrenched data silos, inconsistent business terminology, and manual compliance processes that are slow and error-prone. Poor visibility—teams unable to find datasets, assess quality, or trace lineage—drives unreliable analytics and bad decisions. Without clear ownership and stewardship, data quality decays and trust erodes.

The consequences go beyond inefficiency: regulatory fines, security incidents, and stalled analytics or AI initiatives. To scale trusted self-service and become data-driven, organizations need governance that is automated, centralized, and integrated into everyday workflows.

Automate Data Discovery and Metadata Collection

Automated discovery continuously scans databases, files, cloud storage, and applications to identify structured and unstructured data assets, replacing manual inventories and broadening coverage. Modern discovery tools detect source locations, schemas, relationships, and usage patterns, and can use machine learning to improve accuracy over time.

Automated metadata harvesting extracts schema details, data types, business purpose, sensitivity labels, lineage, and usage statistics—creating richer, more current metadata than manual efforts. These processes keep the catalog synchronized: when sources change or new assets appear, the catalog updates in near real time, preventing ungoverned data from spreading.
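As a minimal sketch of metadata harvesting, the snippet below scans a single SQLite database and collects table names, column names and types, and row counts. The `harvest_metadata` function and the `customers` table are hypothetical names chosen for illustration. A production catalog would connect to many source types and also capture lineage and usage statistics, which this sketch omits.

```python
import sqlite3


def harvest_metadata(conn: sqlite3.Connection) -> dict:
    """Collect table, column, and row-count metadata from a SQLite database."""
    catalog = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        columns = [
            {"name": row[1], "type": row[2], "nullable": not row[3]}
            for row in conn.execute(f'PRAGMA table_info("{table}")')
        ]
        row_count = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        catalog[table] = {"columns": columns, "row_count": row_count}
    return catalog


# Hypothetical source used only to exercise the sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL, signup_date TEXT)"
)
conn.execute(
    "INSERT INTO customers (email, signup_date) VALUES ('a@example.com', '2024-01-01')"
)
catalog = harvest_metadata(conn)
```

Running the same function on a schedule, and comparing its output with the previous run, is one simple way to keep a catalog in step with its sources.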

Automation can shorten onboarding for new sources from weeks or months to days or hours, so analytics projects start faster while governance policies and access controls apply from ingestion onward.

Build a Centralized, Comprehensive Data Catalog

A centralized data catalog indexes and organizes all enterprise data assets into a single searchable interface, breaking down silos and creating a single source of truth. This consolidation saves time, reduces duplicate work, and ensures governance policies are uniformly applied.

Centralization also enforces consistent business language: standardized definitions, classifications, and glossaries reduce ambiguity and align teams across departments. Treating datasets as products—with ownership, quality metrics, and usage guidelines—fosters collaboration between producers and consumers while preserving controls.

Modern catalogs store technical and business metadata, usage examples, and quality assessments so users understand what data means, how it’s produced, how reliable it is, and the appropriate use cases and limitations.
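To make the idea of a single searchable interface concrete, here is a small sketch of a catalog entry that holds both technical and business metadata, plus a keyword search over entries. The `CatalogEntry` fields, the `search` function, and the sample assets are assumptions for illustration, not the data model of any particular product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CatalogEntry:
    name: str                       # technical identifier, e.g. schema.table
    description: str                # business meaning of the asset
    owner: str                      # accountable steward or team
    tags: set = field(default_factory=set)
    quality_score: Optional[float] = None  # 0.0 to 1.0, if assessed


def search(entries, query):
    """Return entries matching the query, highest quality score first."""
    q = query.lower()
    hits = [
        e for e in entries
        if q in e.name.lower()
        or q in e.description.lower()
        or any(q in tag.lower() for tag in e.tags)
    ]
    # Entries without a quality assessment sort last.
    return sorted(hits, key=lambda e: e.quality_score or 0.0, reverse=True)


# Hypothetical assets used only to exercise the sketch.
entries = [
    CatalogEntry("crm.customers", "Customer master records", "ben", {"crm", "pii"}, 0.7),
    CatalogEntry("sales.orders", "Customer orders by day", "ana", {"sales"}, 0.9),
    CatalogEntry("ops.logs", "Application logs", "cy"),
]
results = search(entries, "customer")
```

Ranking by quality score is one possible design choice. It nudges users toward the assets that stewards have assessed as most reliable.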

Enforce Role-Based Access Controls and Security Policies

Role-based access control (RBAC) assigns permissions by role, ensuring only authorized users access sensitive data while enabling legitimate business use. In a catalog, RBAC maps job functions to specific viewing, editing, and usage rights so access is consistent and auditable.

Integrating RBAC with enterprise security policies centralizes enforcement and simplifies compliance audits. Automating access decisions based on predefined rules reduces the IT burden and removes ad-hoc permission practices that create gaps.

Advanced RBAC can be context-aware—adapting permissions by time, location, device, or purpose—balancing strict protection of sensitive information with operational flexibility for legitimate workflows.
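The following sketch shows one way a role-to-permission mapping and a context-aware check could fit together. The roles, the business-hours window, and the `on_corporate_network` flag are illustrative assumptions. Real deployments would load these rules from the organization's security policies.

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical mapping of job roles to catalog rights.
ROLE_PERMISSIONS = {
    "steward": {"view", "edit", "approve"},
    "analyst": {"view"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    action: str
    sensitivity: str            # "public" or "restricted"
    local_time: time
    on_corporate_network: bool


def is_allowed(request: AccessRequest) -> bool:
    """Check the role's permissions first, then the request context."""
    if request.action not in ROLE_PERMISSIONS.get(request.role, set()):
        return False
    if request.sensitivity == "restricted":
        # Assumed rule: restricted data only during business hours, on network.
        in_hours = time(8, 0) <= request.local_time <= time(18, 0)
        return in_hours and request.on_corporate_network
    return True


daytime_view = AccessRequest("analyst", "view", "restricted", time(10, 30), True)
late_view = AccessRequest("analyst", "view", "restricted", time(23, 0), True)
analyst_edit = AccessRequest("analyst", "edit", "public", time(10, 30), True)
```

Because every decision goes through one function, each outcome can be logged with the rule that produced it, which is what makes access auditable.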

Implement Automated Classification and Policy Enforcement

Automated classification applies rule-based algorithms and machine learning (ML) to label data by type, sensitivity, and regulatory requirements, enabling consistent handling across the data estate. This replaces error-prone manual tagging and helps ensure that sensitive records, such as personally identifiable information (PII), financial data, and intellectual property (IP), are reliably identified.
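A rule-based classifier is the simplest form of this idea. The sketch below labels a column when most of its sampled values match a known pattern. It uses regular expressions only, not ML, and the two patterns, the label names, and the 80 percent threshold are assumptions chosen for illustration.

```python
import re

# Deliberately simple patterns; real classifiers use broader rule sets.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(values, threshold=0.8):
    """Return the set of labels whose pattern matches enough sampled values."""
    non_empty = [v for v in values if v]
    if not non_empty:
        return set()
    labels = set()
    for label, pattern in PATTERNS.items():
        matches = sum(1 for v in non_empty if pattern.match(v))
        if matches / len(non_empty) >= threshold:
            labels.add(label)
    return labels


email_labels = classify_column(["a@example.com", "b@example.org", ""])
ssn_labels = classify_column(["123-45-6789", "987-65-4321"])
free_text_labels = classify_column(["hello", "world"])
```

The threshold tolerates a few malformed values in an otherwise sensitive column, at the cost of occasionally missing columns where sensitive values are rare.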

Policy enforcement uses those classifications to apply controls automatically—access restrictions, masking, retention rules, and monitoring—while continuously scanning for policy violations. The platform can flag unusual access, generate alerts, and trigger remediation workflows to reduce human error and enforcement lag.
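As an illustration of classification-driven enforcement, this sketch masks values in a row according to each column's sensitivity label, unless the user holds a clearance for that label. The label names, masking formats, and the `apply_policy` function are hypothetical. They stand in for whatever rules a governance team actually defines.

```python
def mask_email(value: str) -> str:
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain


def mask_ssn(value: str) -> str:
    """Show only the last four digits."""
    return "***-**-" + value[-4:]


# Hypothetical policy: which masking function applies to which label.
MASKING_RULES = {"email": mask_email, "us_ssn": mask_ssn}


def apply_policy(row: dict, column_labels: dict, user_clearances: set) -> dict:
    """Return a copy of the row with unauthorized sensitive values masked."""
    result = {}
    for column, value in row.items():
        label = column_labels.get(column)
        needs_mask = label in MASKING_RULES and label not in user_clearances
        if needs_mask and value is not None:
            result[column] = MASKING_RULES[label](value)
        else:
            result[column] = value
    return result


row = {"name": "Ada", "email": "ada@example.com", "ssn": "123-45-6789"}
labels = {"email": "email", "ssn": "us_ssn"}
masked_for_analyst = apply_policy(row, labels, user_clearances=set())
masked_for_support = apply_policy(row, labels, user_clearances={"email"})
```

Keeping the rules in a lookup table, separate from the enforcement function, means a policy change is a data change rather than a code change.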

Automated compliance reporting produces audit trails and reports (who accessed what, when, and under which controls) required for GDPR, HIPAA, and other regulations, reducing the effort and risk of manual reporting.

Maintain Audit Trails and Enable Proactive Compliance Monitoring

Audit trails record chronological actions on data assets—accesses, edits, metadata changes, and lineage updates—providing essential evidence for accountability, incident investigations, and regulatory audits. Logs capture direct and indirect usage (reports, analytics, pipelines) to support forensic analysis and risk assessment.
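A minimal audit trail can be sketched as an append-only list of immutable events that answers "who did what to which asset, when, and under which control." The `AuditEvent` fields and the `AuditLog` class below are assumptions for illustration. A real system would write to durable, tamper-resistant storage rather than memory.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    timestamp: str   # ISO 8601, UTC
    actor: str
    action: str      # e.g. "read", "edit_metadata"
    asset: str
    control: str     # policy or control in force at the time


class AuditLog:
    """In-memory, append-only log; events are never updated or deleted."""

    def __init__(self):
        self._events = []

    def record(self, actor, action, asset, control, now: Optional[datetime] = None):
        stamp = (now or datetime.now(timezone.utc)).isoformat()
        event = AuditEvent(stamp, actor, action, asset, control)
        self._events.append(event)
        return event

    def for_asset(self, asset):
        """Return events for one asset in the order they were recorded."""
        return [e for e in self._events if e.asset == asset]

    def to_json_lines(self):
        """Serialize the log, one JSON object per line, for export."""
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self._events)


log = AuditLog()
fixed = datetime(2024, 1, 1, tzinfo=timezone.utc)
log.record("ana", "read", "sales.orders", "masked:email", now=fixed)
log.record("ben", "edit_metadata", "crm.customers", "steward-approval", now=fixed)
```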

Proactive compliance monitoring continuously analyzes access patterns, policy adherence, and usage anomalies to detect issues before they escalate. When anomalies arise, the system can alert stakeholders, launch remediation workflows, or enforce automatic corrections depending on severity.
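One simple way to detect unusual access is to compare each user's latest activity with that user's own history. The sketch below flags a user when the latest daily access count sits more than a chosen number of standard deviations above the historical mean. The z-score approach, the threshold of 3.0, and the sample counts are assumptions. Production monitoring typically combines several signals.

```python
from statistics import mean, stdev


def find_anomalies(daily_counts: dict, threshold: float = 3.0) -> list:
    """Return users whose latest count is far above their own baseline."""
    flagged = []
    for user, counts in daily_counts.items():
        if len(counts) < 3:
            continue  # not enough history to estimate a baseline
        history, latest = counts[:-1], counts[-1]
        baseline, spread = mean(history), stdev(history)
        if spread == 0:
            continue  # flat history; a z-score is undefined, so skip
        if (latest - baseline) / spread > threshold:
            flagged.append(user)
    return flagged


# Hypothetical daily access counts; the last value is the most recent day.
access_counts = {
    "ana": [10, 12, 11, 9, 10, 95],
    "ben": [5, 6, 5, 7, 6, 6],
}
suspicious = find_anomalies(access_counts)
```

A flagged user is a prompt for review, not proof of misuse. The alerting and remediation steps described above would decide what happens next.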

Advanced monitoring can offer predictive insights from historical patterns, helping teams anticipate and prevent compliance risks rather than react to them after the fact.

Facilitate Collaboration With Template-Driven Documentation

Template-driven documentation standardizes how metadata, business context, steward assignments, and policies are collected and presented, reducing variability and manual effort. Drag-and-drop and guided forms let non-technical contributors add context, business rules, and usage guidance without specialized skills.

Platforms commonly provide modules tailored to roles: studio modules for stewards to manage workflows and policies, and explorer modules for business users to discover assets and contribute domain knowledge. Templates support asset registers, glossaries, stewardship assignments, policy declarations, and usage guidelines, all with approval workflows and version control to ensure accuracy.
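Behind a guided form, a template can be as simple as a list of required and optional fields that each submission is checked against before it enters an approval workflow. The glossary template and the `validate_entry` function below are hypothetical and only sketch that check.

```python
# Hypothetical template for a business glossary entry.
GLOSSARY_TEMPLATE = {
    "required": ["term", "definition", "steward"],
    "optional": ["synonyms", "related_assets"],
}


def validate_entry(entry: dict, template: dict) -> list:
    """Return a list of problems; an empty list means the entry is complete."""
    errors = []
    for field_name in template["required"]:
        value = entry.get(field_name)
        if value is None or not str(value).strip():
            errors.append(f"missing required field: {field_name}")
    allowed = set(template["required"]) | set(template["optional"])
    for field_name in entry:
        if field_name not in allowed:
            errors.append(f"unknown field: {field_name}")
    return errors


complete = {
    "term": "Active customer",
    "definition": "A customer with at least one order in the last 12 months.",
    "steward": "ana",
}
incomplete = {"term": "Churn", "definition": "  ", "notes": "draft"}
complete_errors = validate_entry(complete, GLOSSARY_TEMPLATE)
incomplete_errors = validate_entry(incomplete, GLOSSARY_TEMPLATE)
```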

This structured, collaborative approach distributes documentation work, maintains quality, and ensures published information is reviewed and governed.

Best Practices for Successful Data Catalog Implementation

Implementing a catalog successfully requires addressing both technology and people. Key practices include:

- Assign clear stewardship: designate owners and stewards for all major assets with defined responsibilities for documentation, quality, and access.

- Develop and maintain a standardized business glossary to align terminology and reduce misunderstandings across teams.

- Automate metadata synchronization so the catalog updates with CI/CD and data pipeline changes, keeping content current and trustworthy.

- Provide role-based training tailored to stewards, analysts, and business users with practical scenarios to drive adoption.

- Integrate the catalog into development and deployment workflows so governance is embedded, not an extra step.
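The metadata synchronization practice above can be sketched as a schema comparison that runs as a pipeline step. The function below compares the columns recorded in the catalog with those currently in the source and reports what was added, removed, or retyped. The `diff_schema` name and the column-to-type dictionaries are assumptions for illustration.

```python
def diff_schema(cataloged: dict, current: dict) -> dict:
    """Compare two {column: type} mappings and report the differences."""
    added = {c: t for c, t in current.items() if c not in cataloged}
    removed = {c: t for c, t in cataloged.items() if c not in current}
    changed = {
        c: (cataloged[c], current[c])
        for c in cataloged.keys() & current.keys()
        if cataloged[c] != current[c]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots: what the catalog holds vs. what the source has now.
cataloged = {"id": "INTEGER", "email": "TEXT", "age": "INTEGER"}
current = {"id": "INTEGER", "email": "VARCHAR(255)", "signup_date": "DATE"}
drift = diff_schema(cataloged, current)
```

A deployment pipeline could fail the build, or open a review task for the asset's steward, whenever the result is not empty.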

Organizations that apply these practices report better data visibility, faster time-to-insight, stronger auditability, and higher confidence in analytics outcomes.


FAQ

A data catalog is a centralized metadata system that helps teams discover, understand, and govern enterprise data. It works by automatically scanning data sources, harvesting metadata, classifying sensitive information, mapping lineage, and enforcing governance policies so users can quickly find trusted data for analytics and AI.

A data catalog provides the visibility and control that governance teams need. It centralizes metadata, standardizes definitions, enforces access policies, and automates compliance monitoring. This reduces risk, improves data quality, and ensures consistent governance across the entire data estate.

A data catalog eliminates fragmented metadata, data silos, inconsistent terminology, and manual compliance tasks. It helps teams find data faster, understand its quality and lineage, reduce duplicate work, and prevent unauthorized access. This leads to more reliable analytics and stronger regulatory compliance.

AI improves a data catalog by automating discovery, classification, and metadata enrichment. Machine learning identifies data patterns, detects anomalies, recommends related assets, flags quality issues, and predicts potential compliance risks. These capabilities help organizations scale governance with less manual work.

Enterprises should look for automated discovery, unified metadata management, lineage visualization, business glossaries, role-based access control, classification and masking, collaboration tools, API integration, and automated policy enforcement. These capabilities support consistent governance at scale.

Modern data catalogs connect to on-premises systems, cloud platforms, and SaaS applications. They provide a unified metadata layer across AWS, Azure, GCP, private clouds, and hybrid architectures so governance policies and access controls remain consistent everywhere.

Open-source catalogs offer flexibility for teams that want to customize their own tooling but may require more engineering resources. Enterprise data catalogs provide built-in automation, governance workflows, security controls, scalability, support, and integration with broader data platforms—making them better suited for regulated or large-scale environments.