Why Data Privacy is Essential for Successful Data Governance

Actian Corporation

April 8, 2022

Data Privacy is a priority for any organization that wants to get full value from its data. Regarded as the foundation of trust between a company and its customers, Data Privacy is a pillar of successful data governance. This article explains why.

Regardless of sector or company size, data now plays a key role in an organization's ability to adapt to its customers, its ecosystem, and even its competitors. The numbers speak for themselves: according to a study by Stock Apps, the global Big Data market was worth $215.7 billion in 2021 and was expected to grow 27% in 2022, to roughly $274 billion.

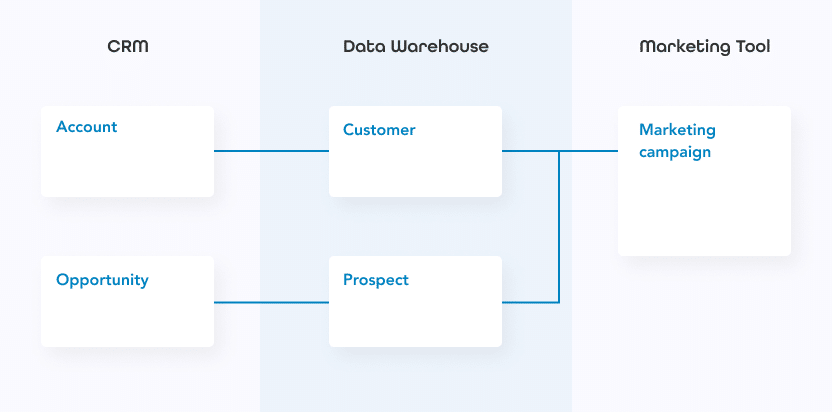

Companies are generating such large volumes of data that data governance has become a priority. A company's data is vital for identifying its target audiences, creating buyer personas, providing personalized responses to its customers, and optimizing the performance of its marketing campaigns. However, value creation is not the only concern. While data governance makes it possible to create value from enterprise data assets, it also ensures that data confidentiality, also known as Data Privacy, is properly managed.

Data Privacy vs. Data Security: Two Not-So-Different Notions

Data Privacy is one of the key aspects of Data Security. Although the two are distinct, they serve the same mission: building trust between a company and the customers who entrust it with their personal data.

On the one hand, Data Security is the set of measures implemented to protect data from internal or external threats, whether malicious or accidental (for example, strong authentication and information system security).

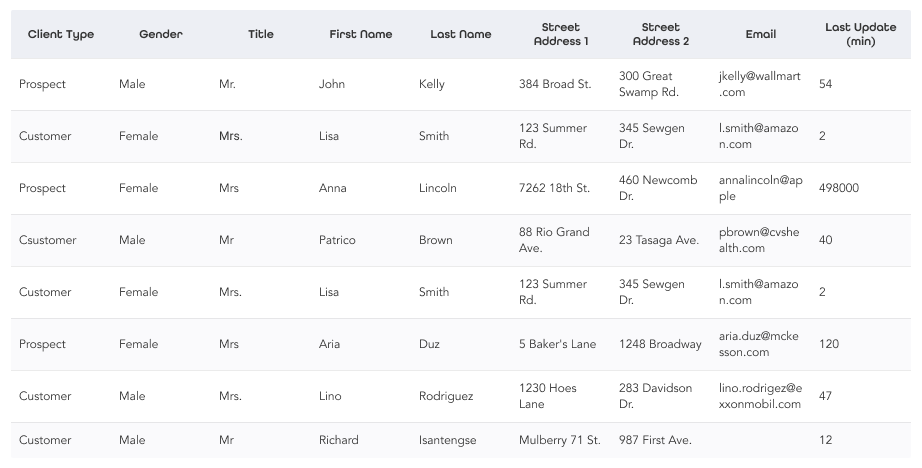

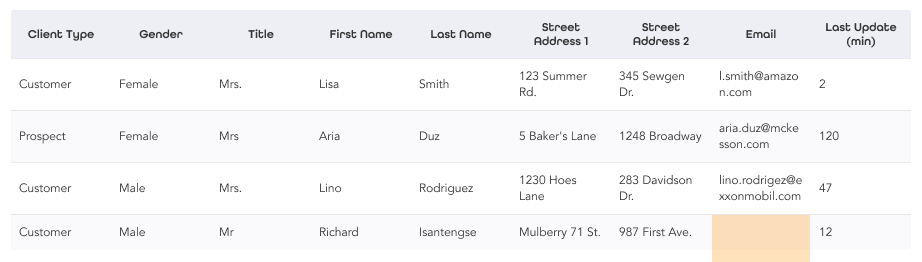

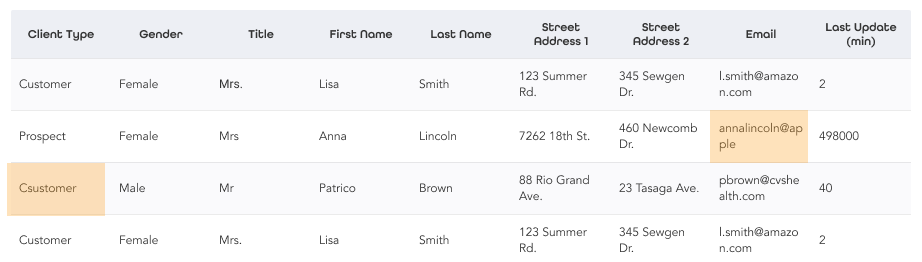

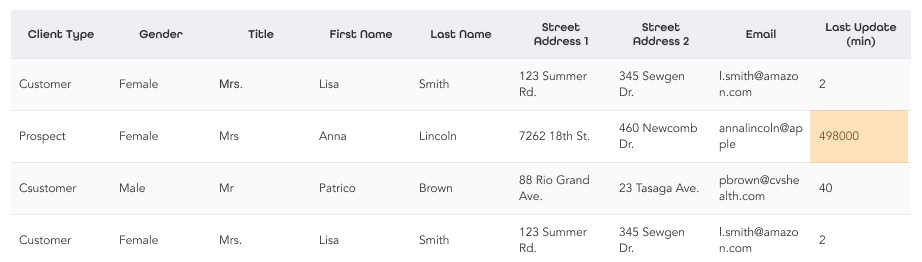

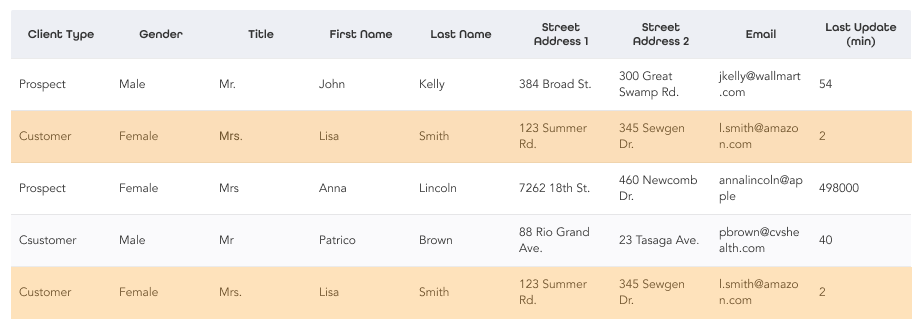

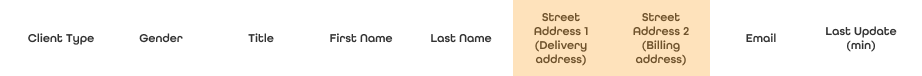

Data Privacy, on the other hand, is the discipline concerned with the handling of sensitive data: not only personal data (also called PII, for Personally Identifiable Information) but also other confidential data, such as certain financial data or intellectual property. Data Privacy is also framed by the General Data Protection Regulation (GDPR), which took effect in Europe in 2018 and has since helped companies redefine what responsible and efficient data governance looks like.

Data confidentiality has two main aspects. The first is controlling access to the data: who is allowed to access it, and under what conditions. The second is putting in place mechanisms that prevent unauthorized access to the data.
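To make these two aspects concrete, here is a minimal sketch, assuming a simple role- and purpose-based policy. The policy table captures *who* may access each class of data and *under what conditions*, while the `is_allowed` check is the enforcement mechanism that denies everything not explicitly permitted. The sensitivity labels, roles, purposes, and the `POLICY`, `Dataset`, `Request`, and `is_allowed` names are all hypothetical and for illustration only; this is not a description of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    sensitivity: str  # hypothetical labels: "public", "confidential", "pii"


@dataclass(frozen=True)
class Request:
    user_role: str
    purpose: str
    dataset: Dataset


# Hypothetical policy: for each sensitivity level, the roles allowed to read
# the data (who) and the purposes for which access is permitted (conditions).
# A purposes value of None means any purpose is acceptable.
POLICY = {
    "public": {"roles": {"analyst", "marketing", "support"}, "purposes": None},
    "confidential": {"roles": {"analyst", "finance"}, "purposes": {"reporting"}},
    "pii": {"roles": {"support"}, "purposes": {"customer_service"}},
}


def is_allowed(req: Request) -> bool:
    """Grant access only if both role and purpose satisfy the policy; deny by default."""
    rule = POLICY.get(req.dataset.sensitivity)
    if rule is None:
        return False  # unclassified data: deny rather than guess
    if req.user_role not in rule["roles"]:
        return False  # "who": this role is not authorized for this class of data
    purposes = rule["purposes"]
    # "under what conditions": the stated purpose must be one the policy allows
    return purposes is None or req.purpose in purposes


if __name__ == "__main__":
    customers = Dataset("customers", "pii")
    print(is_allowed(Request("support", "customer_service", customers)))  # True
    print(is_allowed(Request("marketing", "campaign", customers)))  # False
```

Denying by default means that data nobody has classified yet stays inaccessible until someone decides who should see it, which matches the idea of preventing unauthorized access rather than reacting to it.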

Why is Data Privacy so Important?

While data protection is essential to preserve this valuable asset and to enable rapid data recovery in the event of a technical problem or malicious attack, data privacy addresses another, equally important issue: customer trust.

Consumers are wary of how companies collect and use their personal information. In a world full of options, customers who lose trust in one company can easily buy elsewhere. To cultivate trust and loyalty, organizations must make data privacy a priority. Consumers are also increasingly aware of data privacy issues, and the GDPR has played a key role in raising this awareness: customers now pay close attention to how their personal data is collected and used.

Because digital services are constantly evolving, companies operate in a hyper-competitive environment where customers will not hesitate to switch to a competitor if a company has not done everything possible to protect the confidentiality of their data. This is the main reason Data Privacy is so crucial.

Why is Data Privacy a Pillar of Data Governance?

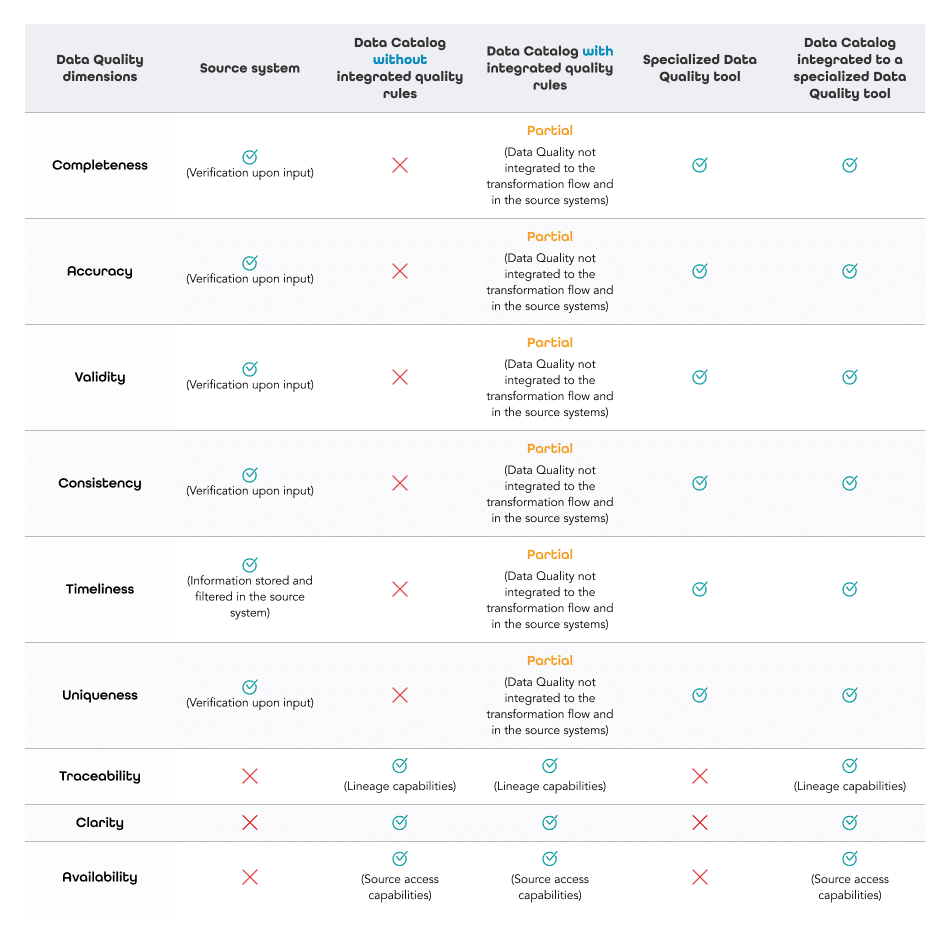

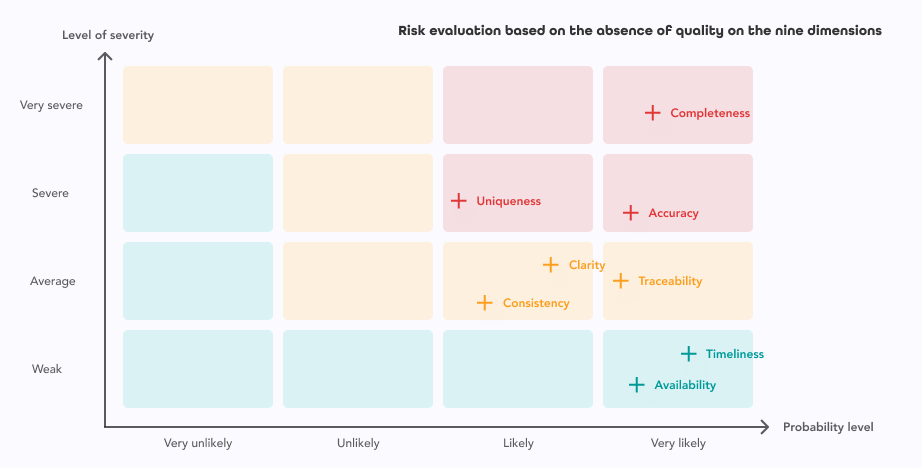

Data governance is about ensuring that data is of sufficient quality and that access to it is managed appropriately. The objective is to reduce the risk of data misuse, theft, or loss. As such, data privacy should be understood as one of the foundations of sound and effective data governance.

Even though data governance addresses data in a much broader way, it cannot succeed without a thorough understanding of the levers available to ensure data confidentiality.
